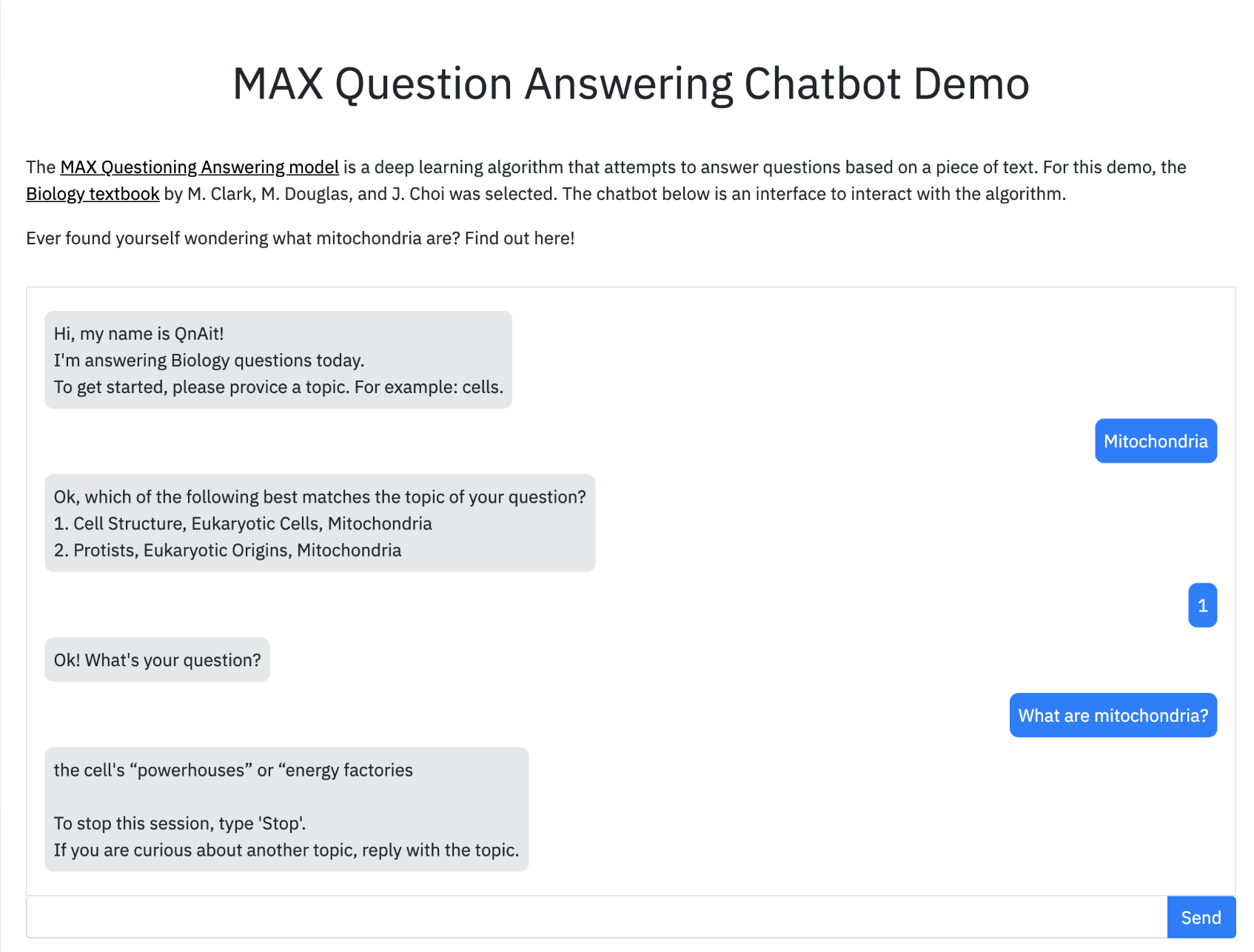

Ever found yourself wondering what mitochondria are? Perhaps you are curious about how neurons communicate with each other? A Google search can answer these questions, but what about a source that is just as digestible and more precise? This code pattern shows you how to build a chatbot that answers questions using the content of a college biology textbook. In this pattern, the textbook used is Biology 2e by Mary Ann Clark, Matthew Douglas, and Jung Choi.

The web app uses the Model Asset eXchange (MAX) Question Answering Model to answer questions typed in by the user. It provides a chat-like interface where users type questions, which are sent to a Flask Python server. The backend then sends the question and a related body of text from the textbook to a REST endpoint exposed by the MAX model. The model returns an answer to the question, which is displayed as a response from the chatbot. The model's REST endpoint is set up using the Docker image provided on MAX.

When the reader has completed this Code Pattern, they will understand how to:

- Build a Docker image of the Model Asset eXchange (MAX) Question Answering model

- Deploy a deep learning model with a REST endpoint

- Generate answers to questions using the MAX Model's REST API

- Run a web application that uses the model's REST API

- User interacts with Web UI and asks questions until the query is narrow enough to select text from the textbook.

- Web UI requests answers from Server and updates chat when data is returned.

- Server sends question and body of text to Model API and receives answer data.
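The last step of the flow above can be sketched in Python. This is a minimal illustration, not the web app's actual code: the helper name `build_payload` is hypothetical, and the request shape (`paragraphs`, `context`, `questions`) is taken from the curl example later in this document.

```python
import json


def build_payload(question, passage):
    """Wrap one question and one passage in the request shape used by model/predict.

    Hypothetical helper for illustration; the real server may build this differently.
    """
    return {"paragraphs": [{"context": passage, "questions": [question]}]}


payload = build_payload(
    "Where does John live?",
    "John lives in Brussels and works for the EU",
)

# JSON string that a server could send as the POST body to the Model API.
body = json.dumps(payload)
```

Each entry in `paragraphs` pairs one body of text with the questions asked about it, so a single request can carry several passages.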

- IBM Model Asset Exchange: A place for developers to find and use free and open source deep learning models.

- Docker: Docker is a tool designed to make it easier to create, deploy, and run applications by using containers.

- Python: Python is a programming language that lets you work more quickly and integrate your systems more effectively.

- JQuery: jQuery is a cross-platform JavaScript library designed to simplify the client-side scripting of HTML.

- Bootstrap 3: Bootstrap is a free and open-source front-end library for designing websites and web applications.

- Flask: A lightweight Python web application framework.

Ways you can run this code pattern:

You can deploy the model and web app on Kubernetes using the latest Docker images on Quay.

On your Kubernetes cluster, run the following commands:

kubectl apply -f https://raw.githubusercontent.com/IBM/MAX-Question-Answering/master/max-question-answering.yaml

kubectl apply -f https://raw.githubusercontent.com/IBM/MAX-Question-Answering-Web-App/master/max-question-answering-web-app.yaml

The web app will be available at port 8088 of your cluster.

The model will only be available internally, but can be accessed externally through the NodePort.

Start the Model API

Build the Web App

- Clone the repository

- Install dependencies

- Start the server

- Configure ports (Optional)

- Instructions for Docker (Optional)

To run the Docker image, which automatically starts the model serving API, run:

$ docker run -it -p 5000:5000 quay.io/codait/max-question-answering

This will pull a pre-built image from Quay (or use an existing image if already cached locally) and run it. If you'd rather build and run the model locally, or deploy on a Kubernetes cluster, you can follow the steps in the model README.

The API server automatically generates an interactive Swagger documentation page.

Go to http://localhost:5000 to load it. From there you can explore the API and also create test requests.

Use the model/predict endpoint to submit text data and get answers from the model.

The model samples folder contains samples you can use to test out the API, or you can use your own.
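The same endpoint can also be called from Python. The sketch below uses only the standard library and assumes the model is listening on `http://localhost:5000` as in the `docker run` command above. The function names `make_request` and `predict` and the 30-second timeout are illustrative choices, and the payload shape mirrors the command-line example in this section.

```python
import json
import urllib.request

# Assumes the port mapping from the docker run command above.
MODEL_URL = "http://localhost:5000/model/predict"


def make_request(payload, url=MODEL_URL):
    """Build (but do not send) a JSON POST request for the predict endpoint."""
    data = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=data,
        headers={"accept": "application/json", "Content-Type": "application/json"},
        method="POST",
    )


def predict(payload, url=MODEL_URL, timeout=30):
    """Send the request and return the decoded JSON response from the model."""
    with urllib.request.urlopen(make_request(payload, url), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


sample = {
    "paragraphs": [
        {
            "context": "John lives in Brussels and works for the EU",
            "questions": ["Where does John Live?", "What does John do?"],
        }
    ]
}

# Building the request needs no running model; sending it does.
request = make_request(sample)
```

With the model container running, `predict(sample)` would return the parsed JSON response. The structure of that response is not described here, so inspect it on the Swagger page before relying on specific fields.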

You can also test it on the command line, for example:

$ curl -X POST "http://localhost:5000/model/predict" -H "accept: application/json" -H "Content-Type: application/json" -d "{\"paragraphs\": [{ \"context\": \"John lives in Brussels and works for the EU\", \"questions\": [\"Where does John Live?\",\"What does John do?\",\"What is his name?\" ]},{ \"context\": \"Jane lives in Paris and works for the UN\", \"questions\": [\"Where does Jane Live?\",\"What does Jane do?\" ]}]}"

Clone the web app repository locally. In a terminal, run the following command:

$ git clone https://github.com/IBM/MAX-Question-Answering-Web-App.git

Change directory into the repository base folder:

$ cd MAX-Question-Answering-Web-App

Before running this web app you must install its dependencies:

$ pip install -r requirements.txt

You then start the web app by running:

$ python app.py

You can then access the web app at: http://localhost:8000

If you want to use a different port, or are running the model API at a different location, you can change both with command-line options:

$ python app.py --port=[new port] --model=[endpoint url including protocol and port]
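For readers curious how such options are typically handled, here is a minimal `argparse` sketch. It is not the actual contents of `app.py`: the `--port` and `--model` flags come from the command above, the default port of 8000 comes from the URL given earlier, and the default model URL of `http://localhost:5000` is an assumption.

```python
import argparse


def parse_args(argv=None):
    """Parse the two options shown above; defaults are assumptions, not app.py's."""
    parser = argparse.ArgumentParser(description="Illustrative web app options")
    parser.add_argument("--port", type=int, default=8000,
                        help="port the web app listens on")
    parser.add_argument("--model", default="http://localhost:5000",
                        help="model endpoint URL including protocol and port")
    return parser.parse_args(argv)


# Equivalent to: python app.py --port=8080 --model=http://localhost:5001
args = parse_args(["--port=8080", "--model=http://localhost:5001"])
```

Passing `argv=None` makes `argparse` read from `sys.argv`, which is what a script started from the terminal would use.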

To run the web app with Docker, the containers running the web server and the REST endpoint need to share the same network stack. This is done in the following steps:

Modify the command that runs the MAX Question Answering REST endpoint to map an additional port in the container to a port on the host machine. In the example below it is mapped to port 8000 on the host but other ports can also be used.

docker run -it -p 5000:5000 -p 8000:8000 --name max-question-answering quay.io/codait/max-question-answering

Build the web app image by running:

docker build -t max-question-answering-web-app .

Run the web app container using:

docker run --net='container:max-question-answering' -it max-question-answering-web-app
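Because both containers share one network stack, the web app should be able to reach the model on `localhost:5000`, and the web app itself is published through the extra `8000:8000` mapping on the model container. A quick way to confirm that a port is accepting connections is sketched below. The helper `port_is_open` is hypothetical and only checks TCP reachability, not that the service behind the port is healthy.

```python
import socket


def port_is_open(host, port, timeout=1.0):
    """Return True if a TCP connection to (host, port) succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

For example, once both containers are running, `port_is_open("localhost", 5000)` and `port_is_open("localhost", 8000)` run on the host would be expected to return `True`, assuming the port mappings shown above.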