We provide a PyTorch implementation of MONet.

This project is built on top of the CycleGAN/pix2pix code written by Jun-Yan Zhu and Taesung Park, and supported by Tongzhou Wang.

Note: The implementation is developed and tested on Python 3.7 and PyTorch 1.1.

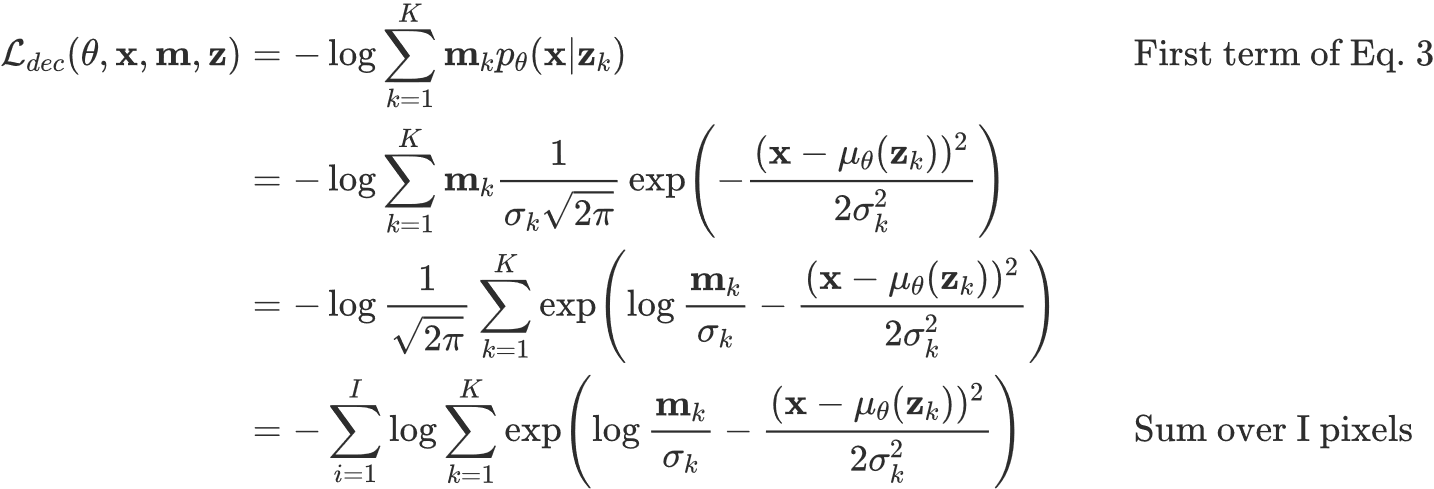

where I is the number of pixels in the image, and K is the number of mixture components. The inner term of the loss function is implemented using torch.logsumexp(). Each pixel of the decoder output is assumed to be i.i.d. Gaussian, where sigma (the "component scale") is a fixed scalar (see Section B.1 of the Supplementary Material).
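As a rough illustration of the description above, the following sketch computes a mixture negative log-likelihood with torch.logsumexp(). It is not the code from this repository: the function name `decoder_nll`, the tensor layouts, and the value `sigma=0.1` are assumptions chosen for the example, and each channel value is treated as an independent Gaussian observation.

```python
import math

import torch


def decoder_nll(x, x_mu, log_m, sigma=0.1):
    """Mixture NLL sketch (hypothetical helper, not the repository's code).

    x:     (B, C, H, W)     input image
    x_mu:  (B, K, C, H, W)  per-component decoder means
    log_m: (B, K, 1, H, W)  log attention masks (masks sum to 1 over K)
    sigma: fixed scalar component scale (0.1 is an assumed value)
    """
    x = x.unsqueeze(1)  # (B, 1, C, H, W), broadcasts against the K components
    # Log-density of an isotropic Gaussian with fixed scale sigma.
    log_gauss = (-0.5 * math.log(2 * math.pi * sigma ** 2)
                 - (x - x_mu) ** 2 / (2 * sigma ** 2))
    # Inner term: log sum_k m_k * N(x; mu_k, sigma^2), computed stably in log space.
    log_mix = torch.logsumexp(log_m + log_gauss, dim=1)  # (B, C, H, W)
    # Outer term: sum over pixel values, then average over the batch.
    return -log_mix.sum(dim=(1, 2, 3)).mean()


if __name__ == "__main__":
    B, K, C, H, W = 2, 5, 3, 8, 8
    x = torch.rand(B, C, H, W)
    x_mu = torch.rand(B, K, C, H, W)
    log_m = torch.log_softmax(torch.randn(B, K, 1, H, W), dim=1)
    print(decoder_nll(x, x_mu, log_m).item())
```

Working in log space avoids underflow when a mask value or a Gaussian density is very small, which is the usual reason to prefer logsumexp over summing probabilities directly.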

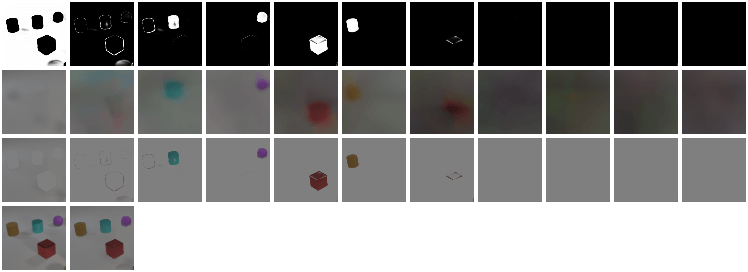

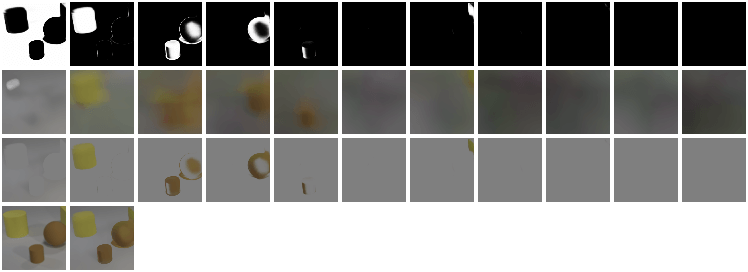

The first three rows correspond to the Attention network outputs (masks), raw Component VAE (CVAE) outputs, and the masked CVAE outputs, respectively. Each column corresponds to one of the K mixture components.

For the fourth row, the first image is the ground truth while the second one is the composite image created by the pixel-wise addition of the K component images (third row).
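The relationship between the rows can be sketched in a few lines. This is a minimal illustration, not the repository's code: the function name `compose` and the tensor layouts are assumptions.

```python
import torch


def compose(masks, components):
    """Build masked components and their composite (hypothetical helper).

    masks:      (B, K, 1, H, W)  attention masks, summing to 1 over K (first row)
    components: (B, K, C, H, W)  raw CVAE outputs (second row)
    """
    masked = masks * components    # masked CVAE outputs (third row)
    composite = masked.sum(dim=1)  # pixel-wise addition over the K components
    return masked, composite


if __name__ == "__main__":
    B, K, C, H, W = 1, 4, 3, 8, 8
    masks = torch.softmax(torch.randn(B, K, 1, H, W), dim=1)
    components = torch.rand(B, K, C, H, W)
    masked, composite = compose(masks, components)
    print(masked.shape, composite.shape)
```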

- Linux or macOS (not tested)

- Python 3.7

- CPU or NVIDIA GPU + CUDA 10 + CuDNN

- Clone this repo:

git clone https://github.com/baudm/MONet-pytorch.git

cd MONet-pytorch

- Install [PyTorch](http://pytorch.org) 1.1+ and other dependencies (e.g., torchvision, visdom and dominate).

- For pip users, please type the command

pip install -r requirements.txt

- For Conda users, we provide an installation script:

./scripts/conda_deps.sh

Alternatively, you can create a new Conda environment using

conda env create -f environment.yml

- For Docker users, we provide the pre-built Docker image and Dockerfile. Please refer to our Docker page.


- Download a MONet dataset (e.g. CLEVR):

wget -cN https://dl.fbaipublicfiles.com/clevr/CLEVR_v1.0.zip

- To view training results and loss plots, run

python -m visdom.server

and click the URL http://localhost:8097.

- Train a model:

python train.py --dataroot ./datasets/CLEVR_v1.0 --name clevr_monet --model monet

To see more intermediate results, check out ./checkpoints/clevr_monet/web/index.html.

To generate a montage of the model outputs like the ones shown above:

./scripts/test_monet.sh

./scripts/generate_monet_montage.sh

- Download pretrained weights for CLEVR 64x64:

./scripts/download_monet_model.sh clevr