PennBook is an implementation of the core functionality of facebook.com. It uses a Node.js server, React.js for the frontend, and Hadoop-ecosystem libraries such as Apache Spark, along with AWS Elastic MapReduce, for the Big Data functionality. Implementation details are below. The actual code is in a private repo per university policy. Feel free to reach out for more details.

- Divya Somayajula
- Olivia O'Dwyer
- Ishaan Rao

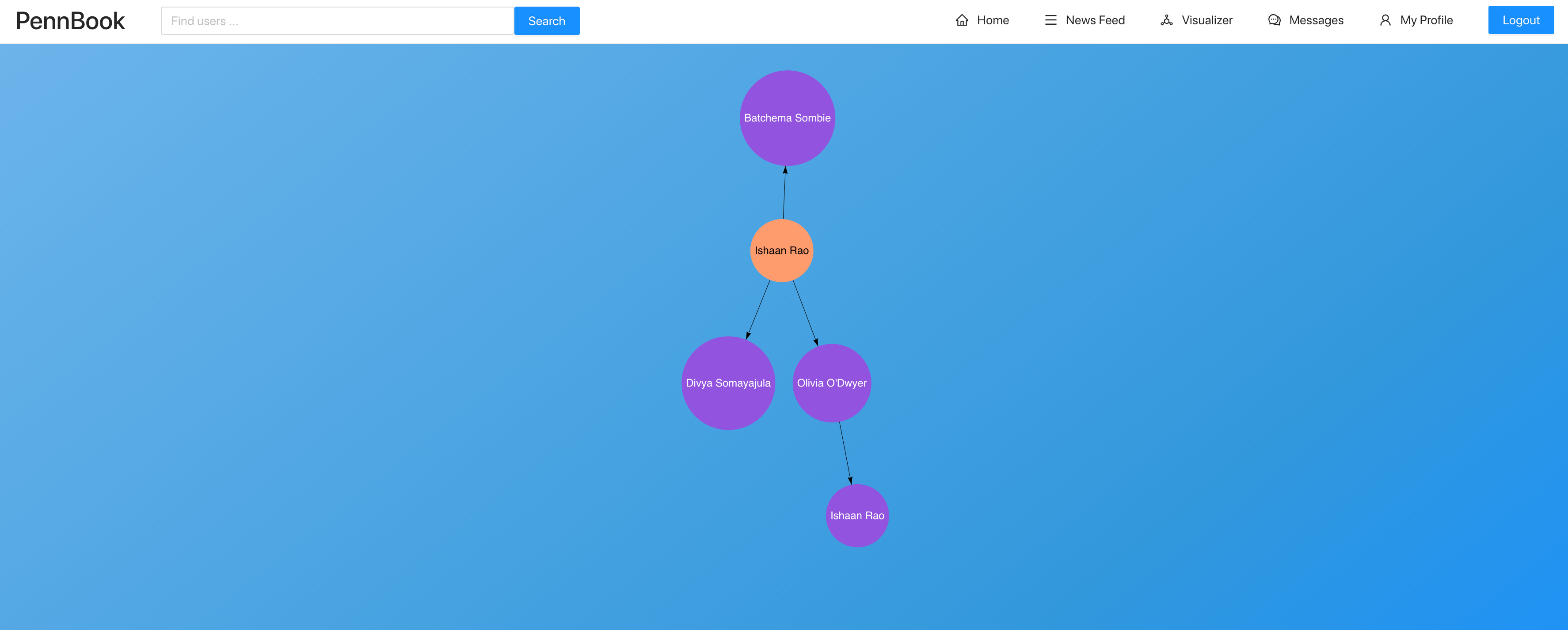

Friend Network Visualizer Page

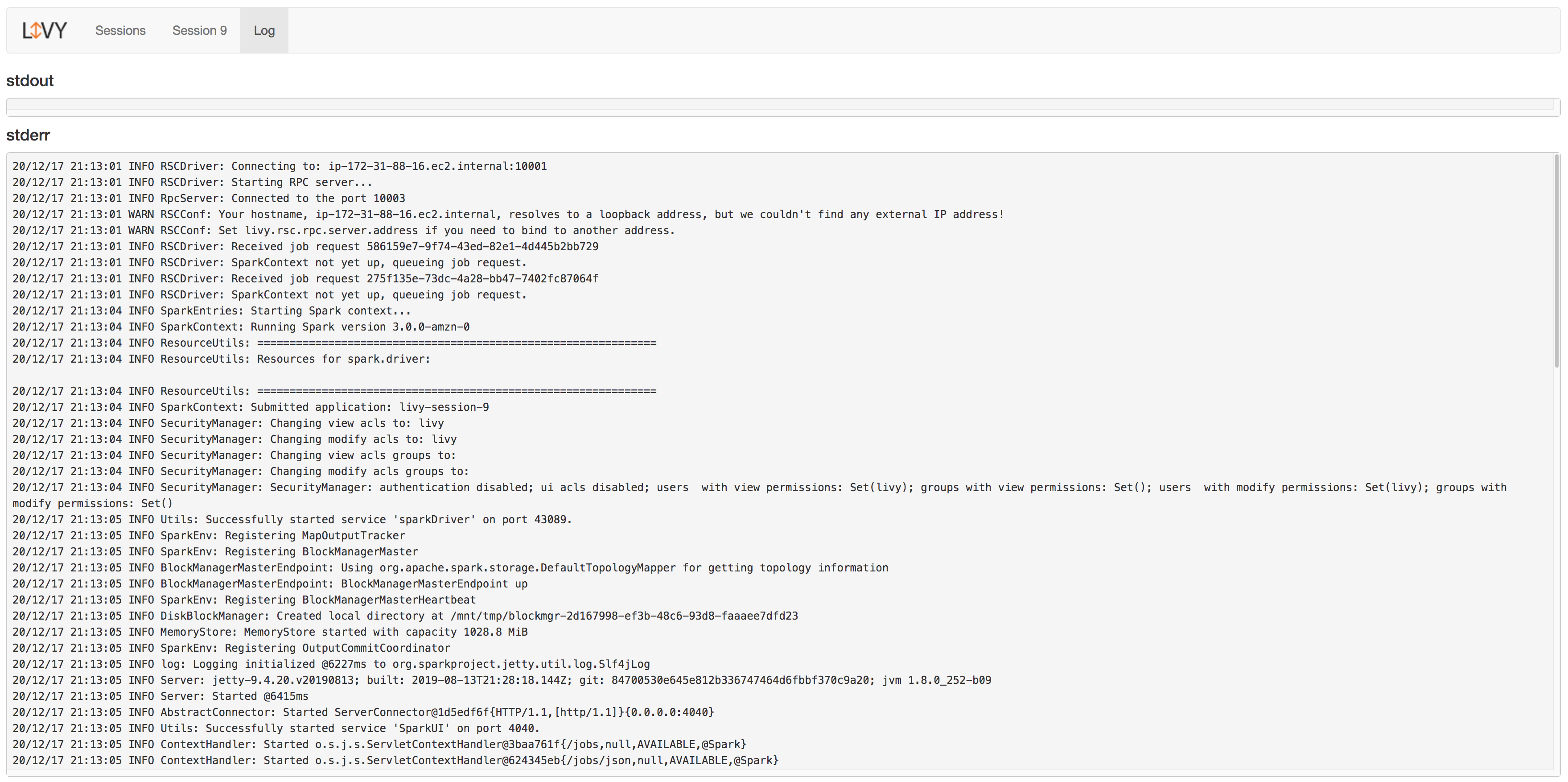

Spark Job Running on AWS EMR (Livy Interface)

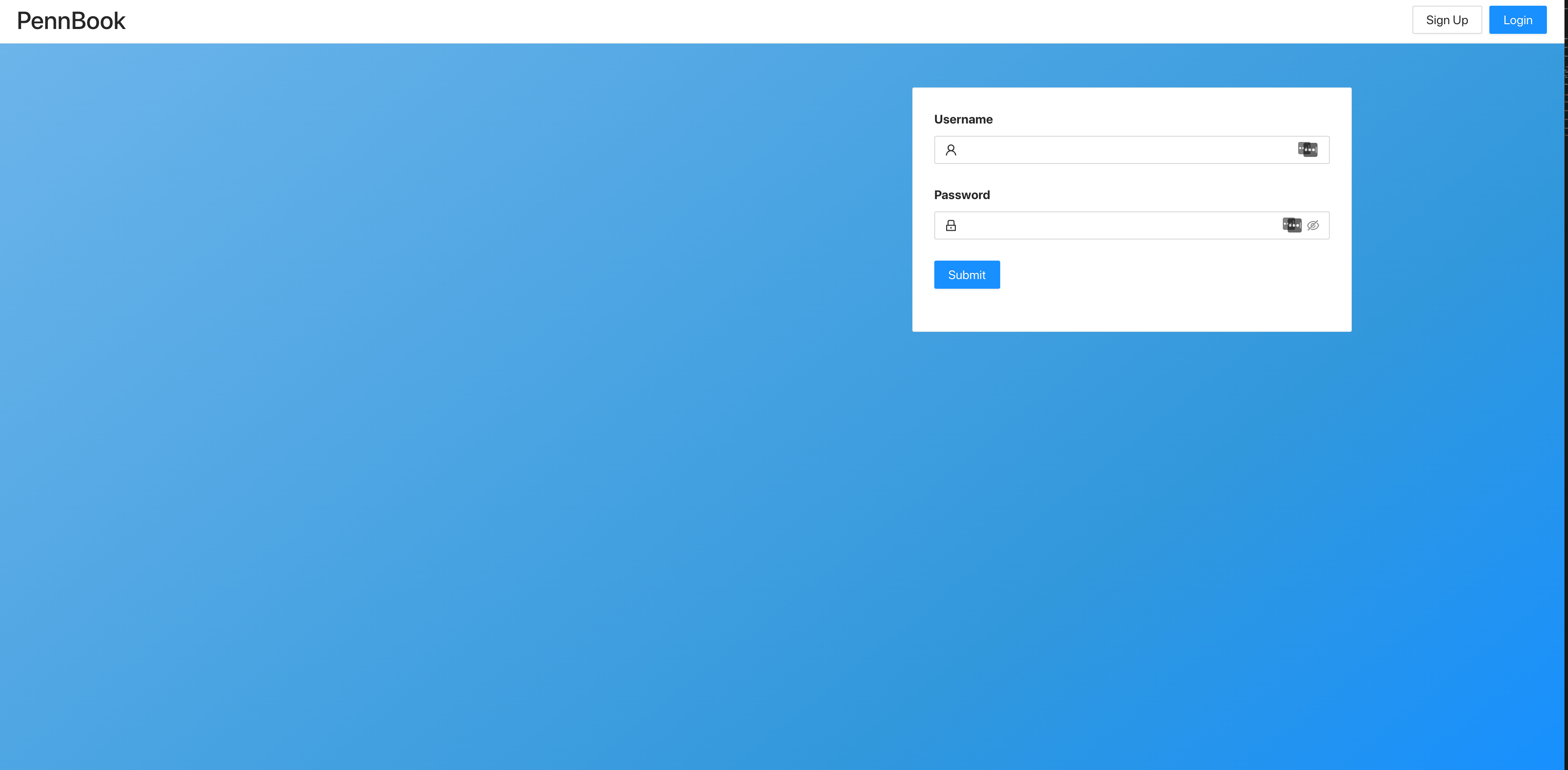

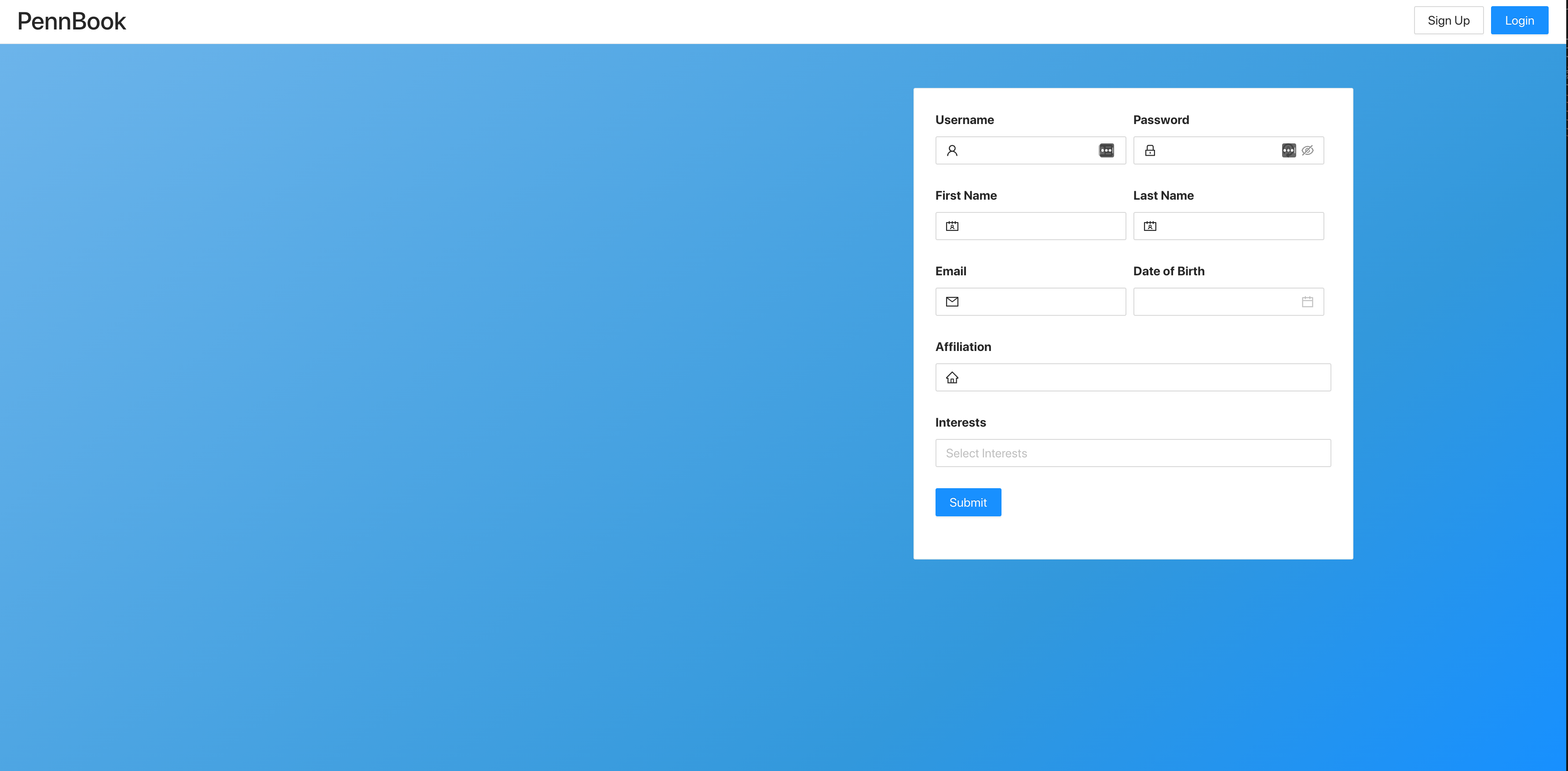

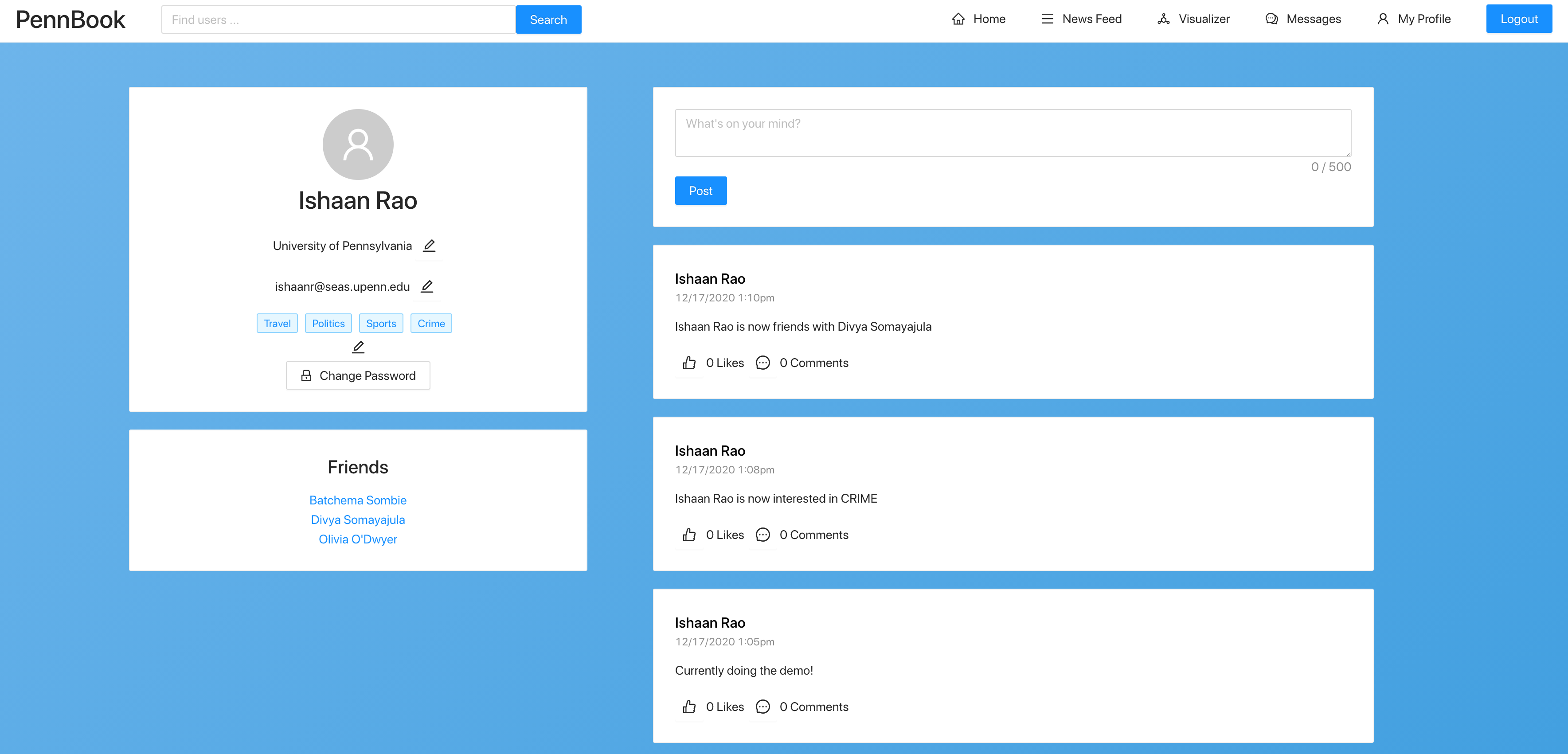

Accounts

Users can create accounts, sign in, and sign out. Passwords are stored hashed for security, and users can change their interests, affiliation, or password at any time. Users can also create posts, friend other users, comment on posts, and like posts, articles, and comments.

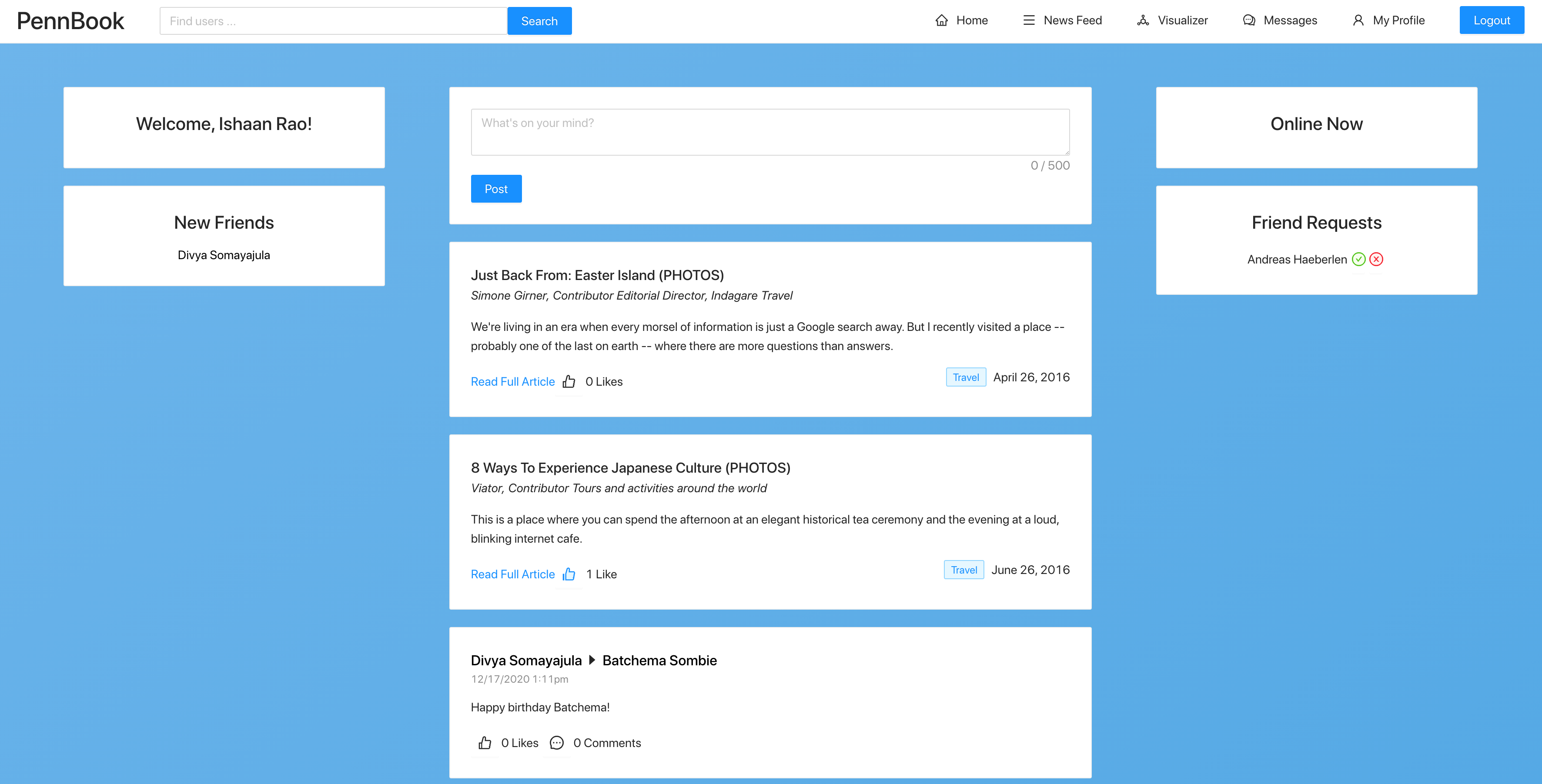

Walls and Home Pages

Each user has their own wall, which displays their status updates and which other people can post on. The wall also contains all basic information about the user and a list of their friends. Each user's home page shows a feed of their friends' posts and status updates as well as two recommended news articles. The home page also shows a list of new friendships, friends who are online, and any pending friend requests. Users can start a chat session with any online friend from the home page.

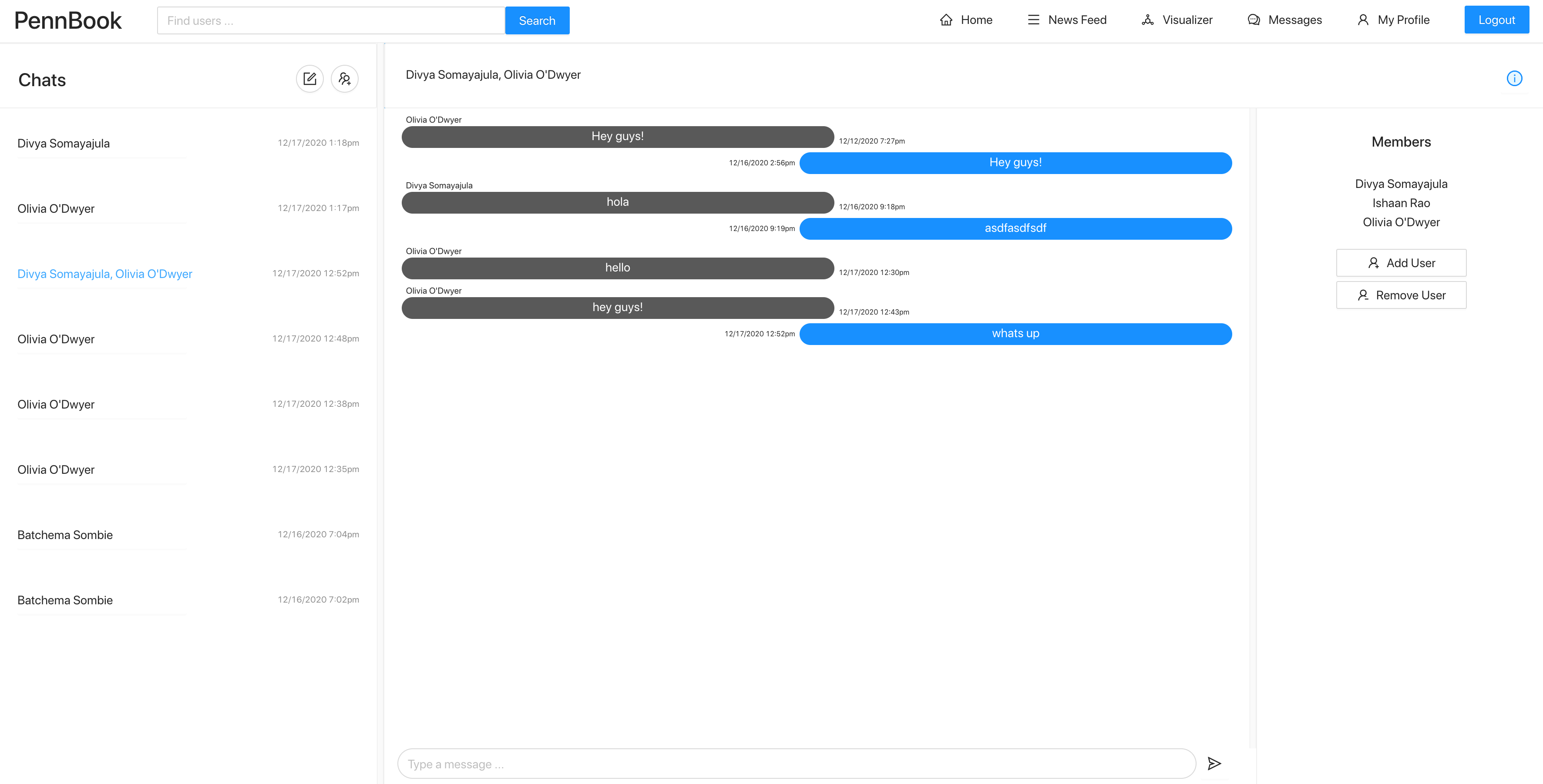

Chat

Each user can create chats with other users, including group chats. A notification is sent when a message is received, and unread chats are shown in bold. All chats are persistent, and they update in real time (using socket.io) when both users are online.

Search

Users can search for other users by name using the search bar, which queries a table that stores all possible prefixes for each user.
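As a rough illustration of the prefix-table idea, the sketch below generates one row per prefix of a user's full name and looks queries up by exact prefix match. The row fields follow the `user_search` table (prefix, username, fullname); the function names, the lowercase normalization, the in-memory `Map` standing in for the table, and the sample users are all assumptions made for this example, not the project's actual code.

```javascript
// Hypothetical helper: build one user_search row per prefix of the full name.
function buildPrefixRows(username, fullname) {
  const normalized = fullname.trim().toLowerCase();
  const rows = [];
  for (let end = 1; end <= normalized.length; end++) {
    const prefix = normalized.slice(0, end);
    // Skip prefixes that end in a space; they add nothing over the shorter prefix.
    if (prefix.endsWith(" ")) continue;
    rows.push({ prefix, username, fullname });
  }
  return rows;
}

// In-memory stand-in for the table: prefix -> list of rows with that prefix.
function indexRows(rows, index = new Map()) {
  for (const row of rows) {
    if (!index.has(row.prefix)) index.set(row.prefix, []);
    index.get(row.prefix).push(row);
  }
  return index;
}

// A search is a single exact-match lookup on the normalized query.
function searchUsers(index, query) {
  return index.get(query.trim().toLowerCase()) || [];
}

// Made-up sample users, for illustration only.
const index = indexRows([
  ...buildPrefixRows("alovelace", "Ada Lovelace"),
  ...buildPrefixRows("aturing", "Alan Turing"),
]);

console.log(searchUsers(index, "a").map((r) => r.username));
console.log(searchUsers(index, "Ada L").map((r) => r.username));
```

The trade-off is more rows written per user in exchange for a search that is one key lookup rather than a scan.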

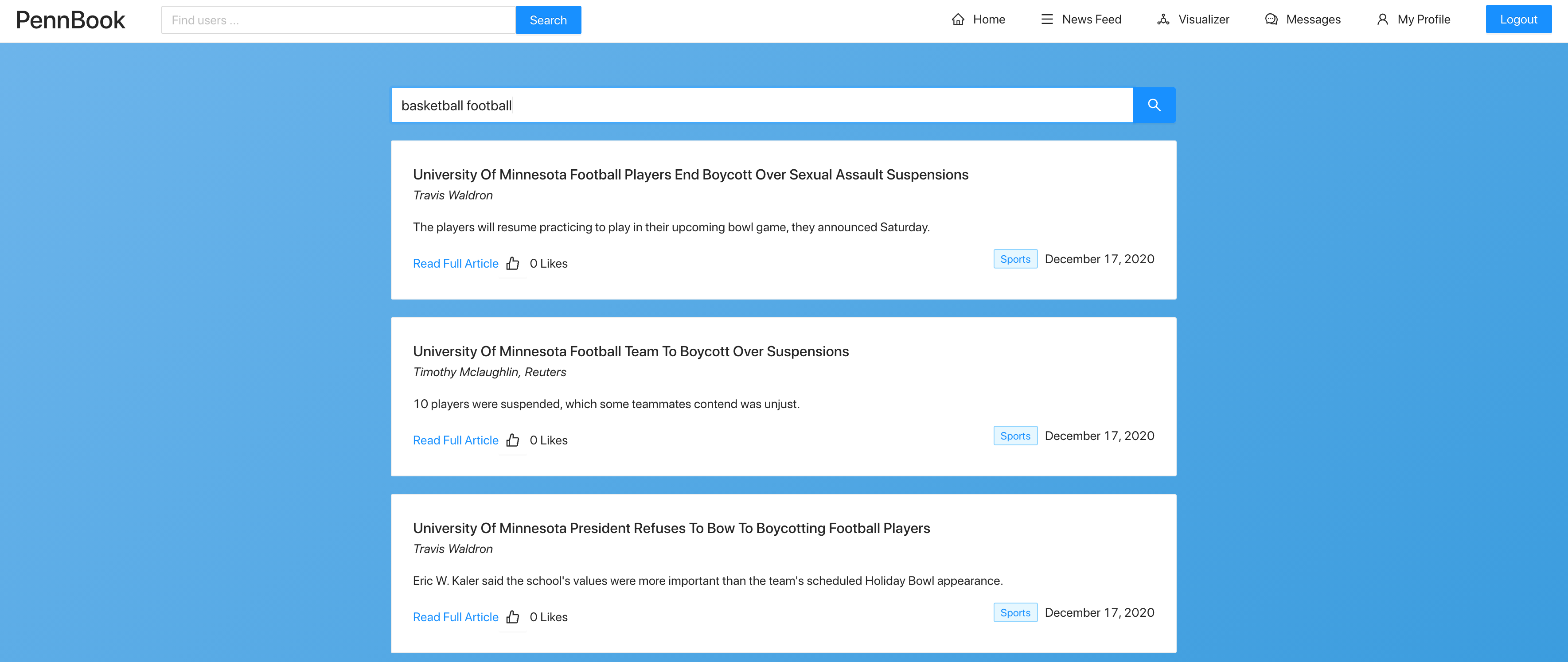

News Feeds

Each user has a news feed with relevant news articles. Articles are recommended to users based on their interests and which articles they have liked in the past, and all of these show up on the home page. In addition to these, users can search for news articles and will get relevant, sorted results.

The recommendation system runs as a Spark job, submitted through Livy, every hour and whenever a user changes their interests.
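One way to combine an hourly schedule with on-demand triggers is sketched below. It is an assumption-heavy illustration: `createRecommendationScheduler` and `submitJob` are hypothetical names, and the policy of queuing at most one rerun while a job is in flight is a design choice made for this example. The actual Livy submission is left as a placeholder callback.

```javascript
// Hypothetical scheduler: runs submitJob every intervalMs and on demand,
// but never starts a second run while one is still in flight.
function createRecommendationScheduler(submitJob, intervalMs = 60 * 60 * 1000) {
  let running = false;
  let rerunRequested = false;

  function run() {
    if (running) {
      rerunRequested = true; // remember the trigger and run once more afterwards
      return;
    }
    running = true;
    // The executor runs synchronously; a throw inside submitJob becomes a rejection.
    new Promise((resolve) => resolve(submitJob()))
      .catch((err) => console.error("recommendation job failed:", err))
      .finally(() => {
        running = false;
        if (rerunRequested) {
          rerunRequested = false;
          run();
        }
      });
  }

  const timer = setInterval(run, intervalMs);
  return { trigger: run, stop: () => clearInterval(timer) };
}

// Usage: the callback stands in for whatever submits the Spark job.
const scheduler = createRecommendationScheduler(async () => {
  console.log("submitting recommendation job (placeholder)");
});
scheduler.trigger(); // e.g. called after a user changes their interests
scheduler.stop();    // called here only so the example exits
```

Collapsing overlapping triggers into a single follow-up run keeps a burst of interest changes from queuing many identical jobs.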

Extra Credit

- Replicating Facebook style likes for posts

- Liking comments and articles

- Pending/Accepting friend requests

- Chat Notifications (per message)

client/

Comment.js, Home.js, HomeNavBar.js, Messages.js, NewsArticle.js, NewsFeed.js, Post.js, Profile.js, Signup.js, Visualizer.js, newsConstants.js, ports.js, auth.js, AuthRoute.js, Home.css, HomeNavBar.css, Login.css, MainNavBar.css, Messages.css, NewsFeed.css, Profile.css, Signup.css, utils.js, App.js, App.css

server/

accounts.js, articles_upload.js, articles-likes.js, articles.js, chats.js, comments-likes.js, friends.js, groups.js, posts_likes.js, posts.js, recommendations.js, account_routes.js, chat_routes.js, comments_likes_routes.js, friends_routes.js, login_routes.js, news_feed_routes.js, recommendations.js, search_routes.js, visualizer_routes.js, wall_home_routes.js, config.js, jwt.js, app.js

spark/

Config.java, AdsorptionJob.java, ComputeLivy.java, DataManager.java, DynamoConnector.java, S3Connector.java, SparkConnector.java, MyPair.java

Third Party Libraries

(For the Node server and React frontend. Check spark/pom.xml for the Java dependencies.)

- Bcrypt - used for secure password hashing

- React-graph-vis - used for visualizer

- Ant-design - used for frontend design

- UUIDv4 - used for generating UUIDs for chat ids and visualizer graphs

- Socket.io - used for realtime chat and notifications

System Requirements

- Node.js version 12.X

- Java JDK 15

- Maven 3.6.3

- Make sure that all system requirements are met. See the official installation instructions for Node, the Java JDK, and Maven. It is critical that the versions match, especially for the Java JDK and Apache Maven.
- In the `server/` folder, run `npm ci`
- In the `client/` folder, run `npm ci`
- In the `client/` folder, run `npm run build`
- In the `client/` folder, run `mv -f build ../server`
- In the spark folder, run `mvn install`
- Loading the Database:
  - Download the articles json file as `articles.json` and put the file in the `server` folder. Then run the shell command `npm run upload-articles` from the `server` folder.
  - Call the `/getloadallkeywords` route in order to populate the articles_keywords table
- App Configurations:
  - Create `server/.env` and look into `server/.env.dist` for the format of the `.env` file. For example, if running locally, set `SERVER_PORT=8000` and `SERVER_IP=localhost`
  - Navigate to `client/utils/ports.js` and set `API_ENDPOINT` to the correct host and port combination. For example, if running locally, set `var API_ENDPOINT = "localhost:8000"`.
  - Create the folder `.aws` and put the credentials in `.aws/credentials`. Then, in `ComputeLivy.java`, change the String `livyURI` to the corresponding cluster Livy URL. There is a TODO comment next to it. Finally, run `mvn install`, `mvn compile`, and then `mvn package` in the spark folder
- Running the App - there are two options for running the application:
  - Local: If running locally, open two terminal windows. In one of them navigate to `client/` and run `npm start`. In the other terminal, navigate to `server/` and run `node app.js`. You should see the application at `localhost:3000`.
  - Deployed on EC2: If running on EC2, navigate to `server/` and run `npm run start-prod`. You should see the application at `<PublicIP>:80`.
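The `SERVER_PORT` and `SERVER_IP` settings from the configuration step could be read on the server roughly as follows. This is a minimal sketch: `loadServerConfig` is a hypothetical name, and falling back to `localhost` and `8000` when a value is missing is an assumption based on the local example values above, not necessarily what the project's `config.js` does.

```javascript
// Hypothetical loader for the two settings named in the configuration step.
// Takes the environment as a parameter so it can be exercised without a real .env file.
function loadServerConfig(env = process.env) {
  const ip = env.SERVER_IP || "localhost";       // assumed default
  const port = Number(env.SERVER_PORT || "8000"); // assumed default
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error(`Invalid SERVER_PORT: ${env.SERVER_PORT}`);
  }
  // apiEndpoint mirrors the host:port string the client's API_ENDPOINT expects.
  return { ip, port, apiEndpoint: `${ip}:${port}` };
}

console.log(loadServerConfig({ SERVER_IP: "localhost", SERVER_PORT: "8000" }));
```

Validating the port at startup surfaces a malformed `.env` immediately instead of as a confusing connection error later.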

We used DynamoDB. Key fields are listed with their type, and partition and sort keys are marked in parentheses.

- accounts
  - username - String (partition)
  - firstname
  - lastname
  - password
  - affiliation
  - dob
  - interests
  - last_active
- article_likes_by_username
  - username - String (partition)
  - article_id - String (sort)
- article_likes_by_article_id
  - article_id - String (partition)
  - username - String (sort)
- articles_keyword
  - keyword - String (partition)
  - article_id - String (sort)
  - headline
  - url
  - date
  - authors
  - category
  - short_description
- chat
  - _id - String (primary)
  - timestamp - Number (sort)
  - content
  - sender
  - sender_full_name
- comment_likes
  - comment_id - String (partition)
  - username - String (sort)
- comments
  - post_id - String (partition)
  - timestamp - Number (sort)
  - content
  - commenter_username
  - number_of_likes
  - commenter_full_name
- friends
  - friendA - String (partition)
  - friendB - String (sort)
  - friendA_full_name
  - friendB_full_name
  - timestamp
  - sender
  - accepted
- news_articles
  - article_id - String (partition)
  - date
  - authors
  - category
  - short_description
  - headline
  - link
  - num_likes
- post_likes
  - post_id - String (partition)
  - username - String (sort)
- posts
  - post_id - String
  - timestamp - Number
  - content
  - poster
  - postee
  - num_likes
  - num_comments
  - poster_full_name
  - postee_full_name
- recommendations
  - username - String (partition)
  - article_ids
- search_cache
  - query - String (partition)
  - articles
- user_chat
  - username - String (partition)
  - _id - String (sort)
  - members
  - last_modified
  - is_group
  - last_read
- user_search
  - prefix - String (partition)
  - username - String (sort)
  - fullname
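The two article-like tables hold the same pairs keyed in opposite directions, so a like can be looked up either by user or by article. A sketch of how one like might be written to both tables is shown below. The table and attribute names come from the schema above; the function name is hypothetical, and writing both rows in a single transaction is an assumption of this example. The returned object is shaped like the input to the AWS SDK `DocumentClient.transactWrite` call, but no SDK call is made here.

```javascript
// Hypothetical helper: build the parameters for recording one article like
// in both lookup directions. Nothing is sent to DynamoDB here.
function buildArticleLikeWrite(username, articleId) {
  return {
    TransactItems: [
      {
        Put: {
          TableName: "article_likes_by_username",
          Item: { username, article_id: articleId },
        },
      },
      {
        Put: {
          TableName: "article_likes_by_article_id",
          Item: { article_id: articleId, username },
        },
      },
    ],
  };
}

// Made-up identifiers, for illustration only.
console.log(JSON.stringify(buildArticleLikeWrite("alovelace", "article-123"), null, 2));
```

Keeping the two writes together avoids a state where a like appears in a user's list but not in the article's.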