Full name: Basyir Bin Othman

Email address: basyir.othman@u.nus.edu

├── src

│ ├── main.py

│ ├── settings.py

│ ├── preprocessing.py

│ ├── feature_engineering.py

│ └── models.py

├── data

│ └── survive.db

├── README.md

├── eda.ipynb

├── requirements.txt

└── run.sh

- From the source folder, run `pip3 install -r requirements.txt`. This should install all necessary dependencies for the EDA and for the main program

- Run `./run.sh`. If there is an error, try running `chmod u+x run.sh` first

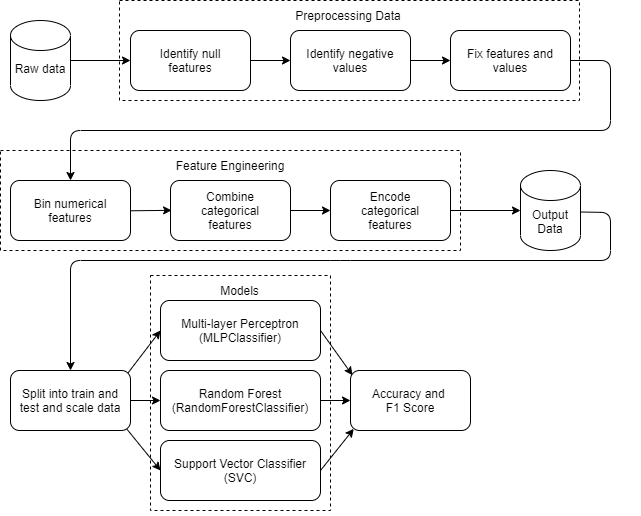

Within the pipeline, 3 models from scikit-learn are used: MLPClassifier, RandomForestClassifier and SVC (support vector classifier). If you wish to try other scikit-learn models (or other hyperparameters):

- Navigate to `src/models.py` and import the relevant model from `scikit-learn`

- Go to the function `define_models` and add your model
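As a rough illustration of the two steps above, `define_models` might look something like the sketch below. The actual signature and return type in `src/models.py` are not shown in this README, so the dictionary return value, the `random_state` parameter, the hyperparameters and the extra `GradientBoostingClassifier` are all assumptions made for the example.

```python
# Hypothetical sketch of src/models.py; the real define_models may differ.
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.svm import SVC


def define_models(random_state=42):
    """Return a mapping of model name -> unfitted scikit-learn estimator."""
    return {
        # Hyperparameters below are placeholders, not the project's settings.
        "MLPClassifier": MLPClassifier(
            hidden_layer_sizes=(64, 32), max_iter=500, random_state=random_state
        ),
        "RandomForestClassifier": RandomForestClassifier(
            n_estimators=200, random_state=random_state
        ),
        "SVC": SVC(kernel="rbf", C=1.0, random_state=random_state),
        # Newly added model: import it above, then register it here.
        "GradientBoostingClassifier": GradientBoostingClassifier(
            random_state=random_state
        ),
    }
```

Keeping the models in one mapping means the rest of the pipeline can loop over them without knowing which estimators were registered.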

- Null features and numerical features with negative values are fixed, as their values are incorrect

- Null features are replaced with the median value, while negative numerical features are replaced with their `abs()` value

- Data whose labels have the same meaning but are written inconsistently (e.g. 'Yes' and 'yes') is relabelled

- Some numerical features are binned to ease skewed data

- Some categorical features are combined to account for underrepresented groups

- Categorical features are one-hot encoded for ML purposes

- Output data is scaled using `StandardScaler()` and passed into 3 models (`MLPClassifier`, `RandomForestClassifier` and `SVC`)

- Metric scores like `accuracy` and `f1_score` are obtained
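The last two steps (scaling, then fitting and scoring a model) can be sketched as follows. This is a minimal example on synthetic data generated with `make_classification`, not the real `survive.db` data, and the `evaluate` helper is a hypothetical name rather than a function from this repository.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, f1_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the encoded feature matrix (roughly 32% positives).
X, y = make_classification(
    n_samples=500, n_features=10, weights=[0.68, 0.32], random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)


def evaluate(model, X_train, X_test, y_train, y_test):
    """Scale the features, fit the model and return (accuracy, f1)."""
    # The scaler is fitted on the training split only, then reused on the test split.
    pipeline = make_pipeline(StandardScaler(), model)
    pipeline.fit(X_train, y_train)
    predictions = pipeline.predict(X_test)
    return accuracy_score(y_test, predictions), f1_score(y_test, predictions)


accuracy, f1 = evaluate(
    RandomForestClassifier(random_state=0), X_train, X_test, y_train, y_test
)
print(f"accuracy={accuracy:.4f}, f1={f1:.2f}")
```

Wrapping the scaler and the model in one pipeline avoids leaking test-set statistics into the scaling step.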

- There were 1843 repeated IDs in ID. Upon further investigation, the values for some repeated IDs were impossible, so all repeated IDs were dropped.

- Survival rate for this dataset is skewed towards non-survivors (only 32.18% survived, i.e. had class 1)

- For numerical features:

  - Some ages in Age were negative, so the `abs()` value of each age was taken. The range of the `abs()` ages was consistent with that of the positive ages, so the age values themselves were likely not erroneous and only the sign was.

  - Creatinine had missing values. All missing values came from non-survivors, so the median value according to gender was used to fill in the missing values

  - Some features were skewed (Sodium, Creatinine) and others extremely skewed (Platelets, Creatinine phosphokinase).

  - Survival rate increased as Blood Pressure increased

  - Survival rate decreased as Hemoglobin increased

  - Survival rate increased as Weight increased

  - Age and Height showed no clear relationship to survival

- For categorical features:

  - Smoke and Ejection Fraction had labels with the same meaning but inconsistent spelling

  - Survival rates for groups within each feature remained almost consistent, except for Ejection Fraction

  - However, across the groups, clear relationships were seen for survival rates

  - Favorite Color seems to play no part in survival rate
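The cleaning decisions described above can be sketched in pandas as follows. The column names are taken from the feature names in this README, but the exact names in `survive.db`, the lowercase label normalisation, the `clean` helper and the toy rows are all assumptions made for illustration.

```python
import numpy as np
import pandas as pd


def clean(df: pd.DataFrame) -> pd.DataFrame:
    """Apply the cleaning steps from the EDA to a copy of the data."""
    # Drop every row whose ID appears more than once (not just the later copies).
    df = df[~df["ID"].duplicated(keep=False)].copy()
    # Negative ages are treated as sign errors.
    df["Age"] = df["Age"].abs()
    # Fill missing Creatinine with the median of the same gender.
    gender_median = df.groupby("Gender")["Creatinine"].transform("median")
    df["Creatinine"] = df["Creatinine"].fillna(gender_median)
    # Unify labels that differ only in case or surrounding whitespace.
    df["Smoke"] = df["Smoke"].str.strip().str.lower()
    return df


# Toy rows, not real data.
raw = pd.DataFrame(
    {
        "ID": ["a", "b", "b", "c", "d"],
        "Age": [-50, 61, 40, 72, 45],
        "Gender": ["Male", "Male", "Male", "Female", "Female"],
        "Creatinine": [1.0, 2.0, 3.0, np.nan, 1.4],
        "Smoke": ["Yes", "no", "No", "YES", "no"],
    }
)
cleaned = clean(raw)
print(cleaned)
```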

- Skewed numerical features from 1.3 were binned to obtain the following:

  - Survival decreased as Sodium increased

  - Survival increased as Creatinine increased

  - Platelets and Creatine phosphokinase showed no clear relationships to survival

- The high and normal groups of Ejection Fraction were merged, as high was very underrepresented

- The best dataset obtained had Favorite Color and Height dropped, a choice made after model evaluation
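The feature engineering steps above can be sketched as below. The README does not state how the bins were chosen, so quantile binning with `pd.qcut`, the bin labels, the `Low`/`Normal`/`High` label spelling and the toy values are all assumptions.

```python
import pandas as pd

# Toy values, not real data.
df = pd.DataFrame(
    {
        "Sodium": [120, 128, 133, 136, 138, 140, 142, 145],
        "Ejection Fraction": [
            "Low", "Normal", "High", "Low", "Normal", "Low", "Normal", "Low",
        ],
    }
)

# Bin a skewed numerical feature into four equally populated groups.
df["Sodium_bin"] = pd.qcut(df["Sodium"], q=4, labels=["q1", "q2", "q3", "q4"])

# Merge the underrepresented "High" group into "Normal".
df["Ejection Fraction"] = df["Ejection Fraction"].replace({"High": "Normal"})

# One-hot encode the binned and categorical features.
encoded = pd.get_dummies(df[["Sodium_bin", "Ejection Fraction"]])
print(encoded.columns.tolist())
```

Quantile bins keep group sizes balanced, which is one way to blunt a long tail; fixed-width or domain-driven cut points would be equally valid choices.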

3 models were used for evaluation: MLPClassifier (neural network), RandomForestClassifier and AdaBoostClassifier

- MLPClassifier:

  - Neural networks perform quite well on classification tasks with distinct features and are easily customizable: the number of hidden layers and hidden neurons can be varied to improve performance on the task

- RandomForestClassifier:

  - Decision trees are usually good at splitting the data according to how much each feature contributes to the output. By combining many decision trees (a random forest), we can aggregate their predictions into a good model output

- AdaBoostClassifier:

  - AdaBoost is good at combining weak learners into a single strong classifier by giving more weight to previously misclassified samples at each round. It can boost any base estimator that supports sample weights, so it is a natural model to try alongside the classifiers above.

Metrics at time of writing:

- MLPClassifier

  - Accuracy - 99.85%

  - F1 Score - 1.00

- RandomForestClassifier

  - Accuracy - 90.48%

  - F1 Score - 0.83

- AdaBoostClassifier

  - Accuracy - 99.14%

  - F1 Score - 0.99

MLPClassifier is the best of the three models, as it has both the highest accuracy and the highest F1 score. Although the dataset is skewed towards non-survivors, the high F1 score shows that there is little misclassification of the important output (class 1): many true positives and few false positives or false negatives.
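To make the link between F1 and misclassification concrete, the sketch below computes accuracy and F1 from confusion-matrix counts. The counts are hypothetical (a 1000-row test set with 320 survivors) and are not results from this project.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall and F1 for the positive class."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


# Hypothetical classifier: 300 survivors found, 20 missed, 10 false alarms.
tp, fp, fn, tn = 300, 10, 20, 670
accuracy = (tp + tn) / (tp + fp + fn + tn)
precision, recall, f1 = precision_recall_f1(tp, fp, fn)

# Baseline that always predicts "non-survivor" on the same test set.
baseline_accuracy = (0 + 680) / 1000
_, _, baseline_f1 = precision_recall_f1(tp=0, fp=0, fn=320)

print(f"model:    accuracy={accuracy:.2f}, f1={f1:.2f}")
print(f"baseline: accuracy={baseline_accuracy:.2f}, f1={baseline_f1:.2f}")
```

The baseline reaches 68% accuracy without finding a single survivor, while its F1 is 0, which is why F1 is reported alongside accuracy on a skewed dataset.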