An Apache Mesos framework for Riak TS and Riak KV, a distributed NoSQL key-value data store that offers high availability, fault tolerance, operational simplicity, and scalability.

Preview available at basho-labs.github.io/riak-mesos.

The Riak Mesos Framework supports the following environments:

- Riak KV v2.2.0 (See here for supported packages)

- Riak TS v1.5.1 (See here for supported packages)

- Mesos version:
  - v0.28
  - v1.0+

- OS:
  - Ubuntu 14.04
  - CentOS 7
  - Debian 8

To install the Riak Mesos Framework in your Mesos cluster, please refer to the documentation in riak-mesos-tools for information about installation from published packages and usage of the Riak Mesos Framework.

To install the Riak Mesos Framework in your DC/OS cluster, please see the Getting Started guide.

For build and testing information, visit docs/DEVELOPMENT.md.

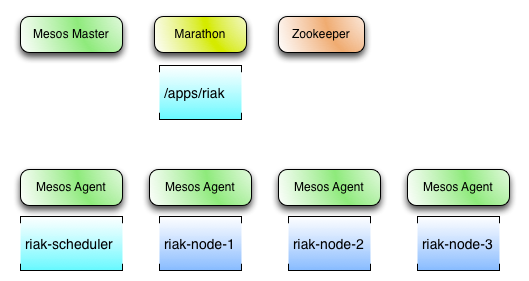

The Riak Mesos Framework is typically deployed as a Marathon app via a CLI tool such as riak-mesos or dcos riak. Once deployed, it can accept commands which result in the creation of Riak nodes as additional tasks on other Mesos agents.

The RMF Scheduler uses the Mesos Master HTTP API. It maintains the current cluster configuration in Zookeeper (providing resilience against Scheduler failure or restart), and ensures that the running cluster topology matches the configuration at all times. The Scheduler also provides an HTTP API to mutate the cluster configuration, and runs a copy of Riak Explorer to monitor the cluster.

The Scheduler supports several strategies for placing Riak nodes, all configured via constraints:

- Unique hostnames: each Riak node will only be allowed on a Mesos Agent with no existing Riak node

- Distribution: spread Riak nodes across separate agents where possible

- Host avoidance: avoid specific hosts or hostnames matching a pattern

- Many more!

There are 2 different sets of constraints to configure in your config.json:

1. `.riak.constraints`: the Marathon constraints for the Scheduler task. These are applied to the Scheduler task within Marathon, so you can, for example, make sure multiple framework instances run on separate agents.

2. `.riak.scheduler.constraints`: the internal constraints for the Riak nodes. These affect how the Scheduler places Riak nodes across your Mesos cluster.

Both follow the exact same format, with the exception that, due to how DC/OS validates configuration against a schema, `.riak.scheduler.constraints` must be a quoted string within DC/OS clusters, e.g.

    {"riak": {
        ...
        "scheduler": {
            ...
            "constraints": "[[ \"hostname\", \"UNIQUE\" ]]"
        }
    }}

This example is the most common usage for `.riak.scheduler.constraints`: it strictly ensures that all your Riak nodes are placed on unique Mesos agents. NB: if you try to start more Riak nodes than you have Mesos Agents, some Riak nodes will not start until resources are freed. Instead, one may use the "CLUSTER" constraint to distribute tasks fairly across Agents with a specific attribute:

    {"riak": {
        ...
        "scheduler": {
            ...
            "constraints": "[[ \"rack_id\", \"CLUSTER\", \"rack-1\" ]]"
        }
    }}

Or the GROUP_BY operator to distribute nodes across Agents by a specific attribute:

    {"riak": {
        ...
        "scheduler": {
            ...
            "constraints": "[[ \"rack_id\", \"GROUP_BY\" ]]"
        }
    }}

For full documentation of available constraint formats, and how to set attributes for your Agents, see the Marathon documentation.
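As an illustration of the quoted-string form, the following Python sketch builds the `.riak.scheduler.constraints` value with `json.dumps` and decodes it again. The helper names `scheduler_constraints` and `agent_allowed` are hypothetical, and `agent_allowed` is only a simplified model of the UNIQUE and CLUSTER operators, not the Scheduler's actual placement code. It also assumes that, outside DC/OS, the value is a plain list.

```python
import json


def scheduler_constraints(constraints, dcos=True):
    """Return a value for .riak.scheduler.constraints.

    On DC/OS the constraint list is serialized into a JSON string (the
    "quoted string" form); otherwise the plain list is returned.
    """
    return json.dumps(constraints) if dcos else constraints


def agent_allowed(constraint, agent, used_values):
    """Simplified model of one constraint check against one agent.

    `agent` maps field names (hostname or attribute) to values, and
    `used_values` holds values already taken by placed Riak nodes.
    Only UNIQUE and CLUSTER are modelled in this sketch.
    """
    field, operator = constraint[0], constraint[1]
    value = agent.get(field)
    if operator == "UNIQUE":
        return value not in used_values
    if operator == "CLUSTER":
        return value == constraint[2]
    raise ValueError(f"operator not modelled in this sketch: {operator}")


# Only the keys discussed above are shown; a real config.json has more.
config = {"riak": {"scheduler": {
    "constraints": scheduler_constraints([["hostname", "UNIQUE"]]),
}}}
print(json.dumps(config, indent=2))

# Decoding the quoted string recovers the original list of constraints.
decoded = json.loads(config["riak"]["scheduler"]["constraints"])
print(agent_allowed(decoded[0], {"hostname": "agent-1"}, {"agent-1"}))
```

Generating the string with `json.dumps` avoids escaping the inner quotes by hand, which is the easiest part of this format to get wrong.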

The RMF Executor takes care of configuring and running a Riak node and a complementary EPMD replacement.

Under normal operation, Riak nodes communicate with each other by first connecting on EPMD's default port, then exchanging the information the nodes need to connect to each other. In a Mesos environment, however, it is not possible to assume that a specific port is available for use. To enable communication between Riak nodes within Mesos, we use ErlPMD. ErlPMD listens on a port chosen by the Scheduler from those made available by Mesos, and coordinates the ports at which each node can be reached by storing them in Zookeeper.
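The coordination idea can be pictured as a shared mapping from node name to port. The sketch below is a minimal in-memory model, assuming a plain dictionary in place of Zookeeper; the `PortRegistry` class, the node names, and the port numbers are hypothetical and do not reflect ErlPMD's actual data layout.

```python
class PortRegistry:
    """In-memory stand-in for the node-name -> port mapping kept in Zookeeper."""

    def __init__(self):
        self._ports = {}

    def register(self, node_name, port):
        # A node announces the port it was given from the Mesos offer.
        self._ports[node_name] = port

    def unregister(self, node_name):
        # Removing an unknown node is a no-op.
        self._ports.pop(node_name, None)

    def lookup(self, node_name):
        # Returns None when the node has not registered (yet).
        return self._ports.get(node_name)


registry = PortRegistry()
registry.register("riak-default-1@agent-1", 31005)
registry.register("riak-default-2@agent-2", 31417)

# Another node resolves its peer's port before connecting to it.
print(registry.lookup("riak-default-2@agent-2"))
```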

Being a distributed application, the Riak Mesos Framework consists of multiple components, each of which may fail independently. The following is a description of how the RMF architecture mitigates the effects of such situations.

The Riak Mesos Scheduler does the following to deal with potential failures:

- Registers a failover timeout: Doing this allows the scheduler to reconnect to the Mesos master within a certain amount of time.

- Persists the Framework ID for failover: The scheduler persists the Framework ID to Zookeeper so that it can reregister itself with the Mesos Master, allowing access to tasks previously launched.

- Persists task IDs for failover: The scheduler stores metadata about each of the tasks (Riak nodes) that it launches including the task ID in Zookeeper.

- Reconciles tasks upon failover: In the event of a scheduler failover, the scheduler reconciles each of the task IDs which were previously persisted to Zookeeper so that the most up to date task status can be provided by Mesos.

With the above features implemented, the workflow for the scheduler startup process looks like this (with or without a failure):

- Check Zookeeper to see if a Framework ID has been persisted for this named instance of the Riak Mesos Scheduler.
  - If it has, attempt to reregister the same Framework ID with the Mesos master.
  - If it has not, perform a normal Framework registration, and then persist the assigned Framework ID in Zookeeper for future failover.

- Check Zookeeper to see if any nodes have already been launched for this instance of the framework.
  - If some tasks exist and had previously been launched, perform task reconciliation on each of the task IDs previously persisted to Zookeeper, and react to the status updates once the Mesos master sends them.
  - If there are no tasks from previous runs, reconciliation can be skipped.

- At this point, the current state of each running task should be known, and the scheduler can continue normal operation by responding to resource offers and API commands from users.
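The startup steps above can be sketched as follows. This is a minimal model, assuming a plain dictionary in place of Zookeeper and a `FakeMaster` class in place of the Mesos master; the key names `framework_id` and `task_ids` and all method names are hypothetical, not the Scheduler's real API.

```python
class FakeMaster:
    """Stand-in for the Mesos master, recording what the scheduler asks of it."""

    def __init__(self):
        self.registrations = 0
        self.reregistrations = 0

    def register(self):
        # A fresh registration is assigned a new Framework ID.
        self.registrations += 1
        return f"framework-{self.registrations}"

    def reregister(self, framework_id):
        self.reregistrations += 1
        return framework_id

    def reconcile(self, task_ids):
        # A real master answers asynchronously with status updates;
        # here every known task is simply reported as running.
        return {task_id: "TASK_RUNNING" for task_id in task_ids}


def start_scheduler(store, master):
    """Run the startup workflow; `store` is a dict standing in for Zookeeper."""
    framework_id = store.get("framework_id")
    if framework_id is not None:
        master.reregister(framework_id)
    else:
        framework_id = master.register()
        store["framework_id"] = framework_id  # persist for future failover

    task_ids = store.get("task_ids", [])
    # Reconciliation is skipped when no tasks were launched previously.
    statuses = master.reconcile(task_ids) if task_ids else {}
    return framework_id, statuses


store, master = {}, FakeMaster()
print(start_scheduler(store, master))   # first start: normal registration
store["task_ids"] = ["riak-default-1"]  # a node is launched later on
print(start_scheduler(store, master))   # failover: reregister and reconcile
```

The same function covers both a first start and a failover, which mirrors the point that the workflow is identical with or without a failure.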

What happens when a Riak Mesos Executor or Riak node fails?

The executor also needs to employ some features for fault tolerance, much like the scheduler. The Riak Mesos Executor does the following:

- Enables checkpointing: Checkpointing tells Mesos to persist status updates for tasks to disk, allowing those tasks to reconnect to the Mesos agent for certain failure modes.

- Uses resource reservations: Performing a `RESERVE` operation before launching tasks allows a task to be launched on the same Mesos agent again after a failure without the possibility of another framework taking those resources before a failover can take place.

- Uses persistent volumes: Performing a `CREATE` operation before launching tasks (and after a `RESERVE` operation) instructs Mesos agents to create a volume for stateful data (such as the Riak data directory) which exists outside of the task's container (which is normally deleted with garbage collection if a task fails).

Given those features, the following is what a node launch workflow looks like (from the point of view of the scheduler):

- Receive a `createNode` operation from the API (user initiated).

- Wait for resources from the Mesos master.

- Check offers to ensure that there is enough capacity on the Mesos agent for the Riak node.

- Perform a `RESERVE` operation to reserve the required resources on the Mesos agent.

- Perform a `CREATE` operation to create a persistent volume on the Mesos agent for the Riak data.

- Perform a `LAUNCH` operation to launch the Riak Mesos Executor / Riak node on the given Mesos agent.

- Wait for the executor to send a `TASK_RUNNING` update back to the scheduler through Mesos.
  - If the Riak node is already part of a cluster, the node is now successfully running.
  - If the Riak node is not part of a cluster, and there are other nodes in the named cluster, attempt to perform a cluster join from the new node to existing nodes.
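The launch steps above can be sketched as a small planning function. This is an illustrative model only: the `Offer` and `NodeSpec` types, their fields, and the returned tuples are hypothetical, and the case of a first node with no peers (which the list above does not cover) is treated here as simply running.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    agent_id: str
    cpus: float
    mem_mb: int
    disk_mb: int


@dataclass
class NodeSpec:
    name: str
    cpus: float
    mem_mb: int
    disk_mb: int


def fits(offer, spec):
    # The offer must cover every resource the Riak node needs.
    return (offer.cpus >= spec.cpus
            and offer.mem_mb >= spec.mem_mb
            and offer.disk_mb >= spec.disk_mb)


def plan_launch(spec, offers):
    """Return the ordered operations for the first offer with enough capacity."""
    for offer in offers:
        if fits(offer, spec):
            return [("RESERVE", offer.agent_id),
                    ("CREATE", offer.agent_id),
                    ("LAUNCH", offer.agent_id)]
    return []  # nothing fits yet: keep waiting for offers


def after_task_running(node_name, cluster_members):
    """Decide what to do once TASK_RUNNING arrives for a node."""
    if node_name in cluster_members:
        return "running"
    if cluster_members:
        return "join"
    return "running"  # assumption: a first node has nothing to join


spec = NodeSpec("riak-default-1", cpus=2.0, mem_mb=4096, disk_mb=20000)
offers = [Offer("agent-1", 1.0, 8192, 50000), Offer("agent-2", 4.0, 8192, 50000)]
print(plan_launch(spec, offers))
print(after_task_running("riak-default-2", {"riak-default-1"}))
```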

The node failure workflow looks like the following:

- Scheduler receives a failure status update such as a lost executor, or a task status update such as `TASK_ERROR`, `TASK_LOST`, `TASK_FAILED`, or `TASK_KILLED`.

- Determine whether or not the task failure was intentional (such as a node restart, or removal of the node).
  - If the failure was intentional, remove the node from the Riak cluster, and continue normal operation.
  - If the failure was unintentional, attempt to relaunch the task with a `LAUNCH` operation on the same Mesos agent and the same persistence id by looking up metadata for that Riak node previously stored in Zookeeper.

- If the executor is relaunched, the executor and Riak software will be redeployed to the same Mesos agent. The Riak software will get extracted (from a tarball) into the already existing persistent volume.

- If there is already Riak data in the persistent volume, the extraction will overwrite all of the old Riak software files while preserving the Riak data directory from the previous instance of Riak. This mechanism also allows us to upgrade Riak to the desired version.

- The executor will then ask the scheduler for the current `riak.conf` and `advanced.config` templates, which could have been updated via the scheduler's API.

- The executor can then attempt to start the Riak node, and send a `TASK_RUNNING` update to the scheduler through Mesos. The scheduler can then perform cluster logic operations as described above.
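The scheduler's side of the failure workflow above can be sketched as a single decision function. This is a minimal model under stated assumptions: the metadata keys (`name`, `agent_id`, `persistence_id`, `expected_exit`) and the returned tuples are hypothetical, standing in for whatever the scheduler actually stores in Zookeeper.

```python
FAILURE_STATES = {"TASK_ERROR", "TASK_LOST", "TASK_FAILED", "TASK_KILLED"}


def handle_status_update(status, node):
    """Decide the scheduler's next action for one task status update.

    `node` is the metadata stored for the Riak node when it was launched.
    """
    if status not in FAILURE_STATES:
        return ("none",)
    if node["expected_exit"]:
        # Intentional stop (restart or removal): drop it from the cluster.
        return ("remove_from_cluster", node["name"])
    # Unintentional failure: relaunch on the same agent with the same
    # persistence id so the existing persistent volume is reused.
    return ("LAUNCH", node["agent_id"], node["persistence_id"])


node = {
    "name": "riak-default-1",
    "agent_id": "agent-2",
    "persistence_id": "volume-abc",
    "expected_exit": False,
}
print(handle_status_update("TASK_LOST", node))
print(handle_status_update("TASK_KILLED", {**node, "expected_exit": True}))
```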

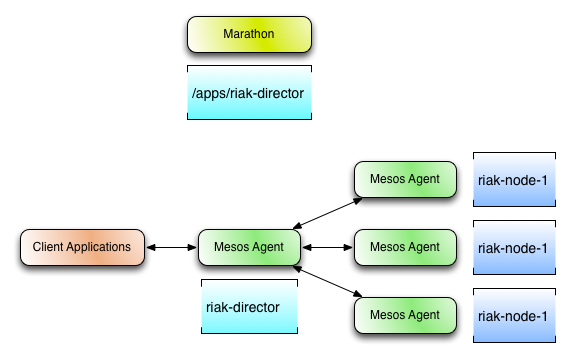

Due to the nature of Apache Mesos and the potential for Riak nodes to come and go on a regular basis, client applications using a Mesos-based cluster must be kept up to date on the cluster's current state. Instead of requiring this intelligence to be built into Riak client libraries, a smart proxy application named Director has been created, which can run alongside client applications.

For more information related to the Riak Mesos Director, please read docs/DIRECTOR.md.