Bahjat Kawar*¹, Jiaming Song*², Stefano Ermon³, Michael Elad¹

¹Technion, ²NVIDIA, ³Stanford University, *Equal contribution.

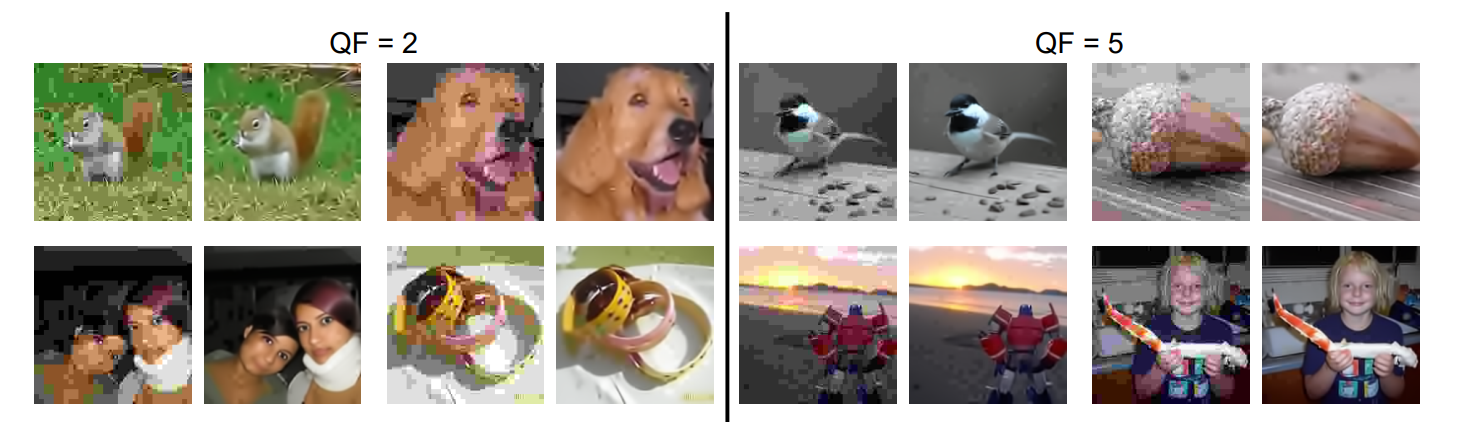

We extend DDRM (Denoising Diffusion Restoration Models) to the problems of JPEG artifact correction and image dequantization.

The code has been tested on PyTorch 1.8 and PyTorch 1.10. Please refer to environment.yml for a list of conda/mamba environments that can be used to run the code. The codebase is based heavily on the original DDRM codebase.

We use pretrained models from https://github.com/openai/guided-diffusion, https://github.com/pesser/pytorch_diffusion and https://github.com/ermongroup/SDEdit

We use 1,000 images from the ImageNet validation set for comparison with other methods. The list of images is taken from https://github.com/XingangPan/deep-generative-prior/

The models and datasets are placed in the exp/ folder as follows:

    <exp> # a folder named by the argument `--exp` given to main.py
    ├── datasets # all dataset files
    │   ├── celeba # all CelebA files
    │   ├── imagenet # all ImageNet files
    │   ├── ood # out of distribution ImageNet images
    │   ├── ood_bedroom # out of distribution bedroom images
    │   ├── ood_cat # out of distribution cat images
    │   └── ood_celeba # out of distribution CelebA images
    ├── logs # contains checkpoints and samples produced during training
    │   ├── celeba
    │   │   └── celeba_hq.ckpt # the checkpoint file for CelebA-HQ
    │   ├── diffusion_models_converted
    │   │   └── ema_diffusion_lsun_<category>_model
    │   │       └── model-x.ckpt # the checkpoint file saved at the x-th training iteration
    │   ├── imagenet # ImageNet checkpoint files
    │   │   ├── 256x256_classifier.pt
    │   │   ├── 256x256_diffusion.pt
    │   │   ├── 256x256_diffusion_uncond.pt
    │   │   ├── 512x512_classifier.pt
    │   │   └── 512x512_diffusion.pt
    ├── image_samples # contains generated samples
    └── imagenet_val_1k.txt # list of the 1k images used in ImageNet-1K.

The general command to sample from the model is as follows:

    python main.py --ni --config {CONFIG}.yml --doc {DATASET} -i {IMAGE_FOLDER} --timesteps {STEPS} --init_timestep {INIT_T} --eta {ETA} --etaB {ETA_B} --deg {DEGRADATION} --num_avg_samples {NUM_AVG}

where the options are:

- `ETA` is the eta hyperparameter in the paper. (default: `1`)
- `ETA_B` is the eta_b hyperparameter in the paper. (default: `0.4`)
- `STEPS` controls how many timesteps are used in the process. (default: `20`)
- `INIT_T` controls the timestep to start sampling from. (default: `300`)
- `NUM_AVG` is the number of samples per input to average for the final result. (default: `1`)
- `DEGRADATION` is the type of degradation used. (One of: `quant` for dequantization, or `jpegXX` for JPEG with quality factor `XX`, e.g. `jpeg80`)
- `CONFIG` is the name of the config file (see `configs/` for a list), including hyperparameters such as batch size and network architectures.
- `DATASET` is the name of the dataset used, to determine where the checkpoint file is found.
- `IMAGE_FOLDER` is the name of the folder the resulting images will be placed in. (default: `images`)
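The options above can also be assembled programmatically, which helps when scripting many runs. The following is a minimal sketch, not part of this codebase: `build_command` is a hypothetical helper, the flag names and defaults are copied from the command and option list above, and the 1 to 100 range check on the JPEG quality factor is an assumption.

```python
import re
import shlex

# Defaults as documented in the option list; keys match the CLI flag names.
DEFAULTS = {
    "timesteps": 20,
    "init_timestep": 300,
    "eta": 1.0,
    "etaB": 0.4,
    "num_avg_samples": 1,
}

# `quant` for dequantization, or `jpegXX` where XX is the quality factor.
_DEG_PATTERN = re.compile(r"quant|jpeg(\d{1,3})")


def build_command(config, doc, deg, image_folder="images", **overrides):
    """Return the argument list for one sampling run (hypothetical helper)."""
    match = _DEG_PATTERN.fullmatch(deg)
    if match is None:
        raise ValueError(f"deg must be 'quant' or 'jpegXX', got {deg!r}")
    quality = match.group(1)
    # Assumption: quality factors outside 1..100 are treated as invalid.
    if quality is not None and not 1 <= int(quality) <= 100:
        raise ValueError(f"JPEG quality factor out of range: {quality}")

    unknown = set(overrides) - set(DEFAULTS)
    if unknown:
        raise TypeError(f"unknown options: {sorted(unknown)}")

    options = {**DEFAULTS, **overrides}
    cmd = ["python", "main.py", "--ni",
           "--config", f"{config}.yml",
           "--doc", doc,
           "-i", image_folder]
    for name, value in options.items():
        cmd += [f"--{name}", str(value)]
    cmd += ["--deg", deg]
    return cmd


if __name__ == "__main__":
    cmd = build_command("imagenet_256", "imagenet", "jpeg80",
                        image_folder="imagenet", num_avg_samples=8)
    print(shlex.join(cmd))  # shell-quoted command string for inspection
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root. Rejecting malformed `--deg` values before launching avoids loading a checkpoint only to fail on argument parsing.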

For example, to use the default settings from the paper on the ImageNet 256x256 dataset, the problem of JPEG artifact correction for QF=80, and averaging 8 samples per input:

    python main.py --ni --config imagenet_256.yml --doc imagenet -i imagenet --deg jpeg80 --num_avg_samples 8
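The `--num_avg_samples 8` setting in the example averages several restorations of the same input. A minimal sketch of that idea follows, assuming the average is a plain pixel-wise mean (this README does not spell out the exact operation). Nested Python lists stand in for image tensors, and `average_samples` is a hypothetical helper, not a function from this codebase.

```python
from typing import List, Sequence

# Toy grayscale image: a list of rows, each a list of pixel intensities.
Image = List[List[float]]


def average_samples(samples: Sequence[Image]) -> Image:
    """Pixel-wise mean of several same-sized restorations (illustrative only)."""
    if not samples:
        raise ValueError("need at least one sample")
    height = len(samples[0])
    width = len(samples[0][0]) if height else 0
    for sample in samples:
        if len(sample) != height or any(len(row) != width for row in sample):
            raise ValueError("all samples must share the same shape")
    count = len(samples)
    return [
        [sum(sample[r][c] for sample in samples) / count for c in range(width)]
        for r in range(height)
    ]


if __name__ == "__main__":
    # Two 1x2 "restorations" of the same input.
    print(average_samples([[[0.0, 1.0]], [[1.0, 1.0]]]))
```

With a single sample the function returns that sample unchanged, which matches the documented default of `NUM_AVG` = 1.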

The generated images are placed in the <exp>/image_samples/{IMAGE_FOLDER} folder, where orig_{id}.png, y0_{id}.png, and {id}_-1.png refer to the original, degraded, and restored images, respectively.
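For evaluation it is often convenient to pair up the three files belonging to each image. The sketch below groups files by `{id}` using the naming scheme just described; `collect_triplets` is a hypothetical helper, and skipping ids with missing files is a design choice made here for illustration.

```python
import re
from pathlib import Path

# File-name patterns taken from the naming scheme described above.
_PATTERNS = {
    "original": re.compile(r"orig_(.+)\.png"),
    "degraded": re.compile(r"y0_(.+)\.png"),
    "restored": re.compile(r"(.+)_-1\.png"),
}


def collect_triplets(folder):
    """Map each image id to its original/degraded/restored paths.

    Ids that do not have all three files are omitted from the result.
    """
    groups = {}
    for path in sorted(Path(folder).glob("*.png")):
        for role, pattern in _PATTERNS.items():
            match = pattern.fullmatch(path.name)
            if match:
                groups.setdefault(match.group(1), {})[role] = path
                break  # each file plays exactly one role
    return {
        image_id: roles
        for image_id, roles in groups.items()
        if len(roles) == len(_PATTERNS)
    }
```

Calling `collect_triplets("exp/image_samples/imagenet")` (path shown for illustration, assuming `--exp exp` and `-i imagenet`) returns a dictionary such as `{"0": {"original": ..., "degraded": ..., "restored": ...}}`, ready to feed into a metric of your choice.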

Each config file contains a setting that controls whether to test on samples from the training dataset's distribution or on out-of-distribution images.

    @inproceedings{kawar2022jpeg,
        title={JPEG Artifact Correction using Denoising Diffusion Restoration Models},
        author={Bahjat Kawar and Jiaming Song and Stefano Ermon and Michael Elad},
        booktitle={Neural Information Processing Systems (NeurIPS) Workshop on Score-Based Methods},
        year={2022}
    }

    @inproceedings{kawar2022denoising,
        title={Denoising Diffusion Restoration Models},
        author={Bahjat Kawar and Michael Elad and Stefano Ermon and Jiaming Song},
        booktitle={Advances in Neural Information Processing Systems},
        year={2022}
    }

This implementation is based on / inspired by https://github.com/bahjat-kawar/ddrm

The code is released under the MIT License.