Instructor is a Python library that makes it a breeze to work with structured outputs from large language models (LLMs). Built on top of Pydantic, it provides a simple, transparent, and user-friendly API to manage validation, retries, and streaming responses. Get ready to supercharge your LLM workflows!

- Response Models: Specify Pydantic models to define the structure of your LLM outputs

- Retry Management: Easily configure the number of retry attempts for your requests

- Validation: Ensure LLM responses conform to your expectations with Pydantic validation

- Streaming Support: Work with Lists and Partial responses effortlessly

- Flexible Backends: Seamlessly integrate with various LLM providers beyond OpenAI
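To make the validation and retry bullets concrete, here is a minimal sketch of the validate-then-retry idea using Pydantic alone. It is not Instructor's implementation: `make_fake_llm` is a hypothetical stand-in for a model call, and `extract_with_retries` and its `max_retries` parameter are names invented for this illustration.

```python
from typing import Callable, Optional

from pydantic import BaseModel, ValidationError


class UserInfo(BaseModel):
    name: str
    age: int


def extract_with_retries(
    llm: Callable[[str], str], prompt: str, max_retries: int = 2
) -> UserInfo:
    """Validate the raw JSON from `llm`; on failure, re-ask with the error attached.

    Assumes max_retries >= 0, so the model is always called at least once.
    """
    last_error: Optional[ValidationError] = None
    for _ in range(max_retries + 1):
        raw = llm(prompt)
        try:
            return UserInfo.model_validate_json(raw)
        except ValidationError as exc:
            last_error = exc
            # Feed the validation error back so the next attempt can correct it.
            prompt = f"{prompt}\nPrevious reply failed validation:\n{exc}"
    assert last_error is not None
    raise last_error


def make_fake_llm() -> Callable[[str], str]:
    """Hypothetical model stub: the first reply is invalid, the second is valid."""
    replies = iter(
        [
            '{"name": "John Doe", "age": "thirty"}',
            '{"name": "John Doe", "age": 30}',
        ]
    )
    return lambda prompt: next(replies)


user = extract_with_retries(make_fake_llm(), "John Doe is 30 years old.")
print(user)
#> name='John Doe' age=30
```

The first reply fails because `"thirty"` cannot be parsed as an `int`. The second attempt passes validation, so the caller only ever sees a well-formed `UserInfo`.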

Install Instructor with a single command:

```bash
pip install -U instructor
```

Now, let's see Instructor in action with a simple example:

```python
import instructor
from pydantic import BaseModel
from openai import OpenAI


# Define your desired output structure
class UserInfo(BaseModel):
    name: str
    age: int


# Patch the OpenAI client
client = instructor.from_openai(OpenAI())

# Extract structured data from natural language
user_info = client.chat.completions.create(
    model="gpt-3.5-turbo",
    response_model=UserInfo,
    messages=[{"role": "user", "content": "John Doe is 30 years old."}],
)

print(user_info.name)
#> John Doe
print(user_info.age)
#> 30
```

The same pattern works with Anthropic models:

```python
import instructor
from anthropic import Anthropic
from pydantic import BaseModel


class User(BaseModel):
    name: str
    age: int


client = instructor.from_anthropic(Anthropic())

# note that client.chat.completions.create will also work
resp = client.messages.create(
    model="claude-3-opus-20240229",
    max_tokens=1024,
    messages=[
        {
            "role": "user",
            "content": "Extract Jason is 25 years old.",
        }
    ],
    response_model=User,
)

assert isinstance(resp, User)
assert resp.name == "Jason"
assert resp.age == 25
```

To use Cohere models, make sure to install `cohere` and set your system environment variable with `export CO_API_KEY=<YOUR_COHERE_API_KEY>`.

```bash
pip install cohere
```

```python
import instructor
import cohere
from pydantic import BaseModel


class User(BaseModel):
    name: str
    age: int


client = instructor.from_cohere(cohere.Client())

resp = client.chat.completions.create(
    model="command-r-plus",
    max_tokens=1024,
    messages=[
        {
            "role": "user",
            "content": "Extract Jason is 25 years old.",
        }
    ],
    response_model=User,
)

assert isinstance(resp, User)
assert resp.name == "Jason"
assert resp.age == 25
```

LiteLLM is supported as well:

```python
import instructor
from litellm import completion
from pydantic import BaseModel


class User(BaseModel):
    name: str
    age: int


client = instructor.from_litellm(completion)

resp = client.chat.completions.create(
    model="claude-3-opus-20240229",
    max_tokens=1024,
    messages=[
        {
            "role": "user",
            "content": "Extract Jason is 25 years old.",
        }
    ],
    response_model=User,
)

assert isinstance(resp, User)
assert resp.name == "Jason"
assert resp.age == 25
```

Correct type inference was the dream of Instructor, but because earlier versions patched the OpenAI client, it wasn't possible for me to get typing to work well. Now, with the new client, we can get typing to work well! We've also added a few `create_*` methods to make it easier to create iterables and partials, and to access the original completion.

import openai

import instructor

from pydantic import BaseModel

class User(BaseModel):

name: str

age: int

client = instructor.from_openai(openai.OpenAI())

user = client.chat.completions.create(

model="gpt-4-turbo-preview",

messages=[

{"role": "user", "content": "Create a user"},

],

response_model=User,

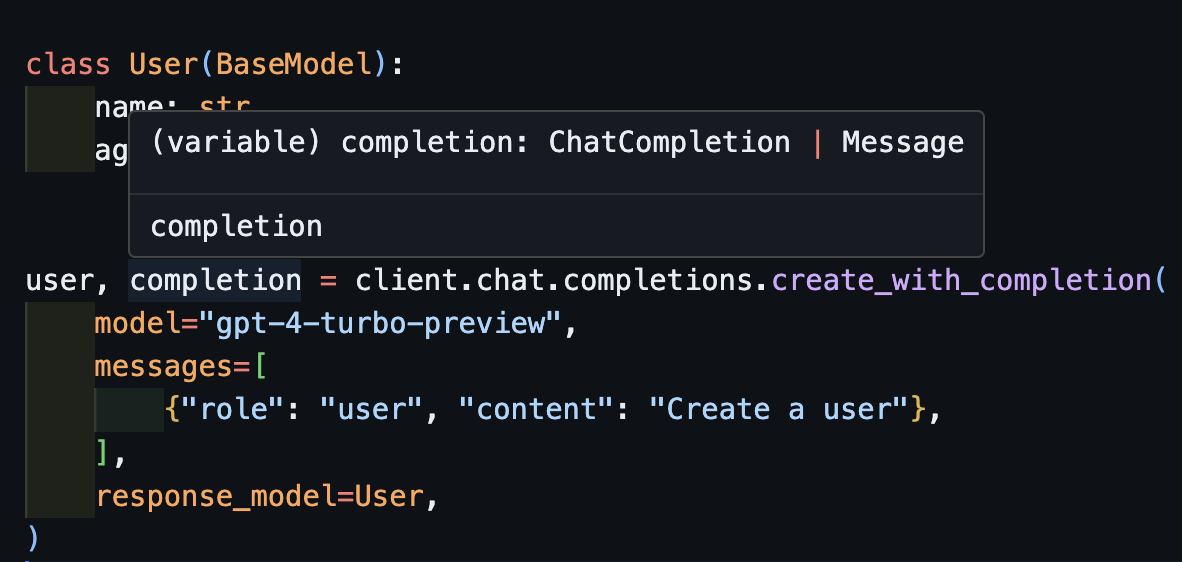

)Now if you use a IDE, you can see the type is correctly inferred.
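One way to see why the inferred type follows `response_model` is a generic signature in which the return type is tied to the class that is passed in. The sketch below assumes nothing about Instructor's real signatures: `create` and `fake_completion` are hypothetical, and a dataclass stands in for the Pydantic model to keep the snippet dependency-free.

```python
import json
from dataclasses import dataclass
from typing import Type, TypeVar

T = TypeVar("T")


@dataclass
class User:
    name: str
    age: int


def fake_completion(prompt: str) -> str:
    """Hypothetical stand-in for a model call that returns JSON text."""
    return '{"name": "Jason", "age": 25}'


def create(prompt: str, response_model: Type[T]) -> T:
    """The return type T is bound to whatever class is passed as response_model."""
    data = json.loads(fake_completion(prompt))
    return response_model(**data)


user = create("Create a user", response_model=User)
# A type checker infers `user: User`, so `user.age` is known to be an int.
print(user.age + 1)
#> 26
```

Because `T` appears in both the parameter and the return annotation, editors and type checkers can resolve the result to `User` without a cast.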

This will also work correctly with asynchronous clients.

```python
import openai
import instructor
from pydantic import BaseModel

client = instructor.from_openai(openai.AsyncOpenAI())


class User(BaseModel):
    name: str
    age: int


async def extract():
    return await client.chat.completions.create(
        model="gpt-4-turbo-preview",
        messages=[
            {"role": "user", "content": "Create a user"},
        ],
        response_model=User,
    )
```

Notice that because `extract()` simply returns the result of the `create` call, it is inferred to return the correct `User` type.

You can also return the original completion object

import openai

import instructor

from pydantic import BaseModel

client = instructor.from_openai(openai.OpenAI())

class User(BaseModel):

name: str

age: int

user, completion = client.chat.completions.create_with_completion(

model="gpt-4-turbo-preview",

messages=[

{"role": "user", "content": "Create a user"},

],

response_model=User,

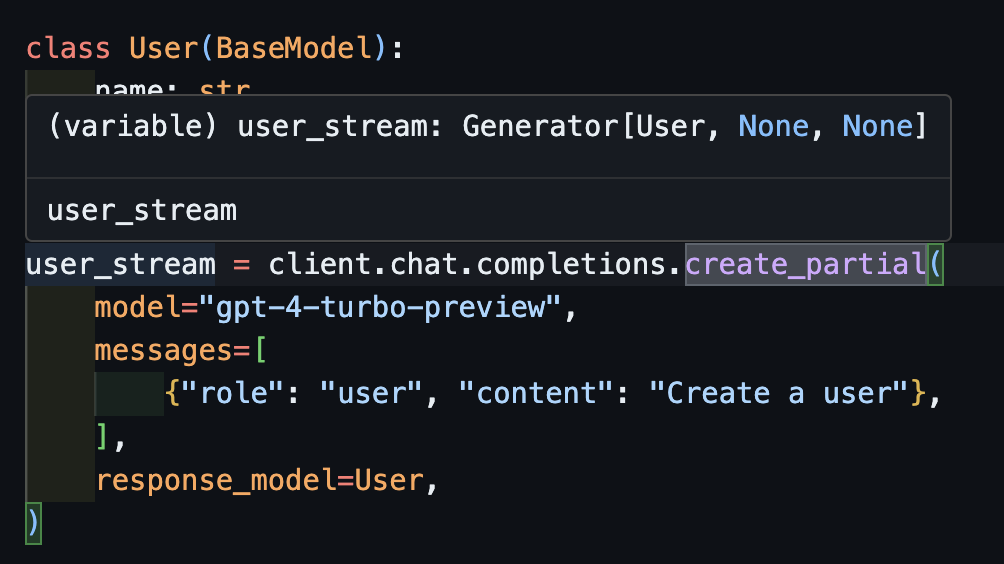

)In order to handle streams, we still support Iterable[T] and Partial[T] but to simply the type inference, we've added create_iterable and create_partial methods as well!

```python
import openai
import instructor
from pydantic import BaseModel

client = instructor.from_openai(openai.OpenAI())


class User(BaseModel):
    name: str
    age: int


user_stream = client.chat.completions.create_partial(
    model="gpt-4-turbo-preview",
    messages=[
        {"role": "user", "content": "Create a user"},
    ],
    response_model=User,
)

for user in user_stream:
    print(user)
    #> name=None age=None
    #> name=None age=None
    #> name=None age=None
    #> name=None age=None
    #> name=None age=25
    #> name=None age=25
    #> name=None age=25
    #> name=None age=25
    #> name=None age=25
    #> name=None age=25
    #> name='John Doe' age=25
```

Notice now that the type inferred is `Generator[User, None]`.

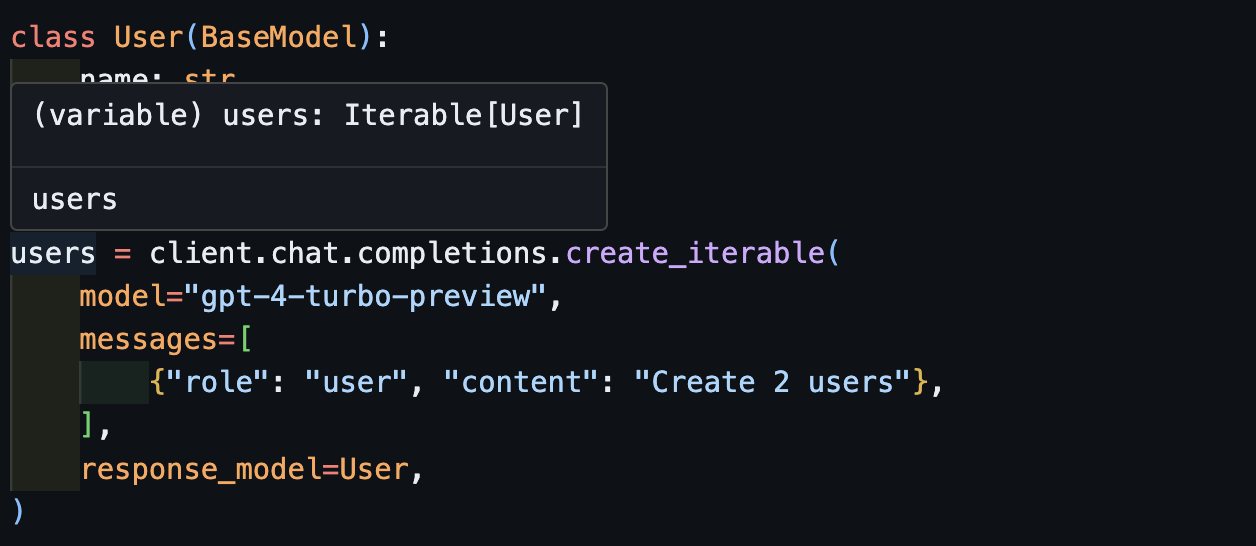
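A common way to consume a partial stream is to act on every snapshot as it arrives and keep the last one as the final result. The sketch below simulates this without a model call: `PartialUser` and `fake_partial_stream` are hypothetical stand-ins for what `create_partial` yields, with every field optional until it has streamed in.

```python
from typing import Iterator, Optional

from pydantic import BaseModel


class PartialUser(BaseModel):
    # Every field is optional because it may not have streamed in yet.
    name: Optional[str] = None
    age: Optional[int] = None


def fake_partial_stream() -> Iterator[PartialUser]:
    """Yield progressively more complete snapshots, like a partial stream."""
    yield PartialUser()
    yield PartialUser(age=25)
    yield PartialUser(name="John Doe", age=25)


final: Optional[PartialUser] = None
for snapshot in fake_partial_stream():
    print(snapshot)  # e.g. refresh a UI with whatever is known so far
    final = snapshot

#> name=None age=None
#> name=None age=25
#> name='John Doe' age=25
```

After the loop, `final` holds the most complete snapshot, which is the one to treat as the finished object.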

We get an iterable of objects when we want to extract multiple objects.

```python
import openai
import instructor
from pydantic import BaseModel

client = instructor.from_openai(openai.OpenAI())


class User(BaseModel):
    name: str
    age: int


users = client.chat.completions.create_iterable(
    model="gpt-4-turbo-preview",
    messages=[
        {"role": "user", "content": "Create 2 users"},
    ],
    response_model=User,
)

for user in users:
    print(user)
    #> name='John' age=30
    #> name='Jane' age=25
```

We invite you to contribute to evals in pytest as a way to monitor the quality of the OpenAI models and the Instructor library. To get started, check out the evals for Anthropic and OpenAI and contribute your own evals in the form of pytest tests. These evals will be run once a week and the results will be posted.

If you want to help, check out some of the issues marked as good-first-issue or help-wanted. They could be anything from code improvements to a guest blog post or a new cookbook.

We also provide some added CLI functionality for convenience:

- `instructor jobs`: This helps with the creation of fine-tuning jobs with OpenAI. Simply use `instructor jobs create-from-file --help` to get started creating your first fine-tuned GPT-3.5 model.

- `instructor files`: Manage your uploaded files with ease. You'll be able to create, delete, and upload files all from the command line.

- `instructor usage`: Instead of heading to the OpenAI site each time, you can monitor your usage from the CLI and filter by date and time period. Note that usage often takes ~5-10 minutes to update on OpenAI's side.

This project is licensed under the terms of the MIT License.