- Python: 3.6.9 | packaged by conda-forge | (default, Mar 6 2020, 18:58:41) [GCC 7.3.0]
- CUDA available: True
- GPU 0: Xavier
- CUDA_HOME: /usr/local/cuda-10.2
- NVCC: Cuda compilation tools, release 10.2, V10.2.89
- GCC: gcc (Ubuntu/Linaro 7.5.0-3ubuntu1~18.04) 7.5.0

- PyTorch: 1.10.0 (for Python 3.6, use torch-1.10.0-cp36-cp36m-linux_aarch64.whl from https://forums.developer.nvidia.com/t/pytorch-for-jetson/72048)

- PyTorch compiling details: PyTorch built with:

- GCC 7.5

- C++ Version: 201402

- OpenMP 201511 (a.k.a. OpenMP 4.5)

- LAPACK is enabled (usually provided by MKL)

- NNPACK is enabled

- CPU capability usage: NO AVX

- CUDA Runtime 10.2

- NVCC architecture flags: -gencode;arch=compute_53,code=sm_53;-gencode;arch=compute_62,code=sm_62;-gencode;arch=compute_72,code=sm_72

- CuDNN 8.0

- Build settings: BLAS_INFO=open, BUILD_TYPE=Release, CUDA_VERSION=10.2, CUDNN_VERSION=8.0.0, CXX_COMPILER=/usr/bin/c++, CXX_FLAGS= -Wno-deprecated -fvisibility-inlines-hidden -DUSE_PTHREADPOOL -fopenmp -DNDEBUG -DUSE_KINETO -DLIBKINETO_NOCUPTI -DUSE_XNNPACK -DSYMBOLICATE_MOBILE_DEBUG_HANDLE -DEDGE_PROFILER_USE_KINETO -O2 -fPIC -Wno-narrowing -Wall -Wextra -Werror=return-type -Wno-missing-field-initializers -Wno-type-limits -Wno-array-bounds -Wno-unknown-pragmas -Wno-sign-compare -Wno-unused-parameter -Wno-unused-variable -Wno-unused-function -Wno-unused-result -Wno-unused-local-typedefs -Wno-strict-overflow -Wno-strict-aliasing -Wno-error=deprecated-declarations -Wno-stringop-overflow -Wno-psabi -Wno-error=pedantic -Wno-error=redundant-decls -Wno-error=old-style-cast -fdiagnostics-color=always -faligned-new -Wno-unused-but-set-variable -Wno-maybe-uninitialized -fno-math-errno -fno-trapping-math -Werror=format -DMISSING_ARM_VST1 -DMISSING_ARM_VLD1 -Wno-stringop-overflow, FORCE_FALLBACK_CUDA_MPI=1, LAPACK_INFO=open, TORCH_VERSION=1.10.0, USE_CUDA=ON, USE_CUDNN=ON, USE_EIGEN_FOR_BLAS=ON, USE_EXCEPTION_PTR=1, USE_GFLAGS=OFF, USE_GLOG=OFF, USE_MKL=OFF, USE_MKLDNN=OFF, USE_MPI=ON, USE_NCCL=0, USE_NNPACK=ON, USE_OPENMP=ON,

- TorchVision: 0.11.1
- OpenCV: 4.8.0

- MMCV: 1.3.17 (must satisfy 1.3.17 <= version <= 1.8.0; 1.7.1 is slow)

- MMCV Compiler: GCC 7.5
- MMCV CUDA Compiler: 10.2

- MMDetection: 2.27.0+ (do not use a version above 2.27: 2.28.0 deprecates support for Python 3.6, see https://github.com/open-mmlab/mmdetection/releases)

- MMDeploy: 0.7.0 (do not use a version above 0.7.0: 0.11 requires changing the Python version requirement to 3.6 in MMDetection, and 0.14 fails because it needs a newer protobuf that requires Python 3.7), plus the fix for open-mmlab#114.

Patched code for open-mmlab#114:

    #if NV_TENSORRT_MAJOR > 7
    context_->setOptimizationProfileAsync(0, static_cast<cudaStream_t>(stream_.GetNative()));
    #else
    context_->setOptimizationProfile(0);
    #endif
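The version limits in the list above can be checked before installing anything. Below is a minimal sketch using only the standard library, since `packaging` may not be available in this Python 3.6 environment. The `CONSTRAINTS` dict and the helper names are hypothetical; they simply transcribe the limits above (MMCV from 1.3.17 to 1.8.0, MMDetection no newer than 2.27.x, MMDeploy no newer than 0.7.0). Pre-release tags such as `rc4` are ignored, so treat it as a rough guard rather than a full version resolver.

```python
import operator
import re

# Hypothetical table transcribing the limits listed above for this Jetson / Python 3.6 setup.
CONSTRAINTS = {
    "mmcv": ">=1.3.17,<=1.8.0",
    "mmdet": "<2.28.0",      # 2.28.0 deprecates Python 3.6
    "mmdeploy": "<=0.7.0",
}

_OPS = {">=": operator.ge, "<=": operator.le, "==": operator.eq,
        ">": operator.gt, "<": operator.lt}
# Two-character operators come first so ">=" is not read as ">".
_CLAUSE = re.compile(r"^(>=|<=|==|>|<)\s*(\S+)$")


def parse_version(text):
    """'2.27.0+abc' -> (2, 27, 0). Drops the local '+...' part and any rc/dev tag."""
    parts = []
    for piece in text.split("+", 1)[0].split("."):
        digits = re.match(r"\d+", piece)
        if digits is None:
            break
        parts.append(int(digits.group()))
    return tuple(parts)


def satisfies(version, spec):
    """True if `version` meets every comma-separated clause in `spec`."""
    current = parse_version(version)
    for clause in spec.split(","):
        match = _CLAUSE.match(clause.strip())
        if match is None:
            raise ValueError("unsupported clause: %r" % clause)
        op, target = match.groups()
        bound = parse_version(target)
        width = max(len(current), len(bound))
        # Pad with zeros so that (1, 8) compares equal to (1, 8, 0).
        left = current + (0,) * (width - len(current))
        right = bound + (0,) * (width - len(bound))
        if not _OPS[op](left, right):
            return False
    return True


if __name__ == "__main__":
    # Example values taken from the environment list above.
    installed = {"mmcv": "1.3.17", "mmdet": "2.27.0+", "mmdeploy": "0.7.0"}
    for name, version in installed.items():
        status = "ok" if satisfies(version, CONSTRAINTS[name]) else "OUT OF RANGE"
        print(name, version, status)
```

Hand-written comparison keeps the script dependency-free; on a machine where `packaging` is installed, `packaging.specifiers.SpecifierSet` would be the more complete choice.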

Retinanet:

nvidia@xavier0:/data/azuryl/mmdeploy_0.7.0$ python ./tools/deploy.py configs/mmdet/detection/detection_tensorrt_dynamic-320x320-1344x1344.py /data/azuryl/mmdetection_2.27.0/configs/retinanet/retinanet_r18_fpn_1x_coco.py /data/azuryl/retinanet_r18_fpn_1x_coco_20220407_171055-614fd399.pth /data/azuryl/mmdetection_2.27.0/demo/demo.jpg --work-dir work_dir --show --device cuda:0 --dump-info

retinanet_r18_fpn_1x_coco_tensorrt.png
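Every conversion in this walkthrough calls `tools/deploy.py` with the same trailing flags (`--work-dir`, `--show`, `--device`, `--dump-info`), changing only the deploy config, model config, checkpoint and image. A small wrapper can avoid retyping those long paths. This is a sketch under the assumption that it runs from the mmdeploy checkout; `deploy_argv` and `run_deploy` are hypothetical helpers, not part of MMDeploy, and they only pass the flags that appear in the commands here.

```python
import subprocess
import sys


def deploy_argv(deploy_cfg, model_cfg, checkpoint, image,
                work_dir="work_dir", device="cuda:0", show=True, dump_info=True):
    """Build the tools/deploy.py argument list in the same order as the command above."""
    # sys.executable keeps the child process on the same (conda) interpreter.
    argv = [sys.executable, "./tools/deploy.py", deploy_cfg, model_cfg, checkpoint, image,
            "--work-dir", work_dir]
    if show:
        argv.append("--show")
    argv += ["--device", device]
    if dump_info:
        argv.append("--dump-info")
    return argv


def run_deploy(*args, **kwargs):
    """Run the conversion; raises CalledProcessError if deploy.py exits non-zero."""
    return subprocess.run(deploy_argv(*args, **kwargs), check=True)
```

Usage: `run_deploy(deploy_cfg, model_cfg, checkpoint, image)` with the four positional paths from any of the deploy commands in this document; pass `show=False` on a headless board.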

MASK_RCNN:

Use configs/mmdet/instance-seg/instance-seg_tensorrt_dynamic-320x320-1344x1344.py for Mask R-CNN; configs/mmdet/detection/detection_tensorrt_dynamic-320x320-1344x1344.py is not meant for the instance segmentation task.

python ./tools/deploy.py configs/mmdet/instance-seg/instance-seg_tensorrt_dynamic-320x320-1344x1344.py /data/azuryl/mmdetection_2.27.0/configs/mask_rcnn/mask_rcnn_r50_fpn_2x_coco.py /data/azuryl/mask_rcnn_r50_fpn_2x_coco_bbox_mAP-0.392__segm_mAP-0.354_20200505_003907-3e542a40.pth /data/azuryl/mmdetection_2.27.0/demo/demo.jpg --work-dir work_dir --show --device cuda:0 --dump-info

mask_rcnn/mask_rcnn_r50_fpn_2x_coco_tensorrt.png

MASK_RCNN FP16

python ./tools/deploy.py configs/mmdet/instance-seg/instance-seg_tensorrt-fp16_dynamic-320x320-1344x1344.py /data/azuryl/mmdetection_2.27.0/configs/mask_rcnn/mask_rcnn_r50_fpn_2x_coco.py /data/azuryl/mask_rcnn_r50_fpn_2x_coco_bbox_mAP-0.392__segm_mAP-0.354_20200505_003907-3e542a40.pth /data/azuryl/mmdetection_2.27.0/demo/demo.jpg --work-dir work_dir --show --device cuda:0 --dump-info

MASK_RCN_FP16_tensorrt.png

MASK_RCNN INT8

Download the COCO annotations:

wget -c http://images.cocodataset.org/annotations/annotations_trainval2017.zip

Set data_root to your COCO directory, following https://github.com/open-mmlab/mmdetection/blob/v2.27.0/configs/_base_/datasets/coco_instance.py#L3, e.g. data_root = '/usrpath/coco/'

Unzip annotations/annotations_trainval2017.zip and place the result in the mmdeploy folder.
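A wrong data_root typically only surfaces once the INT8 build starts, so a quick check that the expected COCO files exist can help. This is a minimal sketch, assuming the validation-split layout that mmdetection 2.27's configs/_base_/datasets/coco_instance.py builds from data_root (annotations/instances_val2017.json plus a val2017/ image folder). `missing_coco_paths` is a hypothetical helper, and which files the calibration step actually reads is an assumption, not something this document states.

```python
import os

# Paths relative to data_root, mirroring the val split in mmdetection 2.27's
# configs/_base_/datasets/coco_instance.py (assumed to be what gets read here).
REQUIRED_COCO_PATHS = (
    "annotations/instances_val2017.json",
    "val2017",
)


def missing_coco_paths(data_root, exists=os.path.exists):
    """Return the required paths that do not exist under data_root.

    `exists` defaults to os.path.exists; it can be swapped for a fake check in tests.
    """
    return [rel for rel in REQUIRED_COCO_PATHS
            if not exists(os.path.join(data_root, rel))]
```

Usage: `missing_coco_paths('/usrpath/coco/')` returns an empty list once the annotations and images are in place.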

python ./tools/deploy.py configs/mmdet/instance-seg/instance-seg_tensorrt-int8_dynamic-320x320-1344x1344.py /data/azuryl/mmdetection_2.27.0/configs/mask_rcnn/mask_rcnn_r50_fpn_2x_coco.py /data/azuryl/mask_rcnn_r50_fpn_2x_coco_bbox_mAP-0.392__segm_mAP-0.354_20200505_003907-3e542a40.pth /data/azuryl/mmdetection_2.27.0/demo/demo.jpg --work-dir work_dir --show --device cuda:0 --dump-info

MASK_RCNN_INT8_tensorrt.png

MASK_RCNN FP16 static-800x1344

python ./tools/deploy.py configs/mmdet/instance-seg/instance-seg_tensorrt-fp16_static-800x1344.py /data/azuryl/mmdetection_2.27.0/configs/mask_rcnn/mask_rcnn_r50_fpn_2x_coco.py /data/azuryl/mask_rcnn_r50_fpn_2x_coco_bbox_mAP-0.392__segm_mAP-0.354_20200505_003907-3e542a40.pth /data/azuryl/mmdetection_2.27.0/demo/demo.jpg --work-dir work_dir --show --device cuda:0 --dump-info

MASK_RCN_FP16_static-800x1344.tensorrt.png
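The deploy configs used above follow one naming pattern, `configs/mmdet/<task>/<task>_tensorrt[-<precision>]_<shape>.py`, where the precision suffix is absent for the default build. A hypothetical helper that composes those paths makes it easier to switch between the dynamic, FP16, INT8 and static variants. It only reproduces the file names seen in this document and does not check that a given combination exists in every MMDeploy release.

```python
def deploy_config_path(task, shape, precision=None, backend="tensorrt"):
    """Compose an mmdet deploy-config path from its parts.

    task: "detection" or "instance-seg" (used for both the folder and the file prefix)
    shape: e.g. "dynamic-320x320-1344x1344" or "static-800x1344"
    precision: None for the default build, or e.g. "fp16" / "int8"
    """
    engine = backend if precision is None else "%s-%s" % (backend, precision)
    return "configs/mmdet/%s/%s_%s_%s.py" % (task, task, engine, shape)
```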

Installing mmdeploy 1.2.0 + mmdetection 3.1.0 + mmcv 2.0.1:

1 Install mmcv 2.0.1

See open-mmlab#2301.

1) git clone https://github.com/open-mmlab/mmcv.git

2) Edit setup.py and remove python_requires='>=3.7'.

3) Build from source; you may need to install build tools first with conda install -c conda-forge gxx_linux-aarch64:

pip install -v -e .
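The setup.py edit in step 2) can be scripted if the checkout is rebuilt often. This is a minimal sketch, assuming setup.py declares the requirement on its own line as `python_requires='>=3.7',`; the helper names are hypothetical, and it keeps a `setup.py.bak` copy so the edit is easy to undo.

```python
import re
from pathlib import Path

# Matches a whole `python_requires='...'` keyword line (with optional trailing comma).
_PYTHON_REQUIRES = re.compile(
    r"^[ \t]*python_requires\s*=\s*['\"][^'\"]*['\"],?[ \t]*\n", re.MULTILINE)


def drop_python_requires(source):
    """Return setup.py source text with the python_requires line removed."""
    return _PYTHON_REQUIRES.sub("", source)


def patch_setup_py(path="setup.py"):
    """Edit setup.py in place, keeping a .bak copy; returns True if anything changed."""
    setup = Path(path)
    original = setup.read_text()
    patched = drop_python_requires(original)
    if patched == original:
        return False
    Path(str(setup) + ".bak").write_text(original)
    setup.write_text(patched)
    return True
```

Usage: call `patch_setup_py()` from the root of the mmcv clone, then run `pip install -v -e .`.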

2 Install mmdeploy v1.2.0

Because CUDA here is 10.2, comment out these lines in mmdeploy_1.2.0/mmdeploy/backend/tensorrt/utils.py:

    if cuda_version is not None:
        version_major = int(cuda_version.split('.')[0])
        if version_major < 11:  # my CUDA is 10.2
            # cu11 support cublasLt, so cudnn heuristic tactic should disable CUBLAS_LT # noqa E501
            tactic_source = config.get_tactic_sources() - (
                1 << int(trt.TacticSource.CUBLAS_LT))
            config.set_tactic_sources(tactic_source)

and replace them with:

    config.max_workspace_size = max_workspace_size
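The code being commented out clears the CUBLAS_LT bit from TensorRT's tactic-source bitmask by subtraction. Subtraction only clears a bit that is already set; if it is not set, it borrows from higher bits and changes other sources. The sketch below illustrates that arithmetic with plain integers. `TacticSource` here is a hypothetical stand-in with made-up bit positions, not the real `tensorrt.TacticSource`, and nothing is imported from TensorRT; it shows the bit logic only and is not a diagnosis of the CUDA 10.2 failure.

```python
from enum import IntEnum


class TacticSource(IntEnum):
    # Hypothetical bit positions standing in for tensorrt.TacticSource.
    CUBLAS = 0
    CUBLAS_LT = 1
    CUDNN = 2


def needs_cublaslt_disabled(cuda_version):
    """Mirror of the `version_major < 11` check: True for CUDA 10.x strings."""
    if cuda_version is None:
        return False
    return int(cuda_version.split(".")[0]) < 11


def clear_by_subtraction(mask, source):
    # What the original code does; only correct when the bit is already set.
    return mask - (1 << int(source))


def clear_bit(mask, source):
    # AND with the inverted bit: other bits are untouched whether or not it was set.
    return mask & ~(1 << int(source))


ALL_SOURCES = sum(1 << int(s) for s in TacticSource)  # 0b111 with the values above
```

With the bit already cleared (`0b101`), subtraction yields `0b011`, re-enabling CUBLAS_LT and dropping CUDNN, while `clear_bit` returns `0b101` unchanged.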

3 Install mmdetection 3.1.0

4 Install mmengine 0.8.3

5 Fix the GEOS issue:

conda install -c conda-forge libstdcxx-ng

conda install geos

6 Fix the display issue:

Comment out these lines in /data/azuryl/mmdeploy_1.2.0/mmdeploy/backend/tensorrt/wrapper.py:

https://github.com/open-mmlab/mmdeploy/blob/v1.2.0/mmdeploy/backend/tensorrt/wrapper.py#L85

https://github.com/open-mmlab/mmdeploy/blob/v1.2.0/mmdeploy/backend/tensorrt/wrapper.py#L96-L97

python ./tools/deploy.py configs/mmdet/instance-seg/instance-seg_tensorrt-fp16_dynamic-320x320-1344x1344.py /data/azuryl/mmdetection_3.1.0/configs/mask_rcnn/mask-rcnn_r50_fpn_2x_coco.py /data/azuryl/mask_rcnn_r50_fpn_2x_coco_bbox_mAP-0.392__segm_mAP-0.354_20200505_003907-3e542a40.pth /data/azuryl/mmdetection_2.27.0/demo/demo.jpg --work-dir /data/azuryl/mmdeploy_model/maskrcnn_d320_1344 --show --device cuda:0 --dump-info

maskrcnn_mmdeploy1.2.0_tensorrt.png

MASKRCNN_fp16_MMdp1201_tensorrt.png

Evaluation:

python ./tools/test.py configs/mmdet/instance-seg/instance-seg_tensorrt_dynamic-320x320-1344x1344.py /data/azuryl/mmdetection_3.1.0/configs/mask_rcnn/mask-rcnn_r50_fpn_2x_coco.py --model /data/azuryl/mmdeploy_model/maskrcnn_d320_1344/end2end.engine --device cuda:0 --log2file LOG2FILE --speed-test

python ./tools/test.py configs/mmdet/instance-seg/instance-seg_tensorrt_dynamic-320x320-1344x1344.py /data/azuryl/mmdetection_3.1.0/configs/mask_rcnn/mask-rcnn_r50_fpn_2x_coco.py --model /data/azuryl/mmdeploy_model/maskrcnn_f16_d320_1344/end2end.engine --device cuda:0 --log2file LOG2FILEf16 --speed-test

python -c 'import mmdeploy; print(mmdeploy.__version__)'

python -c 'import onnx; print(onnx.__version__)'

python -c 'import cv2; print(cv2.__version__)'

python -c 'import google.protobuf; print(google.protobuf.__version__)'
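The four one-liners can be folded into a single report. This is a minimal sketch using `importlib`; the module list is an assumption (protobuf is importable as `google.protobuf`, not `protobuf`). An import that fails for a reason other than a missing package, for example a version assertion inside the package, is reported by exception type instead of crashing the script.

```python
import importlib

# Modules to report; protobuf is importable as google.protobuf, not protobuf.
MODULES = ("mmdeploy", "mmcv", "mmdet", "mmengine", "onnx", "cv2",
           "google.protobuf", "tensorrt")


def module_version(name):
    """Return __version__ for `name`, None if not installed, or a failure note."""
    try:
        module = importlib.import_module(name)
    except ImportError:
        return None
    except Exception as exc:  # e.g. a version assertion raised while importing
        return "import failed: %s" % type(exc).__name__
    return getattr(module, "__version__", "unknown")


def version_report(names=MODULES):
    """List of (module, version) pairs, in the order given."""
    return [(name, module_version(name)) for name in names]


if __name__ == "__main__":
    for name, version in version_report():
        print("%-16s %s" % (name, "not installed" if version is None else version))
```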

Highlights

MMDeploy 1.x has been released, adapted to the upstream codebases from OpenMMLab 2.0. Please align the versions when using it.

The default branch has been switched from master to main. MMDeploy 0.x (master) will be deprecated, and new features will only be added to MMDeploy 1.x (main) in the future.

| mmdeploy | mmengine | mmcv | mmdet | others |
|---|---|---|---|---|
| 0.x.y | - | <=1.x.y | <=2.x.y | 0.x.y |
| 1.x.y | 0.x.y | 2.x.y | 3.x.y | 1.x.y |
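The table can be turned into a quick major-version check. This is a minimal sketch; `check_stack` is a hypothetical helper that only encodes the mmengine, mmcv and mmdet columns (the `others` column is left out), and `-` is treated as "not constrained".

```python
# Allowed major versions for each MMDeploy major, transcribed from the table above.
# None stands for "-" (mmengine is not part of the 0.x stack).
COMPAT = {
    0: {"mmengine": None, "mmcv": {0, 1}, "mmdet": {0, 1, 2}},
    1: {"mmengine": {0}, "mmcv": {2}, "mmdet": {3}},
}


def major(version):
    return int(version.split(".")[0])


def check_stack(mmdeploy_version, installed):
    """Return the names in `installed` whose major version breaks the table.

    Raises KeyError for an MMDeploy major that the table does not cover.
    """
    row = COMPAT[major(mmdeploy_version)]
    mismatched = []
    for name in sorted(installed):
        allowed = row.get(name)  # None: "-" in the table, or a column not encoded here
        if allowed is not None and major(installed[name]) not in allowed:
            mismatched.append(name)
    return mismatched
```

For the mmdeploy 1.2.0 stack in this document (mmcv 2.0.1, mmdetection 3.1.0, mmengine 0.8.3) it returns an empty list.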

deploee offers over 2,300 AI models in ONNX, NCNN, TRT and OpenVINO formats. Featuring a built-in list of real hardware devices, deploee enables users to convert Torch models into any target inference format for profiling purposes.

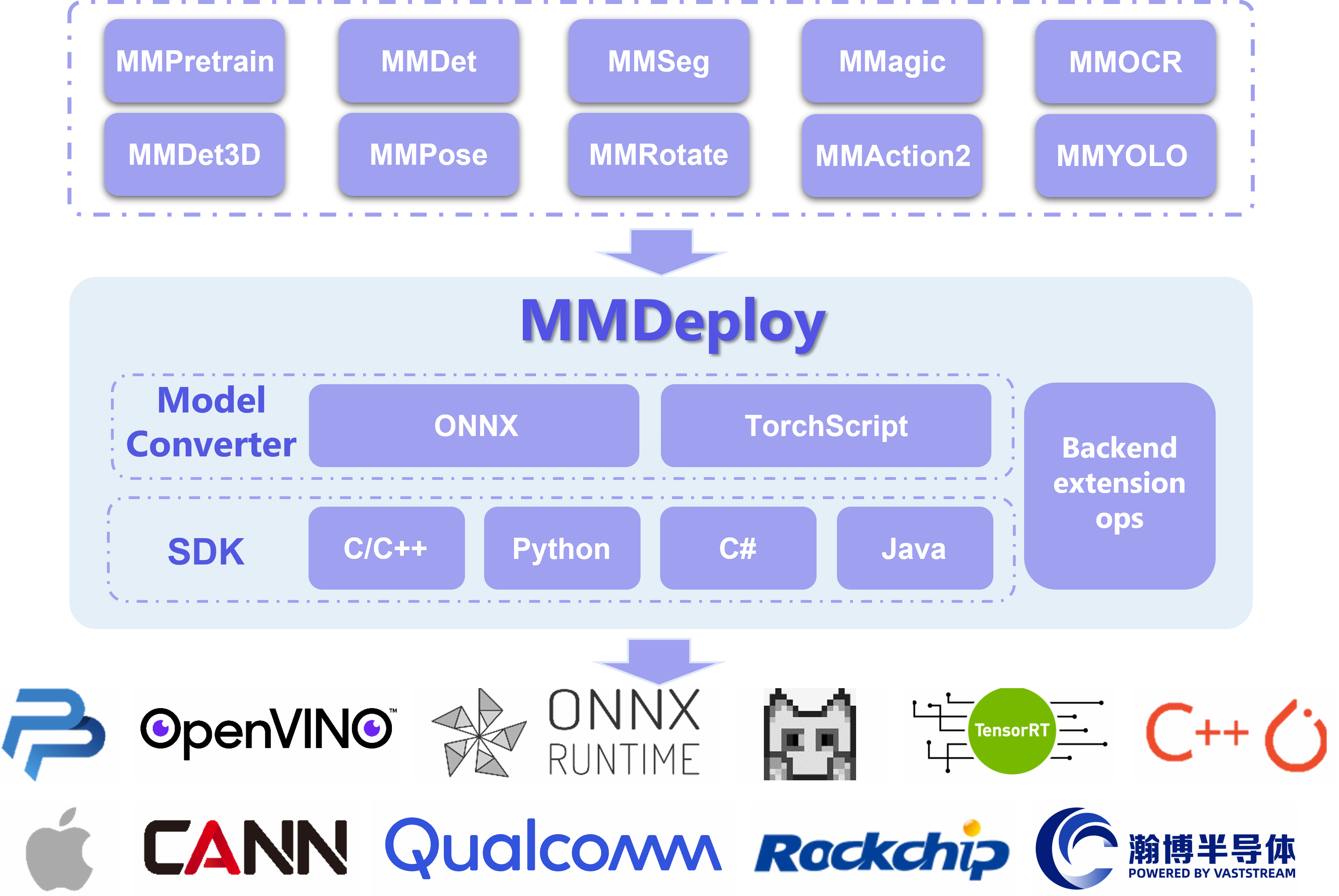

Introduction

MMDeploy is an open-source deep learning model deployment toolset. It is a part of the OpenMMLab project.

Main features

Fully support OpenMMLab models

The currently supported codebases and models are as follows, and more will be included in the future.

Multiple inference backends are available

The supported Device-Platform-InferenceBackend matrix is presented as follows, and support for more will be added.

The benchmark can be found here.

Efficient and scalable C/C++ SDK Framework

All kinds of modules in the SDK can be extended, such as Transform for image processing, Net for neural network inference, Module for postprocessing, and so on.

Documentation

Please read getting_started for the basic usage of MMDeploy. We also provide tutorials on:

- Build

- User Guide

- Developer Guide

- Custom Backend Ops

- FAQ

- Contributing

Benchmark and Model zoo

You can find the supported models here and their performance in the benchmark.

Contributing

We appreciate all contributions to MMDeploy. Please refer to CONTRIBUTING.md for the contributing guideline.

Acknowledgement

We would like to sincerely thank the following teams for their contributions to MMDeploy:

Citation

If you find this project useful in your research, please consider citing:

    @misc{mmdeploy,
        title={OpenMMLab's Model Deployment Toolbox.},
        author={MMDeploy Contributors},
        howpublished = {\url{https://github.com/open-mmlab/mmdeploy}},
        year={2021}
    }

License

This project is released under the Apache 2.0 license.

Projects in OpenMMLab

- MMEngine: OpenMMLab foundational library for training deep learning models.

- MMCV: OpenMMLab foundational library for computer vision.

- MMPretrain: OpenMMLab pre-training toolbox and benchmark.

- MMagic: OpenMMLab Advanced, Generative and Intelligent Creation toolbox.

- MMDetection: OpenMMLab detection toolbox and benchmark.

- MMDetection3D: OpenMMLab's next-generation platform for general 3D object detection.

- MMYOLO: OpenMMLab YOLO series toolbox and benchmark.

- MMRotate: OpenMMLab rotated object detection toolbox and benchmark.

- MMTracking: OpenMMLab video perception toolbox and benchmark.

- MMSegmentation: OpenMMLab semantic segmentation toolbox and benchmark.

- MMOCR: OpenMMLab text detection, recognition, and understanding toolbox.

- MMPose: OpenMMLab pose estimation toolbox and benchmark.

- MMHuman3D: OpenMMLab 3D human parametric model toolbox and benchmark.

- MMFewShot: OpenMMLab few-shot learning toolbox and benchmark.

- MMAction2: OpenMMLab's next-generation action understanding toolbox and benchmark.

- MMFlow: OpenMMLab optical flow toolbox and benchmark.

- MMDeploy: OpenMMLab model deployment framework.

- MMRazor: OpenMMLab model compression toolbox and benchmark.

- MIM: MIM installs OpenMMLab packages.

- Playground: A central hub for gathering and showcasing amazing projects built upon OpenMMLab.