A Lucene plugin for Japanese morphological analysis, based on the Sudachi tokenizer

- Solr Lucene Analyzer Sudachi plugin philosophy

- Plugin compatibility with Lucene and Solr

- Plugin installation and configuration

- Local Development

- Appendix - Lucene Japanese morphological analysis landscape

- Licenses

Where possible, the plugin strives to:

- Reuse as much of the existing work by the Sudachi owners as possible, in particular the elasticsearch-sudachi plugin.

- Minimize the amount of configuration the user has to do when setting up the plugin in Solr. For example, the Sudachi dictionary is downloaded behind the scenes and unpacked into the location where the plugin consumes it at runtime.

Since the plugin is tightly coupled with Lucene, being compatible with a given version of Lucene makes the plugin compatible with the same version of Solr, up to and including Solr v9.0.0. Starting with Solr v9.1.0, Solr and Lucene version numbers diverge.

The repository has a number of tags matching Solr versions, which you can git clone before building the plugin jar.

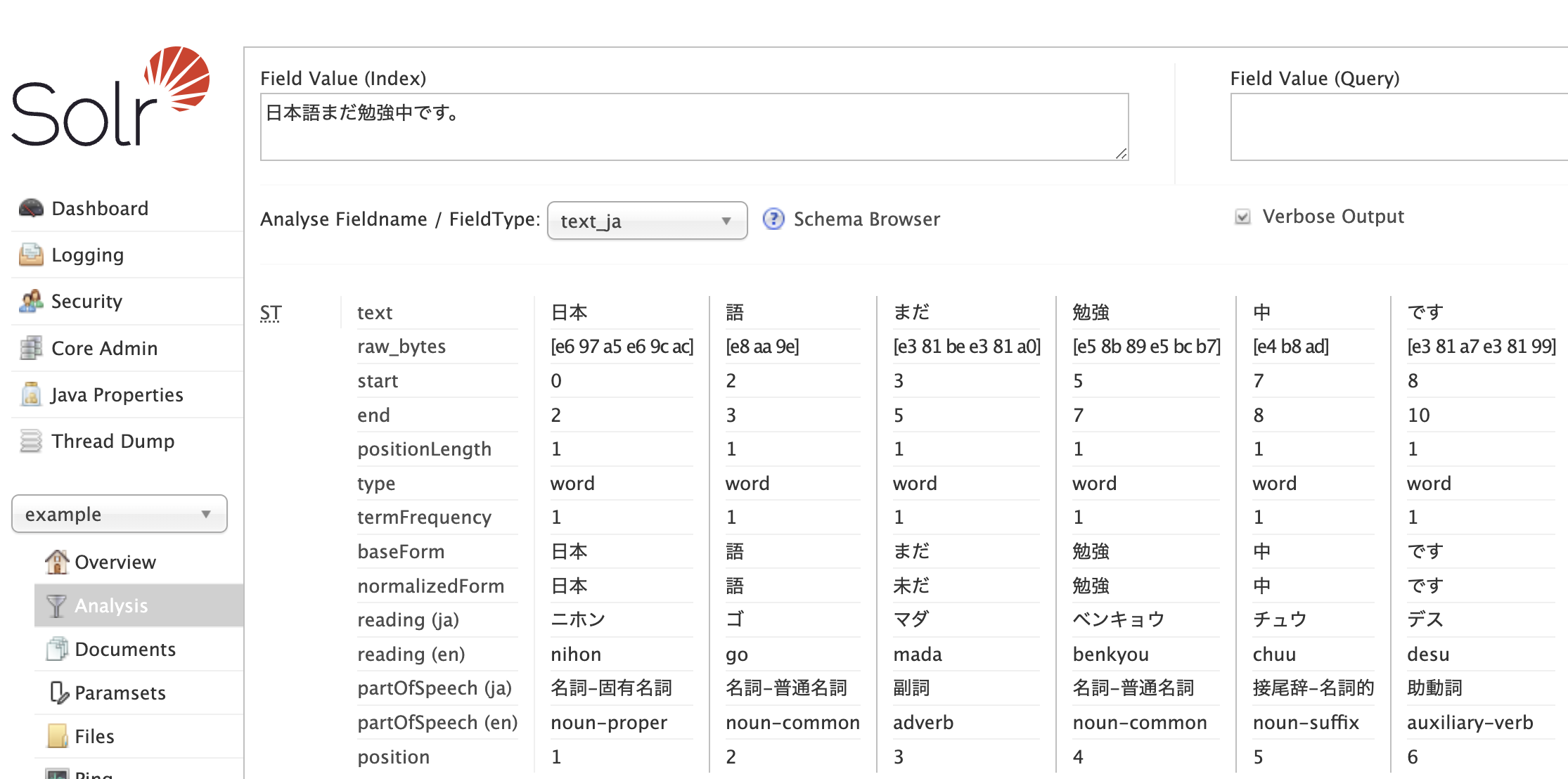

Solr field analysis screen demonstrating tokenized terms and their respective metadata, similar to Lucene Kuromoji behavior:

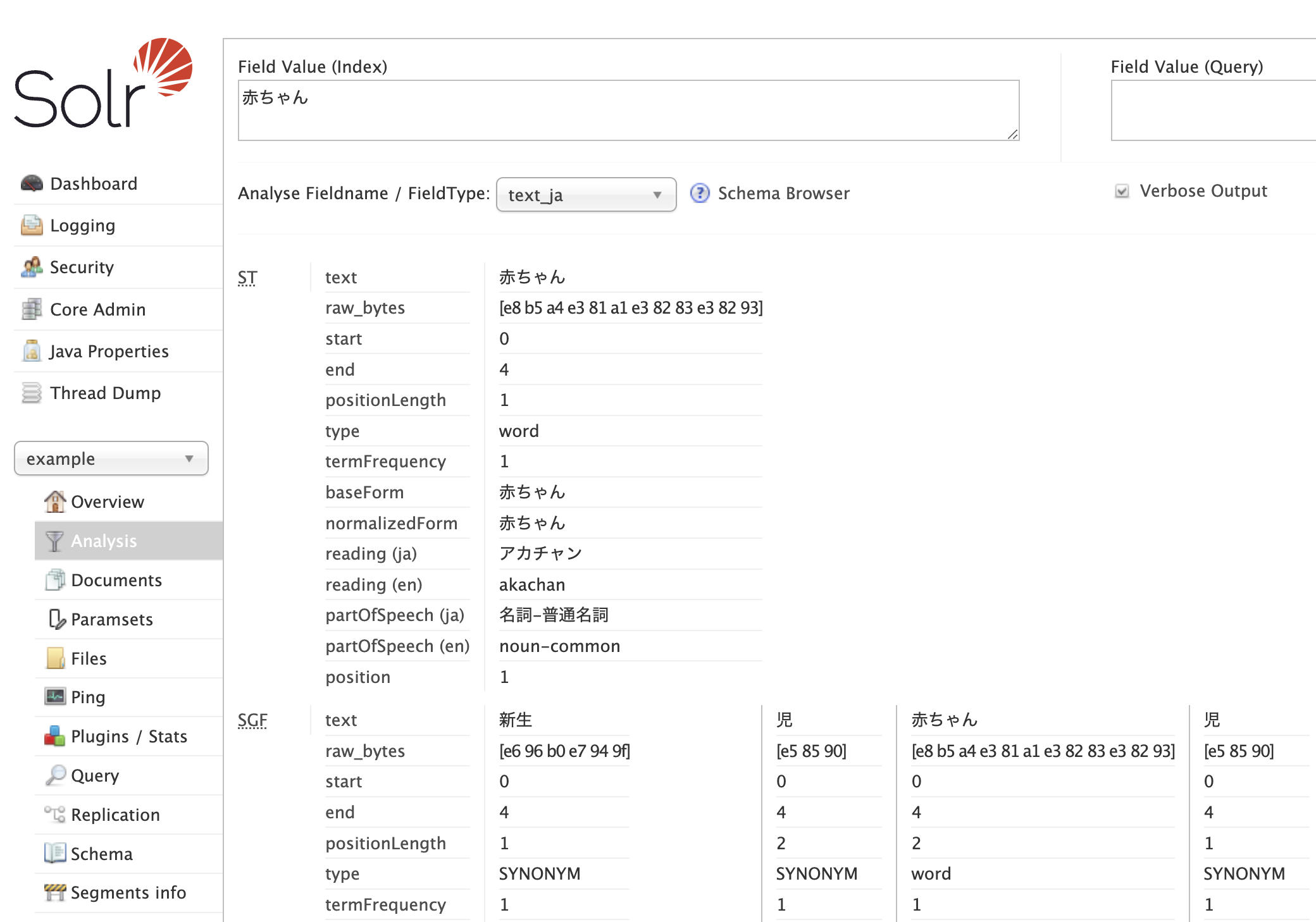

The configured synonyms are 赤ちゃん,新生児,児. Because the SynonymGraphFilterFactory was configured with a tokenizer, the synonym 新生児 was tokenized into 新生 and 児.
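To make the synonym behavior above concrete: with format="solr" and expand="true", a comma-separated line declares equivalent terms, and each term is analyzed with the configured tokenizerFactory before the synonym rules are built. The stdlib-only Java sketch below models just that idea. It is not Lucene's SolrSynonymParser (it ignores "=>" mappings, escaping and ignoreCase), and its toyTokenize function is a hypothetical stand-in for Sudachi that simply hard-codes the 新生児 → 新生 + 児 split.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

class SynonymLineSketch {

    // Hypothetical stand-in for the Sudachi tokenizer: a fixed rule that splits
    // 新生児 into 新生 + 児 and keeps every other term as a single token.
    static List<String> toyTokenize(String term) {
        if (term.equals("新生児")) {
            return Arrays.asList("新生", "児");
        }
        return Collections.singletonList(term);
    }

    /**
     * Analyzes one Solr-format line of equivalent synonyms (no "=>" mapping) as with
     * expand="true": each entry is tokenized, and every analyzed entry maps to all
     * analyzed entries of the line, the original included.
     */
    static Map<List<String>, List<List<String>>> expandLine(
            String line, Function<String, List<String>> tokenizer) {
        List<List<String>> analyzed = new ArrayList<>();
        for (String raw : line.split(",")) {
            String term = raw.trim();
            if (!term.isEmpty()) {
                analyzed.add(tokenizer.apply(term));
            }
        }
        Map<List<String>, List<List<String>>> rules = new LinkedHashMap<>();
        for (List<String> input : analyzed) {
            rules.put(input, new ArrayList<>(analyzed));
        }
        return rules;
    }

    public static void main(String[] args) {
        Map<List<String>, List<List<String>>> rules =
                expandLine("赤ちゃん,新生児,児", SynonymLineSketch::toyTokenize);
        // One line per rule, e.g. "[新生, 児] -> [[赤ちゃん], [新生, 児], [児]]"
        rules.forEach((input, outputs) -> System.out.println(input + " -> " + outputs));
    }
}
```

The multi-token key [新生, 児] is the point of the example: once a synonym is analyzed into several tokens, the filter has to emit a token graph rather than single tokens, which is why the index-time analyzer later needs FlattenGraphFilterFactory.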

Whether you run Solr in a Docker environment or on a bare-metal machine, the installation and configuration steps are the same, and they largely mirror the steps in the Dockerfiles under src/smokeTest. Run the following commands:

- Clone one of the repository tags matching your Solr version, e.g.:

  git clone -b v9.4.0 https://github.com/azagniotov/solr-lucene-analyzer-sudachi.git --depth 1

- Change to the cloned directory:

  cd solr-lucene-analyzer-sudachi

- Download and configure the dictionaries locally (see Downloading a Sudachi dictionary for more information about the behavior of this command):

  ./gradlew configureDictionariesLocally

- Assemble the plugin uber jar:

  ./gradlew -PsolrVersion=9.4.0 assemble

- Copy the built plugin jar to the Solr home lib directory:

  cp ./build/libs/solr-lucene-analyzer-sudachi*.jar /opt/solr/lib

- [When installing on bare metal machines] Sanity check Unix file permissions: check the directory permissions to make sure that Solr can read the files under /tmp/sudachi/

- [Optional] You can change the default location of the Sudachi dictionary in the file system from /tmp/sudachi/ to somewhere else. As an example, check the section Changing local Sudachi dictionary location for runtime and/or the src/smokeTest/solr_9.x.x/solr_9_4_0/Dockerfile.arm64#L52-L62
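The permission sanity check can also be scripted. The Java below is a hypothetical helper, not part of the plugin: run as the same OS user as Solr, it walks /tmp/sudachi/ (or a directory given as the first argument) and lists the regular files that this user cannot read.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

class DictionaryPermissionCheck {

    /**
     * Returns the regular files under root that the current OS user cannot read.
     * Note: an unreadable sub-directory makes Files.walk fail with an exception,
     * which is itself a sign of a permission problem.
     */
    static List<Path> unreadableFiles(Path root) throws IOException {
        try (Stream<Path> paths = Files.walk(root)) {
            return paths.filter(Files::isRegularFile)
                    .filter(path -> !Files.isReadable(path))
                    .collect(Collectors.toList());
        }
    }

    public static void main(String[] args) throws IOException {
        // Defaults to the plugin's default dictionary location.
        Path root = Paths.get(args.length > 0 ? args[0] : "/tmp/sudachi");
        if (!Files.isDirectory(root)) {
            System.err.println("Not a directory: " + root);
            System.exit(1);
        }
        List<Path> unreadable = unreadableFiles(root);
        unreadable.forEach(path -> System.out.println("Not readable: " + path));
        System.out.println(unreadable.isEmpty()
                ? "All files under " + root + " are readable by " + System.getProperty("user.name")
                : unreadable.size() + " file(s) are not readable");
    }
}
```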

This section provides a few schema.xml (or managed-schema) configuration examples for the text_ja field type:

A simple example with a single <analyzer> XML element:

<fieldType name="text_ja" class="solr.TextField" autoGeneratePhraseQueries="false" positionIncrementGap="100">

<analyzer>

<tokenizer class="io.github.azagniotov.lucene.analysis.ja.sudachi.tokenizer.SudachiTokenizerFactory" mode="search" discardPunctuation="true" />

<filter class="io.github.azagniotov.lucene.analysis.ja.sudachi.filters.SudachiBaseFormFilterFactory" />

<filter class="io.github.azagniotov.lucene.analysis.ja.sudachi.filters.SudachiPartOfSpeechStopFilterFactory" tags="lang/stoptags_ja.txt" />

<filter class="solr.CJKWidthFilterFactory" />

<!-- Removes common tokens that are typically not useful for search but that have a negative effect on ranking -->

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_ja.txt" />

<!-- Normalizes common katakana spelling variations by removing any last long sound character (U+30FC) -->

<filter class="io.github.azagniotov.lucene.analysis.ja.sudachi.filters.SudachiKatakanaStemFilterFactory" minimumLength="4" />

<!-- Lower-cases romaji characters -->

<filter class="solr.LowerCaseFilterFactory" />

</analyzer>

</fieldType>

A more comprehensive example with two <analyzer> XML elements, a query-time and an index-time analyzer, with synonym support. For synonym support, Lucene's SynonymGraphFilterFactory and FlattenGraphFilterFactory are configured.

<fieldType name="text_ja" class="solr.TextField" autoGeneratePhraseQueries="false" positionIncrementGap="100">

<analyzer type="query">

<tokenizer class="io.github.azagniotov.lucene.analysis.ja.sudachi.tokenizer.SudachiTokenizerFactory" mode="search" discardPunctuation="true" />

<!--

If you use SynonymGraphFilterFactory during indexing, you must follow it with FlattenGraphFilter

to squash tokens on top of one another like SynonymFilter, because the indexer can't directly

consume a graph.

FlattenGraphFilterFactory converts an incoming graph token stream, such as one from SynonymGraphFilter,

into a flat form so that all nodes form a single linear chain with no side paths. Every path through the

graph touches every node. This is necessary when indexing a graph token stream, because the index does

not save PositionLengthAttribute and so it cannot preserve the graph structure. However, at search time,

query parsers can correctly handle the graph and this token filter should NOT be used.

-->

<filter class="solr.SynonymGraphFilterFactory"

synonyms="synonyms_ja.txt"

ignoreCase="true"

expand="true"

format="solr"

tokenizerFactory.mode="search"

tokenizerFactory.discardPunctuation="true"

tokenizerFactory="io.github.azagniotov.lucene.analysis.ja.sudachi.tokenizer.SudachiTokenizerFactory" />

<filter class="io.github.azagniotov.lucene.analysis.ja.sudachi.filters.SudachiBaseFormFilterFactory" />

<filter class="io.github.azagniotov.lucene.analysis.ja.sudachi.filters.SudachiPartOfSpeechStopFilterFactory" tags="lang/stoptags_ja.txt" />

<filter class="solr.CJKWidthFilterFactory" />

<!-- Removes common tokens that are typically not useful for search but that have a negative effect on ranking -->

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_ja.txt" />

<!-- Normalizes common katakana spelling variations by removing any last long sound character (U+30FC) -->

<filter class="io.github.azagniotov.lucene.analysis.ja.sudachi.filters.SudachiKatakanaStemFilterFactory" minimumLength="4" />

<!-- Lower-cases romaji characters -->

<filter class="solr.LowerCaseFilterFactory" />

</analyzer>

<analyzer type="index">

<tokenizer class="io.github.azagniotov.lucene.analysis.ja.sudachi.tokenizer.SudachiTokenizerFactory" mode="search" discardPunctuation="true" />

<!--

If you use SynonymGraphFilterFactory during indexing, you must follow it with FlattenGraphFilter

to squash tokens on top of one another like SynonymFilter, because the indexer can't directly

consume a graph.

FlattenGraphFilterFactory converts an incoming graph token stream, such as one from SynonymGraphFilter,

into a flat form so that all nodes form a single linear chain with no side paths. Every path through the

graph touches every node. This is necessary when indexing a graph token stream, because the index does

not save PositionLengthAttribute and so it cannot preserve the graph structure. However, at search time,

query parsers can correctly handle the graph and this token filter should NOT be used.

From: org.apache.lucene.analysis.core.FlattenGraphFilterFactory

-->

<filter class="solr.SynonymGraphFilterFactory"

synonyms="synonyms_ja.txt"

ignoreCase="true"

expand="true"

format="solr"

tokenizerFactory.mode="search"

tokenizerFactory.discardPunctuation="true"

tokenizerFactory="io.github.azagniotov.lucene.analysis.ja.sudachi.tokenizer.SudachiTokenizerFactory" />

<filter class="solr.FlattenGraphFilterFactory" />

<filter class="io.github.azagniotov.lucene.analysis.ja.sudachi.filters.SudachiBaseFormFilterFactory" />

<filter class="io.github.azagniotov.lucene.analysis.ja.sudachi.filters.SudachiPartOfSpeechStopFilterFactory" tags="lang/stoptags_ja.txt" />

<filter class="solr.CJKWidthFilterFactory" />

<!-- Removes common tokens that are typically not useful for search but that have a negative effect on ranking -->

<filter class="solr.StopFilterFactory" ignoreCase="true" words="lang/stopwords_ja.txt" />

<!-- Normalizes common katakana spelling variations by removing any last long sound character (U+30FC) -->

<filter class="io.github.azagniotov.lucene.analysis.ja.sudachi.filters.SudachiKatakanaStemFilterFactory" minimumLength="4" />

<!-- Lower-cases romaji characters -->

<filter class="solr.LowerCaseFilterFactory" />

</analyzer>

</fieldType>

The plugin needs a dictionary in order to run the tests, so the dictionary has to be downloaded first with the following command:

./gradlew configureDictionariesLocally

The above command does the following:

- Downloads the system dictionary ZIP sudachi-dictionary-<YYYYMMDD>-full.zip from AWS and saves it under <PROJECT_ROOT>/.sudachi/downloaded/ (the YYYYMMDD is defined in gradle.properties#sudachiDictionaryVersion). If the ZIP has been downloaded earlier, the downloaded file is reused.

- Unzips the content under /tmp/sudachi/system-dict/

- Renames the downloaded system_full.dic to system.dict

- Copies user-dictionary/user_lexicon.csv under /tmp/sudachi/. The CSV is used to create a user dictionary. Although the user-defined dictionary contains only two entries, it serves as an example of how to add user dictionary metadata entries.

- Builds a Sudachi user dictionary user_lexicon.dict from the CSV and places it under /tmp/sudachi/system-dict
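The unzip and rename steps above can be sketched in plain Java. This is an illustration, not the Gradle task's implementation: it assumes the archive has already been downloaded, and, since the folder layout inside the ZIP is not described here, it takes the first entry named system_full.dic wherever it sits in the archive. The class and method names are hypothetical.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;
import java.util.zip.ZipEntry;
import java.util.zip.ZipInputStream;

class SystemDictionaryInstallSketch {

    /** Archive name pattern; yyyymmdd comes from gradle.properties#sudachiDictionaryVersion. */
    static String archiveName(String yyyymmdd) {
        return "sudachi-dictionary-" + yyyymmdd + "-full.zip";
    }

    /**
     * Unzip + rename in one pass: copies the first entry named system_full.dic
     * (in any folder of the archive) to targetDir/system.dict and returns that path.
     */
    static Path installSystemDictionary(Path archive, Path targetDir) throws IOException {
        Files.createDirectories(targetDir);
        Path target = targetDir.resolve("system.dict");
        try (ZipInputStream zip = new ZipInputStream(Files.newInputStream(archive))) {
            for (ZipEntry entry = zip.getNextEntry(); entry != null; entry = zip.getNextEntry()) {
                String name = entry.getName();
                boolean isSystemDict = name.equals("system_full.dic") || name.endsWith("/system_full.dic");
                if (!entry.isDirectory() && isSystemDict) {
                    // The stream is positioned at this entry's data and ends where the entry ends.
                    Files.copy(zip, target, StandardCopyOption.REPLACE_EXISTING);
                    return target;
                }
            }
        }
        throw new IOException("system_full.dic not found in " + archive);
    }

    public static void main(String[] args) throws IOException {
        if (args.length < 1) {
            System.err.println("Usage: <YYYYMMDD> [downloadDir] [targetDir]");
            System.exit(1);
        }
        Path downloadDir = Paths.get(args.length > 1 ? args[1] : ".sudachi/downloaded");
        Path targetDir = Paths.get(args.length > 2 ? args[2] : "/tmp/sudachi/system-dict");
        Path archive = downloadDir.resolve(archiveName(args[0]));
        System.out.println("Installed " + installSystemDictionary(archive, targetDir));
    }
}
```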

At runtime, the plugin expects the system and user dictionaries to be located at /tmp/sudachi/system-dict/system.dict and /tmp/sudachi/user_lexicon.dict respectively.

However, their locations in the local file system can be overridden via the environment variables SUDACHI_SYSTEM_DICT and SUDACHI_USER_DICT, respectively.
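The lookup rule for the two dictionary files can be pictured as "use the environment variable if it is set, otherwise the default path". The Java below is a hypothetical illustration of that rule, not the plugin's code; treating an empty or blank variable as unset is an assumption of this sketch.

```java
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Map;

class SudachiDictionaryPaths {

    static final String DEFAULT_SYSTEM_DICT = "/tmp/sudachi/system-dict/system.dict";
    static final String DEFAULT_USER_DICT = "/tmp/sudachi/user_lexicon.dict";

    /** Returns the variable's value if it is set and non-blank (assumption), otherwise the default. */
    static Path resolve(Map<String, String> env, String variable, String defaultPath) {
        String value = env.get(variable);
        return Paths.get(value == null || value.trim().isEmpty() ? defaultPath : value.trim());
    }

    static Path systemDictionary(Map<String, String> env) {
        return resolve(env, "SUDACHI_SYSTEM_DICT", DEFAULT_SYSTEM_DICT);
    }

    static Path userDictionary(Map<String, String> env) {
        return resolve(env, "SUDACHI_USER_DICT", DEFAULT_USER_DICT);
    }

    public static void main(String[] args) {
        Map<String, String> env = System.getenv();
        System.out.println("System dictionary: " + systemDictionary(env));
        System.out.println("User dictionary:   " + userDictionary(env));
    }
}
```

Because the values come from the process environment, the variables have to be visible to the JVM that runs Solr, for example exported in the shell or Docker environment that starts Solr.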

- The plugin keeps Java 8 source compatibility at the moment

- At least JDK 8

The plugin uses Gradle as its build system.

For a list of all available Gradle tasks, run the following command:

./gradlew tasks

Building and packaging can be done with the following command:

./gradlew build

The project leverages the Spotless Gradle plugin and follows the palantir-java-format style guide.

To format the sources, run the following command:

./gradlew spotlessApply

Note: the Spotless Gradle plugin is also invoked implicitly when running the ./gradlew build command.

To run unit tests, run the following command:

./gradlew test

Integration here means that the test sources extend Lucene's BaseTokenStreamTestCase in order to spin up the Lucene ecosystem.

To run integration tests, run the following command:

./gradlew integrationTest

Functional here means that the test sources extend Lucene's BaseTokenStreamTestCase in order to spin up the Lucene ecosystem and create a searchable document index in the local file system for the purpose of the tests.

To run functional tests, run the following command:

./gradlew functionalTest

End-to-end here means that the test sources extend Solr's SolrTestCaseJ4 in order to spin up a Solr ecosystem using an embedded Solr server instance and create a searchable document index in the local file system for the purpose of the tests.

To run end-to-end tests, run the following command:

./gradlew endToEndTest

Smoke tests use Docker Solr images to deploy the built plugin jar into a Solr app. These tests are not automated (i.e., they do not run on CI) and should be executed manually. You can find the Dockerfiles under src/smokeTest.

Tokenization, or morphological analysis, is a fundamental technology for processing Japanese text, especially in industrial applications. Unlike English text, where words are separated by whitespace, Japanese text contains no explicit word boundaries. Recognizing words within a text is therefore not obvious, and morphological analysis (segmentation plus part-of-speech tagging) of Japanese is not trivial. Over time, various morphological analysis tools have been developed, each following a different segmentation standard.

Lucene's Kuromoji is a built-in, MeCab-style Japanese morphological analysis component that provides analysis/tokenization capabilities. By default, Kuromoji uses the MeCab "IPA" dictionary (ja) under the hood.

The Lucene Kuromoji analyzer has its roots in the Kuromoji analyzer made by Atilika, a small NLP company in Tokyo. Atilika donated the Kuromoji codebase (see LUCENE-3305) to the Apache Software Foundation, and it has been part of Apache Lucene and Apache Solr since v3.6. These days, the Atilika and Lucene Kuromoji implementations have diverged, and Atilika Kuromoji appears to be abandoned.

MeCab (ja) is an open-source morphological analysis engine developed through a joint research project between the Kyoto University Graduate School of Informatics and Nippon Telegraph and Telephone Corporation's Communication Science Research Institute.

MeCab was created by Taku Kudo around 2007. His breakthrough was to use Conditional Random Fields (CRF) to train a model and to build the word dictionary with the trained model.

A MeCab-style tokenizer builds a graph-like structure (a lattice) whose nodes are the candidate words found in the input text, and then finds the best connected path through that graph using the Viterbi algorithm.

For lattice-based tokenizers, a dictionary is a data structure that provides a list of known terms or words, as well as how likely those terms are to appear next to each other (i.e., the connection cost) according to Japanese grammar or statistical probability. During tokenization, the tokenizer uses this dictionary metadata to segment the input text. The objective of the tokenizer is to find the best tokenization, i.e., the path through the lattice with the lowest total cost (equivalently, the highest sum of scores).

To expand on the dictionary: a dictionary is not a mere "word collection"; it includes a carefully trained machine-learned language model (trained, for example, with the help of the MeCab CLI (ja)). To update the dictionary, you have to start by re-training the model on a larger or fresher lexicon.
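A toy version of the lattice and Viterbi search described above fits in a few dozen lines of Java. Everything in it is hypothetical: the five-entry dictionary, the three part-of-speech classes and all cost values are made up for illustration, standing in for what a trained dictionary would provide. Lower cost means more likely, so the best path is the one with the minimal sum of word costs and connection costs.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

class ToyLatticeTokenizer {

    // Hypothetical part-of-speech classes; 0 doubles as the BOS/EOS marker.
    static final int BOS_EOS = 0, NOUN = 1, SUFFIX = 2;

    /** A dictionary entry: surface form, part-of-speech class and word cost (lower = more likely). */
    static final class Entry {
        final String surface;
        final int pos, cost;
        Entry(String surface, int pos, int cost) { this.surface = surface; this.pos = pos; this.cost = cost; }
    }

    /** A lattice node: an entry placed in the text, with its best incoming path so far. */
    static final class Node {
        final Entry entry;
        final int end;
        long bestCost = Long.MAX_VALUE;
        Node prev;
        Node(Entry entry, int end) { this.entry = entry; this.end = end; }
    }

    // Made-up toy dictionary, for illustration only.
    static final List<Entry> DICTIONARY = Arrays.asList(
            new Entry("東", NOUN, 3000),
            new Entry("東京", NOUN, 2500),
            new Entry("東京都", NOUN, 6000),
            new Entry("京都", NOUN, 2000),
            new Entry("都", SUFFIX, 1500));

    // CONNECTION[left][right]: cost of a word of class 'right' directly following class 'left'.
    static final int[][] CONNECTION = {
        {0, 0, 5000}, // from BOS: to EOS, NOUN, SUFFIX
        {0, 500, -500}, // from NOUN
        {0, 800, 1000}, // from SUFFIX
    };

    // Viterbi step: keep the predecessor that minimizes the accumulated path cost into 'node'.
    static void relax(Node node, List<Node> leftNodes) {
        for (Node left : leftNodes) {
            long cost = left.bestCost + CONNECTION[left.entry.pos][node.entry.pos] + node.entry.cost;
            if (cost < node.bestCost) {
                node.bestCost = cost;
                node.prev = left;
            }
        }
    }

    /** Returns the minimum-cost segmentation of text over the toy lattice. */
    static List<String> tokenize(String text) {
        int n = text.length();
        // endingAt.get(i) holds the lattice nodes whose surface ends at offset i.
        List<List<Node>> endingAt = new ArrayList<>();
        for (int i = 0; i <= n; i++) {
            endingAt.add(new ArrayList<>());
        }
        Node bos = new Node(new Entry("", BOS_EOS, 0), 0);
        bos.bestCost = 0;
        endingAt.get(0).add(bos);

        // Forward pass: every node ends after its start offset, so the nodes ending at
        // 'start' are final by the time we reach it (left-to-right dynamic programming).
        for (int start = 0; start < n; start++) {
            List<Node> leftNodes = endingAt.get(start);
            for (Entry e : DICTIONARY) {
                if (!leftNodes.isEmpty() && text.startsWith(e.surface, start)) {
                    Node node = new Node(e, start + e.surface.length());
                    relax(node, leftNodes);
                    endingAt.get(node.end).add(node);
                }
            }
        }
        Node eos = new Node(new Entry("", BOS_EOS, 0), n);
        relax(eos, endingAt.get(n));
        if (eos.prev == null) {
            throw new IllegalArgumentException("No segmentation found for: " + text);
        }
        // Backward pass: follow the back-pointers from EOS to BOS.
        List<String> tokens = new ArrayList<>();
        for (Node node = eos.prev; node != bos; node = node.prev) {
            tokens.add(node.entry.surface);
        }
        Collections.reverse(tokens);
        return tokens;
    }

    public static void main(String[] args) {
        System.out.println(tokenize("東京都")); // [東京, 都] with the costs above
    }
}
```

With these made-up costs, 東京都 is segmented as 東京 + 都 (total cost 3500) rather than 東京都 (6000) or 東 + 京都 (5500), because the negative noun-to-suffix connection cost rewards that split. Real analyzers additionally handle unknown words and use far larger dictionaries and connection matrices, none of which this sketch models.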

The IPA dictionary is MeCab's so-called "standard dictionary", characterized by a more intuitive separation of morphological units than UniDic. In contrast, UniDic splits a sentence into smaller units for retrieval. UniDic is a dictionary based on "short units" (短単位, read as "tantan'i") as defined by NINJAL (National Institute for Japanese Language and Linguistics), which produces and maintains the UniDic dictionary.

From a Japanese full-text search perspective, consistency of the tokenization (regardless of the length of the text) matters more. If you are interested in this topic, please check the research paper "情報検索のための単語分割一貫性の定量的評価: Quantitative Evaluation of Tokenization Consistency for Information Retrieval in Japanese". Therefore, the UniDic dictionary is more suitable for Japanese full-text information retrieval than the IPA dictionary: it is well maintained by NINJAL researchers (to the best of my knowledge), and its shorter lexical units make it better suited to splitting words when searching (tokenization is more fine-grained).

UniDic dictionaries are produced by NLP researchers at NINJAL (National Institute for Japanese Language and Linguistics); they are based on the BCCWJ corpus and use the MeCab-style dictionary format.

The Balanced Corpus of Contemporary Written Japanese (BCCWJ) is a corpus created to capture the breadth of contemporary written Japanese; it contains extensive samples of modern Japanese texts in order to be as balanced as possible.

The data comprises ~104.3 million words, covering genres such as general books and magazines, newspapers, business reports, blogs, internet forums, textbooks, and legal documents, among others. Random samples from each genre were morphologically analyzed for the purpose of creating a dictionary.

Thus, UniDic is a lexicon (i.e., a collection of morphemes) of the BCCWJ core data (a couple of percent of the whole corpus, manually annotated with part-of-speech and other information). The approximate UniDic size is ~20-30k sentences.

As a fun supplementary read, have a look at the excellent article Differences between IPADic and UniDic by the author of the Go-based Kagome tokenizer (TL;DR: UniDic has the advantage for lexical search purposes).

All of the above makes UniDic (the dictionary the Sudachi tokenizer builds upon) the best choice of dictionary for a MeCab-style tokenizer.

The MeCab IPA dictionary (bundled with Lucene Kuromoji by default) dates back to 2007. This means that newer words and proper nouns that came into use after 2007 (e.g., the new Japanese imperial era 令和, read as "Reiwa", people's names, manga/anime/brand/place names, etc.) are likely not to be tokenized correctly, i.e., they may be under-tokenized or over-tokenized.

Although support for the current Japanese imperial era "Reiwa" (令和) has been added to Lucene Kuromoji, notably by Uchida Tomoko, many post-2007 (i.e., more modern) words have no explicit support from the Lucene Kuromoji maintainers.

Adopting a more up-to-date dictionary can directly influence search quality and the accuracy of first-phase retrieval, i.e., the Solr output. Depending on the business domain of a company whose core function is search, an outdated dictionary may cause more or fewer issues.

Therefore, Solr Lucene Analyzer Sudachi is a reasonable choice for those who want to run their Solr ecosystem on more up-to-date Japanese morphological analysis tooling.

Sudachi by Works Applications Co., Ltd. is licensed under the Apache License, Version 2.0. See https://github.com/WorksApplications/Sudachi#licenses

Sudachi logo by Works Applications Co., Ltd. is licensed under the Apache License, Version 2.0. See https://github.com/WorksApplications/Sudachi#logo

Lucene, a high-performance, full-featured text search engine library written in Java, and its logo are licensed under the Apache License, Version 2.0. See https://lucene.apache.org/core/documentation.html

The Lucene-based Solr plugin leveraging Sudachi by Alexander Zagniotov is licensed under the Apache License, Version 2.0.

Copyright (c) 2023-2024 Alexander Zagniotov

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.