Source code for a Media Intelligence Video Analysis solution that can identify specific elements in video content. This layer is the basis for identifying and indexing video analysis elements that can later be used to find specific scenes based on a set of rules.

As possible add-ons, customers can use this base layer for:

- Ad slot identification and insertion, as in Smart Ad Breaks.

- Digital Product Placement for branding solutions.

- Media content moderation.

- Media content classification.

The documentation is made available under the Creative Commons Attribution-ShareAlike 4.0 International License. See the LICENSE file.

The sample code within this documentation is made available under the MIT-0 license. See the LICENSE-SAMPLECODE file.

This repository defines the resources and instructions to deploy a CloudFormation stack in an AWS account.

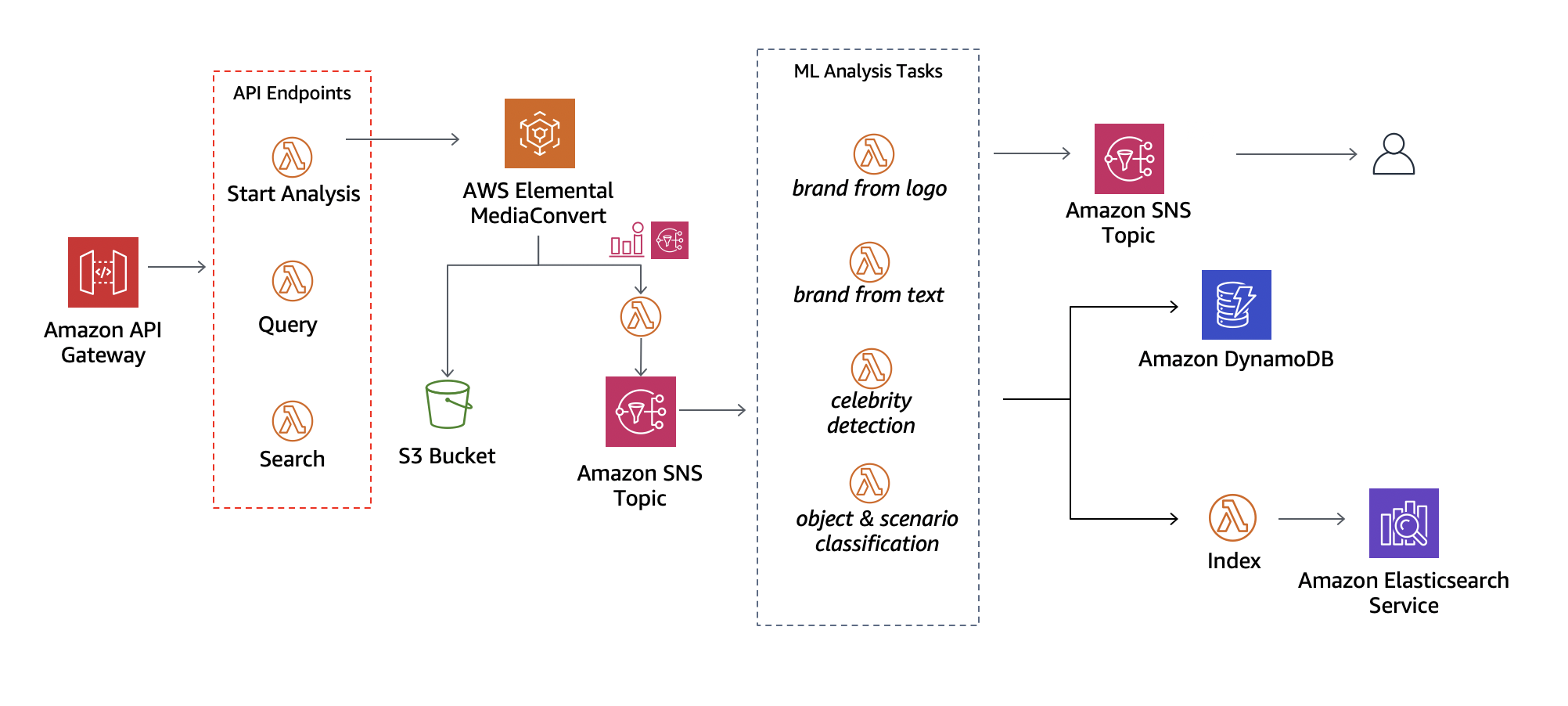

The stack will deploy the following architecture:

The application deploys a REST API with the following endpoints:

- `/analysis/start`: Starts a video analysis with the specified parameters.

- `/analysis/search`: Searches for videos that match a set of filters.

- `/analysis`: Retrieves the raw analysis results from DynamoDB.
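All three endpoints take a JSON body over POST, so any HTTP client works. The following is a minimal standard-library sketch for building those requests; `API_URL` is a hypothetical placeholder for your API Gateway invoke URL, and the optional `token` argument assumes (unverified here) that a Cognito-protected API expects the ID token in the `Authorization` header.

```python
import json
import urllib.request
from typing import Any, Dict, Optional

# Hypothetical invoke URL: replace it with the URL of your deployed API Gateway
# stage (the stage name is the StageName parameter, e.g. Prod).
API_URL = "https://example.execute-api.us-east-1.amazonaws.com/Prod"


def build_request(path: str, body: Dict[str, Any], api_url: str = API_URL,
                  token: Optional[str] = None) -> urllib.request.Request:
    """Build a JSON POST request for one of the /analysis endpoints."""
    headers = {"Content-Type": "application/json"}
    if token is not None:
        # Assumption: a Cognito-protected API expects the ID token in this header.
        headers["Authorization"] = token
    return urllib.request.Request(
        url=api_url.rstrip("/") + "/" + path.lstrip("/"),
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send(request: urllib.request.Request) -> Any:
    """Perform the HTTPS call and decode the JSON response body."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


# Example: build (but do not send) a request for /analysis/start.
start = build_request("/analysis/start", {
    "S3Key": "GranTourTheTick.mp4",
    "SampleRate": 1,
    "analysis": ["osc", "bft"],
})
```

Keeping `build_request` separate from `send` makes it easy to inspect a request before any network call is made; `send(start)` performs the actual call.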

The workflow for a video analysis goes as follows:

- The user uploads a video to a predetermined S3 bucket.

- The user calls the `/analysis/start` endpoint, starting the analysis workflow.

- Multiple analyses are performed, and the results are saved to DynamoDB and Elasticsearch.

- The user searches for a video using one of the provided filters.

- The user retrieves all the information about a specific video from DynamoDB.
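The search step uses a JSON body that groups filters under `must` and `avoid`, as documented in the testing section of this guide. As a sketch, assuming only the `scenes` and `sentiments` filters shown in that example (other filter types may exist), a hypothetical helper such as `build_search_body` can assemble the body from plain tuples and leave out any optional field you do not set:

```python
from typing import Any, Dict, Optional, Sequence, Tuple

# A filter is a (label, accuracy) pair, such as ("Sports", 50.0).
Filter = Tuple[str, float]


def build_search_body(must_scenes: Sequence[Filter] = (),
                      avoid_sentiments: Sequence[Filter] = (),
                      s3_key: Optional[str] = None,
                      sample_rate: Optional[int] = None) -> Dict[str, Any]:
    """Assemble a body for POST /analysis/search, omitting unused optional fields."""
    body: Dict[str, Any] = {}
    if must_scenes:
        body["must"] = {"scenes": [
            {"scene": scene, "accuracy": accuracy} for scene, accuracy in must_scenes
        ]}
    if avoid_sentiments:
        body["avoid"] = {"sentiments": [
            {"sentiment": sentiment, "accuracy": accuracy}
            for sentiment, accuracy in avoid_sentiments
        ]}
    if s3_key is not None:
        body["S3Key"] = s3_key
    if sample_rate is not None:
        body["SampleRate"] = sample_rate
    return body
```

For example, `build_search_body([("Sports", 50.0)], [("sadness", 89.0)], "GranTourTheTick.mp4", 1)` reproduces the search example from the testing section.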

Follow these steps in order to test this application in your AWS account:

Prerequisites

Please install the following applications on your computer if you haven't already:

For this prototype, we assume that you already have two S3 buckets. Throughout this guide, we will refer to them as follows:

- INPUT_BUCKET will hold the video files that will be analysed by the workflow.

- OUTPUT_BUCKET will receive the files exported by the MediaConvert service.

Please follow the steps in this link to create the two S3 buckets if you don't have them already.

In order to use the Celebrity Recognition Model, you will need to create a Face Collection on Rekognition and then index some faces to it.

You can create a `/faces` folder and add images of a few celebrities, organized by name. You can use it as a starter and follow the instructions in this link to index them into your face collection. Feel free to use the INPUT_BUCKET you created earlier to upload the face images.

Please take note of your face collection ID. We will use it in a later step.
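Indexing can be scripted once the face images are in S3. The sketch below only builds the keyword arguments for Rekognition's `IndexFaces` call; it assumes one celebrity per image with the file named after the person, and `index_faces_params` is a hypothetical helper. Storing the name in `ExternalImageId` lets a later face search report who matched.

```python
import os
from typing import Any, Dict

ALLOWED = set("_.-:")  # ExternalImageId accepts ASCII letters, digits, and _ . - :


def index_faces_params(collection_id: str, bucket: str, key: str) -> Dict[str, Any]:
    """Build keyword arguments for Rekognition IndexFaces for one celebrity image.

    Assumes one celebrity per image, with the file named after the person,
    e.g. faces/Jane Doe.jpg -> ExternalImageId "Jane_Doe".
    """
    name = os.path.splitext(os.path.basename(key))[0]
    # Replace any character that ExternalImageId does not accept with "_".
    external_id = "".join(
        c if (c.isascii() and c.isalnum()) or c in ALLOWED else "_" for c in name
    )
    return {
        "CollectionId": collection_id,
        "Image": {"S3Object": {"Bucket": bucket, "Name": key}},
        "ExternalImageId": external_id,
        "MaxFaces": 1,  # index at most one face per image
        "QualityFilter": "AUTO",
    }
```

With boto3 installed, you could create the collection once with `rekognition.create_collection(CollectionId="bra-celebs")`, where `rekognition = boto3.client("rekognition")`, and then call `rekognition.index_faces(**index_faces_params("bra-celebs", bucket, key))` for each uploaded image.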

In order for the brand detection algorithm to work, you will need to train a model using Rekognition Custom Labels. To do so, follow these steps:

- Create a project.

- Create a folder with some brand logos in `/brands/samples` and then upload them to the INPUT_BUCKET.

- Create a dataset using the files you uploaded to S3. Inside the `/brands` folder you will find a file called `output.manifest.mainfest`. Open that file and replace `<S3_BUCKET>` with the name of the INPUT_BUCKET you used in the previous step.

- Train your model. (This step might take a few minutes to complete.)

- Start your model.
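The placeholder replacement in the dataset step can also be scripted. A minimal sketch, assuming the manifest is a UTF-8 text file containing the literal `<S3_BUCKET>` placeholder; `fill_manifest` is a hypothetical helper, not part of this repository:

```python
from pathlib import Path


def fill_manifest(path: str, bucket: str, placeholder: str = "<S3_BUCKET>") -> int:
    """Replace every bucket placeholder in a manifest file, in place.

    Returns the number of replacements made, so a result of 0 flags a
    manifest that was already edited or uses a different placeholder.
    """
    manifest = Path(path)
    text = manifest.read_text(encoding="utf-8")
    count = text.count(placeholder)
    if count:
        manifest.write_text(text.replace(placeholder, bucket), encoding="utf-8")
    return count
```

Run from the repository root, `fill_manifest("brands/output.manifest.mainfest", "INPUT_BUCKET")` would apply the edit described above; substitute your real bucket name.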

If you want to implement the celebrity detection analysis and the brand-from-logo analysis, you need to create a Lambda folder for each of them inside the `/analysis` folder and write their main source code. You can use the following as a basis:

- Searching for a face (image) in a collection

- Analysing an image with Custom Labels

In addition to these intermediate steps, you will also need to add these analyses to the CloudFormation template. You can use the existing analyses as an example.
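The core Rekognition calls for these two analyses might look like the sketch below. It assumes each analyzed frame is already stored as an image in S3 (how the existing analyses receive frames from their SNS message is not shown here), and the result shape with `name`/`brand` and `accuracy` keys is hypothetical; match whatever the existing analyses write to DynamoDB. The Rekognition client is passed in, for example `boto3.client("rekognition")`, so the functions can also be exercised with a stub.

```python
from typing import Any, Dict, List


def search_celebrity(rekognition: Any, collection_id: str, bucket: str, key: str,
                     threshold: float = 80.0) -> List[Dict[str, Any]]:
    """Search the face collection for the largest face in an S3 image.

    SearchFacesByImage raises InvalidParameterException when it finds no face
    in the image; a production handler should catch it.
    """
    response = rekognition.search_faces_by_image(
        CollectionId=collection_id,
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        FaceMatchThreshold=threshold,
        MaxFaces=1,
    )
    return [
        {"name": match["Face"].get("ExternalImageId"), "accuracy": match["Similarity"]}
        for match in response.get("FaceMatches", [])
    ]


def detect_brands(rekognition: Any, model_arn: str, bucket: str, key: str,
                  min_confidence: float = 50.0) -> List[Dict[str, Any]]:
    """Run the Custom Labels model (it must be started first) on an S3 image."""
    response = rekognition.detect_custom_labels(
        ProjectVersionArn=model_arn,
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MinConfidence=min_confidence,
    )
    return [
        {"brand": label["Name"], "accuracy": label["Confidence"]}
        for label in response.get("CustomLabels", [])
    ]
```

The collection ID and model ARN would naturally come from the `CelebrityCollectionID` and `ModelVersionArn` deployment parameters, for example through Lambda environment variables.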

[Note] You will need to add these elements to the CloudFormation template:

- An AWS Lambda function for each analysis

- AWS Lambda permissions for each analysis

- An Amazon SNS topic subscription for each analysis
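The three elements can be sketched as a template fragment generated from Python. This assumes the template uses the SAM transform (it is deployed with `sam deploy`), that each analysis subscribes to an existing SNS topic whose logical ID you pass in (`AnalysisTopic` below is hypothetical), and that the handler name and runtime are placeholders; IAM permissions for Rekognition are left out. Translate the output into the template's own syntax, mirroring how the existing analyses are declared.

```python
import json
from typing import Any, Dict


def analysis_resources(name: str, topic_logical_id: str, code_uri: str,
                       runtime: str = "python3.12") -> Dict[str, Any]:
    """Return function, permission, and SNS subscription resources for one analysis."""
    fn = f"{name}Function"
    fn_arn = {"Fn::GetAtt": [fn, "Arn"]}
    return {
        fn: {
            "Type": "AWS::Serverless::Function",
            "Properties": {
                "CodeUri": code_uri,
                "Handler": "app.lambda_handler",  # hypothetical module/function name
                "Runtime": runtime,
            },
        },
        # Allows the SNS topic to invoke the function.
        f"{name}Permission": {
            "Type": "AWS::Lambda::Permission",
            "Properties": {
                "Action": "lambda:InvokeFunction",
                "FunctionName": fn_arn,
                "Principal": "sns.amazonaws.com",
                "SourceArn": {"Ref": topic_logical_id},  # Ref on a topic yields its ARN
            },
        },
        # Delivers the topic's messages to the function.
        f"{name}Subscription": {
            "Type": "AWS::SNS::Subscription",
            "Properties": {
                "Protocol": "lambda",
                "Endpoint": fn_arn,
                "TopicArn": {"Ref": topic_logical_id},
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(analysis_resources("CelebrityAnalysis", "AnalysisTopic",
                                        "analysis/celebrity/"), indent=2))
```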

For this step you will need a terminal. Navigate to this folder and run the following commands:

```bash
sam build --use-container
sam deploy --guided
```

The `sam deploy --guided` command will prompt you for a set of parameters. Fill them in according to your setup:

| Parameter | Description | Example |
|---|---|---|
| | E-mail address to be notified when an analysis completes | my-email@provider.com |
| S3Bucket | The name of the first bucket you created previously | INPUT_BUCKET |
| DestinationBucket | The name of the second bucket you created previously | OUTPUT_BUCKET |
| ESDomainName | A unique domain name for the Elasticsearch cluster | my-unique-es-cluster |
| CognitoDomainName | A unique domain name for the Cognito user pool | my-unique-cog-cluster |
| DynamoDBTable | A name for the DynamoDB table | aprendiendoaws-ml-mi-jobs |
| CelebrityCollectionID | The ID of the face collection you created previously | bra-celebs |
| StageName | A name for the stage that will be deployed on API Gateway | Prod |
| OSCDictionary | Keep the default value | osc_files/dictionary.json |
| ModelVersionArn | The ARN of the Rekognition Custom Labels model version you created previously | arn:aws:rekognition:us-east-1:123456789:project/my-project/version/my-project |

After filling in these values, accept the default options for the remaining prompts until the template starts deploying.
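`sam deploy --guided` offers to save your answers to a configuration file (`samconfig.toml`), so later deployments can run plain `sam deploy`. If you prefer to pass the parameters explicitly, for example from a script, a sketch like the hypothetical `parameter_overrides` helper below can build the `--parameter-overrides` argument from the values in the table. It uses the space-separated `ParameterKey=...,ParameterValue=...` form, so it rejects values containing whitespace or commas.

```python
import shlex
from typing import Dict


def parameter_overrides(params: Dict[str, str]) -> str:
    """Build the --parameter-overrides argument for `sam deploy`."""
    pairs = []
    for key, value in params.items():
        # Spaces separate pairs and commas separate key from value in this form.
        if "," in value or any(ch.isspace() for ch in value):
            raise ValueError(f"value for {key} contains whitespace or a comma")
        pairs.append(f"ParameterKey={key},ParameterValue={value}")
    # Quote the whole list so the shell passes it as a single argument.
    return "--parameter-overrides " + shlex.quote(" ".join(pairs))
```

For example, `parameter_overrides({"StageName": "Prod", "S3Bucket": "INPUT_BUCKET"})` yields an argument you can append to `sam deploy`.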

You can open the CloudFormation console to verify the created resources. To do so, choose the aprendiendoaws-ml-mi stack and select the Resources tab.
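The same check can be scripted. A minimal sketch, assuming a boto3 CloudFormation client (`boto3.client("cloudformation")`) is passed in and the stack was just created, so every healthy resource reports `CREATE_COMPLETE`; both helper names are hypothetical:

```python
from typing import Any, Dict, List


def resource_statuses(cloudformation: Any, stack_name: str) -> Dict[str, str]:
    """Map each resource's logical ID to its current CloudFormation status.

    describe_stack_resources returns at most 100 resources; use the
    list_stack_resources paginator for larger stacks.
    """
    response = cloudformation.describe_stack_resources(StackName=stack_name)
    return {
        resource["LogicalResourceId"]: resource["ResourceStatus"]
        for resource in response["StackResources"]
    }


def not_created(statuses: Dict[str, str]) -> List[str]:
    """Logical IDs whose status is anything other than CREATE_COMPLETE."""
    return sorted(rid for rid, status in statuses.items() if status != "CREATE_COMPLETE")
```

After an update deployment, healthy resources report `UPDATE_COMPLETE` instead, so adjust the comparison accordingly.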

Prerequisites

Before testing the API, make sure you have uploaded a video to the Amazon S3 input bucket you defined in the CloudFormation parameters.
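The upload can be done from the console or scripted. A minimal sketch, assuming a boto3 S3 client (`boto3.client("s3")`) is passed in and that videos are stored at the bucket root, so the S3 key is just the file name, which matches the `S3Key` examples in the API requests; `upload_video` is a hypothetical helper:

```python
import os
from typing import Any


def upload_video(s3: Any, path: str, bucket: str) -> str:
    """Upload a local video to the input bucket and return its S3 key.

    Assumption: videos live at the bucket root, so the key is the file name.
    """
    key = os.path.basename(path)
    s3.upload_file(path, bucket, key)  # boto3: upload_file(Filename, Bucket, Key)
    return key
```

The returned key is the value to send as `S3Key` when starting the analysis.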

You can use one of the videos provided in the `/showcase/examples` folder for testing. To do so, upload the desired video to the input bucket and call `/analysis/start` to start the workflow. You can use the following requests as examples:

Start Video Analysis

Once you have uploaded a video to your Amazon S3 input bucket, send an HTTPS POST request to your API with a JSON body like the following:

`POST /analysis/start`

```json
{
  "S3Key": "GranTourTheTick.mp4",
  "SampleRate": 1,
  "analysis": ["osc", "bft"]
}
```

- `S3Key`: your video file name.

- `SampleRate`: the desired sample rate.

- `analysis`: the analyses to run.

Get Video Analysis Results

If the request succeeds, you will receive a response containing the MediaConvert job ID and status. You can then use the `/analysis` endpoint to retrieve the current analysis results for that job:

`POST /analysis`

```json
{
  "S3Key": "GranTourTheTick.mp4",
  "JobId": "MyJobId",
  "analysis": "bfl"
}
```

- `S3Key`: your video file name.

- `JobId`: the MediaConvert job ID returned by the previous step.

- `analysis` (optional): the analysis whose results you want to retrieve.

Search Specific Elements in Video Analysis Results

Finally, you can search your analysis results using the /analysis/search endpoint:

`POST /analysis/search`

```json
{
  "must": {
    "scenes": [
      { "scene": "Sports", "accuracy": 50.0 }
    ]
  },
  "avoid": {
    "sentiments": [
      { "sentiment": "sadness", "accuracy": 89.0 }
    ]
  },
  "S3Key": "GranTourTheTick.mp4",
  "SampleRate": 1
}
```

- `must` (optional): the aspects you want in the video. Its `scenes` list (optional) retrieves videos that contain these scenes.

- `avoid`: the aspects you want to avoid in the video. Its `sentiments` list (optional) names the sentiments to avoid.

- `S3Key` (optional): a video to restrict the search results to.

- `SampleRate` (optional): a sample rate to restrict the search results to.

This prototype was developed by the AWS Envision Engineering Team. For questions, comments, or concerns, please reach out to:

- Technical Leader: Pedro Pimentel

- EE Engineer: Arturo Minor

The sample code; software libraries; command line tools; proofs of concept; templates; or other related technology (including any of the foregoing that are provided by our personnel) is provided to you as AWS Content under the AWS Customer Agreement, or the relevant written agreement between you and AWS (whichever applies). You should not use this AWS Content in your production accounts, or on production or other critical data. You are responsible for testing, securing, and optimizing the AWS Content, such as sample code, as appropriate for production grade use based on your specific quality control practices and standards. Deploying AWS Content may incur AWS charges for creating or using AWS chargeable resources, such as running Amazon EC2 instances or using Amazon S3 storage.

This solution collects anonymous operational metrics to help AWS improve the quality and features of the solution. Data collection is subject to the AWS Privacy Policy (https://aws.amazon.com/privacy/). To opt out of this feature, simply remove the tag(s) starting with “uksb-” or “SO” from the description(s) in any CloudFormation templates or CDK TemplateOptions.