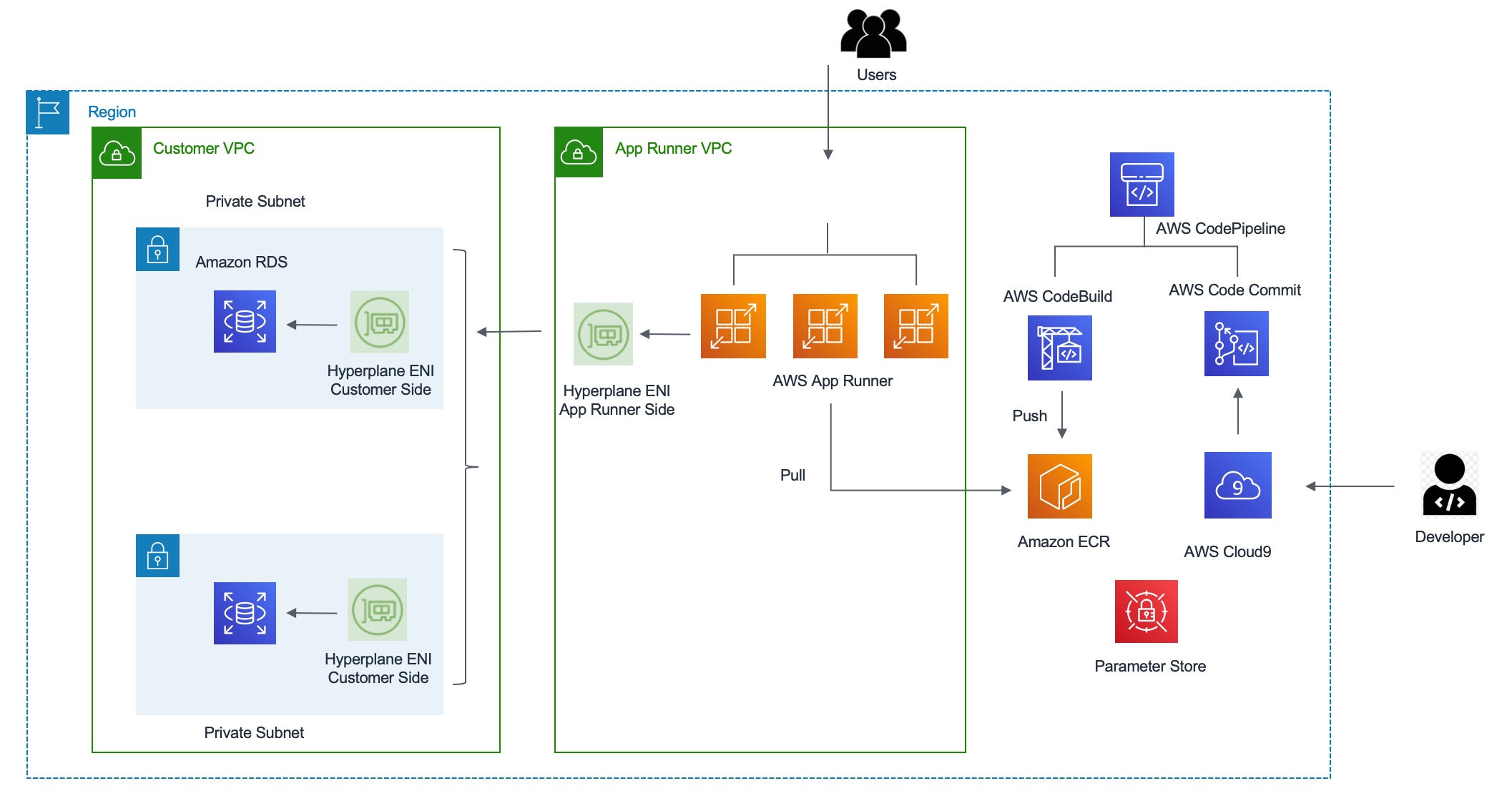

AWS launched App Runner in 2021. The service applies AWS best practices and technologies to deploying and running containerized web applications and APIs at scale, which can substantially reduce your time to market for new applications and features. App Runner runs on top of Amazon ECS and AWS Fargate.

On Feb 9, 2022, AWS announced VPC support for App Runner services. With this feature, App Runner applications can connect to private endpoints in your VPC, and you can enable a more secure and compliant environment by removing public access to these resources.

AWS App Runner services can now communicate with other applications hosted in an Amazon Virtual Private Cloud (Amazon VPC). You can connect your App Runner services to databases in Amazon Relational Database Service (Amazon RDS), to Redis caches in Amazon ElastiCache, or to message queues in Amazon MQ. You can also connect your services to your own applications in Amazon Elastic Container Service (Amazon ECS), Amazon Elastic Kubernetes Service (Amazon EKS), or Amazon Elastic Compute Cloud (Amazon EC2). As a result, web applications and APIs running on App Runner can draw on AWS data services to build production architectures.

To enable VPC access for your App Runner service, pass the VPC subnets and security groups when you create or update the service. App Runner uses this information to create network interfaces that facilitate communication with the VPC. If you pass multiple subnets, App Runner creates multiple network interfaces, one for each subnet. To learn more about VPC support for AWS App Runner, check out the blog post by Archana Srikanta, "Deep Dive on AWS App Runner VPC Networking".

This workshop is designed to enable AWS partners and their customers to build and deploy solutions on AWS using AWS App Runner and Amazon RDS. The workshop consists of a number of sections to create solutions using Continuous Integration and Continuous Delivery patterns. You will be using AWS services like AWS App Runner, Amazon RDS, AWS CodePipeline, AWS CodeCommit and AWS CodeBuild.

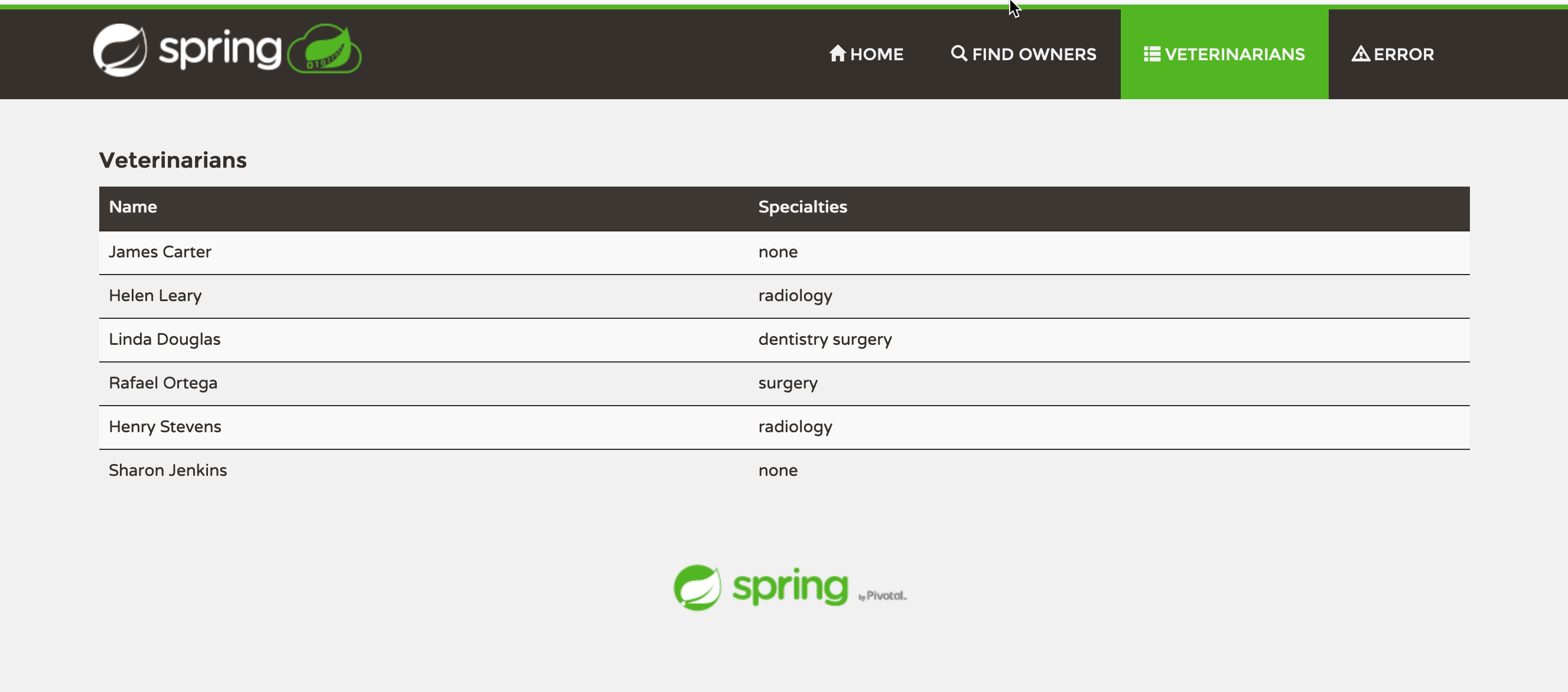

We shall build a solution using the Spring Framework. This sample solution shows how the Spring application framework can be used to build simple but powerful database-backed applications. It uses Amazon RDS (MySQL) as the backend and demonstrates Spring's core functionality. This architecture enables AWS partners and their customers to build and deploy containerized solutions on AWS using AWS App Runner, Amazon RDS and the Spring Framework. The Spring Framework is a collection of small, well-focused, loosely coupled Java frameworks that can be used independently or collectively to build industrial-strength applications of many different types.

Here are the key Terraform resources in the solution architecture.

    # Security group for the RDS instance; it is also attached to the VPC connector below
    resource "aws_security_group" "db-sg" {
      name        = "${var.stack}-db-sg"
      description = "Access to the RDS instances from the VPC"
      vpc_id      = aws_vpc.main.id

      ingress {
        from_port   = 3306
        to_port     = 3306
        protocol    = "tcp"
        cidr_blocks = ["0.0.0.0/0"] # allows MySQL from any address; consider narrowing this outside a workshop
      }

      egress {
        from_port   = 0
        to_port     = 0
        protocol    = "-1"
        cidr_blocks = ["0.0.0.0/0"]
      }

      tags = {
        Name = "${var.stack}-db-sg"
      }
    }

    # VPC connector: App Runner creates one network interface per listed subnet
    resource "aws_apprunner_vpc_connector" "connector" {
      vpc_connector_name = "petclinic_vpc_connector"
      subnets            = aws_subnet.private[*].id
      security_groups    = [aws_security_group.db-sg.id]
    }

    # App Runner service that sends its outbound traffic through the VPC connector
    resource "aws_apprunner_service" "service" {
      auto_scaling_configuration_arn = aws_apprunner_auto_scaling_configuration_version.auto-scaling-config.arn
      service_name                   = "apprunner-petclinic"

      source_configuration {
        authentication_configuration {
          access_role_arn = aws_iam_role.apprunner-service-role.arn
        }
        # image_repository block omitted here; see the services.tf excerpt later in this guide
      }

      network_configuration {
        egress_configuration {
          egress_type       = "VPC"
          vpc_connector_arn = aws_apprunner_vpc_connector.connector.arn
        }
      }
      # remaining arguments omitted in this excerpt
    }

Before you build the whole infrastructure, including your CI/CD pipeline, you will need to meet the following prerequisites.

Ensure you have access to an AWS account, and a set of credentials with Administrator permissions. Note: In a production environment we would recommend locking permissions down to the bare minimum needed to operate the pipeline.

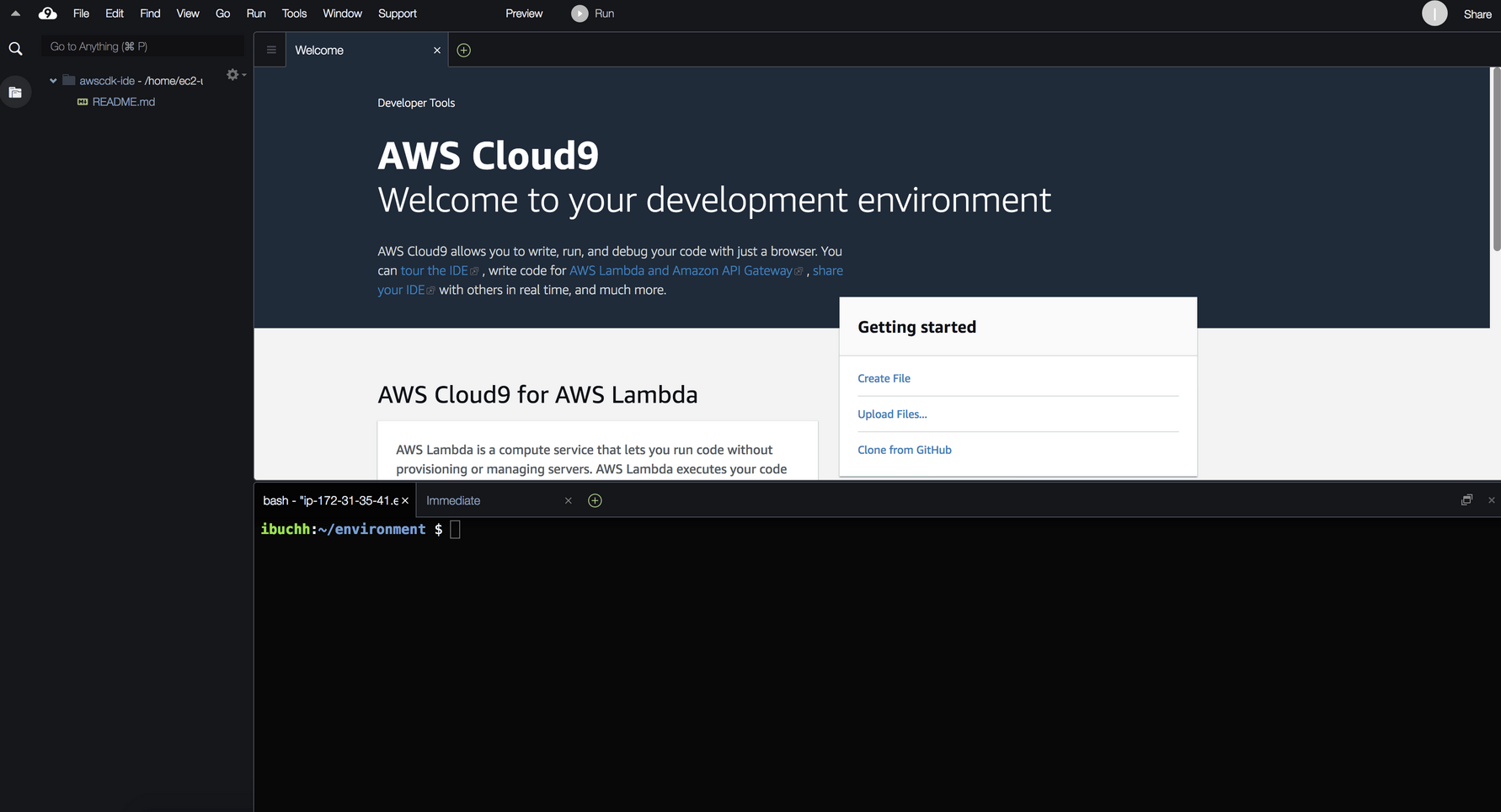

You can create a bootstrapped Cloud9 environment by deploying the cloudformation/cloud9.yaml via the CloudFormation console. This will set up a new Cloud9 instance for you to use for the workshop.

Note that it may take several minutes after the Cloud9 instance is available before it is completely configured.

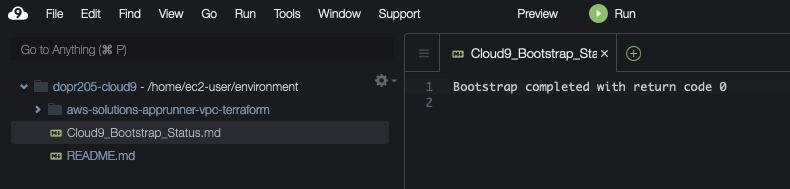

You'll know the environment is ready when you see a file called Cloud9_Bootstrap_Status.md in your Cloud9 environment folder.

Launch the AWS Cloud9 IDE. Close the Welcome tab and open a new Terminal tab.

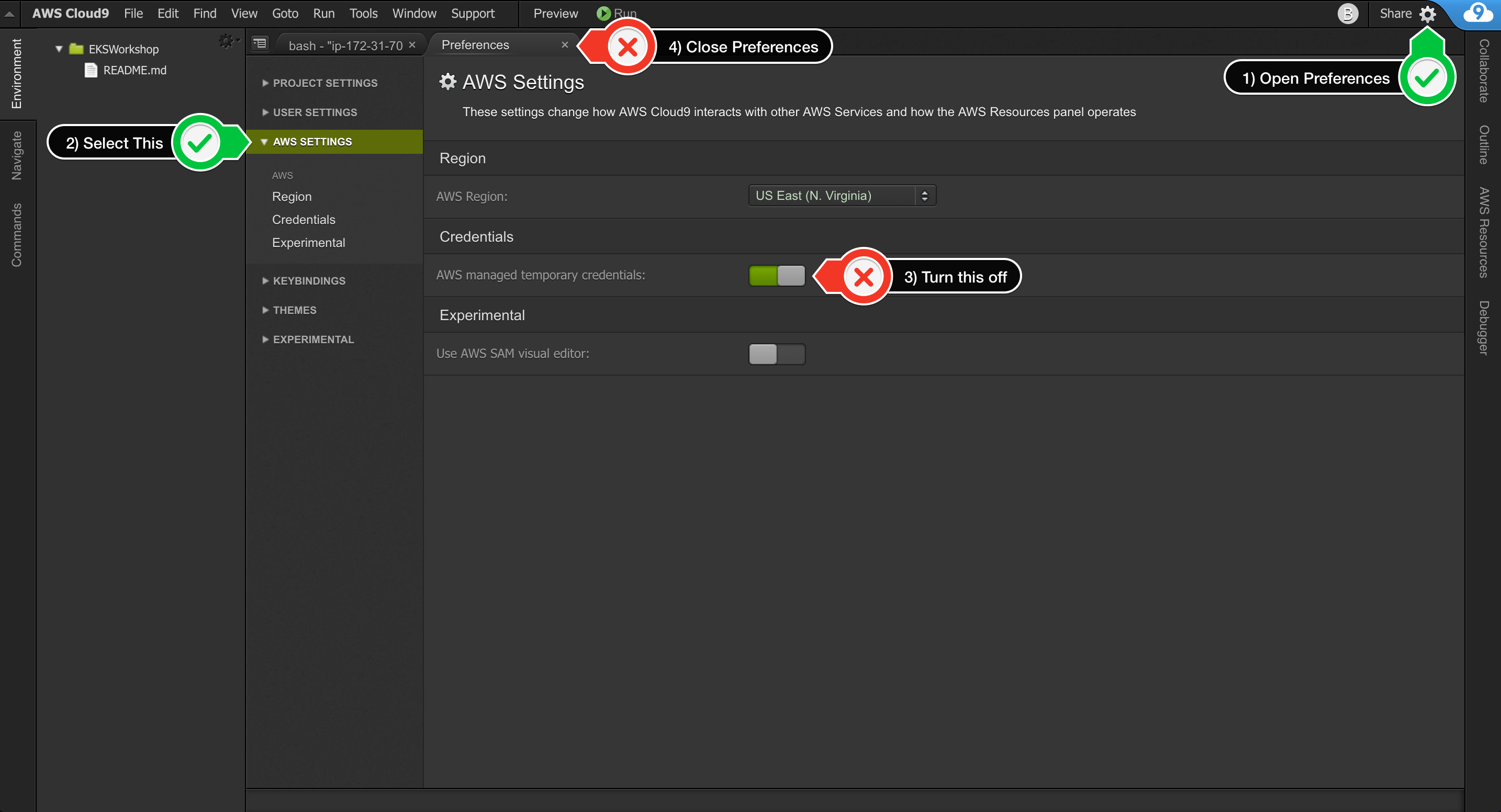

By default, Cloud9 manages temporary IAM credentials for you. Unfortunately, these are incompatible with Terraform. To get around this, you need to disable Cloud9 temporary credentials, and create and attach an IAM role for your Cloud9 instance.

- Open your Cloud9 IDE and click the gear icon (in top right corner), or click to open a new tab and choose "Open Preferences"

- Select AWS SETTINGS

- Turn off AWS managed temporary credentials

- Close the Preferences tab

- In the Cloud9 terminal pane, execute the command:

      rm -vf ${HOME}/.aws/credentials

- As a final check, use the GetCallerIdentity CLI command to validate that the Cloud9 IDE is using the correct IAM role.

      aws sts get-caller-identity --query Arn | grep AppRunnerC9Role -q && echo "IAM role valid" || echo "IAM role NOT valid"
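The grep check above matches the role name anywhere in the ARN. A slightly stricter variant is sketched below, assuming the caller ARN has the usual assumed-role or role shape. The helper name arn_has_role is hypothetical, and the account ID and session name in the example are made up.

```shell
# Hypothetical helper: succeeds when the caller ARN belongs to the given IAM role.
# $1 = ARN string, $2 = expected role name
arn_has_role() {
  case "$1" in
    *":assumed-role/$2/"*) return 0 ;;  # STS session ARN, e.g. from an instance role
    *":role/$2") return 0 ;;            # plain IAM role ARN
    *) return 1 ;;
  esac
}

# Example with a made-up account ID and session name.
if arn_has_role "arn:aws:sts::123456789012:assumed-role/AppRunnerC9Role/i-0123456789abcdef0" AppRunnerC9Role; then
  echo "IAM role valid"
else
  echo "IAM role NOT valid"
fi
```

In the Cloud9 terminal, the first argument could come from the CLI, for example: arn_has_role "$(aws sts get-caller-identity --query Arn --output text)" AppRunnerC9Role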

Run aws configure to configure your region. Leave all the other fields blank. You should have something like:

    admin:~/environment $ aws configure
    AWS Access Key ID [None]:
    AWS Secret Access Key [None]:
    Default region name [None]: us-east-1
    Default output format [None]:

Verify the Apache Maven installation:

    source ~/.bashrc
    mvn --version

Build the Petclinic application:

    cd ~/environment/aws-solutions-apprunner-vpc-terraform/petclinic
    mvn package -Dmaven.test.skip=true

The first time you execute this (or any other) command, Maven needs to download the plugins and related dependencies required to fulfill it. From a clean installation of Maven, this can take some time (almost five minutes in one run). If you execute the command again, Maven already has what it needs, so it does not download anything new and runs faster.

The compiled Java classes are placed in spring-petclinic/target/classes, which is another standard convention employed by Maven. Because the project follows the standard conventions, the POM is small and you do not have to tell Maven explicitly where your sources are or where the output should go. By following the standard Maven conventions, you can do a lot with little effort.

From the petclinic directory:

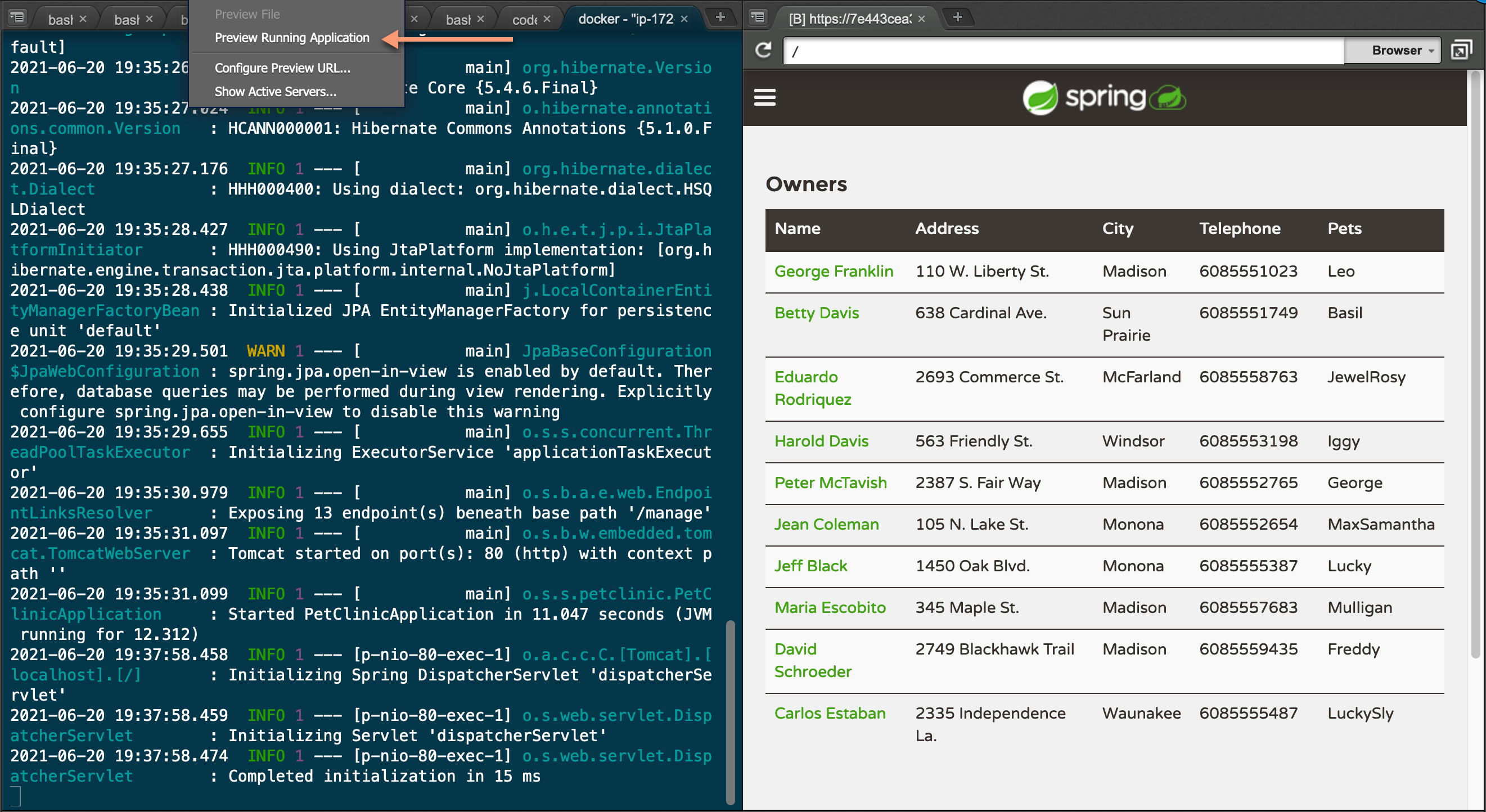

    docker build -t petclinic .

Run the following inside the Cloud9 terminal:

    docker run -it --rm -p 8080:80 --name petclinic petclinic

This runs the application on container port 80 and exposes it on host port 8080. Click Preview from the top menu and then click “Preview Running Application.” It will open a browser displaying the Spring Petclinic application.
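A Spring application can take a little while to start inside the container, so it can help to poll the port before opening the preview. The following is a minimal sketch of a generic retry helper. The name retry and its argument order are assumptions made for illustration, not part of the workshop.

```shell
# Hypothetical helper: run a command up to N times, sleeping between attempts.
# Usage: retry <attempts> <delay_seconds> <command> [args...]
retry() {
  retry_attempts="$1"
  retry_delay="$2"
  shift 2
  retry_n=1
  while [ "$retry_n" -le "$retry_attempts" ]; do
    if "$@"; then
      return 0                      # command succeeded, stop retrying
    fi
    retry_n=$((retry_n + 1))
    if [ "$retry_n" -le "$retry_attempts" ]; then
      sleep "$retry_delay"          # wait before the next attempt
    fi
  done
  return 1                          # every attempt failed
}

# Offline demonstration: the command "true" succeeds on the first attempt.
retry 3 0 true && echo "ready"
```

Because docker run -it stays in the foreground, a check against the container would go in a second terminal tab, for example: retry 30 2 curl -fsS -o /dev/null http://localhost:8080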

We shall use Terraform to build the above architecture including the AWS CodePipeline.

Note: This workshop will create chargeable resources in your account. When finished, please make sure you clean up resources as instructed at the end.

Store the database password as a SecureString parameter in AWS Systems Manager Parameter Store:

    aws ssm put-parameter --name /database/password --value mysqlpassword --type SecureString

Change to the terraform directory:

    cd ~/environment/aws-solutions-apprunner-vpc-terraform/terraform

Edit .auto.tfvars: leave aws_profile as "default", ensure aws_region matches your environment, and check that codebuild_cache_bucket_name has a value similar to apprunner-cache-<your_account_id>.

If it does not, replace the placeholder yyyymmdd-identifier with today's date and something unique to you to create a globally unique S3 bucket name. S3 bucket names can include numbers, lowercase letters and hyphens.
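Before running Terraform, the bucket name can be sanity-checked locally. The sketch below covers only the character rule stated above plus two further S3 rules (3 to 63 characters, starting and ending with a letter or digit). S3 has additional rules, such as allowing dots, that are not covered here. The function name is_valid_bucket_name is hypothetical.

```shell
# Use byte-wise character ranges so that a-z means lowercase ASCII only.
LC_ALL=C

# Hypothetical helper: succeeds when the name uses only lowercase letters,
# digits and hyphens, is 3-63 characters long, and starts and ends with a
# letter or digit.
is_valid_bucket_name() {
  name="$1"
  len=${#name}
  [ "$len" -ge 3 ] && [ "$len" -le 63 ] || return 1
  case "$name" in
    *[!a-z0-9-]*) return 1 ;;   # contains a character outside the allowed set
    -*|*-) return 1 ;;          # starts or ends with a hyphen
  esac
  return 0
}

# Example values are placeholders, not real buckets.
for candidate in "apprunner-cache-123456789012" "Apprunner_Cache"; do
  if is_valid_bucket_name "$candidate"; then
    echo "$candidate: ok"
  else
    echo "$candidate: invalid"
  fi
done
```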

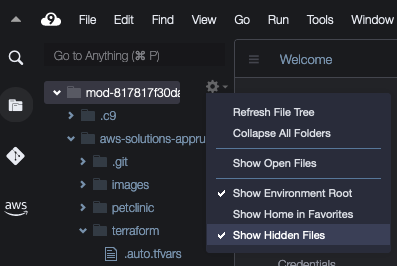

If you don't see the .auto.tfvars file, you may have to select the 'Show Hidden Files' option under the gear icon on the left-hand side of the IDE.

Initialise Terraform:

    terraform init

Build the infrastructure and pipeline using Terraform:

    terraform apply

Terraform will display an action plan. When asked whether you want to proceed with the actions, enter yes.

Wait for Terraform to complete the build before proceeding. It will take a few minutes for terraform apply to complete.

Once the build is complete, you can explore your environment using the AWS console:

- View the App Runner service using the AWS App Runner console.

- View the RDS database using the Amazon RDS console.

- View the ECR repo using the Amazon ECR console.

- View the CodeCommit repo using the AWS CodeCommit console.

- View the CodeBuild project using the AWS CodeBuild console.

- View the pipeline using the AWS CodePipeline console.

Note that your pipeline starts in a failed state. That is because there is no code to build in the CodeCommit repo! In the next step you will push the petclinic app into the repo to trigger the pipeline.

Open the App Runner service configuration file terraform/services.tf and explore the options specified in it.

    image_repository {
      image_configuration {
        port = var.container_port
        runtime_environment_variables = {
          "AWS_REGION" : "${var.aws_region}",
          "SPRING_DATASOURCE_USERNAME" : "${var.db_user}",
          "SPRING_DATASOURCE_INITIALIZATION_MODE" : var.db_initialize_mode,
          "SPRING_PROFILES_ACTIVE" : var.db_profile,
          "SPRING_DATASOURCE_URL" : "jdbc:mysql://${aws_db_instance.db.address}/${var.db_name}"
        }
      }
      image_identifier      = "${data.aws_ecr_repository.image_repo.repository_url}:latest"
      image_repository_type = "ECR"
    }

Note: In a production environment it is a best practice to use a meaningful tag instead of the :latest tag.
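One common convention for a meaningful tag is the short Git commit ID of the source that produced the image. This is an assumption for illustration, not something this workshop's pipeline is known to do. The sketch below builds such an image reference. The helper name image_ref is hypothetical, and the repository URL and commit ID are placeholders.

```shell
# Hypothetical helper: print "<repository_url>:<first 7 characters of commit id>".
image_ref() {
  repo_url="$1"
  commit_id="$2"
  short_id=$(printf '%.7s' "$commit_id")   # truncate the commit id to 7 characters
  printf '%s:%s\n' "$repo_url" "$short_id"
}

# Example with made-up values.
image_ref "123456789012.dkr.ecr.us-east-1.amazonaws.com/petclinic" \
  "0123456789abcdef0123456789abcdef01234567"
```

Inside a CodeBuild job, the commit ID is typically available in the CODEBUILD_RESOLVED_SOURCE_VERSION environment variable. On a workstation, git rev-parse HEAD gives the same value.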

You will now use git to push the petclinic application through the pipeline.

Start by switching to the petclinic directory:

    cd ~/environment/aws-solutions-apprunner-vpc-terraform/petclinic

Set up your git username and email address:

    git config --global user.name "Your Name"
    git config --global user.email you@example.com

Now create a local git repo for petclinic as follows:

    git init
    git add .
    git commit -m "Baseline commit"

An AWS CodeCommit repo was built as part of the pipeline you created. You will now set this up as a remote repo for your local petclinic repo.

For authentication purposes, you can use the AWS IAM git credential helper to generate git credentials based on your IAM role permissions. Run:

    git config --global credential.helper '!aws codecommit credential-helper $@'
    git config --global credential.UseHttpPath true

From the output of the Terraform build, we use the output source_repo_clone_url_http in our next step.

    cd ~/environment/aws-solutions-apprunner-vpc-terraform/terraform
    export tf_source_repo_clone_url_http=$(terraform output --raw source_repo_clone_url_http)

Set this up as a remote for your git repo as follows:

    cd ~/environment/aws-solutions-apprunner-vpc-terraform/petclinic
    git remote add origin $tf_source_repo_clone_url_http
    git remote -v

You should see something like:

    origin https://git-codecommit.eu-west-2.amazonaws.com/v1/repos/petclinic (fetch)
    origin https://git-codecommit.eu-west-2.amazonaws.com/v1/repos/petclinic (push)

To trigger the pipeline, push the master branch to the remote as follows:

    git push -u origin master

The pipeline will pull the code, build the Docker image, push it to ECR, and deploy it to your App Runner service. This will take a few minutes. You can monitor the pipeline in the AWS CodePipeline console.

From the output of the Terraform build, note the Terraform output apprunner_service_url.

    cd ~/environment/aws-solutions-apprunner-vpc-terraform/terraform
    export tf_apprunner_service_url=$(terraform output apprunner_service_url)
    echo $tf_apprunner_service_url

Use this in your browser to access the application.
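Without the -raw flag, terraform output prints string values wrapped in double quotes. Depending on how the output is defined, the value may also be a bare domain name without a scheme. The sketch below tidies such a value into a browsable URL. The helper name normalize_url is hypothetical, and the domain in the example is made up.

```shell
# Hypothetical helper: strip surrounding double quotes and make sure the
# value starts with a scheme, defaulting to https.
normalize_url() {
  url=$(printf '%s' "$1" | tr -d '"')
  case "$url" in
    http://*|https://*) printf '%s\n' "$url" ;;
    *) printf 'https://%s\n' "$url" ;;
  esac
}

# Example with a made-up App Runner domain, quoted as terraform output prints it.
normalize_url '"abc123xyz.us-east-1.awsapprunner.com"'
```

With the variable exported above, this could be used as: normalize_url "$tf_apprunner_service_url"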

The pipeline can now be used to deploy any changes to the application.

You can try this out by changing the welcome message as follows:

    cd ~/environment/aws-solutions-apprunner-vpc-terraform/petclinic
    vi src/main/resources/messages/messages.properties

Change the value for the welcome string, for example, to "Hello".
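If you prefer not to edit the file interactively, the same change can be scripted. The sketch below assumes the property key is named welcome and that keys and values contain no '|', '&' or backslash characters. The helper name set_property is hypothetical, and the demonstration runs against a throwaway file rather than the real messages.properties.

```shell
# Hypothetical helper: replace the line "key=..." with "key=value" in a
# .properties file. Assumes key and value contain no '|', '&' or backslash,
# because these are special in the sed expression below.
set_property() {
  prop_file="$1"
  prop_key="$2"
  prop_value="$3"
  prop_tmp="${prop_file}.tmp"
  # Write to a temporary file first, then move it into place.
  sed "s|^${prop_key}=.*|${prop_key}=${prop_value}|" "$prop_file" > "$prop_tmp" &&
    mv "$prop_tmp" "$prop_file"
}

# Demonstration on a temporary file with made-up content.
demo_file=$(mktemp)
printf 'welcome=Welcome\nrequired=is required\n' > "$demo_file"
set_property "$demo_file" welcome Hello
cat "$demo_file"
rm -f "$demo_file"
```

For the workshop this would be: set_property src/main/resources/messages/messages.properties welcome Hello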

Commit the change:

    git add .
    git commit -m "Changed welcome string"

Push the change to trigger the pipeline:

    git push origin master

As before, you can use the console to observe the progression of the change through the pipeline. Once done, verify that the application is working with the modified welcome message.

Note: If you are participating in this workshop at an AWS-hosted event using Event Engine and a provided AWS account, you do not need to complete this step. We will clean up all managed accounts afterwards on your behalf.

Make sure that you remember to tear down the stack when finished to avoid unnecessary charges. You can free up resources as follows:

    cd ~/environment/aws-solutions-apprunner-vpc-terraform/terraform
    terraform destroy

When prompted enter yes to allow the stack termination to proceed.

Once complete, note that you will have to manually empty and delete the S3 bucket used by the pipeline.
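One way to empty and delete the bucket from the terminal is the aws s3 rb command with --force. The sketch below wraps it in a dry-run helper so that by default the command is only printed. The helper names run and remove_bucket and the bucket name are placeholders, so substitute the bucket your pipeline actually used.

```shell
# Commands are only printed unless DRY_RUN is set to 0.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical helper: print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: delete all objects in a bucket, then the bucket itself.
remove_bucket() {
  run aws s3 rb "s3://$1" --force
}

# Placeholder bucket name; in dry-run mode this just prints the command.
remove_bucket "apprunner-cache-123456789012"
```

Note that --force does not remove object versions, so deleting a bucket with versioning enabled can still fail until its versions are removed.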

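To remove the ECR repository along with any images it still contains, set REPOSITORY_NAME and AWS_REGION to match your environment and run:

    aws ecr delete-repository \
      --repository-name $REPOSITORY_NAME \
      --region $AWS_REGION \
      --force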