Capyfile - highly customizable file processing pipeline

What we are pursuing here:

- Easy setup

- High customization

- Wide range of file processing operations

A file processing pipeline can be set up in two simple steps: describe it in a service definition file, then run it with `capycmd` or `capysvr`.

The service definition file describes the file processing pipeline.

The top level of the configuration file is the service object. It holds the processors, which in turn hold the operations.
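To make the nesting concrete, here is a minimal, illustrative skeleton written out with a shell heredoc. The service name `example`, the processor name `check` and the single `file_size_validate` operation are placeholders chosen for this sketch, not defaults; a real `capycmd` pipeline would usually start with an input operation such as `filesystem_input_read`, as in the first example below.

```shell
# Illustrative skeleton: service -> processors -> operations.
# "example", "check" and the 1048576-byte limit are placeholder values.
cat > service-definition.json <<'EOF'
{
  "version": "1.0",
  "name": "example",
  "processors": [
    {
      "name": "check",
      "operations": [
        {
          "name": "file_size_validate",
          "params": {
            "maxFileSize": {
              "sourceType": "value",
              "source": 1048576
            }
          }
        }
      ]
    }
  ]
}
EOF
```

Judging by `capycmd logs:archive` further down, a processor appears to be addressed as `<service>:<processor>`, so this one would presumably be `example:check`.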

Available operations are:

- `filesystem_input_read` - read the files from the filesystem
- `filesystem_input_write` - write the files to the filesystem
- `filesystem_input_remove` - remove the files from the filesystem
- `file_size_validate` - check the file size
- `file_type_validate` - check the file MIME type
- `file_time_validate` - check the file time stat
- `exiftool_metadata_cleanup` - clear file metadata if possible (requires exiftool)
- `image_convert` - convert an image to another format (requires libvips)
- `s3_upload` - upload the file to S3-compatible storage
- ... more to come

Operation parameters can be retrieved from these source types:

- `value` - the parameter value is taken from the service definition
- `env_var` - the parameter value is taken from an environment variable
- `secret` - the parameter value is taken from a secret (Docker secret)
- `file` - the parameter value is taken from a file
- `http_get` - the parameter value is taken from an HTTP GET parameter
- `http_post` - the parameter value is taken from an HTTP POST parameter
- `http_header` - the parameter value is taken from an HTTP header
- `etcd` - the parameter value is taken from the etcd key-value store
- ... more to come
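The function below restates what some of these source types mean. It is a hypothetical illustration, not Capyfile's resolver: the function name is made up, only `value`, `env_var`, `secret` and `file` are covered, and `/run/secrets/<name>` is Docker's default mount point for secrets rather than a path this README specifies.

```shell
# Hypothetical illustration of the documented sourceType meanings (not Capyfile code).
# Usage: resolve_param <sourceType> <source>
resolve_param() {
  source_type=$1
  src=$2
  case "$source_type" in
    value)   printf '%s\n' "$src" ;;      # literal taken from the service definition
    env_var) printenv "$src" ;;           # fails if the variable is not set
    secret)  cat "/run/secrets/$src" ;;   # Docker's default secrets mount point
    file)    cat "$src" ;;                # contents of the given file
    *)       echo "not covered in this sketch: $source_type" >&2; return 1 ;;
  esac
}
```

For example, after `export AWS_LOGS_BUCKET=logs`, running `resolve_param env_var AWS_LOGS_BUCKET` prints `logs`.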

Each operation accepts a `targetFiles` parameter that tells the operation which files it should process. The `targetFiles` parameter can have these values:

- `without_errors` (default) - all files passed to the operation except those that have errors
- `with_errors` - only the files passed to the operation that have errors
- `all` - all files passed to the operation
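In other words, whether an operation handles a file depends only on the `targetFiles` value and on whether the file already has errors. The function below is a hypothetical shell restatement of that rule; it does not come from Capyfile.

```shell
# Hypothetical restatement of the documented targetFiles rules (not Capyfile code).
# Usage: should_process <targetFiles> <has_errors: yes|no>
# An empty targetFiles value falls back to the default, without_errors.
should_process() {
  policy=${1:-without_errors}
  case "$policy" in
    without_errors) [ "$2" = no ] ;;   # skip files that already have errors
    with_errors)    [ "$2" = yes ] ;;  # handle only files that already have errors
    all)            return 0 ;;        # handle every file passed to the operation
    *)              echo "unknown targetFiles value: $policy" >&2; return 2 ;;
  esac
}
```

`should_process without_errors yes` fails, which matches the `SKIPPED ... "without_errors" target files policy` lines in the `capycmd` output below for files that did not pass `file_time_validate`.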

At the moment, there are two ways to run the file processing pipeline:

- via the `capycmd` command line application
- via the `capysvr` HTTP server

Below are a couple of example service definition files.

The first example is a typical service definition file for the `capycmd` command line application.

It sets up a pipeline that reads log files from the filesystem, uploads the files older than 30 days to S3-compatible storage, and then removes them.

```json
{
  "version": "1.0",
  "name": "logs",
  "processors": [
    {
      "name": "archive",
      "operations": [
        {
          "name": "filesystem_input_read",
          "params": {
            "target": {
              "sourceType": "value",
              "source": "/var/log/rotated-logs/*"
            }
          }
        },
        {
          "name": "file_time_validate",
          "params": {
            "maxMtime": {
              "sourceType": "env_var",
              "source": "MAX_LOG_FILE_AGE_RFC3339"
            }
          }
        },
        {
          "name": "s3_upload",
          "targetFiles": "without_errors",
          "params": {
            "accessKeyId": {
              "sourceType": "secret",
              "source": "aws_access_key_id"
            },
            "secretAccessKey": {
              "sourceType": "secret",
              "source": "aws_secret_access_key"
            },
            "endpoint": {
              "sourceType": "value",
              "source": "s3.amazonaws.com"
            },
            "region": {
              "sourceType": "value",
              "source": "us-east-1"
            },
            "bucket": {
              "sourceType": "env_var",
              "source": "AWS_LOGS_BUCKET"
            }
          }
        },
        {
          "name": "filesystem_input_remove",
          "targetFiles": "without_errors"
        }
      ]
    }
  ]
}
```

Once you have a service definition file, you can run the file processing pipeline.

```shell
# Provide service definition stored in the local filesystem
# via CAPYFILE_SERVICE_DEFINITION_FILE=/etc/capyfile/service-definition.json
docker run \
  --name capyfile_server \
  --mount type=bind,source=./service-definition.json,target=/etc/capyfile/service-definition.json \
  --mount type=bind,source=/var/log/rotated-logs,target=/var/log/rotated-logs \
  --env CAPYFILE_SERVICE_DEFINITION_FILE=/etc/capyfile/service-definition.json \
  --env MAX_LOG_FILE_AGE_RFC3339=$(date -d "30 days ago" -u +"%Y-%m-%dT%H:%M:%SZ") \
  --env AWS_LOGS_BUCKET=logs \
  --secret aws_access_key_id \
  --secret aws_secret_access_key \
  capyfile/capycmd:latest logs:archive
```

The command produces output like the following (the lines appear out of order because the files are processed concurrently):

```
Running logs:archive service processor...
[/var/log/rotated-logs/access-2023-08-27.log] filesystem_input_read FINISHED file read finished
[/var/log/rotated-logs/access-2023-08-28.log] filesystem_input_read FINISHED file read finished
[/var/log/rotated-logs/access-2023-09-27.log] filesystem_input_read FINISHED file read finished
[/var/log/rotated-logs/access-2023-09-28.log] filesystem_input_read FINISHED file read finished
[/var/log/rotated-logs/access-2023-09-29.log] filesystem_input_read FINISHED file read finished
[/var/log/rotated-logs/access-2023-08-28.log] file_time_validate STARTED file time validation started
[/var/log/rotated-logs/access-2023-08-28.log] file_time_validate FINISHED file time is valid
[/var/log/rotated-logs/access-2023-08-27.log] file_time_validate STARTED file time validation started
[/var/log/rotated-logs/access-2023-09-27.log] file_time_validate STARTED file time validation started
[/var/log/rotated-logs/access-2023-08-27.log] file_time_validate FINISHED file time is valid
[/var/log/rotated-logs/access-2023-09-27.log] file_time_validate FINISHED file mtime is too new
[/var/log/rotated-logs/access-2023-09-29.log] file_time_validate STARTED file time validation started
[/var/log/rotated-logs/access-2023-09-27.log] s3_upload SKIPPED skipped due to "without_errors" target files policy
[/var/log/rotated-logs/access-2023-09-28.log] file_time_validate STARTED file time validation started
[/var/log/rotated-logs/access-2023-09-29.log] file_time_validate FINISHED file mtime is too new
[/var/log/rotated-logs/access-2023-08-28.log] s3_upload STARTED S3 file upload has started
[/var/log/rotated-logs/access-2023-09-28.log] file_time_validate FINISHED file mtime is too new
[/var/log/rotated-logs/access-2023-09-29.log] s3_upload SKIPPED skipped due to "without_errors" target files policy
[/var/log/rotated-logs/access-2023-08-27.log] s3_upload STARTED S3 file upload has started
[/var/log/rotated-logs/access-2023-09-27.log] filesystem_input_remove SKIPPED skipped due to "without_errors" target files policy
[/var/log/rotated-logs/access-2023-09-29.log] filesystem_input_remove SKIPPED skipped due to "without_errors" target files policy
[/var/log/rotated-logs/access-2023-09-28.log] s3_upload SKIPPED skipped due to "without_errors" target files policy
[/var/log/rotated-logs/access-2023-09-28.log] filesystem_input_remove SKIPPED skipped due to "without_errors" target files policy
[/var/log/rotated-logs/access-2023-08-27.log] s3_upload FINISHED S3 file upload has finished
[/var/log/rotated-logs/access-2023-08-28.log] s3_upload FINISHED S3 file upload has finished
[/var/log/rotated-logs/access-2023-08-27.log] filesystem_input_remove STARTED file remove started
[/var/log/rotated-logs/access-2023-08-27.log] filesystem_input_remove FINISHED file remove finished
[/var/log/rotated-logs/access-2023-08-28.log] filesystem_input_remove STARTED file remove started
[/var/log/rotated-logs/access-2023-08-28.log] filesystem_input_remove FINISHED file remove finished
...
```
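The `MAX_LOG_FILE_AGE_RFC3339` value in the command above is computed with `date -d`, which is a GNU extension. A minimal sketch of a more portable variant, assuming BSD/macOS `date` (which uses `-v` for relative adjustments) as the fallback; the function name is hypothetical:

```shell
# Hypothetical helper: RFC 3339 UTC timestamp for "N days ago" (default 30).
# GNU date (most Linux distributions) understands -d; if that fails,
# fall back to BSD/macOS date, which uses -v for relative adjustments.
cutoff_rfc3339() {
  days=${1:-30}
  date -u -d "$days days ago" +'%Y-%m-%dT%H:%M:%SZ' 2>/dev/null ||
    date -u -v-"$days"d +'%Y-%m-%dT%H:%M:%SZ'
}

MAX_LOG_FILE_AGE_RFC3339=$(cutoff_rfc3339 30)
export MAX_LOG_FILE_AGE_RFC3339
```

With the variable exported, `--env MAX_LOG_FILE_AGE_RFC3339` (without `=value`) makes `docker run` take the value from the current shell.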

The second example sets up a pipeline for `capysvr` that accepts .pdf, .doc, and .docx files smaller than 10 MB and uploads the valid files to S3-compatible storage.

```json
{
  "version": "1.0",
  "name": "documents",
  "processors": [
    {
      "name": "upload",
      "operations": [
        {
          "name": "file_size_validate",
          "params": {
            "maxFileSize": {
              "sourceType": "value",
              "source": 10485760
            }
          }
        },
        {
          "name": "file_type_validate",
          "params": {
            "allowedMimeTypes": {
              "sourceType": "value",
              "source": [
                "application/pdf",
                "application/msword",
                "application/vnd.openxmlformats-officedocument.wordprocessingml.document"
              ]
            }
          }
        },
        {
          "name": "s3_upload",
          "params": {
            "accessKeyId": {
              "sourceType": "secret",
              "source": "aws_access_key_id"
            },
            "secretAccessKey": {
              "sourceType": "secret",
              "source": "aws_secret_access_key"
            },
            "endpoint": {
              "sourceType": "etcd",
              "source": "/services/upload/aws_endpoint"
            },
            "region": {
              "sourceType": "etcd",
              "source": "/services/upload/aws_region"
            },
            "bucket": {
              "sourceType": "env_var",
              "source": "AWS_DOCUMENTS_BUCKET"
            }
          }
        }
      ]
    }
  ]
}
```

Once you have this service definition file, you can run the file processing pipeline and make it available over HTTP.

```shell
# Provide service definition stored in the local filesystem
# via CAPYFILE_SERVICE_DEFINITION_FILE=/etc/capyfile/service-definition.json
docker run \
  --name capyfile_server \
  --mount type=bind,source=./service-definition.json,target=/etc/capyfile/service-definition.json \
  --env CAPYFILE_SERVICE_DEFINITION_FILE=/etc/capyfile/service-definition.json \
  --env AWS_DOCUMENTS_BUCKET=documents \
  --secret aws_access_key_id \
  --secret aws_secret_access_key \
  -p 8024:80 \
  capyfile/capysvr:latest

# Or you can provide the service definition stored in the remote host
# via CAPYFILE_SERVICE_DEFINITION_URL=https://example.com/service-definition.json
docker run \
  --name capyfile_server \
  --env CAPYFILE_SERVICE_DEFINITION_URL=https://example.com/service-definition.json \
  --env AWS_DOCUMENTS_BUCKET=documents \
  --secret aws_access_key_id \
  --secret aws_secret_access_key \
  -p 8024:80 \
  capyfile/capysvr:latest
```

If you want to load parameters from etcd, you can provide the etcd connection parameters via environment variables:

```
ETCD_ENDPOINTS=["etcd1:2379","etcd2:22379","etcd3:32379"]
ETCD_USERNAME=etcd_user
ETCD_PASSWORD=etcd_password
```
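Because the `ETCD_ENDPOINTS` value contains double quotes and brackets, it needs single quotes when set from a shell. The sketch below exports the three variables and, in comments, shows one way to forward them to the container and to seed the keys that the second example reads. The `etcdctl` values are placeholders, and storing plain string values under those keys is an assumption about what Capyfile expects.

```shell
# Single quotes keep the inner double quotes of the JSON array intact.
export ETCD_ENDPOINTS='["etcd1:2379","etcd2:22379","etcd3:32379"]'
export ETCD_USERNAME=etcd_user
export ETCD_PASSWORD=etcd_password

# "--env NAME" without a value forwards the variable from the current shell, e.g.:
#   docker run --env ETCD_ENDPOINTS --env ETCD_USERNAME --env ETCD_PASSWORD ... capyfile/capysvr:latest
#
# Placeholder values for the keys read by the second example (etcdctl v3 syntax):
#   etcdctl put /services/upload/aws_endpoint s3.amazonaws.com
#   etcdctl put /services/upload/aws_region us-east-1
```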

Now it is ready to accept and process the files.
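Optionally, you can reproduce the 10 MB rule on the client before uploading. This is a hypothetical convenience, not part of Capyfile: the server still runs `file_size_validate`, `maxFileSize` is assumed to be a byte count (the sizes in these examples are in bytes), and "10 MB" is read as 10 × 1024 × 1024 bytes. Capyfile's exact boundary handling may differ from this sketch.

```shell
# Hypothetical client-side pre-check; Capyfile still validates the size server-side.
# 10 MB written in bytes, using binary megabytes (10 * 1024 * 1024 = 10485760).
MAX_FILE_SIZE=$((10 * 1024 * 1024))

# Succeeds (exit 0) if the file exists and is not larger than the limit.
# Usage: fits_size_limit <file> [max_bytes]
fits_size_limit() {
  [ -f "$1" ] || return 2
  size=$(wc -c < "$1" | tr -d ' ')   # some wc implementations pad the count with spaces
  [ "$size" -le "${2:-$MAX_FILE_SIZE}" ]
}

# Example:
#   fits_size_limit "$HOME/Documents/very-big-document.pdf" || echo "too big, skipping"
```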

```shell
# upload and process single file
curl -F "file1=@$HOME/Documents/document.pdf" http://localhost:8024/upload/document

# upload and process request body
curl --data-binary "@$HOME/Documents/document.pdf" http://localhost:8024/upload/document

# upload and process multiple files
curl \
  -F "file1=@$HOME/Documents/document.pdf" \
  -F "file2=@$HOME/Documents/document.docx" \
  -F "file3=@$HOME/Documents/very-big-document.pdf" \
  -F "file4=@$HOME/Documents/program.run" \
  http://localhost:8024/upload/document
```

The service returns a JSON response in this format (the example is for the multiple-file upload above):

{

"status": "PARTIAL",

"code": "PARTIAL",

"message": "successfully uploaded 2 of 4 files",

"files": [

{

"url": "https://documents.storage.example.com/documents/abcdKDNJW_DDWse.pdf",

"filename": "abcdKDNJW_DDWse.pdf",

"originalFilename": "document.pdf",

"mime": "application/pdf",

"size": 5892728,

"status": "SUCCESS",

"code": "FILE_SUCCESSFULLY_UPLOADED",

"message": "file successfully uploaded"

},

{

"url": "https://documents.storage.example.com/documents/abcdKDNJW_DDWsd.docx",

"filename": "abcdKDNJW_DDWsd.docx",

"originalFilename": "document.docx",

"mime": "application/vnd.openxmlformats-officedocument.wordprocessingml.document",

"size": 3145728,

"status": "SUCCESS",

"code": "FILE_SUCCESSFULLY_UPLOADED",

"message": "file successfully uploaded"

}

],

"errors": [

{

"originalFilename": "very-big-document.pdf",

"status": "ERROR",

"code": "FILE_IS_TOO_BIG",

"message": "file size can not be greater than 10 MB"

},

{

"originalFilename": "program.run",

"status": "ERROR",

"code": "FILE_MIME_TYPE_IS_NOT_ALLOWED",

"message": "file MIME type \"application/x-makeself\" is not allowed"

}

],

"meta": {

"totalUploads": 4,

"successfulUploads": 2,

"failedUploads": 2

}

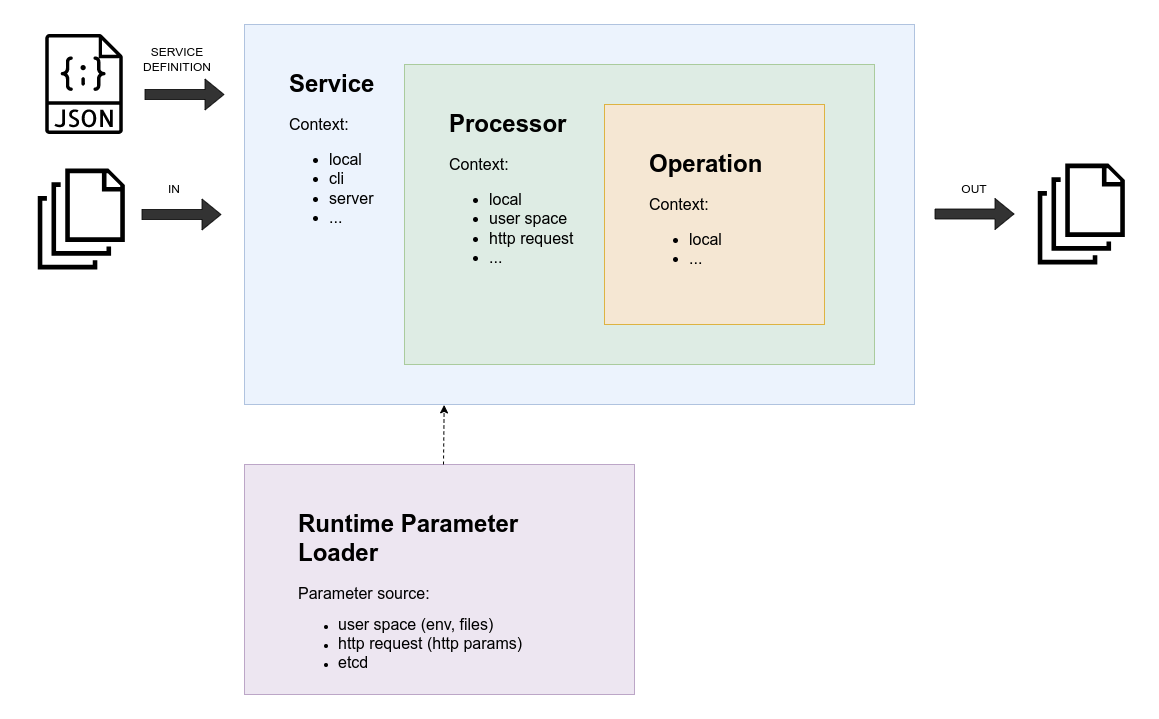

}On paper, it supposed to look like this:

There are three core concepts:

- Service. Top layer that has access to the widest context.

- Processor. Responsible for configuring the operations and building the operation pipeline.

- Operation. Does the actual file processing. It can read, write, validate, modify, or store files.

What we have so far is a basic dev environment running on Docker.

For development purposes, there is a docker-compose file with all the necessary dependencies (see `docker-compose.dev.yml`).

There are also two service definitions, one for `capysvr` and one for `capycmd`:

- `service-definition.capysvr.dev.json` - prepared service definition for `capysvr`
- `service-definition.capycmd.dev.json` - prepared service definition for `capycmd`

Finally, the `dev.sh` script helps build, run, and stop the services.

What is available for capysvr:

```shell
# Build capysvr from the source code and run it with all necessary dependencies
./dev.sh start capysvr
# now capysvr is accessible on http://localhost:8024 or http://capyfile.local:8024
# it uses the `service-definition.capysvr.dev.json` service definition file

# If you have made some changes in the source code, you can rebuild capysvr
./dev.sh rebuild capysvr

# Stop capysvr
./dev.sh stop capysvr
```

What is available for capycmd:

```shell
# Build capycmd from the source code and run it with all necessary dependencies
# This will open the container's shell where you have access to the ./capycmd command
./dev.sh start capycmd
~$ ./capycmd logs:archive

# If you have made some changes in the source code, you can rebuild capycmd
./dev.sh rebuild capycmd

# Stop capycmd
./dev.sh stop capycmd
```