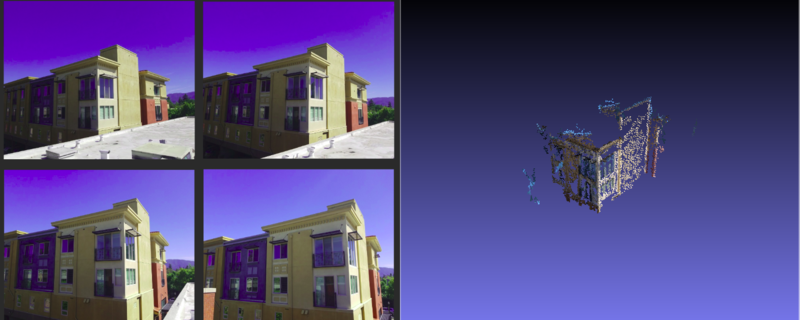

This is an early prototype project to develop a Structure from Motion Pipeline.

This package uses the following 3rd party packages to run:

You will need to go and install GTSAM on your own machine. I used this tag when I did my testing.

For PMVS2, I have included it in this local repo so It can make local calls to it to build the actual pointcloud *.ply file.

Once you have installed GTSAM, you will need to navigate to the root of this directory and run:

mkdir build

cd build

cmake ..

make

You should see the cSfM executable now in the build directory.

To use cSfM, you will need to have a directory containing several images of your item of interest. You will also need to pass in the FULL path to a textfile that contains the name of the images in the same folder you wish to build the pointcloud of, the focal length of your image (in pixels), the center of your image in x and y.

If you are using a claibrated camera, this should be easy to find as elements in the resultant distortion matrix.

However, if you are using images from a smart phone/uncalibrated camera, you can estimate these parameters.

To calculate the focal length of your image, I have written a simple helper Python script. The way you use that is as follows:

python calculate_focal_length.py [pxW] [f_mm] [fov]

where:

pxWis the width of the images in pixels.f_mmis the focal length of the camera that was used to capture the image in [mm].fovis the camera's Field of View in degrees.

To get this information, one can look at the EXIF metadata that their images should have.

This should print out a relatively accurate estimate of the focal length of your images in pixel space.

For a rough estimate of the center of the image in x and y, you can use this rough estimate:

- cx = PixelWidth/2

- cy = PixelHeight/2

This should be accruate enough, as the algorithm will attempt to calculate a more accurate camera matrix as it triangulates points in space.

This is still a first attempt at SfM, this is a very complex problem that still has a lot of research conducted on how to use different cameras and different improve results. That being said, here are some thoughts and tips to get good results:

-

Scale down your images. This pipeline does not use a GPU based method to calculate poses in space via triangulation, so it may take a long time.

-

Try and use images that have large overlaps in them. Right now the pipeline matches keypoints across all the images, so if there is not enough overlap, it will struggle to recover camera poses.

-

Use a few images at first to test things out. Do not overestimate how computationally expensive it becomes as the size of images and volume of the image stack increases.

I hope to continue to develop this further by adding the following improvements over time as a side project:

-

Move away from AKAZE for feature matching and towards optical flow. While working on this project I learned that although photojournalism uses unsorted SfM, most of my image sets were image sets from walking around with a camera to different points in space. This lends itself more to the Visual Odometry aspect of SfM as well as the applications of robotics and more traditional computer vision. Integrate this pipeline into ROS and use it with Visual Odometry.

-

In the past I have started to write a Monocular Visual Odometry ROS package. With what I learned here, I would really like to go and see how I can improve my error prone implementation there with what I learned. See how I can integrate VO and SfM together.

-

Look further into OpenMVG, OpenMVS, and PCL. One issue with PMVS is that it produces a singular static ply file. If I wanted to integrate this into ROS for dynamic pointcloud pointcloud generation, I will need to figure out how to build up pointcloud data structures and publish them for the data types that ROS can handle.

- http://www.cse.psu.edu/~rtc12/CSE486/lecture19.pdf

- https://docs.opencv.org/3.0-beta/modules/calib3d/doc/camera_calibration_and_3d_reconstruction.html

- https://docs.opencv.org/3.1.0/da/de9/tutorial_py_epipolar_geometry.html

- http://nghiaho.com/?p=2379

- https://www.di.ens.fr/pmvs/pmvs-1/index.html

- http://www.cs.cornell.edu/~snavely/bundler/

- https://robotics.stackexchange.com/questions/14456/determine-the-relative-camera-pose-given-two-rgb-camera-frames-in-opencv-python

- https://www.cc.gatech.edu/grads/j/jdong37/files/gtsam-tutorial.pdf