- The bigger picture 🌌

- Dataset 🧠

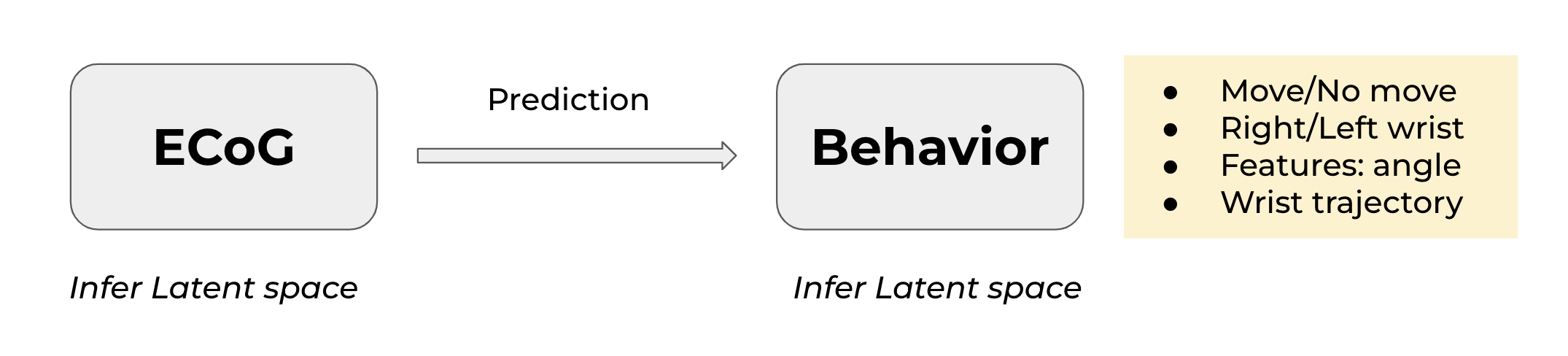

- Step 1: Decoding movements

- Step 2: Build low-dimensional representations

- Step 3: Decode low-dimensional representations of behavior from ECoG data

A number of authors have recently suggested that we should look at the mesoscale dynamics of the brain to disentangle specific movements (e.g., Natraj et al., 2022).

Can movements be encoded in the brain via low-dimensional latent space variables?

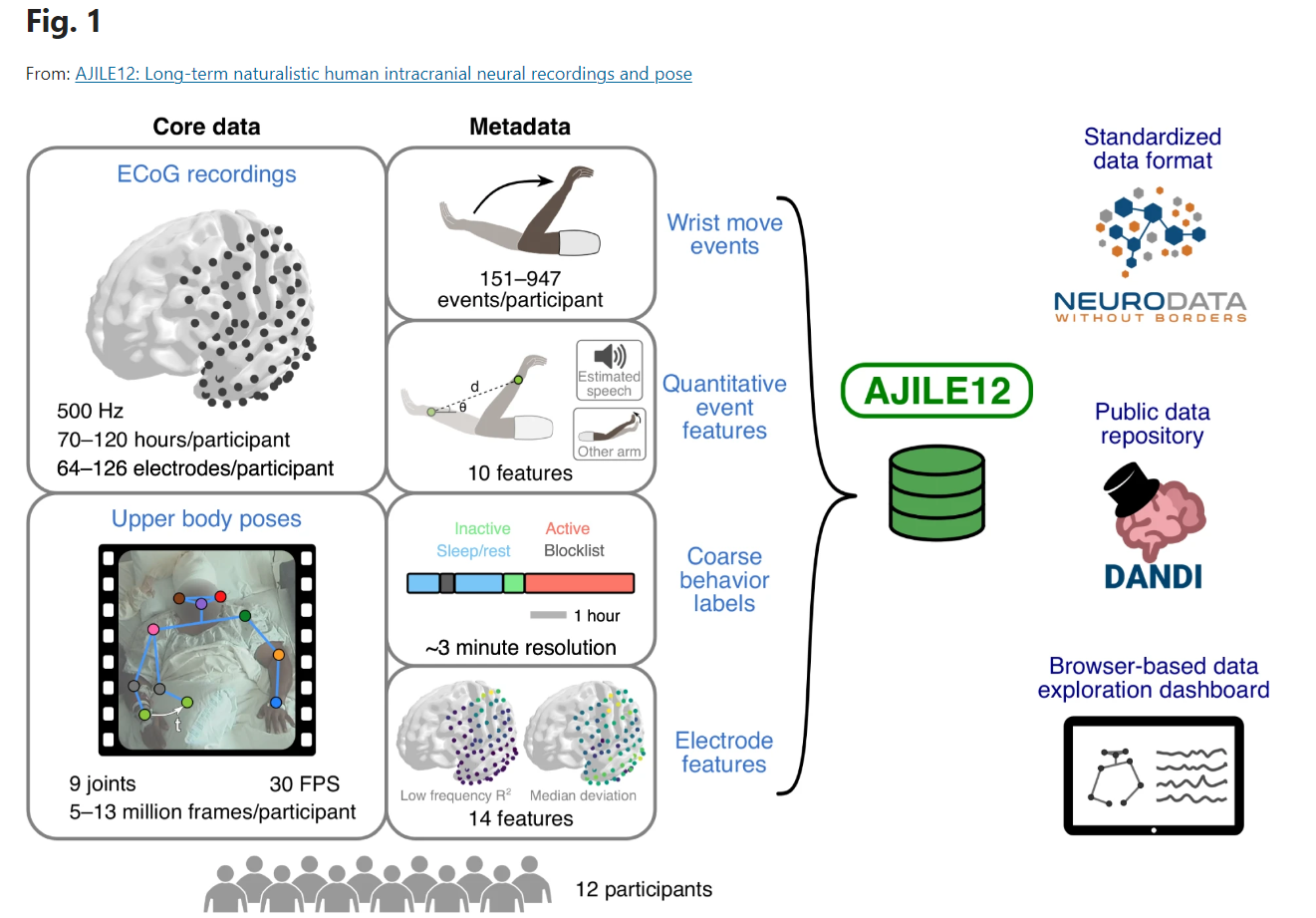

Datasets are available here. Supporting paper: link

Code to view and to open the data is available here.

Figure taken from: Peterson, S.M., Singh, S.H., Dichter, B. et al. AJILE12: Long-term naturalistic human intracranial neural recordings and pose. Sci Data 9, 184 (2022)

Using the dataset from this paper, it is possible to solve a movement/no-movement classification task. The corresponding Jupyter notebook can be found at ./models/Baseline-Classification-Model. We achieved over 95% accuracy with spectral features, which suggests that they are important for decoding movements.
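To make the idea of spectral features concrete, here is a minimal sketch of a movement/no-movement classifier. It is not the notebook's code: it assumes log band power computed with an FFT and a nearest-centroid classifier, and it runs on synthetic noise in which "movement" epochs carry an extra 90 Hz component. The function name `band_power_features`, the band edges, the sampling rate, and all array sizes are hypothetical.

```python
import numpy as np


def band_power_features(epochs, sfreq, bands):
    """Log band power per channel and band.

    epochs: array of shape (n_epochs, n_channels, n_times)
    returns: array of shape (n_epochs, n_channels * n_bands)
    """
    n_times = epochs.shape[-1]
    freqs = np.fft.rfftfreq(n_times, d=1.0 / sfreq)
    power = np.abs(np.fft.rfft(epochs, axis=-1)) ** 2
    feats = []
    for low, high in bands:
        mask = (freqs >= low) & (freqs < high)
        # Average power inside the band, then log-compress it.
        feats.append(np.log(power[..., mask].mean(axis=-1) + 1e-12))
    return np.concatenate(feats, axis=-1)


# Synthetic stand-in for ECoG epochs (NOT real data).
rng = np.random.default_rng(0)
sfreq, n_epochs, n_channels, n_times = 500.0, 200, 8, 500
labels = rng.integers(0, 2, size=n_epochs)  # 0 = rest, 1 = movement
epochs = rng.standard_normal((n_epochs, n_channels, n_times))
t = np.arange(n_times) / sfreq
# Toy assumption: movement epochs contain an additional 90 Hz oscillation.
epochs[labels == 1] += 0.5 * np.sin(2 * np.pi * 90.0 * t)

X = band_power_features(epochs, sfreq, bands=[(8.0, 30.0), (70.0, 110.0)])

# Hold out the last 50 epochs and standardize with training statistics only.
n_train = 150
mu, sd = X[:n_train].mean(axis=0), X[:n_train].std(axis=0)
X_train, X_test = (X[:n_train] - mu) / sd, (X[n_train:] - mu) / sd
y_train, y_test = labels[:n_train], labels[n_train:]

# Nearest-centroid classifier: assign each test epoch to the closest class mean.
centroids = np.stack([X_train[y_train == c].mean(axis=0) for c in (0, 1)])
dists = np.linalg.norm(X_test[:, None, :] - centroids[None, :, :], axis=-1)
y_pred = dists.argmin(axis=1)
accuracy = (y_pred == y_test).mean()
print(f"held-out accuracy on synthetic data: {accuracy:.2f}")
```

The accuracy printed here says nothing about real ECoG data; the sketch only illustrates the feature-then-classify pipeline.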

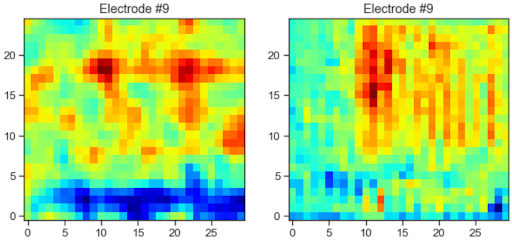

Is it possible to infer a low-dimensional space describing spectral dynamics in the brain? 🤔

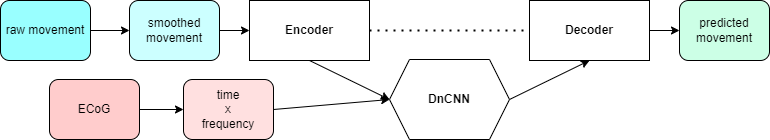

We tried to build an encoder-decoder network on time-frequency features, so that the encoder could later be reused in any ECoG-to-behavior task. However, a single training sample has shape (n_channels, n_freqs, n_times), which yields a very high-dimensional input vector. This figure gives some hope that a decent time-frequency autoencoder can be built, but the model struggles with generalization and even with faithfully reconstructing the input matrices:

The PCA, fully connected, and deep convolutional autoencoders for compressing high-dimensional time-frequency data can be found at \models\Handmade-TF-Autoencoder\.
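As a rough illustration of the dimensionality problem and of the PCA baseline, the sketch below flattens time-frequency samples of shape (n_channels, n_freqs, n_times) and compresses them with PCA computed through an SVD. The array sizes and the number of components are hypothetical, and random noise stands in for real spectrograms, so the explained variance is not meaningful in itself.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes; random noise stands in for real time-frequency data.
n_samples, n_channels, n_freqs, n_times = 64, 16, 20, 50
tf = rng.standard_normal((n_samples, n_channels, n_freqs, n_times))

# Each sample becomes one long vector: 16 * 20 * 50 = 16000 inputs.
X = tf.reshape(n_samples, -1)
input_dim = X.shape[1]

# PCA via SVD of the centered data matrix.
mean = X.mean(axis=0, keepdims=True)
U, S, Vt = np.linalg.svd(X - mean, full_matrices=False)

n_components = 8  # size of the low-dimensional code (an assumption)
components = Vt[:n_components]

Z = (X - mean) @ components.T   # encode: (n_samples, n_components)
X_hat = Z @ components + mean   # decode: back to (n_samples, input_dim)

explained = (S[:n_components] ** 2).sum() / (S ** 2).sum()
rel_err = np.linalg.norm(X - X_hat) / np.linalg.norm(X - mean)
print(f"input dimension: {input_dim}")
print(f"explained variance: {explained:.3f}, relative error: {rel_err:.3f}")
```

With far fewer samples than input dimensions, as here, any model with many parameters is easy to overfit, which is one plausible reason generalization is hard in this setting.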

Further development builds on the hypothesis that the deep convolutional autoencoder fails to learn because of vanishing gradients and underfitting.

One of our ideas for the project was to predict wrist movement from ECoG time-frequency spectrograms. The authors of the paper previously used the HTNet architecture for a similar task and implemented it in Keras. We reproduced HTNet, one of the ConvNets developed by the creators of the dataset, and obtained high performance in binary classification according to several metrics. The task was somewhat difficult because we had to port the original Keras code to PyTorch, which lacks dedicated layers for depthwise and separable convolutions. See code here.
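One common way to emulate Keras-style depthwise and separable convolutions in PyTorch is the `groups` argument of `nn.Conv2d`. The sketch below shows that approach; it is not the project's actual port, and the class names `DepthwiseConv2d` and `SeparableConv2d`, the tensor layout, and the kernel sizes are assumptions chosen for illustration.

```python
import torch
from torch import nn


class DepthwiseConv2d(nn.Module):
    """Depthwise convolution: every input channel gets its own filters."""

    def __init__(self, in_channels, kernel_size, depth_multiplier=1, bias=False):
        super().__init__()
        # groups=in_channels makes each filter see a single input channel.
        self.conv = nn.Conv2d(
            in_channels,
            in_channels * depth_multiplier,
            kernel_size,
            groups=in_channels,
            bias=bias,
        )

    def forward(self, x):
        return self.conv(x)


class SeparableConv2d(nn.Module):
    """Separable convolution: depthwise step followed by a 1x1 pointwise mix."""

    def __init__(self, in_channels, out_channels, kernel_size, padding=0, bias=False):
        super().__init__()
        self.depthwise = nn.Conv2d(
            in_channels, in_channels, kernel_size,
            padding=padding, groups=in_channels, bias=False,
        )
        self.pointwise = nn.Conv2d(in_channels, out_channels, kernel_size=1, bias=bias)

    def forward(self, x):
        return self.pointwise(self.depthwise(x))


# Hypothetical input layout: (batch, temporal filters, electrodes, time).
x = torch.randn(2, 8, 16, 100)

# Spatial filtering across all 16 electrodes, two filters per input channel.
depthwise = DepthwiseConv2d(8, kernel_size=(16, 1), depth_multiplier=2)
h = depthwise(x)

# Temporal separable convolution that keeps the time axis length.
separable = SeparableConv2d(16, 16, kernel_size=(1, 15), padding=(0, 7))
y = separable(h)

print(h.shape, y.shape)
```

Splitting the convolution this way keeps the parameter count small compared with a full convolution over the same kernel, which is the usual motivation for these layers in compact EEG and ECoG networks.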

The authors of the paper extracted so-called "reach" events and their corresponding features, such as displacement, duration, polynomial approximation, and angle. We hypothesised that the reach angle could be predicted from time-frequency features. However, this was not the case. It is possible that noise from the motion-capture system and the lack of 3D reconstruction of movements made it impossible to extract reasonable features. We doubt that the behavioral time series are meaningful without normalization and smoothing.

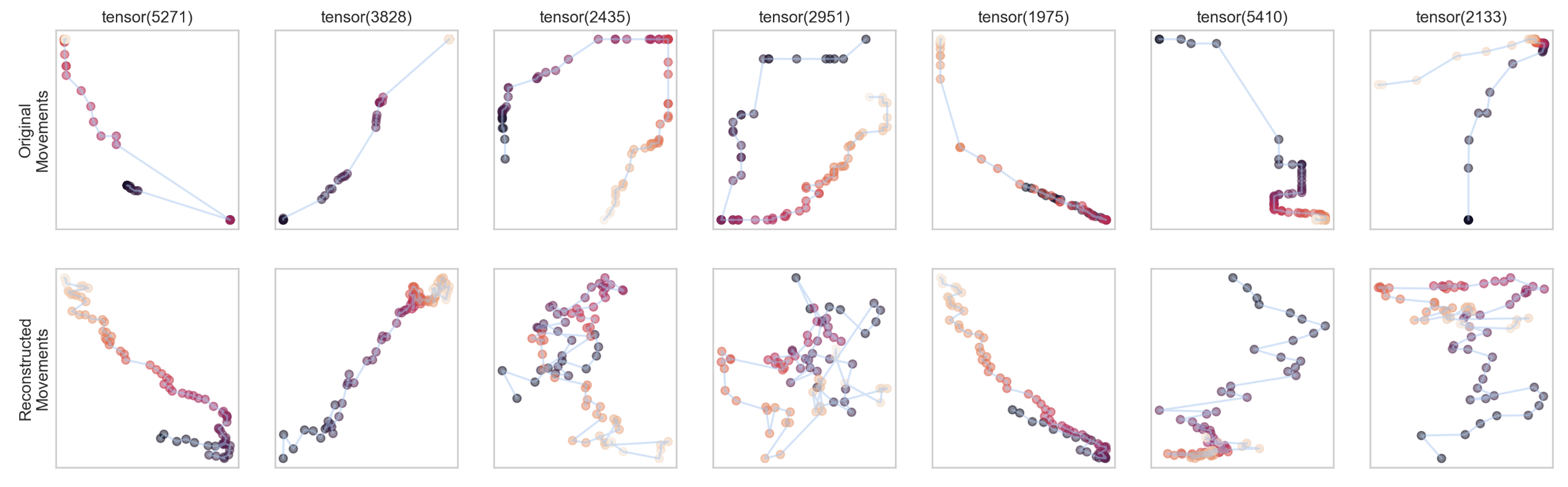

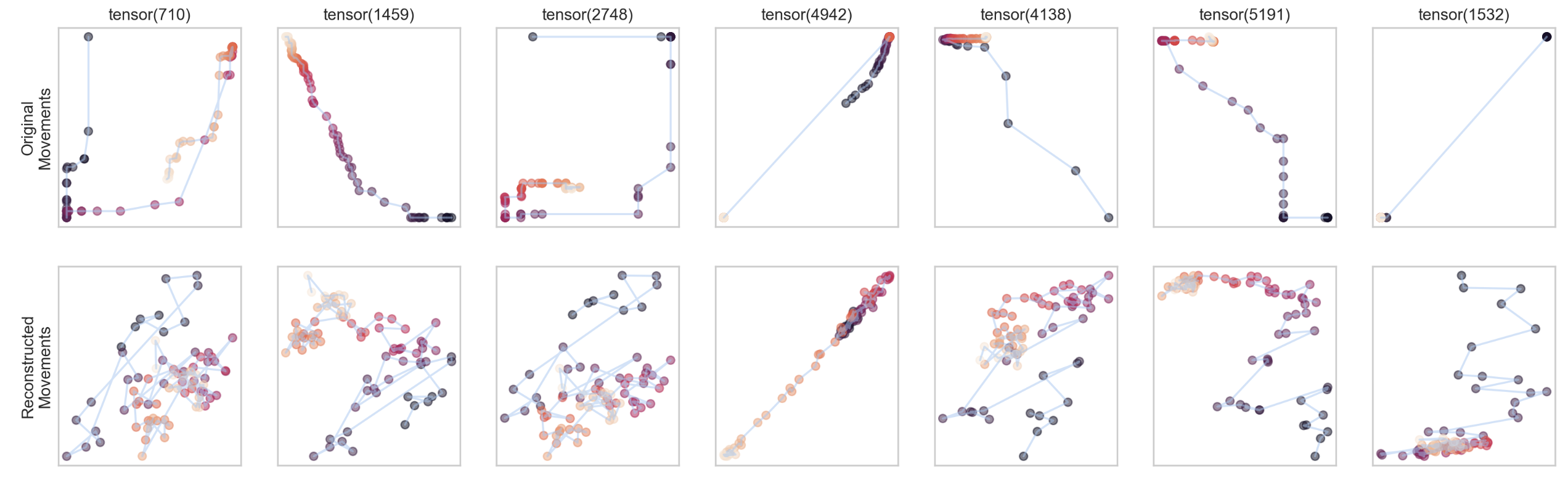

The goal of this step of our analysis was to compress movement trajectories into a low-dimensional space. At first glance, this seemed to be a trivial task, so we quickly built an autoencoder and fed it the raw movement coordinates. However, it did not work for all movements. See code here.

Movement reconstruction using a linear autoencoder with a latent space of dimension 10.

Movement reconstruction using a linear autoencoder with a latent space of dimension 4.
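For reference, a linear autoencoder of the kind mentioned in these captions can be sketched in PyTorch as follows. This is an illustrative sketch, not the project's code: the trajectories are synthetic sums of sine waves, and the class name `LinearAutoencoder`, the trajectory length, the learning rate, and the number of training steps are all assumptions.

```python
import math

import torch
from torch import nn

torch.manual_seed(0)


class LinearAutoencoder(nn.Module):
    """Single linear encoder and single linear decoder, no nonlinearity."""

    def __init__(self, input_dim, latent_dim):
        super().__init__()
        self.encoder = nn.Linear(input_dim, latent_dim)
        self.decoder = nn.Linear(latent_dim, input_dim)

    def forward(self, x):
        return self.decoder(self.encoder(x))


def train(model, data, n_steps=500, lr=1e-2):
    """Full-batch training on mean squared reconstruction error."""
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    loss_fn = nn.MSELoss()
    losses = []
    for _ in range(n_steps):
        optimizer.zero_grad()
        loss = loss_fn(model(data), data)
        loss.backward()
        optimizer.step()
        losses.append(loss.item())
    return losses


# Synthetic stand-in for movement trajectories (NOT real pose data):
# each trajectory is a random mix of 6 sine waves per coordinate (x, y).
n_traj, n_times, n_basis = 256, 75, 6
t = torch.linspace(0.0, 1.0, n_times)
basis = torch.stack([torch.sin(math.pi * k * t) for k in range(1, n_basis + 1)])
coeffs = torch.randn(n_traj, n_basis, 2)
trajectories = torch.einsum("nkc,kt->ntc", coeffs, basis)  # (n_traj, n_times, 2)

# Flatten to vectors and normalize to zero mean and unit variance overall.
data = trajectories.reshape(n_traj, -1)
data = (data - data.mean()) / data.std()

losses_by_dim = {}
for latent_dim in (10, 4):
    model = LinearAutoencoder(data.shape[1], latent_dim)
    losses_by_dim[latent_dim] = train(model, data)
    print(f"latent dim {latent_dim}: final MSE {losses_by_dim[latent_dim][-1]:.4f}")
```

Because the model is linear, the best it can do is project onto a linear subspace, much like PCA, so trajectories that do not lie close to a shared low-dimensional subspace will be reconstructed poorly.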

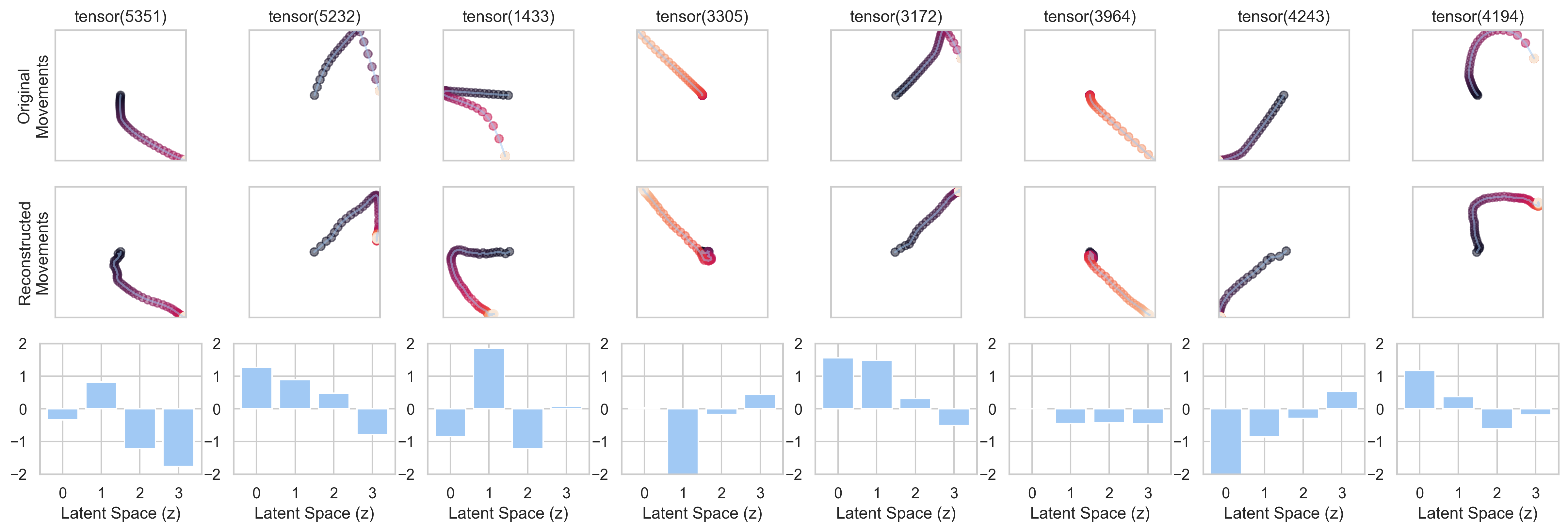

Examples of preprocessed movements.

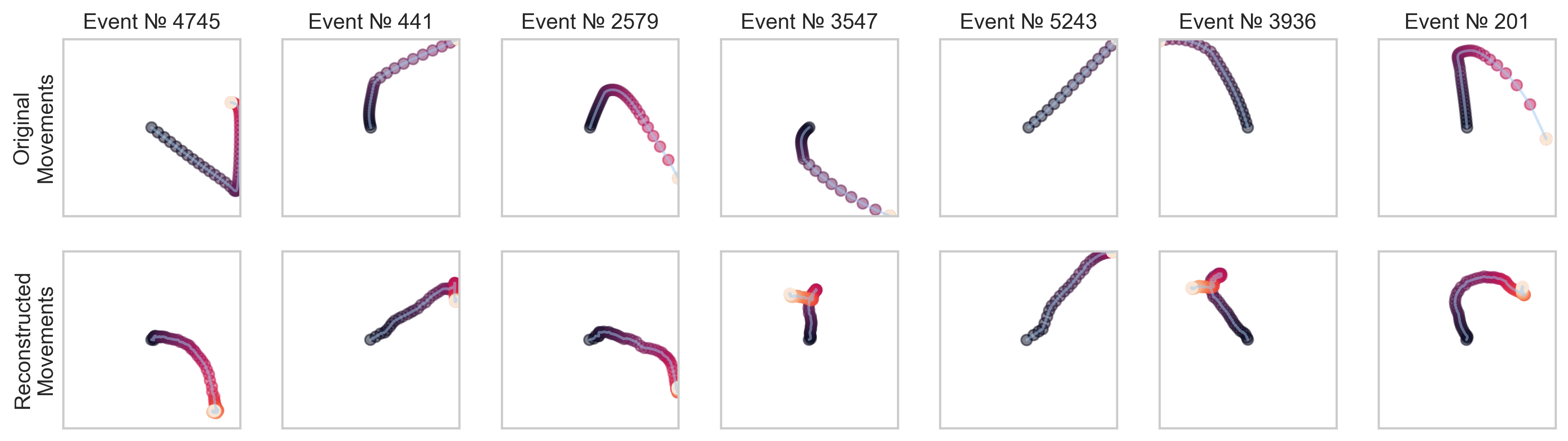

Examples of the VAE reconstructions.

What does the latent space encode? 🤔

Examples of decoder reconstructions based on different values of latent variables.
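A latent traversal of this kind can be sketched as follows: one latent variable is swept over a range of values while the others are held at zero, and each latent vector is passed through the decoder. The decoder below is an untrained, hypothetical stand-in for the trained VAE decoder, and the latent dimension, trajectory length, and sweep range are assumptions.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical sizes; the real model's dimensions are not stated here.
latent_dim, n_times, n_coords = 4, 75, 2

# Untrained stand-in for a trained VAE decoder.
decoder = nn.Sequential(
    nn.Linear(latent_dim, 64),
    nn.ReLU(),
    nn.Linear(64, n_times * n_coords),
)


def traverse_latent(decoder, latent_dim, dim, values):
    """Decode latent vectors that differ only along one latent dimension."""
    z = torch.zeros(len(values), latent_dim)
    z[:, dim] = values
    with torch.no_grad():
        return z, decoder(z)


values = torch.linspace(-3.0, 3.0, steps=7)
z, flat = traverse_latent(decoder, latent_dim, dim=0, values=values)

# One reconstructed trajectory per swept value: (7, n_times, n_coords).
reconstructions = flat.reshape(len(values), n_times, n_coords)
print(reconstructions.shape)
```

Plotting the rows of `reconstructions` side by side shows how the decoded movement changes as a single latent variable varies.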

Pipeline for reconstructing low-dimensional movement representations from ECoG

Movement reconstruction from the decoded latent space values

Team: Antonenkova Yuliya, Chirkov Valerii, Latyshkova Alexandra, Popkova Anastasia, Timcenko Aleksejs

Project TA: Xiaomei Mi

Mentor: Mousavi Mahta

- Natraj, N., Silversmith, D. B., Chang, E. F., & Ganguly, K. (2022). Compartmentalized dynamics within a common multi-area mesoscale manifold represent a repertoire of human hand movements. Neuron, 110(1), 154-174.e12. https://doi.org/10.1016/j.neuron.2021.10.002

- Peterson, S. M., Singh, S. H., Dichter, B., Scheid, M., Rao, R. P. N., & Brunton, B. W. (2022). AJILE12: Long-term naturalistic human intracranial neural recordings and pose. Scientific Data, 9(1), 184. https://doi.org/10.1038/s41597-022-01280-y

- Peterson, S. M., Steine-Hanson, Z., Davis, N., Rao, R. P. N., & Brunton, B. W. (2021). Generalized neural decoders for transfer learning across participants and recording modalities. Journal of Neural Engineering, 18(2), 026014. https://doi.org/10.1088/1741-2552/abda0b

- Peterson, S. M., Singh, S. H., Wang, N. X. R., Rao, R. P. N., & Brunton, B. W. (2021). Behavioral and Neural Variability of Naturalistic Arm Movements. ENeuro, 8(3), ENEURO.0007-21.2021. https://doi.org/10.1523/ENEURO.0007-21.2021

- Singh, S. H., Peterson, S. M., Rao, R. P. N., & Brunton, B. W. (2021). Mining naturalistic human behaviors in long-term video and neural recordings. Journal of Neuroscience Methods, 358, 109199. https://doi.org/10.1016/j.jneumeth.2021.109199

- Pailla, T., Miller, K. J., & Gilja, V. (2019). Autoencoders for learning template spectrograms in electrocorticographic signals. Journal of Neural Engineering, 16(1), 016025. https://doi.org/10.1088/1741-2552/aaf13f

- Higgins, I., Matthey, L., Pal, A., Burgess, C., Glorot, X., Botvinick, M., Mohamed, S., & Lerchner, A. (2017). beta-VAE: Learning Basic Visual Concepts with a Constrained Variational Framework. International Conference on Learning Representations (ICLR). https://openreview.net/forum?id=Sy2fzU9gl