The repository is part of the so-called Mega-Meta study, which reviews factors contributing to substance use, anxiety, and depressive disorders. The study protocol has been pre-registered at PROSPERO. The procedure for obtaining the search terms, the exact search query, and selecting key papers by expert consensus can be found on the Open Science Framework.

The screening was conducted in the software ASReview (Van de Schoot et al., 2020) using the protocol described in Hofstee et al. (2021). The server installation is described in Melnikov (2021), and training of the hyperparameters for the CNN model is described by Tijema et al. (2021). The data can be found on DANS [LINK NEEDED].

The current repository contains the post-processing scripts to:

- Merge the three output files after screening in ASReview;
- Obtain missing DOIs;
- Apply another round of de-duplication (the first round of de-duplication was applied before the screening started);
- Deal with noisy labels corrected in two rounds of quality checks.

The scripts in the current repository result in one single dataset that can be used for future meta-analyses. The dataset itself is available on DANS [NEEDS LINK].

The `/data` folder contains test files that can be used to test the pipeline.

NOTE: When you want to use these test files, please make sure that the empirical data is not saved in the `/data` folder, because the next step will overwrite these files.

1. Open the `pre-processing.Rproject` in RStudio;
2. Open `scripts/change_test_file_names.R` and run the script. The test files will now have the same file names as those of the empirical data.
3. Continue with Running the complete pipeline.

To check whether the pipeline worked correctly on the test data, check the following values in the output:

- Within the `crossref_doi_retrieval.ipynb` script, 33/42 DOIs should be retrieved.
- After two rounds of deduplication in `master_script_deduplication.R`, the total number of relevant papers (sum of the values in the `composite_label` column) should be 21.
- After running the `quality_check` function in `master_script_quality_check.R`, the number of changed labels should be:
  - Quality check 1: 7
  - Quality check 2: 6
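The first two checks can also be scripted. The sketch below is a minimal illustration in Python, assuming the output files have already been loaded as lists of dictionaries (one per record) with `None` for missing values. The helper names and the `doi` column name are assumptions made for this example; only `composite_label` is taken from the text above.

```python
def count_relevant(records):
    """Sum the composite_label column, skipping missing (None) values."""
    return sum(
        r["composite_label"]
        for r in records
        if r.get("composite_label") is not None
    )


def count_retrieved_dois(before, after):
    """Count records that lacked a DOI before retrieval but have one afterwards.

    `before` and `after` are assumed to list the same records in the same order.
    """
    return sum(
        1 for b, a in zip(before, after) if not b.get("doi") and a.get("doi")
    )
```

On the test data, `count_relevant` would be expected to return 21 for the deduplicated file, and `count_retrieved_dois` 33 out of the 42 records that started without a DOI.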

The empirical data is available on DANS [NEEDS LINK]. Request access, download the files, and add the required data to the `/data` folder.

The following nine datasets should be available in `/data`:

The three export datasets with the partly labelled data after screening in ASReview:

- `anxiety-screening-CNN-output.xlsx`
- `depression-screening-CNN-output.xlsx`
- `substance-screening-CNN-output.xlsx`

The three datasets resulting from Quality Check 1:

- `anxiety-incorrectly-excluded-records.xlsx`
- `depression-incorrectly-excluded-records.xlsx`
- `substance-incorrectly-excluded-records.xlsx`

The three datasets resulting from Quality Check 2:

- `anxiety-incorrectly-included-records`
- `depression-incorrectly-included-records`
- `substance-incorrectly-included-records`
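Before running the pipeline, it can help to confirm that all nine files are in place. The following is a minimal sketch, not part of the repository: the helper `missing_datasets` is hypothetical, and it compares file names without their extension because the extension of the Quality Check 2 files is not stated above.

```python
from pathlib import Path

SUBJECTS = ["anxiety", "depression", "substance"]
SUFFIXES = [
    "screening-CNN-output",
    "incorrectly-excluded-records",
    "incorrectly-included-records",
]
# Nine expected names: three subjects for each of the three file types.
EXPECTED = [f"{subject}-{suffix}" for suffix in SUFFIXES for subject in SUBJECTS]


def missing_datasets(data_dir, expected_stems=EXPECTED):
    """Return the expected dataset names for which no file exists in data_dir.

    Only the file name without its extension (the stem) is compared.
    """
    present = {p.stem for p in Path(data_dir).iterdir() if p.is_file()}
    return [stem for stem in expected_stems if stem not in present]
```

Calling `missing_datasets("data")` from the project root returns an empty list when every dataset is present.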

To get started:

1. Open the `pre-processing.Rproject` in RStudio;
2. Open `scripts/master_script_merging_after_asreview.R`;
3. Install, if necessary, the required packages by uncommenting the relevant lines and running them.
4. Make sure that at least the following columns are present in the data:
   - `title`
   - `abstract`
   - `included`
   - `year` (may be spelled differently, as this can be changed within `crossref_doi_retrieval.ipynb`)
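The column requirement above can be checked with a few lines of Python. This is a minimal sketch under the assumption that the header row of a dataset is available as a list of strings; `missing_columns` is a hypothetical helper, and it uses exact name matching, so a differently spelled `year` column would need to be passed in explicitly.

```python
REQUIRED_COLUMNS = ("title", "abstract", "included", "year")


def missing_columns(header, required=REQUIRED_COLUMNS):
    """Return the required column names that do not occur in header."""
    present = set(header)
    return [name for name in required if name not in present]
```

For example, `missing_columns(["title", "abstract", "included"])` reports that `year` is absent.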

1. Open the `pre-processing.Rproject` in RStudio and run `master_script_merging_after_asreview.R` to merge the datasets. At the end of the merging script, the file `megameta_asreview_merged.xlsx` is created and saved in `/output`.
2. Run `scripts/crossref_doi_retrieval.ipynb` in Jupyter Notebook to retrieve the missing DOIs (you might need to install the package `tqdm` first: `pip install tqdm`). The output of the DOI retrieval is stored in `/output`: `megameta_asreview_doi_retrieved.xlsx`. Note: this step might take some time! To significantly decrease the run time, follow the steps in the Improving DOI retrieval speed section.
3. For the deduplication part, open and run `scripts/master_script_deduplication.R` back in the R project in RStudio. The result is stored in `/output`: `megameta_asreview_deduplicated.xlsx`.
4. Two quality checks are performed. Manually change the labels
   - of incorrectly excluded records to included;
   - of incorrectly included records to excluded.

   The data which should be corrected is available on DANS. This step should add the following columns to the dataset:

   - `quality_check_1(0->1)` (1, 2, 3, NA): This column indicates for which subjects a record was falsely excluded:
     - 1 = anxiety
     - 2 = depression
     - 3 = substance-abuse
   - `quality_check_2(1->0)` (1, 2, 3, NA): This column indicates for which subjects a record was falsely included:
     - 1 = anxiety
     - 2 = depression
     - 3 = substance-abuse
   - `depression_included_corrected` (0, 1, NA): Combining the information from the `depression_included` and quality check columns, this column contains the inclusion/exclusion/not seen labels after correction.
   - `substance_included_corrected` (0, 1, NA): Combining the information from the `substance_included` and quality check columns, this column contains the inclusion/exclusion/not seen labels after correction.
   - `anxiety_included_corrected` (0, 1, NA): Combining the information from the `anxiety_included` and quality check columns, this column contains the inclusion/exclusion/not seen labels after correction.
   - `composite_label_corrected` (0, 1, NA): A column indicating whether a record was included in at least one of the corrected subject columns, that is, the result after taking the quality checks into account.
5. OPTIONAL: Create ASReview plugin-ready data by running the script `master_script_process_data_for_asreview_plugin.R`. This script creates a new folder in the output folder, `data_for_plugin`, containing several versions of the dataset created in step 4. See Data for the ASReview plugin for more information.
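The label correction in the quality check step can be illustrated with a short Python sketch. It is not the repository's R implementation. It assumes each record is a dictionary with `None` standing in for NA, that each quality check column holds at most one subject code per record, and that the column names are exactly as listed above; the function name `apply_quality_checks` is hypothetical.

```python
# Subject codes used in the quality check columns.
SUBJECT_CODES = {1: "anxiety", 2: "depression", 3: "substance"}


def apply_quality_checks(record):
    """Return a copy of record with the three *_included_corrected columns added."""
    corrected = dict(record)
    # Start from the labels assigned during screening.
    for subject in SUBJECT_CODES.values():
        corrected[f"{subject}_included_corrected"] = record.get(f"{subject}_included")

    # Quality check 1: a falsely excluded record becomes included (0 -> 1).
    falsely_excluded = record.get("quality_check_1(0->1)")
    if falsely_excluded in SUBJECT_CODES:
        corrected[f"{SUBJECT_CODES[falsely_excluded]}_included_corrected"] = 1

    # Quality check 2: a falsely included record becomes excluded (1 -> 0).
    falsely_included = record.get("quality_check_2(1->0)")
    if falsely_included in SUBJECT_CODES:
        corrected[f"{SUBJECT_CODES[falsely_included]}_included_corrected"] = 0

    return corrected
```

The `composite_label_corrected` column would then be derived from the three corrected subject columns.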

It is possible to improve the speed of the DOI retrieval with the following steps:

1. Split the dataset into smaller chunks by running the `split_input_file.ipynb` script. Within this script there is an option to set the number of chunks. If the records cannot be split evenly, the last chunk might be smaller than the others.
2. For each chunk, create a copy of the `chunk_0.py` file and place it in the `split` folder. Change the name `chunk_0.py` to `chunk_1.py`, `chunk_2.py`, etc., for each created chunk.
3. Within each file, change `script_number = "0"` to `script_number = "1"`, `script_number = "2"`, etc.
4. Run all `chunk_*.py` files in the `split` folder simultaneously, each from a separate console. Each script stores its console output in the corresponding `result_chunk_*.txt` file.
5. Use the second half of `merge_files.ipynb` to merge the results of the `chunk_*.py` scripts.
6. The resulting file is stored in the same way as `crossref_doi_retrieval.ipynb` would store it.
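The splitting behaviour described in the first step, where the last chunk may be smaller than the others, can be sketched as follows. This is an illustration only and not the code in `split_input_file.ipynb`; the function name `split_into_chunks` is an assumption, and records are represented as a plain list.

```python
import math


def split_into_chunks(records, n_chunks):
    """Split records into consecutive chunks of equal size.

    The chunk size is rounded up, so the last chunk can be smaller than the
    others, and fewer than n_chunks chunks may be produced for short inputs.
    """
    if n_chunks < 1:
        raise ValueError("n_chunks must be at least 1")
    chunk_size = math.ceil(len(records) / n_chunks)
    if chunk_size == 0:
        return []
    return [
        records[start:start + chunk_size]
        for start in range(0, len(records), chunk_size)
    ]
```

Splitting ten records into three chunks this way gives chunks of four, four, and two records.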

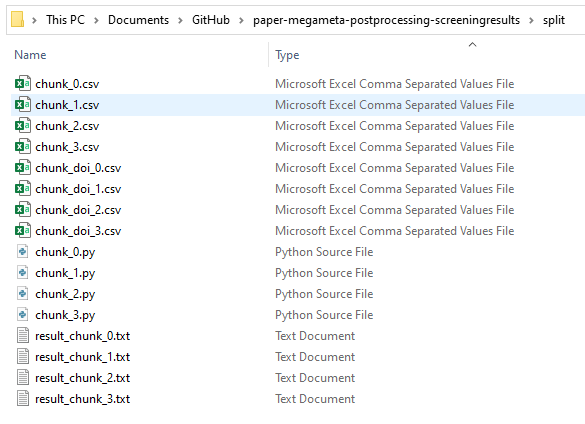

The split folder should look like this after each chunk has been run:

Keeping in mind that deduplication is never perfect, `scripts/master_script_deduplication.R` contains a function to deduplicate the records in a very conservative way. It is assumed that it is better to miss duplicates within the data than to falsely deduplicate records.

Therefore, deduplication within `master_script_deduplication.R` is based on two different rounds of deduplication. The first round uses the digital object identifier (DOI) to identify duplicates. However, many DOIs are still missing, even after DOI retrieval, and in some cases the DOIs differ for otherwise seemingly identical records. Therefore, an extra round of deduplication is applied to the data. This conservative strategy was devised with the help of @bmkramer. The code used a deduplication script by @terrymyc as inspiration.
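The first round, deduplication on DOI, can be pictured with the Python sketch below. It is one possible reading of the approach rather than the repository's R code: records are assumed to be dictionaries with a `doi` key, DOIs are compared case-insensitively, records without a DOI are always kept, and empty fields of the kept record are filled from its duplicates so that no information is lost. The function name `deduplicate_by_doi` is hypothetical.

```python
def deduplicate_by_doi(records):
    """Keep the first record per DOI and fill its missing fields from duplicates."""
    kept_by_doi = {}
    result = []
    for record in records:
        doi = (record.get("doi") or "").strip().lower()
        if not doi:
            # Without a DOI nothing can be said in this round: keep the record.
            result.append(dict(record))
            continue
        if doi in kept_by_doi:
            kept = kept_by_doi[doi]
            # Copy over values that the kept record is still missing.
            for key, value in record.items():
                if kept.get(key) is None:
                    kept[key] = value
        else:
            kept = dict(record)
            kept_by_doi[doi] = kept
            result.append(kept)
    return result
```

Records that lack a DOI pass through unchanged here, which is why the second round described next is needed.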

The exact strategy of the second deduplication round is as follows:

1. Set all columns needed for deduplication (see below) to lowercase characters and remove any punctuation marks.
2. Count the duplicates identified using the conservative deduplication strategy. This strategy identifies duplicates based on:
   - Author
   - Title
   - Year
   - Journal or ISSN (if either journal or ISSN is an exact match, together with the above, the record is marked as a duplicate)
3. Count the duplicates identified using a less conservative deduplication strategy. This strategy identifies duplicates based on:
   - Author
   - Title
   - Year
4. Deduplicate using the strategy from step 2.

The deduplication script also prints the number of identified duplicates for both the conservative strategy and a less conservative strategy based on only authors, title, and year. In this way, the impact of different deduplication strategies can be compared.
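The two matching rules of the second round can be expressed compactly. The sketch below is a Python illustration under stated assumptions, not the R code in `deduplicate_conservative.R`: the column names `author`, `title`, `year`, `journal`, and `issn` are assumed, and an empty or missing value is assumed never to count as a match.

```python
import string

_PUNCTUATION = str.maketrans("", "", string.punctuation)


def normalise(value):
    """Lowercase a value and strip punctuation; missing values become ''."""
    return str(value or "").lower().translate(_PUNCTUATION).strip()


def same_field(a, b, key):
    """True when both records have an identical, non-empty normalised value."""
    left, right = normalise(a.get(key)), normalise(b.get(key))
    return left != "" and left == right


def is_less_conservative_duplicate(a, b):
    """Match on author, title, and year only."""
    return all(same_field(a, b, key) for key in ("author", "title", "year"))


def is_conservative_duplicate(a, b):
    """Match on author, title, and year, plus journal or ISSN."""
    return is_less_conservative_duplicate(a, b) and (
        same_field(a, b, "journal") or same_field(a, b, "issn")
    )
```

Counting pairs under both rules shows how many additional records the less conservative rule would remove.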

The script `master_script_process_data_for_asreview_plugin.R` creates a new folder in the output folder, `data_for_plugin`, containing several versions of the dataset created in step 4:

- `megameta_asreview_partly_labelled`: A dataset where a column called `label_included` is added, which is an exact copy of `composite_label_corrected`.
- `megameta_asreview_only_potentially_relevant`: A dataset with only those records which have a 1 in `composite_label_corrected`.
- `megameta_asreview_potentially_relevant_depression`: A dataset with only those records which have a 1 in `depression_included_corrected`.
- `megameta_asreview_potentially_relevant_substance`: A dataset with only those records which have a 1 in `substance_included_corrected`.
- `megameta_asreview_potentially_relevant_anxiety`: A dataset with only those records which have a 1 in `anxiety_included_corrected`.
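How these five versions relate to the quality-checked dataset can be shown in a few lines of Python. This is a sketch of the filtering logic only, assuming records are dictionaries with the column names listed above; the function name `build_plugin_datasets` is hypothetical and nothing is written to disk.

```python
def build_plugin_datasets(records):
    """Return the five plugin dataset versions, keyed by dataset name."""

    def only_ones(column):
        # Keep only the records labelled 1 in the given column.
        return [dict(r) for r in records if r.get(column) == 1]

    return {
        "megameta_asreview_partly_labelled": [
            {**r, "label_included": r.get("composite_label_corrected")}
            for r in records
        ],
        "megameta_asreview_only_potentially_relevant": only_ones(
            "composite_label_corrected"
        ),
        "megameta_asreview_potentially_relevant_depression": only_ones(
            "depression_included_corrected"
        ),
        "megameta_asreview_potentially_relevant_substance": only_ones(
            "substance_included_corrected"
        ),
        "megameta_asreview_potentially_relevant_anxiety": only_ones(
            "anxiety_included_corrected"
        ),
    }
```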

[INSTRUCTIONS FOR PLUGIN?]

- `change_test_file_names.R` - With this script the file names of the test files are converted to the empirical data file names.
- `merge_datasets.R` - This script contains a function to merge the datasets. A unique included column is added for each dataset before the merge.
- `composite_label.R` - This script contains a function to create a column with the final inclusions.
- `print_information_datasets.R` - This script contains a function to print information on datasets.
- `identify_duplicates.R` - This script contains a function to identify duplicate records in the dataset.
- `deduplicate_doi.R` - This script contains a function to deduplicate the records, based on DOI, while maintaining all information.
- `deduplicate_conservative.R` - This script contains a function to deduplicate the records in a conservative way based on title, author, year, and journal/ISSN.
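The idea behind adding a unique included column per dataset before merging can be sketched in Python. This is an illustration under assumptions, not a port of `merge_datasets.R`: the three exports are assumed to be lists of dictionaries with an `included` column, and the merge is assumed to be a simple stacking of rows, with overlapping records left for the later deduplication rounds. The function name mirrors the script name for readability only.

```python
def merge_datasets(datasets):
    """Stack the per-subject exports, renaming `included` per subject.

    `datasets` maps a subject name (for example "anxiety") to its records.
    """
    merged = []
    for subject, records in datasets.items():
        for record in records:
            row = {key: value for key, value in record.items() if key != "included"}
            # The subject-specific column keeps the labels apart after merging.
            row[f"{subject}_included"] = record.get("included")
            merged.append(row)
    return merged
```

After the merge, each row carries exactly one `<subject>_included` column; the other subject columns are absent for that row until duplicates are combined.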

The result of running all master scripts up to and including step 4 in this repository is the file `output/megameta_asreview_quality_checked.xlsx`. In this dataset the following columns have been added:

- `index` (1-165045): A simple indexing column going from 1 to 165045. Some numbers are not present because the corresponding records were removed during deduplication.
- `unique_record` (0, 1, NA): Indicates whether the record has a unique DOI. This is NA when no DOI is present.
- `depression_included` (0, 1, NA): A column indicating whether a record was included in depression.
- `anxiety_included` (0, 1, NA): A column indicating whether a record was included in anxiety.
- `substance_included` (0, 1, NA): A column indicating whether a record was included in substance_abuse.
- `composite_label` (0, 1, NA): A column indicating whether a record was included in at least one of the subjects.
- `quality_check_1(0->1)` (1, 2, 3, NA): This column indicates for which subjects a record was falsely excluded:
  - 1 = anxiety
  - 2 = depression
  - 3 = substance-abuse
- `quality_check_2(1->0)` (1, 2, 3, NA): This column indicates for which subjects a record was falsely included:
  - 1 = anxiety
  - 2 = depression
  - 3 = substance-abuse
- `depression_included_corrected` (0, 1, NA): Combining the information from the `depression_included` and quality check columns, this column contains the inclusion/exclusion/not seen labels after correction.
- `substance_included_corrected` (0, 1, NA): Combining the information from the `substance_included` and quality check columns, this column contains the inclusion/exclusion/not seen labels after correction.
- `anxiety_included_corrected` (0, 1, NA): Combining the information from the `anxiety_included` and quality check columns, this column contains the inclusion/exclusion/not seen labels after correction.
- `composite_label_corrected` (0, 1, NA): A column indicating whether a record was included in at least one of the corrected subject columns, that is, the result after taking the quality checks into account.

For all columns that contain only 0s, 1s, and NAs, a 0 indicates a negative (for example, excluded), while a 1 indicates a positive (for example, included). NA means Not Available.
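The way a composite label follows from the three subject labels can be written as a small Python function. The rule for 1 comes from the column description (included in at least one subject). The handling of 0 versus NA is an assumption made for this sketch: 0 when at least one subject label is 0 and none is 1, and NA (`None`) when the record was not seen for any subject. The actual logic in `composite_label.R` may differ.

```python
def composite_label(*labels):
    """Combine subject labels (0, 1, or None) into a single label."""
    if any(label == 1 for label in labels):
        return 1  # included for at least one subject
    if any(label == 0 for label in labels):
        return 0  # seen and excluded, never included (assumed rule)
    return None  # not seen for any subject
```

The same function applies to the corrected subject columns to obtain `composite_label_corrected`.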

This project is funded by a grant from the Centre for Urban Mental Health, University of Amsterdam, The Netherlands.

The content in this repository is published under the MIT license.

For any questions or remarks, please send an email to the ASReview-team or Marlies Brouwer.