draw an instrument, and play it! (fun with PaliGemma and SCAMP)


Note - this is a prototype, not a production-grade app. (The OpenCV streaming setup is a bit buggy and occasionally crashes. You may need to restart.)

You will need:

- A local machine with a webcam, and Python 3.11+. (The client has been tested on macOS Sonoma on an M1 Mac.)

- A Google Cloud project, with access to Google Compute Engine.

- A HuggingFace account with an Access Token.

- Create a Google Compute Engine instance with at least one GPU. I used two NVIDIA T4 GPUs, but adjust to whatever your quota allows. Set "Allow HTTP/HTTPS" traffic to true.

- SSH into the instance.

- Install Python packages for the server workloads.

git clone https://github.com/askmeegs/papermusic

cd papermusic

python3 -m venv .

source ./bin/activate

pip install -r requirements.txt

- Set your HuggingFace Access Token as an environment variable.

export HUGGINGFACE_USER_ACCESS_TOKEN=your_token_here

- Clone the repo on your local machine.

git clone https://github.com/askmeegs/papermusic

cd papermusic

- Install client packages.

python3 -m venv .

source ./bin/activate

pip install -r requirements.txt
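A missing or placeholder token on the GCE instance is easy to overlook until the model download fails. The following is a minimal sketch of an early check for the `HUGGINGFACE_USER_ACCESS_TOKEN` variable set in the server steps above. The helper name `read_hf_token` is hypothetical and is not taken from the repo.

```python
import os

# Name of the environment variable from the setup steps.
TOKEN_VAR = "HUGGINGFACE_USER_ACCESS_TOKEN"


def read_hf_token(env=None):
    """Return the access token from the environment, or raise a clear error.

    `env` defaults to os.environ; passing a plain dict makes the check easy to test.
    This helper is an illustrative assumption, not part of the papermusic repo.
    """
    env = os.environ if env is None else env
    token = env.get(TOKEN_VAR, "").strip()
    # Treat the placeholder from the instructions as "not set".
    if not token or token == "your_token_here":
        raise RuntimeError(
            f"{TOKEN_VAR} is not set; export it before starting the server."
        )
    return token
```

Calling `read_hf_token()` once at server startup would surface the problem before any webcam frames are processed.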

Place a hand-drawn musical instrument in front of your webcam, like the screenshot shown above. Make sure the notes are written clearly on the instrument.

- Start

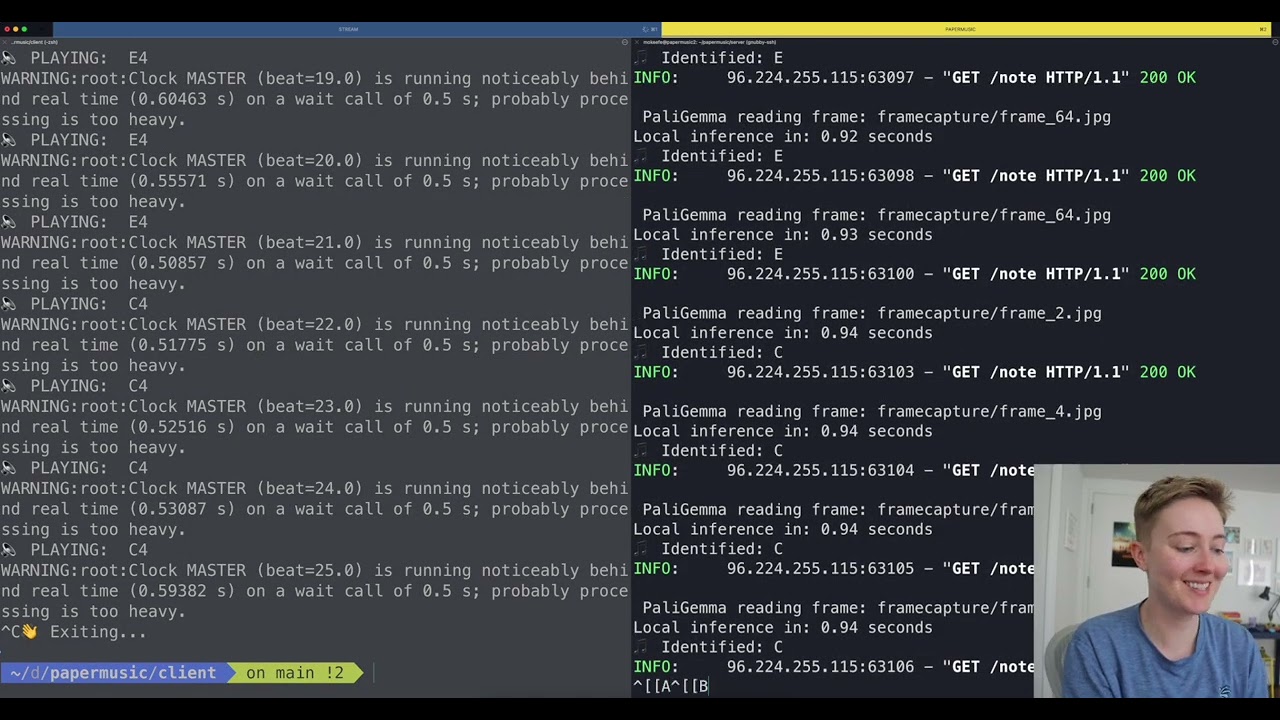

server/handlestream.pyon GCE, to listen for the webcam stream. - Start

client/webcamclient.pyon your local machine, to send your webcam stream to GCE. - Start

server/server.pyon GCE, to process the webcam stream and identify the notes. - Start

client/audioclient.pyon your local machine, to poll the Server and play the notes over local audio.
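To make the last step more concrete, here is a rough sketch of what a poll-and-play loop could look like. It is not the code in `client/audioclient.py`. The function names, the note-name format (`C4`, `F#`, `Bb3`), and the choice to play a note only when it changes are all assumptions made for illustration. The network call and the audio backend are passed in as plain callables, so in a real client `fetch_note` would query the server and `play` would hand the pitch to SCAMP.

```python
import time

# Semitone offsets of the natural notes within one octave.
_SEMITONES = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}


def note_to_midi(name, default_octave=4):
    """Convert a note name such as 'C', 'F#' or 'Bb3' to a MIDI pitch (C4 = 60)."""
    name = name.strip()
    if not name or name[0].upper() not in _SEMITONES:
        raise ValueError(f"not a note name: {name!r}")
    pitch = _SEMITONES[name[0].upper()]
    rest = name[1:]
    if rest[:1] == "#":      # sharp raises the pitch by one semitone
        pitch += 1
        rest = rest[1:]
    elif rest[:1] == "b":    # flat lowers the pitch by one semitone
        pitch -= 1
        rest = rest[1:]
    # A bare letter (no octave digit) falls back to the default octave.
    octave = int(rest) if rest else default_octave
    return 12 * (octave + 1) + pitch


def poll_and_play(fetch_note, play, rounds, interval=0.0):
    """Poll `fetch_note` `rounds` times and call `play` when the note changes.

    fetch_note: hypothetical callable returning a note name, or None if no note is seen.
    play: hypothetical callable taking a MIDI pitch number.
    """
    last = None
    for _ in range(rounds):
        note = fetch_note()
        # Skip repeats so a finger resting on one note does not retrigger it.
        if note and note != last:
            play(note_to_midi(note))
        last = note
        time.sleep(interval)
```

For example, feeding the loop the sequence `C4, C4, None, C4, E4` plays C4 once, skips the repeat, then plays C4 again after the gap, followed by E4.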