liveProject: Reinforcement Learning for self-driving vehicles

This GitHub repository contains reference implementations for the liveProject: Reinforcement Learning for self-driving vehicles. It includes two types of implementations. The first builds agents from scratch. The second imports ready-made algorithms from packages such as stable-baselines and rl-agents for fast prototyping and for evaluating model performance.

In this liveProject, we explore the tools, techniques, and methodologies that AI researchers use to quantify how well an agent accomplishes a given task in a given environment. Our use case is navigating and performing different tasks across multiple driving environments. In this beginner-oriented, up-for-grabs liveProject, we use a range of basic and abstract driving environments to prototype several RL algorithm implementations.

Note: For every Colab Implementation Link below, please create your own copy of the notebook before editing, executing, and testing the code.

This liveProject is organized around three simple working principles in AI: optimize, explore & simplify. Their practical applications are elaborated in Milestones Two, Three & Four. In addition, an introductory Milestone One focuses primarily on visualization techniques.

- Milestone One: Visualization in Reinforcement Learning for problem & result analysis.
  - Getting started with Google Colab notebooks and OpenAI environments.
  - Introduction to RL with the Value Iteration algorithm in simple environments.
  - Limitations of the Value Iteration algorithm in the `Taxi-v3` scenario. Colab Implementation Link
  - Developing Value Iteration for MDP-based driving scenarios in the `highway-env` package. Colab Implementation Link
  - Deliverable One: Comparative result visualizations for the discussed algorithms in MDP-based driving scenarios.

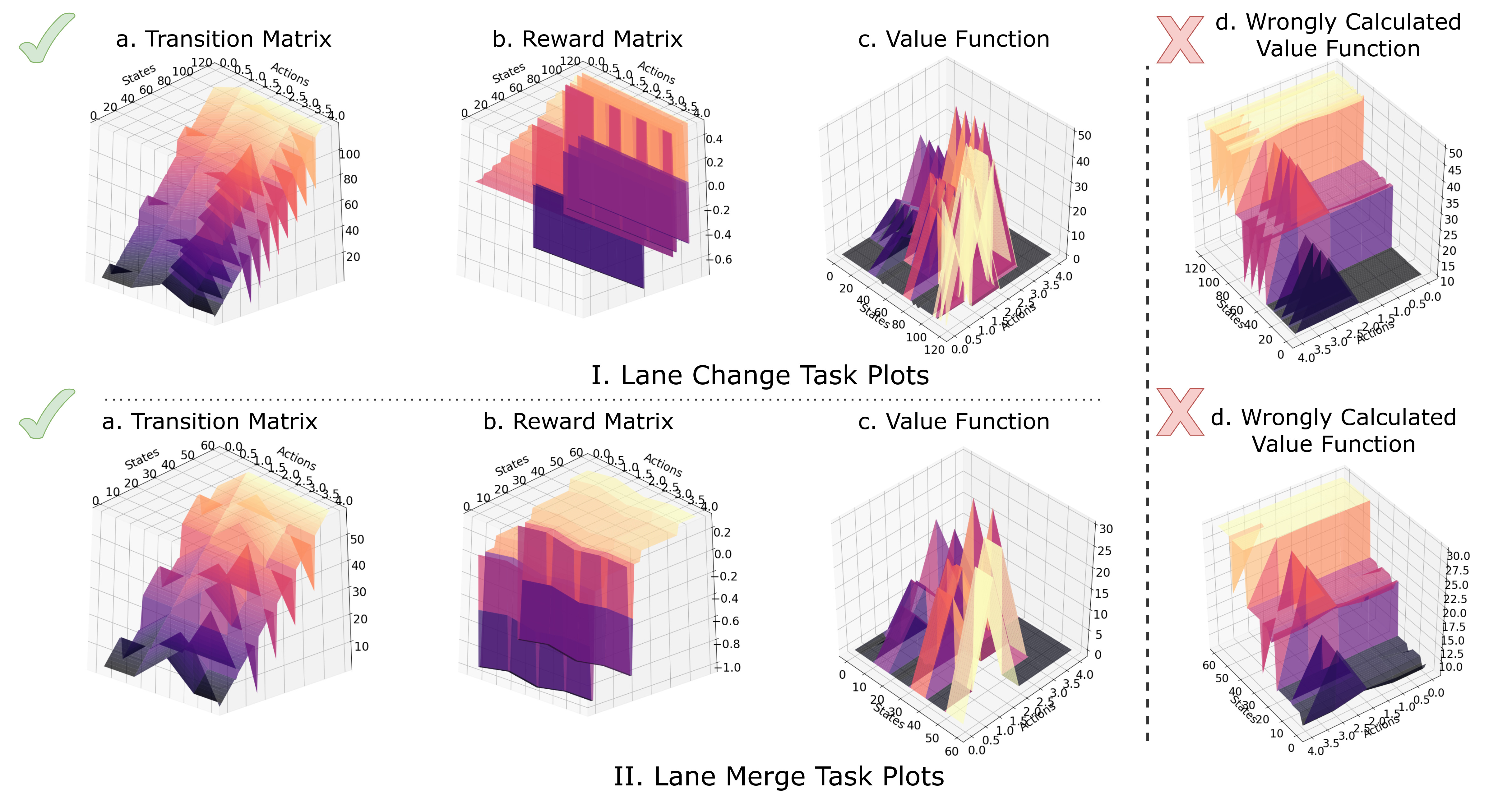

Figure 1: Reference plots for the lane-change and (additional) highway-merge tasks.
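The Value Iteration idea behind Milestone One fits in a few lines. This is a minimal, dependency-free sketch, not the notebook's code. It assumes the transition-table layout that Gym's toy-text environments expose (for `Taxi-v3`, `env.unwrapped.P[s][a]` is a list of `(probability, next_state, reward, terminated)` tuples), and `corridor_mdp` is a hypothetical four-cell MDP used only to make the example runnable.

```python
def value_iteration(P, n_states, n_actions, gamma=0.99, theta=1e-8):
    """Compute optimal state values and a greedy policy for a tabular MDP.

    P[s][a] is a list of (probability, next_state, reward, terminated) tuples.
    """
    V = [0.0] * n_states

    def q_value(s, a):
        # Expected one-step return; terminal transitions do not bootstrap.
        return sum(p * (r + (0.0 if done else gamma * V[s2]))
                   for p, s2, r, done in P[s][a])

    while True:
        delta = 0.0
        for s in range(n_states):
            best = max(q_value(s, a) for a in range(n_actions))
            delta = max(delta, abs(best - V[s]))
            V[s] = best  # in-place Bellman optimality backup
        if delta < theta:  # stop once a full sweep barely changes V
            break

    policy = [max(range(n_actions), key=lambda a: q_value(s, a))
              for s in range(n_states)]
    return V, policy


def corridor_mdp(n=4):
    """Hypothetical deterministic corridor: action 0 moves left, 1 moves right;
    reaching the last cell pays +1 and ends the episode."""
    P = {}
    for s in range(n):
        P[s] = {}
        for a, move in enumerate((-1, 1)):
            if s == n - 1:  # absorbing goal state
                P[s][a] = [(1.0, s, 0.0, True)]
                continue
            s2 = min(max(s + move, 0), n - 1)
            reached = s2 == n - 1
            P[s][a] = [(1.0, s2, 1.0 if reached else 0.0, reached)]
    return P


V, policy = value_iteration(corridor_mdp(), n_states=4, n_actions=2, gamma=0.9)
print([round(v, 3) for v in V])  # → [0.81, 0.9, 1.0, 0.0]
print(policy[:3])                # → [1, 1, 1] (always move right)
```

To try it on `Taxi-v3`, you would pass `env.unwrapped.P`, `env.observation_space.n`, and `env.action_space.n`. Each sweep touches all 500 × 6 state-action pairs, which hints at why tabular Value Iteration scales poorly as state spaces grow.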

- Milestone Two: Optimizing a given agent's performance to its fullest potential.
  - Setting up Google Colab notebooks for rendering OpenAI environment outputs. Colab Implementation Link
  - Value function approximation implementation with a Q-table and exploration for OpenAI's `MountainCar-v0` environment. Colab Implementation Link
  - Handling sparse-reward scenarios in goal-based environments like `MountainCar-v0`. Colab Implementation Link
  - Deliverable Two: Evaluating & documenting variations in agent behavior under our hand-built reward functions to develop the most optimized agent for `MountainCar-v0` with this approach.

Figure 2: Output of the reward-shaping approach (Left) and of its optimized iteration (Right).
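The Milestone Two ingredients (a discretized Q-table, epsilon-greedy exploration, and a hand-built reward) can be sketched without any dependencies. This is an illustrative sketch, not the notebook's code: the 20 × 20 grid, the learning rate and discount, and the potential function `phi` are hypothetical choices. The shaping term uses the potential-based form `r + gamma * phi(s') - phi(s)`, one well-known way to add a dense learning signal that, under standard assumptions, leaves the optimal policy unchanged.

```python
import math
import random

# MountainCar-v0 observation bounds: position in [-1.2, 0.6], velocity in [-0.07, 0.07].
LOW, HIGH = (-1.2, -0.07), (0.6, 0.07)
N_BINS = (20, 20)  # hypothetical grid resolution (position bins, velocity bins)
N_ACTIONS = 3      # 0 = push left, 1 = no push, 2 = push right


def discretize(obs):
    """Map a continuous (position, velocity) observation to a Q-table cell."""
    cell = []
    for x, lo, hi, n in zip(obs, LOW, HIGH, N_BINS):
        idx = int((x - lo) / (hi - lo) * n)
        cell.append(min(n - 1, max(0, idx)))  # clip to the grid
    return tuple(cell)


def epsilon_greedy(Q, cell, epsilon, rng=random):
    """Explore with probability epsilon, otherwise take the greedy action."""
    if rng.random() < epsilon:
        return rng.randrange(N_ACTIONS)
    return max(range(N_ACTIONS), key=lambda a: Q[cell][a])


def shaped_reward(reward, obs, next_obs, gamma=0.99):
    """Potential-based shaping: r + gamma * phi(s') - phi(s).

    The hypothetical potential favours height on the track (proportional to
    sin(3 * position) in MountainCar) and speed, so building momentum pays off
    before the sparse goal is ever reached.
    """
    def phi(o):
        position, velocity = o
        return math.sin(3 * position) + 10.0 * abs(velocity)  # hypothetical weights
    return reward + gamma * phi(next_obs) - phi(obs)


def q_update(Q, cell, action, reward, next_cell, terminated, alpha=0.1, gamma=0.99):
    """One-step tabular Q-learning update."""
    target = reward if terminated else reward + gamma * max(Q[next_cell])
    Q[cell][action] += alpha * (target - Q[cell][action])


# One row of action values per discretized (position, velocity) cell.
Q = {(i, j): [0.0] * N_ACTIONS for i in range(N_BINS[0]) for j in range(N_BINS[1])}

# Example transition with made-up numbers: the car gains height and speed.
obs, next_obs = (-0.5, 0.0), (-0.45, 0.01)
r = shaped_reward(-1.0, obs, next_obs)
q_update(Q, discretize(obs), 2, r, discretize(next_obs), terminated=False)
```

In a Gymnasium training loop, `env.step(action)` returns `(obs, reward, terminated, truncated, info)`; you would choose actions with `epsilon_greedy(Q, discretize(obs), epsilon)`, replace the environment's `-1` step reward with `shaped_reward(...)`, and call `q_update` before moving on. Training agents with different `phi` functions is one way to document the behavior variations that Deliverable Two asks for.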

- Milestone Three: Exploring new algorithms for achieving better performance in goal based driving tasks.

- State–action–reward–state–action (SARSA lambda) agent implementation for

MountainCar-v0environment to learn agent designing concepts. Colab Implementation Link - Exploring new RL algorithms for goal based parking task on

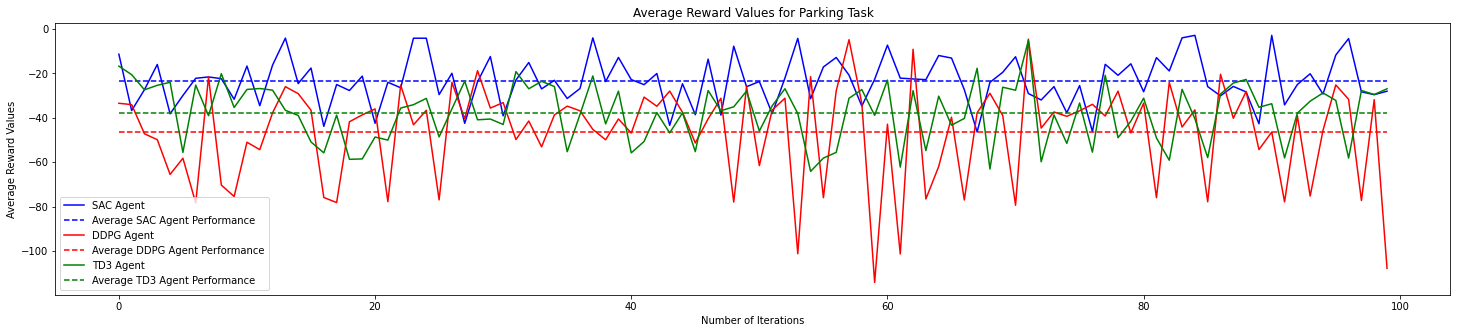

highway-envpackage with HER and SAC fromstable-baselines3RL algorithms package. Colab Implementation Link - Deliverable Three: Implementation, Analysis and Documentation of HER supported algorithms like DDPG, TD3 and SAC for the parking task on third party highway-env package in

parking-v0environment.

- State–action–reward–state–action (SARSA lambda) agent implementation for

Figure 3: Mean reward plot for evaluating the performance of SAC, TD3 & DDPG equipped with HER.
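Milestone Three begins with SARSA(λ). Below is a minimal tabular sketch with accumulating eligibility traces; it is not the project's notebook code. The `reset`/`step` functional interface and the six-state corridor are hypothetical, chosen so the example runs without Gym. For `MountainCar-v0`, you would feed the agent discretized states instead.

```python
import random
from collections import defaultdict


def sarsa_lambda(reset, step, n_actions, episodes=200, alpha=0.1, gamma=0.99,
                 lam=0.9, epsilon=0.1, seed=0):
    """Tabular SARSA(lambda) with accumulating eligibility traces.

    reset() -> state and step(state, action) -> (next_state, reward, done)
    form a hypothetical functional interface that keeps the sketch small.
    """
    rng = random.Random(seed)
    Q = defaultdict(lambda: [0.0] * n_actions)

    def policy(s):
        # Epsilon-greedy with random tie-breaking.
        if rng.random() < epsilon:
            return rng.randrange(n_actions)
        best = max(Q[s])
        return rng.choice([a for a in range(n_actions) if Q[s][a] == best])

    for _ in range(episodes):
        E = defaultdict(lambda: [0.0] * n_actions)  # eligibility traces, reset per episode
        s = reset()
        a = policy(s)
        done = False
        while not done:
            s2, r, done = step(s, a)
            a2 = policy(s2)  # on-policy: pick the next action before updating
            target = r if done else r + gamma * Q[s2][a2]
            delta = target - Q[s][a]
            E[s][a] += 1.0  # accumulating trace
            for state, trace in E.items():
                for act in range(n_actions):
                    Q[state][act] += alpha * delta * trace[act]
                    trace[act] *= gamma * lam  # decay every trace
            s, a = s2, a2
    return Q


# Hypothetical corridor: states 0..5, action 0 = left, 1 = right,
# reward -1 per step, episode ends on reaching state 5.
GOAL = 5


def reset():
    return 0


def step(s, a):
    s2 = max(0, s - 1) if a == 0 else s + 1
    return s2, -1.0, s2 == GOAL


Q = sarsa_lambda(reset, step, n_actions=2)
print({s: max(range(2), key=lambda a: Q[s][a]) for s in range(GOAL)})  # greedy action per state
```

The trace decay `gamma * lam` is what separates SARSA(λ) from one-step SARSA: with `lam=0` only the current state-action pair is updated, while values closer to 1 propagate each TD error further back along the visited trajectory.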

Additionally, if you are interested in reviewing the code base of the TensorFlow-based stable-baselines package, you can check its earlier archived repository. You can also refer to the TensorFlow-backend implementation of the HER-based SAC agent to see the workarounds required for running the earlier stable-baselines. Colab Implementation Link
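HER is what makes the sparse-reward parking task tractable: failed episodes are replayed as if the goal had been a state the agent actually reached. The sketch below shows the "future" relabeling strategy in isolation on a hypothetical 1-D goal; it is an illustration, not stable-baselines3's code. In stable-baselines3, the same idea is provided by `HerReplayBuffer`, configured through `replay_buffer_kwargs` such as `n_sampled_goal` and `goal_selection_strategy="future"`.

```python
import random


def sparse_reward(achieved_goal, desired_goal, tol=0.5):
    """Hypothetical sparse goal reward: 0 on success, -1 otherwise."""
    return 0.0 if abs(achieved_goal - desired_goal) <= tol else -1.0


def her_future(episode, compute_reward, k=4, rng=random):
    """Hindsight Experience Replay relabeling with the 'future' strategy.

    Each transition is a dict with 'obs', 'action', 'achieved_goal' (the goal
    achieved after the step) and 'desired_goal'. Returns the original
    transitions plus k copies of each, whose desired goal is replaced by a goal
    actually achieved at the same or a later step of the episode.
    """
    relabeled = []
    for t, tr in enumerate(episode):
        relabeled.append(dict(tr, reward=compute_reward(tr["achieved_goal"], tr["desired_goal"])))
        for _ in range(k):
            future = rng.randrange(t, len(episode))  # same or later time step
            new_goal = episode[future]["achieved_goal"]
            relabeled.append(dict(tr, desired_goal=new_goal,
                                  reward=compute_reward(tr["achieved_goal"], new_goal)))
    return relabeled


# A failed 1-D episode: the agent reaches 1, 2, 3, but the goal was 10.
episode = [{"obs": p - 1, "action": 1, "achieved_goal": p, "desired_goal": 10}
           for p in (1, 2, 3)]
buffer = her_future(episode, sparse_reward, k=4, rng=random.Random(0))
print(len(buffer), sum(tr["reward"] == 0.0 for tr in buffer))  # transitions, successes
```

`compute_reward` plays the role of a goal-conditioned environment's `compute_reward(achieved_goal, desired_goal, info)`; the two-argument `sparse_reward` here is a simplification assumed for brevity. Every original transition in this episode earns `-1`, yet the relabeled copies contain successes the off-policy learner can learn from.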

- Milestone Four: Designing plan-oriented agents that use search capabilities to navigate optimal paths in an environment.
  - Monte Carlo Tree Search (MCTS) implementation for the Toy Text `Taxi-v3` environment.
  - Introduction to the navigation environments in the `highway-env` package, and MCTS agent prototyping for these navigation-based tasks with the `rl-agents` package.
  - Deliverable Four: Creating an MCTS learning and evaluation structure to evaluate agent performance across the different environments in the `highway-env` package. Note: The current generic implementation doesn't work optimally for these different environments in the `highway-env` package, so you can skip the optimization and performance analysis part.

- Certification Test: The final certification test checks the understanding you developed during the liveProject.

Figure 4: Outputs of different trained MCTS agents with the same parameters that are under evaluation in the certification test.
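The MCTS agents in Milestone Four rely on four steps: selection, expansion, simulation (rollout), and backpropagation. The following is a compact UCT-style sketch of those steps, assuming a deterministic `step(state, action)` model and a hypothetical toy task; it is neither the project's implementation nor the `rl-agents` MCTS agent, whose interfaces differ.

```python
import math
import random


class Node:
    """Search-tree node; its statistics describe the action that led here."""

    def __init__(self):
        self.visits = 0
        self.value = 0.0    # sum of sampled returns
        self.children = {}  # action -> Node


def uct_search(root_state, step, actions, n_iter=500, depth=10, gamma=0.95, c=1.4, seed=0):
    """Return the most-visited root action after n_iter UCT simulations.

    step(state, action) -> (next_state, reward, done) is assumed deterministic.
    """
    rng = random.Random(seed)
    root = Node()

    def rollout(state, d):
        # Default policy: uniformly random actions for up to d steps.
        ret, discount = 0.0, 1.0
        for _ in range(d):
            state, r, done = step(state, rng.choice(actions))
            ret += discount * r
            discount *= gamma
            if done:
                break
        return ret

    def ucb(child, total):
        # UCB1: mean return plus an exploration bonus for rarely tried actions.
        return child.value / child.visits + c * math.sqrt(math.log(total) / child.visits)

    def simulate(node, state, d):
        if d == 0:
            return 0.0
        untried = [a for a in actions if a not in node.children]
        if untried:  # expansion, then evaluate the new leaf with a rollout
            a = rng.choice(untried)
            child = node.children[a] = Node()
            s2, r, done = step(state, a)
            g = r if done else r + gamma * rollout(s2, d - 1)
        else:  # selection: descend through the child with the best UCB1 score
            total = sum(ch.visits for ch in node.children.values())
            a, child = max(node.children.items(), key=lambda kv: ucb(kv[1], total))
            s2, r, done = step(state, a)
            g = r if done else r + gamma * simulate(child, s2, d - 1)
        child.visits += 1  # backpropagation of the sampled return
        child.value += g
        return g

    for _ in range(n_iter):
        simulate(root, root_state, depth)
    return max(root.children, key=lambda a: root.children[a].visits)


# Hypothetical toy task: walk from 0 to 4 for +1; stepping below 0 ends the episode with 0.
def toy_step(s, a):
    s2 = s + a
    if s2 < 0:
        return s2, 0.0, True
    return s2, (1.0 if s2 == 4 else 0.0), s2 == 4


print(uct_search(0, toy_step, actions=[-1, 1]))  # → 1
```

Returning the most-visited root action rather than the one with the highest mean is a common, more robust choice. In a driving environment, the search would be re-run after every executed action.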

This supplementary section covers an additional topic: building a custom environment tailored to your own needs.

- Building Custom Environments: In this notebook, we create a sample environment for the `highway-env` package and use the `rl-agents` package to create a baseline planner agent for that environment. Colab Implementation Link

Figure 5: Baseline agents for the U-Turn environment: MCTS Agent (Left) and Deterministic Agent (Right).
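Whatever the scenario, the contract a custom environment must honor is the Gymnasium-style `reset`/`step` API that planners and baseline agents consume. The sketch below is a hypothetical, dependency-free three-lane toy, `ToyLaneEnv`, paired with a rule-based deterministic baseline agent. It is not the notebook's U-Turn environment: a real one would subclass `gymnasium.Env` (highway-env environments derive from the package's own abstract environment class) and declare `observation_space` and `action_space`.

```python
import random


class ToyLaneEnv:
    """Hypothetical 3-lane road with one stalled car, following Gymnasium's
    reset()/step() return conventions without depending on gymnasium."""

    N_LANES, LENGTH = 3, 10
    ACTIONS = ("LANE_LEFT", "IDLE", "LANE_RIGHT")  # action indices 0, 1, 2

    def __init__(self, max_steps=20):
        self.max_steps = max_steps
        self.rng = random.Random()

    def reset(self, seed=None, options=None):
        if seed is not None:
            self.rng.seed(seed)
        self.lane, self.x, self.t = 1, 0, 0
        # Stalled car in a random lane at a longitudinal position in [3, LENGTH).
        self.obstacle = (self.rng.randrange(self.N_LANES), self.rng.randrange(3, self.LENGTH))
        return self._obs(), {}

    def _obs(self):
        return (self.lane, self.x) + self.obstacle

    def step(self, action):
        # Lateral move (clipped to the road), then advance one cell.
        self.lane = min(self.N_LANES - 1, max(0, self.lane + action - 1))
        self.x += 1
        self.t += 1
        crashed = (self.lane, self.x) == self.obstacle
        arrived = self.x >= self.LENGTH
        reward = -10.0 if crashed else (1.0 if arrived else 0.0)  # hypothetical reward
        terminated = crashed or arrived
        truncated = not terminated and self.t >= self.max_steps
        return self._obs(), reward, terminated, truncated, {"crashed": crashed}


def deterministic_agent(obs):
    """Rule-based baseline: change lane only when the stalled car is directly ahead."""
    lane, x, car_lane, car_x = obs
    if car_lane == lane and car_x == x + 1:
        return 2 if lane < ToyLaneEnv.N_LANES - 1 else 0
    return 1


def evaluate(agent, env, episodes=5):
    """Run full episodes and return each episode's total reward."""
    returns = []
    for episode in range(episodes):
        obs, info = env.reset(seed=episode)
        total, done = 0.0, False
        while not done:
            obs, reward, terminated, truncated, info = env.step(agent(obs))
            total += reward
            done = terminated or truncated
        returns.append(total)
    return returns


returns = evaluate(deterministic_agent, ToyLaneEnv())
print(returns)  # → [1.0, 1.0, 1.0, 1.0, 1.0]
```

Separating `terminated` (the task ended) from `truncated` (the time limit hit) matters for learning agents, since only the former should stop value bootstrapping. A hand-written baseline like `deterministic_agent` gives a reference score to compare planners such as MCTS against.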

- Thank you to

for providing and maintaining the

and

packages.

- Thanks to

team and

for providing efficient and well documented implementations of RL algorithms in a single

.

- Also, thanks to

for an interesting implementation use-case of reward shaping.

- Great improvement suggestions were provided by

,

&

. It is thanks to them that this project has reached its current stage. Thank you for all the support & help!