This repo provides a multitenant-capable GitOps workflow structure that can be forked and used to demonstrate the deployment and configuration of a multi-cluster mesh demo as code using the Argo CD app-of-apps pattern.

- prod:
  - gloo mesh 2.1.0-beta27
  - istio 1.13.4
    - revision: 1-13
- dev:
  - gloo mesh 2.1.0-beta28
  - istio 1.15.0
    - revision: 1-15

- 1 Kubernetes Cluster
  - This demo has been tested on 1x `n2-standard-4` (gke), `m5.xlarge` (aws), or `Standard_DS3_v2` (azure) instance for the `mgmt` cluster

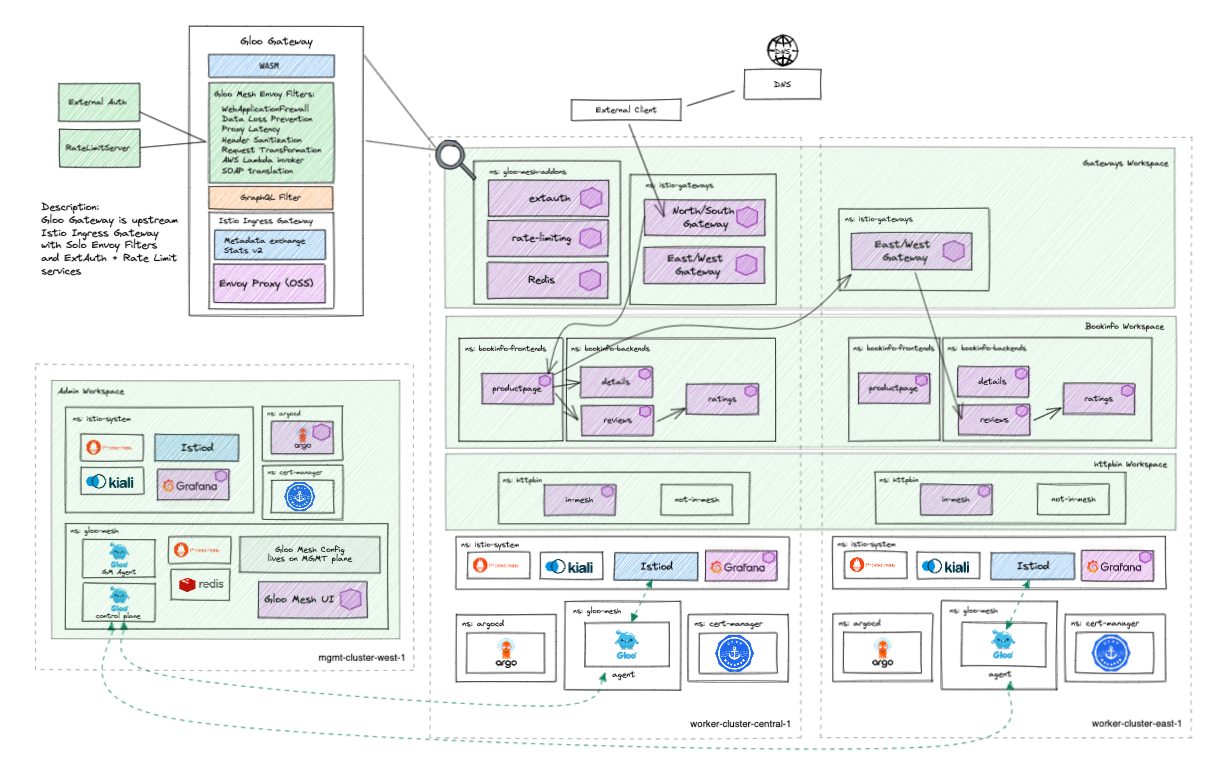

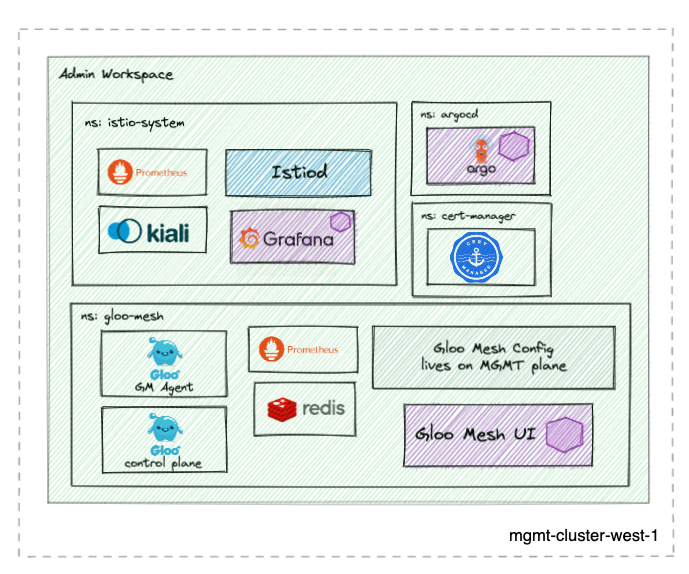

This repo is meant to be deployed along with the following repos to create the entire High Level Architecture diagram below.

Run:

./deploy.sh $LICENSE_KEY $environment_overlay $cluster_context # deploys on mgmt cluster by default if no input

The script will prompt you for any input that is not provided.

You can configure the parameters used by the script in vars.txt. This is particularly useful if you want to test an alternate repo branch or if you fork this repo.

LICENSE_KEY=${1:-""}

environment_overlay=${2:-""} # prod, dev, base

cluster_context=${3:-mgmt}

github_username=${4:-ably77}

repo_name=${5:-aoa-mgmt}

target_branch=${6:-HEAD}

Note:

- Although you may change the contexts where apps are deployed as described above, the Gloo Mesh and Istio cluster names will remain stable references (i.e. `mgmt`, `cluster1`, and `cluster2`)
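The defaults shown in vars.txt rely on the shell's `${N:-default}` parameter expansion: an argument that is missing or empty falls back to the value after `:-`. The sketch below wraps the same assignments in a function to show how that resolves. The `resolve_vars` and `prompt_if_empty` helpers are hypothetical names used for illustration and are not taken from deploy.sh, whose actual prompting logic may differ.

```shell
# Sketch only: mirrors the ${N:-default} pattern from vars.txt.
resolve_vars() {
  LICENSE_KEY=${1:-""}
  environment_overlay=${2:-""}   # prod, dev, base
  cluster_context=${3:-mgmt}
  github_username=${4:-ably77}
  repo_name=${5:-aoa-mgmt}
  target_branch=${6:-HEAD}
}

# Hypothetical helper: echo the value to use, prompting only when the
# current value is empty and stdin is an interactive terminal.
prompt_if_empty() {
  if [ -z "$1" ] && [ -t 0 ]; then
    printf '%s: ' "$2" >&2
    read -r reply
    printf '%s\n' "$reply"
  else
    printf '%s\n' "$1"
  fi
}

# Only the overlay is supplied; everything else falls back to its default.
resolve_vars "" dev
echo "overlay=$environment_overlay context=$cluster_context branch=$target_branch"
# prints: overlay=dev context=mgmt branch=HEAD
```

A caller could then fill a missing value with something like `LICENSE_KEY=$(prompt_if_empty "$LICENSE_KEY" "License key")`.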

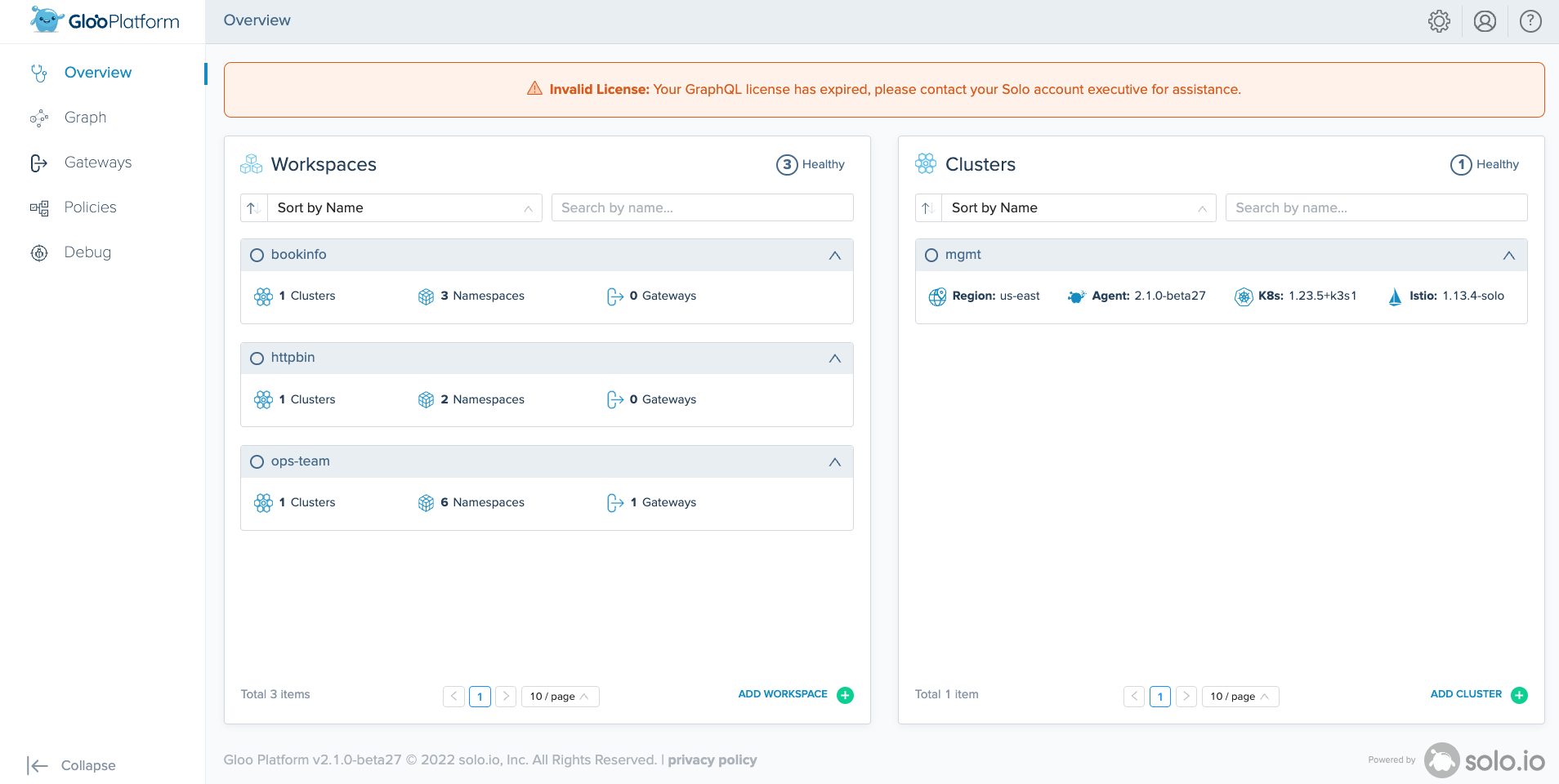

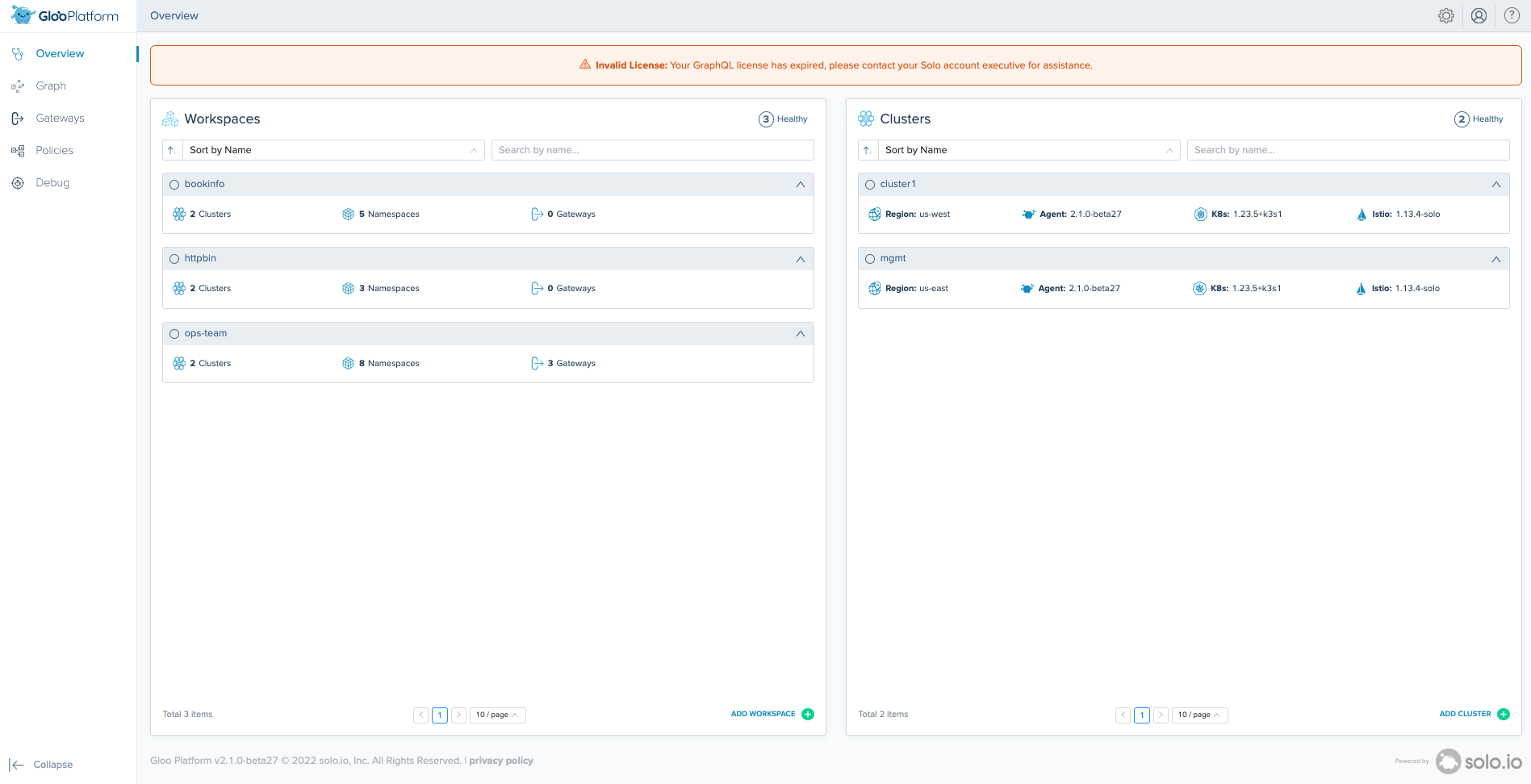

Once the installation is complete, you should be able to navigate to your LoadBalancer address to access Argo CD and the Gloo Mesh UI. The bookinfo, httpbin, and ops-team workspaces have already been created, and the mgmt cluster and agent are already registered.

This setup is configured to expose the Gloo Mesh UI with Gloo Gateway using a wildcard host '*'. This means that with the default service type of LoadBalancer, the UI is served at the address of the load balancer generated for the Istio ingressgateway.

To access the Gloo Mesh UI you can go to https://<istio-gateway-address>/gmui

You can also always use port-forward:

kubectl port-forward -n gloo-mesh svc/gloo-mesh-ui 8090

This setup is configured to expose Argo CD using a wildcard host '*' at the endpoint /argo. This means that with the default service type of LoadBalancer, Argo CD is served at the address of the load balancer generated for the Istio ingressgateway.

To access the Argo CD UI you can go to https://<istio-gateway-address>/argo

Log in to Argo CD with: Username: admin Password: solo.io

Alternatively, access the Argo CD dashboard with port-forward: kubectl port-forward svc/argocd-server -n argocd 9999:443 --context mgmt

Then navigate to http://localhost:9999/argo in your browser.

Username: admin Password: solo.io
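As a rough sketch of how the load balancer URLs described above might be derived from the cluster, the helpers below look up the ingress gateway address and print both UI endpoints. The namespace `istio-gateways` and service name `istio-ingressgateway` are assumptions and may not match this repo's manifests, and `gateway_address` and `ui_urls` are hypothetical helper names.

```shell
# Assumed defaults; override them to match your installation.
GATEWAY_NAMESPACE=${GATEWAY_NAMESPACE:-istio-gateways}
GATEWAY_SERVICE=${GATEWAY_SERVICE:-istio-ingressgateway}

# Print the external IP or hostname of the gateway's load balancer.
# $1 is the kubectl context (defaults to mgmt). Requires cluster access.
gateway_address() {
  kubectl get svc "$GATEWAY_SERVICE" -n "$GATEWAY_NAMESPACE" \
    --context "${1:-mgmt}" \
    -o jsonpath='{.status.loadBalancer.ingress[0].*}'
}

# Print the two UI endpoints for a given gateway address.
ui_urls() {
  printf 'Gloo Mesh UI: https://%s/gmui\n' "$1"
  printf 'Argo CD UI:   https://%s/argo\n' "$1"
}
```

With access to the cluster, `ui_urls "$(gateway_address mgmt)"` would print the addresses to open in a browser.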

At this point, the base setup for a single-cluster mesh is complete. You can now deploy your own workloads in application namespaces and create Gloo Mesh CRDs and policies manually, or, if you have forked this repo, you can push those updates into Git and let Argo CD sync the changes for you.

As noted above, this repo is meant to be deployed along with the following repos to create the entire multi-cluster architecture. When successfully deployed together, you will see that the Gloo Mesh Agent will register itself to the control plane and the bookinfo and httpbin workloads that exist on cluster1 and cluster2 (if also deployed) will be routable destinations in the mesh.

The app-of-apps pattern uses a generic Argo Application to sync all manifests in a particular Git directory, rather than pointing directly to a Kustomize, YAML, or Helm configuration. Anything pushed into the environment/<overlay>/active directory is deployed by its corresponding app-of-apps.
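To make the pattern concrete, here is a minimal sketch that renders the kind of parent Argo CD Application described above, pointing at an `environment/<overlay>/active` directory. The function name `render_app_of_apps`, the Application name, the `default` project, and the sync policy are illustrative assumptions; the manifests actually used in this repo may differ.

```shell
# Sketch only: print a parent Application that syncs one overlay directory.
# Arguments: overlay, github_username, repo_name, target_branch
render_app_of_apps() {
  overlay=$1
  github_username=${2:-ably77}
  repo_name=${3:-aoa-mgmt}
  target_branch=${4:-HEAD}
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: app-of-apps-${overlay}   # hypothetical name
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://github.com/${github_username}/${repo_name}
    targetRevision: ${target_branch}
    path: environment/${overlay}/active
  destination:
    server: https://kubernetes.default.svc
  syncPolicy:
    automated:
      prune: true      # assumed: remove resources deleted from Git
      selfHeal: true   # assumed: revert manual drift
EOF
}

render_app_of_apps dev
```

The output could be piped to `kubectl apply -f -` against the cluster where Argo CD runs. Because the Application only names a directory, any child Application added to that directory would be picked up on the next sync.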

If you are curious to learn more about the pattern, Christian Hernandez from CodeFresh has a solid blog post describing at a high level the pattern I'm using in this repo (https://codefresh.io/blog/argo-cd-application-dependencies/).

Fork this repo and replace the github_username, repo_name, and target_branch variables in vars.txt with your own.

From there, you should be able to deploy and sync the corresponding environment waves as-is from your forked repo, or push new changes to it.