DrugGEN: Target Specific De Novo Design of Drug Candidate Molecules with Graph Transformer-based Generative Adversarial Networks

Check out our paper below for more details

DrugGEN: Target Centric De Novo Design of Drug Candidate Molecules with Graph Generative Deep Adversarial Networks,

Atabey Ünlü, Elif Çevrim, Ahmet Sarıgün, Heval Ataş, Altay Koyaş, Hayriye Çelikbilek, Deniz Cansen Kahraman, Abdurrahman Olğaç, Ahmet S. Rifaioğlu, Tunca Doğan

arXiv, 2023

This implementation:

- has the demo and training code for DrugGEN,

- can design de novo drugs based on their protein interactions,

[Figure panels: First Generator | Second Generator]

[Figure panels: ChEMBL-25 | ChEMBL-45]

We provide the implementation of DrugGEN, along with scripts from the PyTorch Geometric framework to generate the datasets and run the model. The repository is organised as follows:

data contains:

- Raw dataset files, which should be text files containing SMILES strings only. Raw datasets should preferably not contain stereoisomeric SMILES, to prevent hydrogen atoms from being included in the final graph data.

- Constructed graph datasets (.pt) will be saved in this folder along with atom and bond encoder/decoder files (.pk).
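As a rough illustration of the recommendation above, the sketch below drops SMILES strings that carry stereochemistry markers before they reach the graph construction step. It is a plain-text heuristic and not part of DrugGEN: the function names are hypothetical, and it assumes that `@` (tetrahedral chirality) and `/` or `\` (double-bond geometry) are the only stereo markers of interest.

```python
# Characters that encode stereochemistry in SMILES notation.
STEREO_MARKERS = ("@", "/", "\\")


def has_stereo_markers(smiles: str) -> bool:
    """Return True if the SMILES string contains chirality or cis/trans markers."""
    return any(marker in smiles for marker in STEREO_MARKERS)


def filter_non_stereo(lines):
    """Keep non-empty SMILES lines that carry no stereo markers (hypothetical helper)."""
    kept = []
    for line in lines:
        smiles = line.strip()
        if smiles and not has_stereo_markers(smiles):
            kept.append(smiles)
    return kept


raw = ["CCO", "C[C@H](N)C(=O)O", "F/C=C/F", "", "c1ccccc1"]
clean = filter_non_stereo(raw)
print(clean)
```

A toolkit-based approach that rewrites the molecule without stereo information would retain more of the data; the filter above simply discards such entries.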

experiments contains:

- `logs` folder. Model loss and performance metrics will be saved in this directory in separate files for each model.

- `tboard_output` folder. TensorBoard files will be saved here if TensorBoard is used.

- `models` folder. Models will be saved in this directory at the last or preferred steps.

- `samples` folder. Molecule samples will be saved in this folder.

- `inference` folder. Molecules generated in inference mode will be saved in this folder.

Python scripts:

- `layers.py` contains transformer encoder and transformer decoder implementations.

- `main.py` contains arguments and this file is used to run the model.

- `models.py` has the implementation of the Generators and Discriminators which are used in GAN1 and GAN2.

- `new_dataloader.py` constructs the graph dataset from given raw data. Uses PyG based data classes.

- `trainer.py` is the training and testing file for the model. Workflow is constructed in this file.

- `utils.py` contains performance metrics from several other papers and some unique implementations. (De Cao et al., 2018; Polykovskiy et al., 2020)

Three different data types (i.e., compound, protein, and bioactivity) were retrieved from various data sources to train our deep generative models. The GAN1 module requires only compound data, while GAN2 requires all three data types.

- Compound data includes atomic, physicochemical, and structural properties of real drug and drug candidate molecules. The ChEMBL v29 compound dataset was used for the GAN1 module. It consists of 1,588,865 stable organic molecules with a maximum of 45 atoms, containing C, O, N, F, Ca, K, Br, B, S, P, Cl, and As heavy atoms.

- Protein data was retrieved from the Protein Data Bank (PDB) in biological assembly format, and the coordinates of protein-ligand complexes were used to construct the binding sites of proteins from the bioassembly data. The atoms of protein residues within a maximum distance of 9 Å from all ligand atoms were recorded as binding sites. GAN2 was trained to generate compounds specific to the target protein AKT1, which is a member of the serine/threonine-protein kinases and is involved in many cancer-associated cellular processes including metabolism, proliferation, cell survival, growth, and angiogenesis. The binding site of the human AKT1 protein was generated from the kinase domain (PDB: 4GV1).

- Bioactivity data for the AKT target protein was retrieved from the large-scale ChEMBL bioactivity database. It contains ligand interactions of the human AKT1 (CHEMBL4282) protein with a pChEMBL value equal to or greater than 6 (IC50 <= 1 µM), as well as SMILES information for these ligands. The dataset was extended by including drug molecules from the DrugBank database known to interact with human AKT proteins. In total, 1,600 bioactivity data points were obtained for training the AKT-specific generative model.
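The correspondence between the pChEMBL cutoff and the IC50 threshold stated above follows from the definition of pChEMBL as the negative base-10 logarithm of the molar activity value. A minimal worked example, with hypothetical function names:

```python
import math


def pchembl_from_molar(value_molar: float) -> float:
    """pChEMBL = -log10(activity in mol/L), e.g. for an IC50 value."""
    if value_molar <= 0:
        raise ValueError("activity value must be positive")
    return -math.log10(value_molar)


def is_active(ic50_micromolar: float, cutoff: float = 6.0) -> bool:
    """Apply the pChEMBL >= cutoff rule to an IC50 given in micromolar."""
    # Small tolerance so that exactly 1 µM is not lost to rounding error.
    return pchembl_from_molar(ic50_micromolar * 1e-6) >= cutoff - 1e-9


for ic50 in (0.1, 1.0, 10.0):
    print(ic50, "µM ->", round(pchembl_from_molar(ic50 * 1e-6), 3), is_active(ic50))
```

An IC50 of 1 µM is 1e-6 mol/L, which gives a pChEMBL of 6, so ligands at or below 1 µM pass the cutoff.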

More details on the construction of datasets can be found in our paper referenced above.
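The binding-site definition used for the protein data (protein atoms within 9 Å of the ligand) amounts to a simple distance filter. The sketch below illustrates it on toy coordinates. It is not the authors' pipeline: the function name and the input format (lists of `(x, y, z)` tuples in Å) are assumptions, and it reads the criterion as "within 9 Å of at least one ligand atom".

```python
import math


def binding_site_indices(protein_atoms, ligand_atoms, cutoff=9.0):
    """Return indices of protein atoms within `cutoff` Å of at least one ligand atom."""
    selected = []
    for index, protein_xyz in enumerate(protein_atoms):
        if any(math.dist(protein_xyz, ligand_xyz) <= cutoff for ligand_xyz in ligand_atoms):
            selected.append(index)
    return selected


# Toy coordinates in Å, made up for illustration.
protein = [(0.0, 0.0, 0.0), (8.0, 0.0, 0.0), (20.0, 0.0, 0.0)]
ligand = [(1.0, 0.0, 0.0), (2.0, 1.0, 0.0)]
site = binding_site_indices(protein, ligand)
print(site)
```

For real structures, a spatial index such as a k-d tree would avoid the quadratic loop.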

- 15/02/2023: Our paper is shared here together with its supplementary material document link.

- 01/01/2023: First version script of DrugGEN is released.

DrugGEN has been implemented and tested on Ubuntu 18.04 with Python >= 3.9. It supports both GPU and CPU inference.

Clone the repo:

git clone https://github.com/asarigun/DrugGEN.git

You can set up the environment using either conda or pip.

Using conda:

# set up the environment (installs the requirements):

conda env create -f DrugGEN/dependencies.yml

# activate the environment:

conda activate druggen

Using pip with a virtual environment:

python -m venv DrugGEN/.venv

source DrugGEN/.venv/bin/activate

pip install -r DrugGEN/requirements.txt

# Download the raw files

cd DrugGEN/data

bash dataset_download.sh

# DrugGEN can be trained with a one-liner

python DrugGEN/main.py --submodel="CrossLoss" --mode="train" --raw_file="DrugGEN/data/chembl_train.smi" --dataset_file="chembl45_train.pt" --drug_raw_file="DrugGEN/data/akt_train.smi" --drug_dataset_file="drugs_train.pt" --max_atom=45

Please find the arguments in the main.py file. Explanation of the commands can be found below.
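The training command above passes its options as `--name=value` pairs. The sketch below shows how such flags can be parsed with `argparse`. It covers only a handful of the options named in this README, declares no defaults, and is not a copy of `main.py`; the types chosen here are assumptions, and the actual types and defaults should be checked in the repository.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build a parser for an illustrative subset of the documented flags."""
    parser = argparse.ArgumentParser(description="Illustrative subset of DrugGEN flags")
    parser.add_argument("--submodel", type=str, help="Submodel to train")
    parser.add_argument("--mode", type=str, choices=["train", "inference"])
    parser.add_argument("--raw_file", type=str, help="Raw SMILES file")
    parser.add_argument("--dataset_file", type=str, help="Name of the graph dataset file")
    parser.add_argument("--max_atom", type=int, help="Maximum atom number for molecules")
    return parser


# Same values as in the training command, passed as a list instead of a shell line.
argv = [
    "--submodel=CrossLoss",
    "--mode=train",
    "--raw_file=DrugGEN/data/chembl_train.smi",
    "--dataset_file=chembl45_train.pt",
    "--max_atom=45",
]
args = build_parser().parse_args(argv)
print(args.submodel, args.mode, args.max_atom)
```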

Model arguments:

--submodel SUBMODEL Choose the submodel for training

--act ACT Activation function for the model

--z_dim Z_DIM Prior noise for the first GAN

--max_atom MAX_ATOM Maximum atom number for molecules (must be specified)

--lambda_gp LAMBDA_GP Gradient penalty lambda multiplier for the first GAN

--dim DIM Dimension of the Transformer models for both GANs

--depth DEPTH Depth of the Transformer model from the first GAN

--heads HEADS Number of heads for the MultiHeadAttention module from the first GAN

--dec_depth DEC_DEPTH Depth of the Transformer model from the second GAN

--dec_heads DEC_HEADS Number of heads for the MultiHeadAttention module from the second GAN

--mlp_ratio MLP_RATIO MLP ratio for the Transformers

--dis_select DIS_SELECT Select the discriminator for the first and second GAN

--init_type INIT_TYPE Initialization type for the model

--dropout DROPOUT Dropout rate for the encoder

--dec_dropout DEC_DROPOUT Dropout rate for the decoder

Training arguments:

--batch_size BATCH_SIZE Batch size for the training

--epoch EPOCH Epoch number for Training

--warm_up_steps Warm up steps for the first GAN

--g_lr G_LR Learning rate for G

--g2_lr G2_LR Learning rate for G2

--d_lr D_LR Learning rate for D

--d2_lr D2_LR Learning rate for D2

--n_critic N_CRITIC Number of D updates per each G update

--beta1 BETA1 Beta1 for Adam optimizer

--beta2 BETA2 Beta2 for Adam optimizer

--clipping_value Clipping value for the gradient clipping process

--resume_iters Resume training from this step for fine tuning if desired
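To make `--n_critic` concrete: in WGAN-style training the discriminator is typically updated several times for each generator update. The sketch below only counts updates under that assumption; the step logic in `trainer.py` may differ, and the function name is hypothetical.

```python
def update_schedule(total_steps: int, n_critic: int):
    """Return (discriminator_updates, generator_updates) for a simple schedule.

    Assumption: the discriminator is updated at every step and the generator
    only at every `n_critic`-th step.
    """
    if n_critic < 1:
        raise ValueError("n_critic must be >= 1")
    d_updates = 0
    g_updates = 0
    for step in range(1, total_steps + 1):
        d_updates += 1  # discriminator update happens on every step
        if step % n_critic == 0:
            g_updates += 1  # generator update only on every n_critic-th step
    return d_updates, g_updates


print(update_schedule(total_steps=10, n_critic=5))
```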

Dataset arguments:

--features FEATURES Additional node features (Boolean) (Please check new_dataloader.py Line 102)

- First, download the weights of the chosen trained model from trained models, and place it in the folder: "DrugGEN/experiments/models/".

- After that, please run the code below:

python DrugGEN/main.py --submodel="{Chosen model name}" --mode="inference" --inference_model="DrugGEN/experiments/models/{Chosen model name}"

- SMILES representation of the generated molecules will be saved into the file: "DrugGEN/experiments/inference/{Chosen model name}/denovo_molecules.txt".
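Once inference has finished, the output file can be inspected with a few lines of Python. The sketch below assumes one SMILES string per line, which this README does not state explicitly, and uses a temporary file in place of the real `denovo_molecules.txt` so that it runs standalone. The helper name is hypothetical.

```python
import os
import tempfile


def load_unique_smiles(path: str):
    """Read one SMILES per line, skip blank lines, and drop duplicates in order."""
    seen = set()
    unique = []
    with open(path, encoding="utf-8") as handle:
        for line in handle:
            smiles = line.strip()
            if smiles and smiles not in seen:
                seen.add(smiles)
                unique.append(smiles)
    return unique


# Stand-in for DrugGEN/experiments/inference/{Chosen model name}/denovo_molecules.txt
with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as tmp:
    tmp.write("CCO\nc1ccccc1\n\nCCO\n")
    demo_path = tmp.name

molecules = load_unique_smiles(demo_path)
os.remove(demo_path)
print(len(molecules), molecules)
```

Exact string matching treats different SMILES spellings of the same molecule as distinct, so a canonicalisation step would be needed for a true uniqueness count.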

- SMILES notations of 50,000 de novo generated molecules from DrugGEN models (10,000 from each) can be downloaded from here.

- We first filtered the 50,000 de novo generated molecules by applying Lipinski, Veber and PAINS filters; and 43,000 of them remained in our dataset after this operation (SMILES notations of filtered de novo molecules).

- We ran our deep learning-based drug/compound-target protein interaction prediction system DEEPScreen on the 43,000 filtered molecules. DEEPScreen predicted 18,000 of them as active against AKT1, 301 of which received high confidence scores (> 80%) (SMILES notations of DEEPScreen predicted actives).

- At the same time, we performed a molecular docking analysis on these 43,000 filtered de novo molecules against the crystal structure of AKT1, and found that 118 of them had sufficiently low binding free energies (< -9 kcal/mol) (SMILES notations of de novo molecules with low binding free energies).

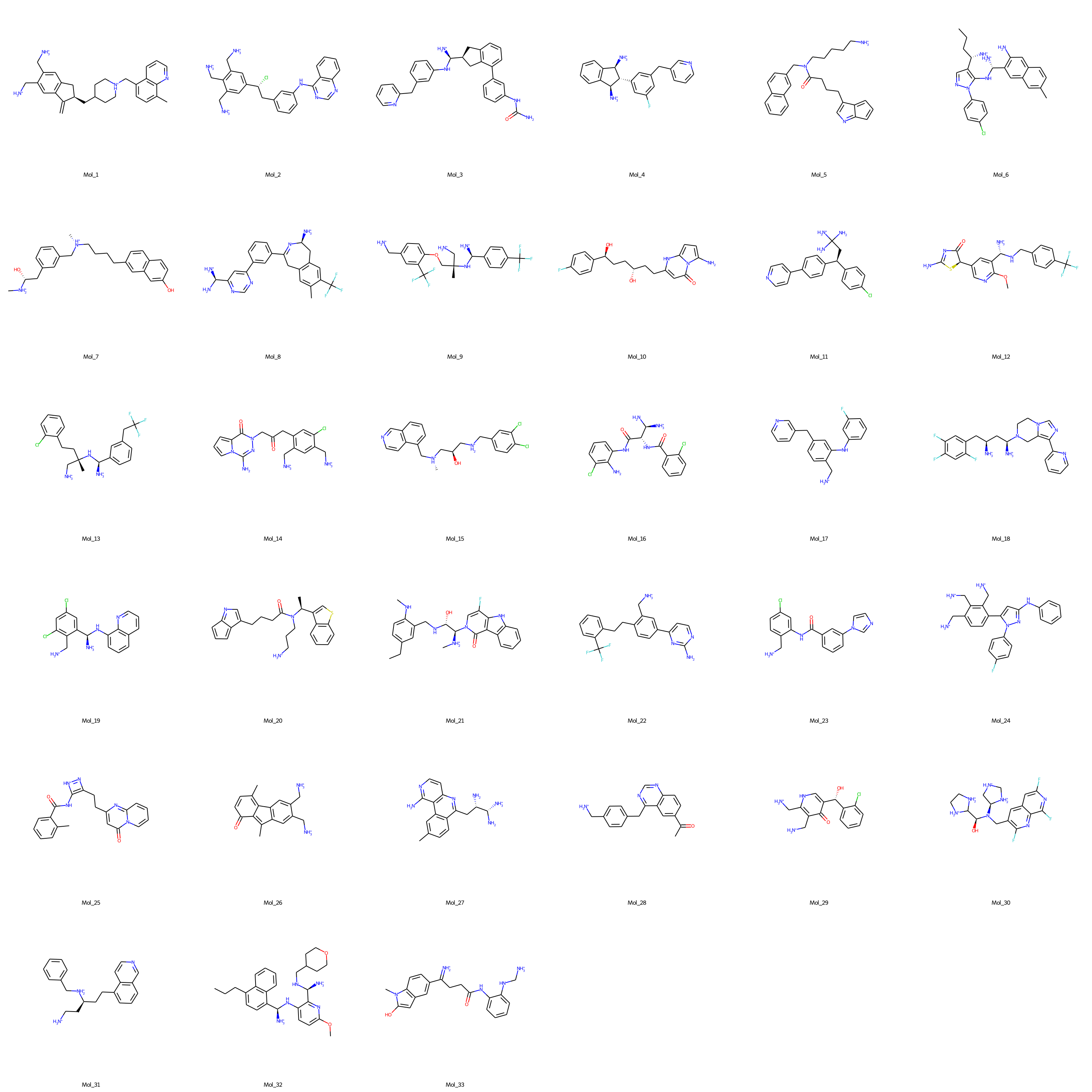

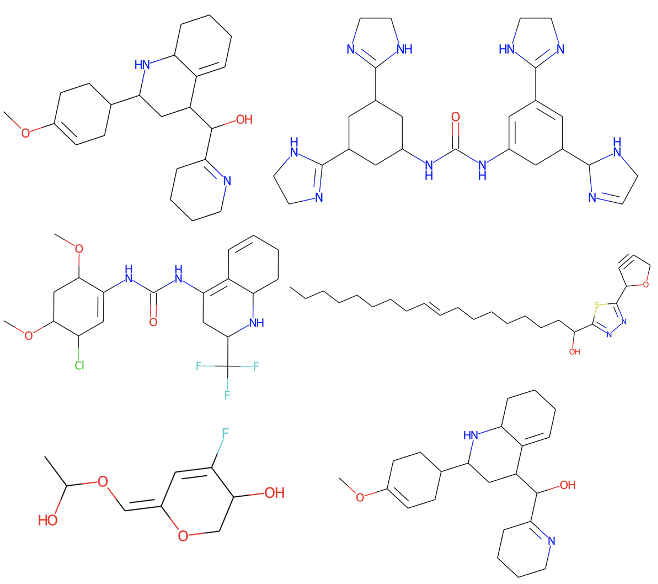

- Finally, de novo molecules to effectively target AKT1 protein are selected via expert curation from the dataset of molecules with binding free energies lower than -9 kcal/mol. The structural representations of the selected molecules are shown in the figure below (SMILES notations of the expert selected de novo AKT1 inhibitor molecules).
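The filtering cascade described above can be illustrated with precomputed descriptors. The sketch below applies the commonly cited Lipinski criteria (molecular weight <= 500 Da, logP <= 5, at most 5 hydrogen-bond donors and 10 acceptors) and Veber criteria (at most 10 rotatable bonds, polar surface area <= 140 Å²), followed by the -9 kcal/mol docking cutoff. It does not reproduce the authors' scripts: the dictionary keys and example values are made up for illustration, the PAINS substructure filter is omitted, and allowing zero Lipinski violations is an assumption.

```python
def passes_lipinski(mol: dict) -> bool:
    """Lipinski rule of five, with no violations allowed (assumption)."""
    return (
        mol["mol_weight"] <= 500
        and mol["logp"] <= 5
        and mol["h_donors"] <= 5
        and mol["h_acceptors"] <= 10
    )


def passes_veber(mol: dict) -> bool:
    """Veber criteria on rotatable bonds and topological polar surface area."""
    return mol["rotatable_bonds"] <= 10 and mol["tpsa"] <= 140


def select_candidates(mols, energy_cutoff=-9.0):
    """Names of molecules passing both filters with docking energy below the cutoff."""
    return [
        m["name"]
        for m in mols
        if passes_lipinski(m) and passes_veber(m) and m["docking_energy"] < energy_cutoff
    ]


# Hypothetical descriptor records; none of these values come from the DrugGEN results.
examples = [
    {"name": "mol_a", "mol_weight": 350, "logp": 2.5, "h_donors": 2, "h_acceptors": 5,
     "rotatable_bonds": 4, "tpsa": 80, "docking_energy": -9.6},
    {"name": "mol_b", "mol_weight": 620, "logp": 3.0, "h_donors": 1, "h_acceptors": 6,
     "rotatable_bonds": 5, "tpsa": 90, "docking_energy": -10.2},
    {"name": "mol_c", "mol_weight": 410, "logp": 4.1, "h_donors": 3, "h_acceptors": 7,
     "rotatable_bonds": 6, "tpsa": 110, "docking_energy": -7.2},
    {"name": "mol_d", "mol_weight": 430, "logp": 3.3, "h_donors": 2, "h_acceptors": 8,
     "rotatable_bonds": 12, "tpsa": 100, "docking_energy": -10.0},
]
selected = select_candidates(examples)
print(selected)
```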

@article{unlu2023target,

title={Target Specific De Novo Design of Drug Candidate Molecules with Graph Transformer-based Generative Adversarial Networks},

author={{\"U}nl{\"u}, Atabey and {\c{C}}evrim, Elif and Sar{\i}g{\"u}n, Ahmet and {\c{C}}elikbilek, Hayriye and G{\"u}venilir, Heval Ata{\c{s}} and Koya{\c{s}}, Altay and Kahraman, Deniz Cansen and Ol{\u{g}}a{\c{c}}, Abdurrahman and Rifaio{\u{g}}lu, Ahmet and Dogan, Tunca},

journal={arXiv preprint arXiv:2302.07868},

year={2023}

}

This code is available for non-commercial scientific research purposes as will be defined in the LICENSE file. By downloading and using this code you agree to the terms in the LICENSE. Third-party datasets and software are subject to their respective licenses.

In each file, we indicate whether a function or script is imported from another source. Here are some excellent sources from which we benefit:

- The first GAN is borrowed from MolGAN.

- MOSES was used for performance calculation (MOSES scripts are directly embedded in our code due to current installation issues related to the MOSES repo).

- The Graph Transformer architecture of the generators was inspired by Dwivedi & Bresson, 2020.

- Several PyTorch Geometric scripts were used to generate the datasets.

- Graph Transformer Encoder architecture was taken from Dwivedi & Bresson (2021) and Vignac et al. (2022) and modified.