Scalable training of Transformer-XL

Extending Transformer-XL for distributed training (see the accompanying Medium post).

One machine

Reproduce 24.617 test perplexity on wikitext-103 in 2 hours 54 minutes on one machine with a 180M-parameter Transformer-XL (internal logs: east/txl.03).

aws configure

pip install -r requirements.txt

python launch.py --config=one_machine

The command above reserves a p3dn instance, sets up dependencies, and starts training. To see what it is doing, do one of the following:

- Connect to the instance using ssh and attach to the tmux session (ncluster mosh does this automatically).
- Call launch_tensorboard.py to spin up a TensorBoard instance and look at the latest graphs.
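To relate the 24.617 perplexity target to the loss values shown in the logs and TensorBoard graphs, recall the standard definition: perplexity is the exponential of the mean per-token negative log-likelihood (cross-entropy). The sketch below assumes the logged loss is measured in nats (natural logarithm); the helper names perplexity and mean_nll are illustrative and are not part of this repo.

```python
import math


def perplexity(mean_nll_nats: float) -> float:
    """Perplexity = exp(mean per-token negative log-likelihood, in nats)."""
    return math.exp(mean_nll_nats)


def mean_nll(ppl: float) -> float:
    """Inverse mapping: the mean per-token loss (nats) that gives this perplexity."""
    return math.log(ppl)


if __name__ == "__main__":
    # Assumption: loss is logged in nats, so the target loss is ln(24.617).
    target_loss = mean_nll(24.617)
    print(f"target loss = {target_loss:.3f} nats/token")
    print(f"round trip  = {perplexity(target_loss):.3f}")
```

Under that assumption, reaching 24.617 test perplexity corresponds to a mean test loss of about 3.203 nats per token.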

Several machines

Reproduce 90 seconds per epoch with the 180M-parameter Transformer-XL on 8 machines.

python launch.py --config=eight_machines

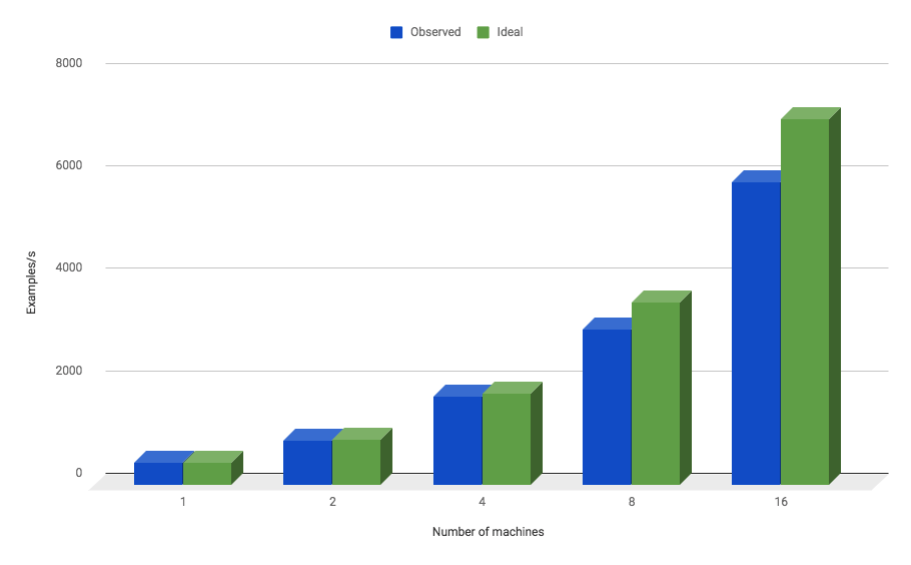

Throughput tests

Training throughput for a 277M-parameter Transformer-XL:

python launch.py --config=test_1 # 446 examples/sec

python launch.py --config=test_2 # 860 examples/sec

python launch.py --config=test_4 # 1736 examples/sec

python launch.py --config=test_8 # 3031 examples/sec

python launch.py --config=test_16 # 5904 examples/sec
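One way to read these numbers is as scaling efficiency: the speedup over the single-node run divided by the node count. The sketch below assumes that the suffix N in each test_N config name is the number of machines (the config names do not state this explicitly); the function name scaling_efficiency is hypothetical and not part of launch.py.

```python
from typing import Dict, Tuple

# Examples/sec reported above, keyed by N from the test_N config name.
# Assumption: N is the number of machines used by that config.
THROUGHPUT = {1: 446, 2: 860, 4: 1736, 8: 3031, 16: 5904}


def scaling_efficiency(throughput: Dict[int, float]) -> Dict[int, Tuple[float, float]]:
    """Return {n: (speedup, efficiency)} relative to the n=1 baseline.

    speedup    = throughput[n] / throughput[1]
    efficiency = speedup / n  (1.0 means perfect linear scaling)
    """
    base = throughput[1]
    result = {}
    for n, rate in sorted(throughput.items()):
        speedup = rate / base
        result[n] = (speedup, speedup / n)
    return result


if __name__ == "__main__":
    for n, (speedup, eff) in scaling_efficiency(THROUGHPUT).items():
        print(f"{n:>2} machines: {speedup:5.2f}x speedup, {eff:6.1%} efficiency")
```

Under that assumption, efficiency stays at about 96% to 97% up to 4 machines and drops to roughly 85% at 8 machines and 83% at 16.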

Locally on single GPU

Follow the instructions in the original Transformer-XL repo to download the data and put it under data/wikitext-103, then run:

bash single_machine.sh

Notes

- These experiments automatically launch at spot instance prices. If you prefer on-demand instances, add the --nospot flag to your launch.py command.
- By default, instances stop automatically 10 minutes after completion, or 60 minutes after an error is detected. You can stop instances manually with the ncluster stop command or terminate them with the ncluster kill command.
- Use the ncluster command-line tool to interact with your instances.

Text examples

Here's an example of text generated by a 300M-parameter Transformer-XL model after training for 3 epochs on Wikipedia.

Prompt

Ruchi Sanghvi (born 20 January 1982)[1][2] is an Indian computer engineer. She was the first female engineer hired by Facebook.[3][4] In late 2010, she quit Facebook and in 2011,[5] she started her own company Cove, with two other co-founders. The company was sold to Dropbox in 2012 and Sanghvi joined Dropbox as VP of Operations. She left Dropbox in October 2013.[6][7]

Transformer-XL completion

She was born in Allahabad City, Uttar Pradesh, India, on 15 February 1982, as the second daughter of a teacher's assistant (/Иноний акутию] (1910–1978) and an Urdu scholar mother ("née" Mukherjee). She has a younger sister, Kamya Tahir Ali, who is also a writer, and a younger brother, Mariam. Her father is a university lecturer, while her mother is a social worker.

When she was 15, her family moved to Allahabad, Uttar Pradesh, India. There she completed her matriculation from Allahabad Kings College and later earned a master's degree in Economics from the University of Allahabad. She joined Facebook in 2011 and started working on her computers as a researcher to help her study hard technology and IT. Following a short-term stint on the City's Speaking Platform, she joined LinkedIn in 2014.

She has also worked as a researcher and teacher in mathematics and science.

Ruchi has not yet decided how to work with her husband. She has stated that she was looking for work that would help her family. Her husband is now an IT professor at University of Allahabad and her daughter is now a computer programmer.

She joined Facebook in 2013.

In December 2013, Sanghvi's mother, Kamya Tahir Ali, died at the age of 88. She had previously worked with her mother in various jobs, including working in engineering and computer science.

In December 2013, Sanghvi was approached to join Facebook. She said that the decision to leave Facebook was an attempt to pay her legal fees, and that she wanted to spend the time with her family but that she was unable to do so, and that the decision was not supported by Facebook. She also said that she wanted to re-focus her studies on computing.