This repository documents experiments with siamese/triplet autoencoders and siamese variational autoencoders. The goal of these experiments was to learn interesting, semantically meaningful low-dimensional representations of the data by:

- Using the self-supervised properties of an autoencoder (variational or otherwise) to generate a low-dimensional latent representation of the data

- Refining the obtained latent representation using contrastive methods on groups of points sampled from the latent space and presented as either pairwise or triplet constraints.

For reference, pairwise constraints are tuples of the form (A, B) in which data points A and B are labeled as either "similar" or "dissimilar", while triplet constraints are tuples of the form (A, B, C) in which B is more similar to A than C is.
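How constraints are drawn from a batch is not spelled out here. A minimal sketch, assuming two points count as "similar" exactly when they share a class label; `make_pairs` and `make_triplets` are hypothetical helpers, not functions from this repository:

```python
import random
from itertools import combinations


def make_pairs(labels):
    """Return (i, j, is_similar) for every unordered pair of indices in a batch.

    Assumption: two points are "similar" iff they share a class label.
    """
    return [(i, j, labels[i] == labels[j])
            for i, j in combinations(range(len(labels)), 2)]


def make_triplets(labels, rng=random):
    """Return (anchor, positive, negative) index triplets.

    Every anchor that has at least one other same-label point and at least one
    different-label point gets one randomly chosen positive and negative.
    """
    triplets = []
    for a, label in enumerate(labels):
        positives = [i for i, l in enumerate(labels) if l == label and i != a]
        negatives = [i for i, l in enumerate(labels) if l != label]
        if positives and negatives:
            triplets.append((a, rng.choice(positives), rng.choice(negatives)))
    return triplets


batch_labels = [0, 1, 0, 2, 1]
print(make_pairs(batch_labels)[:3])  # [(0, 1, False), (0, 2, True), (0, 3, False)]
print(make_triplets(batch_labels, random.Random(0)))
```

Enumerating all pairs grows quadratically with the batch size, which is one reason pair/triplet generation is typically done per batch rather than over the whole dataset.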

Contrastive methods are a popular way to learn semantically meaningful embeddings of high-dimensional data. Intuitively, any reasonable embedding should place similar points close together and dissimilar points far apart. Contrastive methods build on this intuition by:

- penalizing large distances between points tagged a priori as similar

- penalizing small distances (i.e. distances less than some margin) between points tagged a priori as dissimilar.
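The two penalties above correspond to the standard margin-based contrastive loss. A minimal pure-Python sketch, assuming Euclidean distance in the latent space; the repository's own loss may differ in details such as squaring or batch averaging, and `contrastive_loss` is an illustrative name:

```python
import math


def euclidean(u, v):
    """Euclidean distance between two latent vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def contrastive_loss(z1, z2, is_similar, margin=1.0):
    """Contrastive loss for one pair of latent vectors.

    Similar pairs are penalized by their squared distance; dissimilar pairs
    are penalized only when they lie closer together than `margin`.
    """
    d = euclidean(z1, z2)
    if is_similar:
        return d ** 2
    return max(0.0, margin - d) ** 2


print(contrastive_loss([0.0, 0.0], [3.0, 4.0], True))                # 25.0
print(contrastive_loss([0.0, 0.0], [3.0, 4.0], False))               # 0.0
print(contrastive_loss([0.0, 0.0], [3.0, 4.0], False, margin=10.0))  # 25.0
```

Note that a similar pair reaches zero loss only at distance zero, which is exactly the collapse behavior discussed in the next paragraph.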

This approach is not without flaws. Most notably, blindly pulling "similar" points together erases intra-class variance, which may be something we want to preserve.

One possible remedy is to replace regular siamese/triplet networks with siamese/triplet autoencoders: the reconstruction constraint should help preserve the structure of the latent space, so that points within a class are not collapsed onto one another. Another option is siamese/triplet variational autoencoders, which have the additional benefit of imposing a Gaussian prior on the latent space.
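In a VAE, the Gaussian prior enters the objective through a KL-divergence term between the encoder's diagonal Gaussian posterior and a standard normal N(0, I). A minimal pure-Python sketch of that term and of the reparameterized sample, assuming the encoder outputs a mean `mu` and a log-variance `logvar` per latent dimension (illustrative names, not taken from this repository):

```python
import math
import random


def kl_to_standard_normal(mu, logvar):
    """Closed-form KL( N(mu, diag(exp(logvar))) || N(0, I) ) for one latent vector."""
    return 0.5 * sum(m ** 2 + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, logvar))


def reparameterize(mu, logvar, rng=random):
    """Reparameterization trick: z = mu + sigma * eps with eps ~ N(0, 1).

    In an autodiff framework this form lets gradients flow through mu and logvar.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


print(kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))  # 0.0 (posterior equals the prior)
print(kl_to_standard_normal([1.0, 0.0], [0.0, 0.0]))  # 0.5
print(reparameterize([0.0, 0.0], [0.0, 0.0], random.Random(0)))
```

The KL term pulls every encoded distribution toward the same prior, which is what gives the VAE latent space its roughly Gaussian shape.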

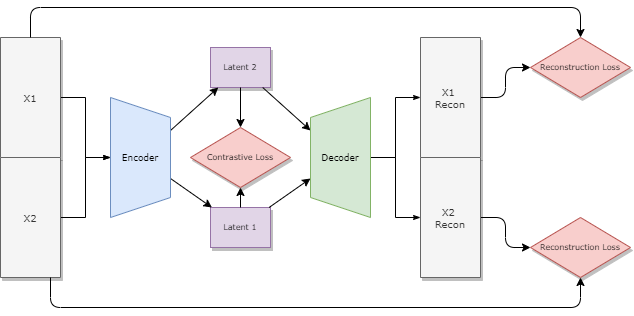

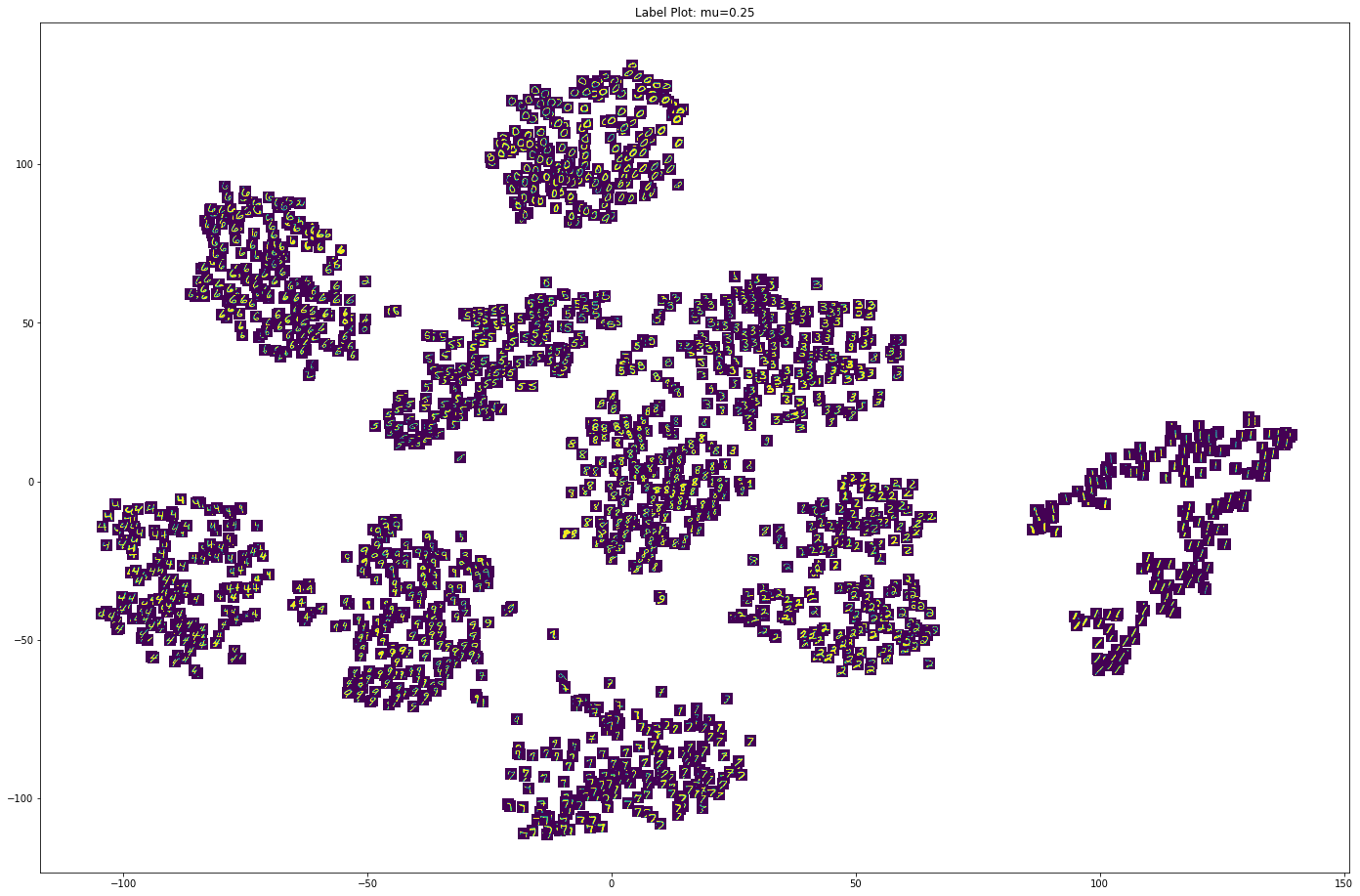

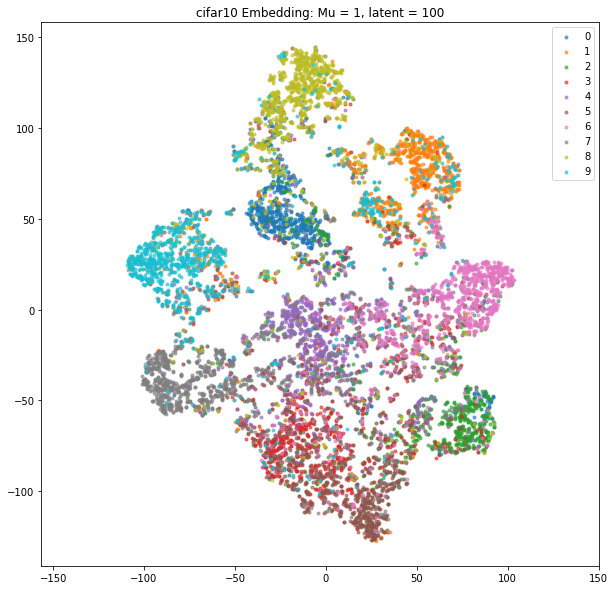

Overall, we simultaneously minimize two losses: the contrastive (or triplet) loss in the latent space and the reconstruction error. The two are balanced by a weighting parameter μ: as μ tends to 1, the model approaches a regular siamese/triplet network; as μ tends to 0, it approaches a regular autoencoder/VAE.
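The exact combination formula is not given here. A minimal sketch, assuming the weighting parameter enters as a convex combination, mu * L_metric + (1 - mu) * L_recon, which reproduces the limiting behavior just described. The triplet hinge loss and mean-squared reconstruction error are standard choices used for illustration, not necessarily the ones in this repository:

```python
import math


def euclidean(u, v):
    """Euclidean distance between two latent vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Hinge on d(a, p) - d(a, n) + margin; zero once the negative is `margin` farther than the positive."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


def mse(x, x_hat):
    """Mean squared reconstruction error for one input."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def combined_loss(metric_loss, recon_loss, mu):
    """Assumed convex combination: mu -> 1 is a pure siamese/triplet network, mu -> 0 a pure autoencoder."""
    if not 0.0 <= mu <= 1.0:
        raise ValueError("mu must lie in [0, 1]")
    return mu * metric_loss + (1.0 - mu) * recon_loss


print(triplet_loss([0.0, 0.0], [0.0, 1.0], [0.0, 1.5]))  # 0.5
print(combined_loss(0.5, mse([1.0, 2.0], [1.0, 4.0]), mu=0.75))  # 0.875
```

For the VAE variant, a KL-divergence term would typically be added on the autoencoder side of the combination.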

Figure 1: Pairwise network architecture. Together, the encoder/decoder components can form either a regular or a variational autoencoder.

An example can be found in `run.py`:

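```python
# positional args: constraint_type, encoder_type, dataset, list_of_weights, list_of_latent_dims
all_results = run_experiment("pairwise", "vae", "cifar10", [0.75], [3],
                             num_iters=10, batch_size=32, normalize=True,
                             feature_extractor='hog')
```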

Parameters:

- `constraint_type`: Constraint type. Accepts either `"pairwise"` or `"triplet"`.
- `encoder_type`: Network type. Accepts either `"embenc"` (regular autoencoder) or `"vae"`.
- `dataset`: Dataset name. Accepts `"mnist"`, `"cifar10"` and `"imagenet"`.
- `list_of_weights` (list): Weighting values (mu) for which the experiment is to be run.
- `list_of_latent_dims` (list): Latent dimensions for which the experiment is to be run.
- `batch_size`: Batch size from which pairs/triplets are generated. Defaults to 64.
- `keys`: Mapping from numeric labels to string label names, used for visualization. Defaults to None.
- `composite_labels`: Maps current labels to composite labels; used when multiple classes are to be merged.
- `margin`: Margin used for the metric loss. Defaults to 1.
- `dropout_val`: Dropout value for the networks. Defaults to 0.2.
- `num_iters`: Number of iterations. Defaults to 20000.
- `normalize`: Whether to normalize the data.
- `feature_extractor`: Type of feature extractor to use. Supports `'resnet'` (features extracted from a pre-trained ResNet50) and `'hog'` (histogram of oriented gradients).
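The exact format expected by `composite_labels` and `keys` is not documented here. As a hedged illustration, assuming both are plain dicts (original class index to merged class index, and numeric label to name), merging the ten CIFAR-10 classes into "vehicle" and "animal" might look like this; `apply_composite` is a hypothetical helper:

```python
# CIFAR-10 class names in their standard index order.
CIFAR10_NAMES = ["airplane", "automobile", "bird", "cat", "deer",
                 "dog", "frog", "horse", "ship", "truck"]
VEHICLES = {"airplane", "automobile", "ship", "truck"}

# Hypothetical format: original class index -> merged class index (0 = vehicle, 1 = animal).
composite_labels = {i: 0 if name in VEHICLES else 1 for i, name in enumerate(CIFAR10_NAMES)}
# Hypothetical format: merged numeric label -> display name for visualization.
keys = {0: "vehicle", 1: "animal"}


def apply_composite(labels, mapping):
    """Relabel a sequence of class indices using the composite mapping."""
    return [mapping[label] for label in labels]


print(apply_composite([0, 3, 8, 7], composite_labels))  # [0, 1, 0, 1]
```

After such a merge, a (cat, dog) pair would be generated as a similar pair rather than a dissimilar one, so the contrastive objective operates at the coarser level of the composite classes.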