This is an example based on SciPhi-AI/R2R-qna-rag-prebuilt.

The example can run in different modes:

- Local with pgvector

- Docker with pgvector (own infrastructure)

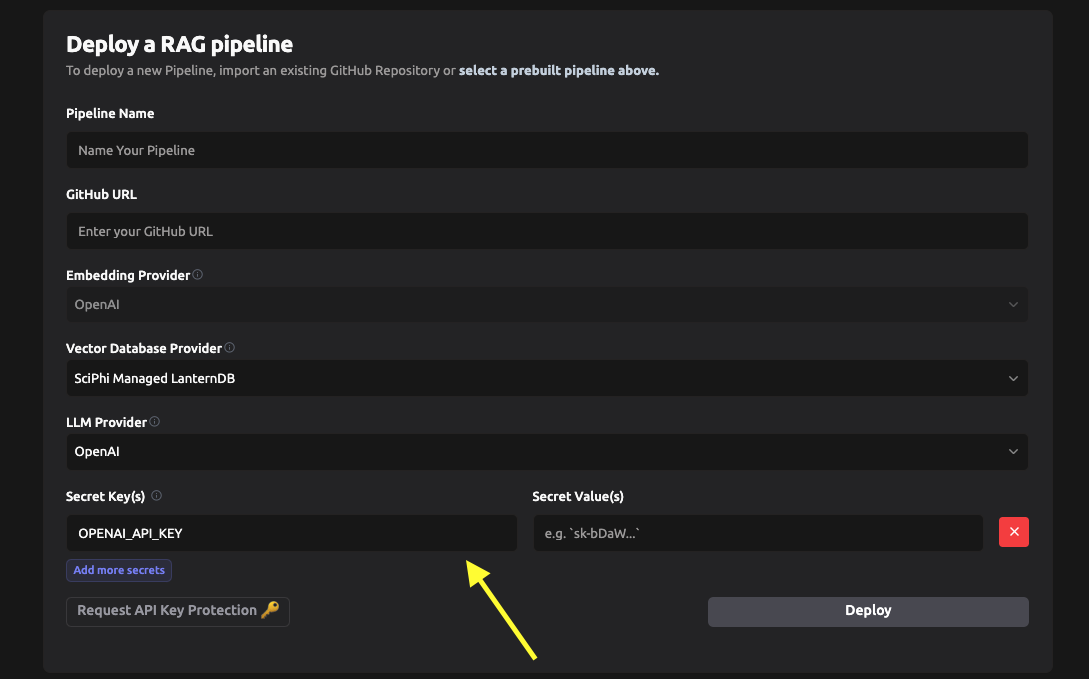

- SciPhi (SciPhi's own infrastructure)

Environment variables:

- OPENAI_API_KEY: API key from openai.com

- ANTHROPIC_API_KEY: API key from anthropic.com

- CONFIDENT_API_KEY: for Confident AI's deepeval prompt evaluations and monitoring. An alternative to this is Parea AI.
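A missing key usually only surfaces as a failed provider call, so it can help to check the environment before launching the app. The snippet below is a minimal sketch: `missing_keys` is a hypothetical helper, not part of R2R, and treating all three keys as mandatory is an assumption, since which ones a run actually needs depends on the chosen config.

```python
import os

# Keys listed above. Treating all of them as mandatory is an assumption:
# the config in use decides which providers are actually called.
REQUIRED_KEYS = ["OPENAI_API_KEY", "ANTHROPIC_API_KEY", "CONFIDENT_API_KEY"]


def missing_keys(env=None, required=REQUIRED_KEYS):
    """Return the names of required variables that are unset or empty (hypothetical helper)."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name)]
```

For example, calling `missing_keys()` at startup and exiting with the returned names when the list is non-empty gives a clearer error than a rejected API request.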

$ export OPENAI_API_KEY=xxxxxx

$ export ANTHROPIC_API_KEY=yyyyyy

$ export CONFIDENT_API_KEY=zzzzzz

Local with pgvector: uses the src/app-external.py application.

pgvector is a PostgreSQL extension for vector similarity search, which lets PostgreSQL serve as the vector database.
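For intuition about what the vector database does at query time: pgvector provides distance operators such as `<=>` (cosine distance), and a similarity search orders rows by that distance. The sketch below reproduces that ranking in plain Python. It is illustrative only; the `nearest` helper, the table and column names in its docstring, and the sample rows are hypothetical, and this is not how R2R issues its queries.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity, the quantity pgvector's <=> operator returns."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        # Cosine distance is undefined for zero vectors; this sketch rejects them.
        raise ValueError("cosine distance is undefined for zero vectors")
    dot = sum(x * y for x, y in zip(a, b))
    return 1.0 - dot / (norm_a * norm_b)


def nearest(query, rows, k=5):
    """Return the k (id, embedding) rows closest to query.

    Mirrors the shape of: SELECT id FROM items ORDER BY embedding <=> query LIMIT k
    (the table and column names are hypothetical).
    """
    return sorted(rows, key=lambda row: cosine_distance(query, row[1]))[:k]


# Hypothetical 2-dimensional embeddings; real ones are much larger.
rows = [("a", [0.0, 1.0]), ("b", [1.0, 0.1]), ("c", [-1.0, 0.0])]
print([row_id for row_id, _ in nearest([1.0, 0.0], rows, k=2)])  # ['b', 'a']
```

Cosine distance ranges from 0 (same direction) to 2 (opposite direction), so sorting in ascending order puts the most similar rows first.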

This mode uses the following environment variables:

- CONFIG_PATH: path to the JSON config file.

- POSTGRES_USER

- POSTGRES_PASSWORD

- POSTGRES_HOST

- POSTGRES_PORT

- POSTGRES_DBNAME
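Together, the POSTGRES_* variables describe a standard PostgreSQL connection. As a sketch, assuming a `postgresql://user:password@host:port/dbname` URL is what a client needs (the application may instead pass them as separate parameters), they can be combined like this; `postgres_url` is a hypothetical helper.

```python
import os
from urllib.parse import quote


def postgres_url(env=None):
    """Combine the POSTGRES_* variables into a postgresql:// connection URL.

    Hypothetical helper: the application may consume these variables differently.
    """
    env = os.environ if env is None else env
    # Percent-encode credentials so characters like '@' or ':' don't break the URL.
    user = quote(env["POSTGRES_USER"], safe="")
    password = quote(env["POSTGRES_PASSWORD"], safe="")
    # 5432 is PostgreSQL's default port; falling back to it is this sketch's choice.
    port = env.get("POSTGRES_PORT", "5432")
    return (
        f"postgresql://{user}:{password}@{env['POSTGRES_HOST']}:{port}"
        f"/{env['POSTGRES_DBNAME']}"
    )


example = {
    "POSTGRES_USER": "vectordb",
    "POSTGRES_PASSWORD": "vectordb",
    "POSTGRES_HOST": "localhost",
    "POSTGRES_PORT": "5432",
    "POSTGRES_DBNAME": "vectordb",
}
print(postgres_url(example))  # postgresql://vectordb:vectordb@localhost:5432/vectordb
```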

Example:

# Using Python >=3.12

$ python3 -m venv env

$ source env/bin/activate

(env) $ pip install -r docker/requirements.txt

(env) $ export CONFIG_PATH="./docker/config-pgvector.json"

(env) $ export POSTGRES_USER=vectordb

(env) $ export POSTGRES_PASSWORD=vectordb

(env) $ export POSTGRES_HOST=localhost

(env) $ export POSTGRES_PORT=5432

(env) $ export POSTGRES_DBNAME=vectordb

(env) $ ./start.sh

Docker with pgvector (own infrastructure): uses the src/app-external.py application.

Modify the settings of the sciphi container in docker/docker-compose.yml. The repository provides the following service combinations:

- docker/config-litellm-pgvector.json: uses the pgvector db container and litellm as LLM provider (a proxy to multiple providers). Uses OpenAI's embeddings. See the Providers documentation.

- docker/config-litellm-local_embeddings-pgvector.json: uses the pgvector db container, litellm as provider proxy, and sentence_transformers with HuggingFace models running locally for embeddings.

- docker/config-llama-cpp-pgvector.json: uses the pgvector db container and llama-cpp as LLM provider, with local Ollama models. Uses OpenAI's embeddings.
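Picking among these files amounts to a lookup on the LLM provider and the embeddings source. The mapping below is a hypothetical helper that only covers the three combinations listed above; the returned path is the same kind of value that CONFIG_PATH takes in the local example.

```python
# The three service combinations described above, keyed by
# (LLM provider, whether embeddings are computed locally).
CONFIGS = {
    ("litellm", False): "docker/config-litellm-pgvector.json",
    ("litellm", True): "docker/config-litellm-local_embeddings-pgvector.json",
    ("llama-cpp", False): "docker/config-llama-cpp-pgvector.json",
}


def choose_config(llm_provider, local_embeddings=False):
    """Return the config file for a provider/embeddings combination (hypothetical helper)."""
    try:
        return CONFIGS[(llm_provider, local_embeddings)]
    except KeyError:
        raise ValueError(
            f"no config for llm_provider={llm_provider!r}, "
            f"local_embeddings={local_embeddings}"
        ) from None


print(choose_config("litellm", local_embeddings=True))
# docker/config-litellm-local_embeddings-pgvector.json
```

No file is listed for llama-cpp with local embeddings, so the helper raises instead of guessing a path.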

# Run all containers

$ docker compose -f docker/docker-compose.yml up # -d to detach

# Run only sciphi-related containers

$ docker compose -f docker/docker-compose.yml up sciphi # -d to detach

SciPhi: this environment uses the config.json and src/app.py files, since those are the files expected by SciPhi's infrastructure.

Add the required keys to the deployment's configuration.

For example queries, see postman/QUERIES.md.