A distributed map-reduce program that takes a set of plain text files as input and counts how many times each word occurs across the whole dataset.
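To make the idea concrete, here is a minimal single-process sketch of word counting in map-reduce style. It is not the code in this repository: the function names (`map_task`, `reduce_task`, `word_count`), the lowercase `[a-z]+` tokenization, and the CRC32-based bucketing are all assumptions chosen for illustration. Each map task routes words into `n_reduce` buckets, and each reduce task counts the words of one bucket.

```python
import re
from collections import Counter
from zlib import crc32


def map_task(text, n_reduce):
    """Split text into words and route each word to one of n_reduce buckets."""
    buckets = [[] for _ in range(n_reduce)]
    # Assumption: a word is a run of ASCII letters, compared case-insensitively.
    for word in re.findall(r"[a-z]+", text.lower()):
        # A deterministic hash sends the same word to the same bucket every time.
        buckets[crc32(word.encode()) % n_reduce].append(word)
    return buckets


def reduce_task(words):
    """Count the occurrences of each word in a single bucket."""
    return Counter(words)


def word_count(texts, n_reduce=2):
    """Run every map task, then one reduce task per bucket, and merge the results."""
    mapped = [map_task(text, n_reduce) for text in texts]
    total = Counter()
    for bucket_id in range(n_reduce):
        # Gather this bucket's words from the output of every map task.
        words = [w for buckets in mapped for w in buckets[bucket_id]]
        total.update(reduce_task(words))
    return total
```

Because a given word always lands in the same bucket, the reduce tasks never share a word, so their results can be merged without conflicts.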

- Python >= 3.9.x

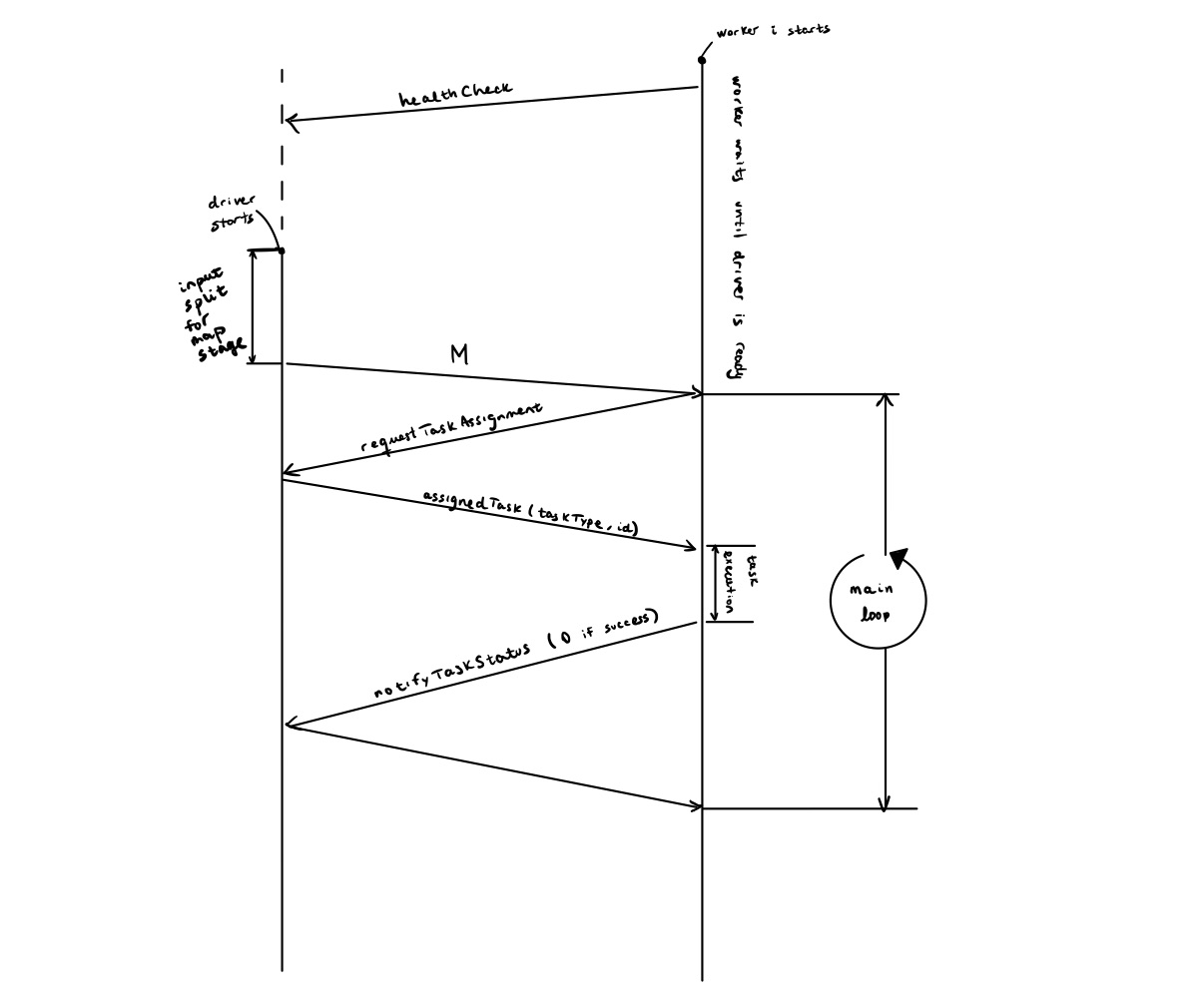

Note 1: While map tasks are still running, the driver does not assign any reduce tasks. In this case, `requestTaskAssignment` returns an `assignedTask` with `id=-1`.

Note 2: When all tasks are completed, `requestTaskAssignment` returns an `assignedTask` with `id=-2`. When a worker receives this assignment, it quits. The driver then waits 5 seconds before quitting itself, which gives the remaining workers time to be notified of the job's completion.
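The two sentinel ids described in the notes suggest a simple polling loop on the worker side. The sketch below is an assumption about how such a loop could look, not the actual `worker.py`: `run_worker`, `request_task_assignment`, `execute_task`, and `poll_interval` are hypothetical names, and the RPC is simplified to a callable that returns the bare task id.

```python
import time

WAIT_ID = -1  # map tasks are still running, so no reduce task can be assigned yet
DONE_ID = -2  # all tasks are completed, so the worker should quit


def run_worker(request_task_assignment, execute_task, poll_interval=0.0):
    """Request tasks until the driver signals completion.

    request_task_assignment: hypothetical callable returning the assigned task id.
    execute_task: hypothetical callable that runs the task with the given id.
    Returns the ids of the tasks this worker executed, in order.
    """
    executed = []
    while True:
        task_id = request_task_assignment()
        if task_id == DONE_ID:
            break  # the job is finished, stop asking for work
        if task_id == WAIT_ID:
            time.sleep(poll_interval)  # nothing to do yet, ask again later
            continue
        execute_task(task_id)
        executed.append(task_id)
    return executed
```

A real worker would likely use a non-zero `poll_interval` so that idle workers do not flood the driver with requests.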

- Place all the input files in a new directory `$input_dir`.

- Set up a virtual environment and install the requirements:

      $ cd $repo
      $ python -m venv $venv_path
      $ source $venv_path/bin/activate
      $ pip install -r requirements.txt

- Start as many workers as desired:

      $ python worker.py

- Start the driver:

      $ python driver.py -N $n_map_tasks -M $n_reduce_tasks $input_dir

  Note: Worker instances can also be started after the driver has been launched.

- Once the process has terminated, check the result in the `.tmp/out/` directory.

To run the tests:

    $ cd $repo
    $ python3 -m venv $venv_path
    $ source $venv_path/bin/activate
    $ pip install -r requirements.txt
    $ pip install pytest
    $ pytest

`compile-protos.sh` must be run whenever any of the proto files in the `protos/` directory are changed. This produces new `*_pb2*.py` files.