Yuanxun Lu1, Jingyang Zhang2, Shiwei Li2, Tian Fang2, David McKinnon2, Yanghai Tsin2, Long Quan3, Xun Cao1, Yao Yao1*

1Nanjing University, 2Apple, 3HKUST

Project Page | Paper | Weights

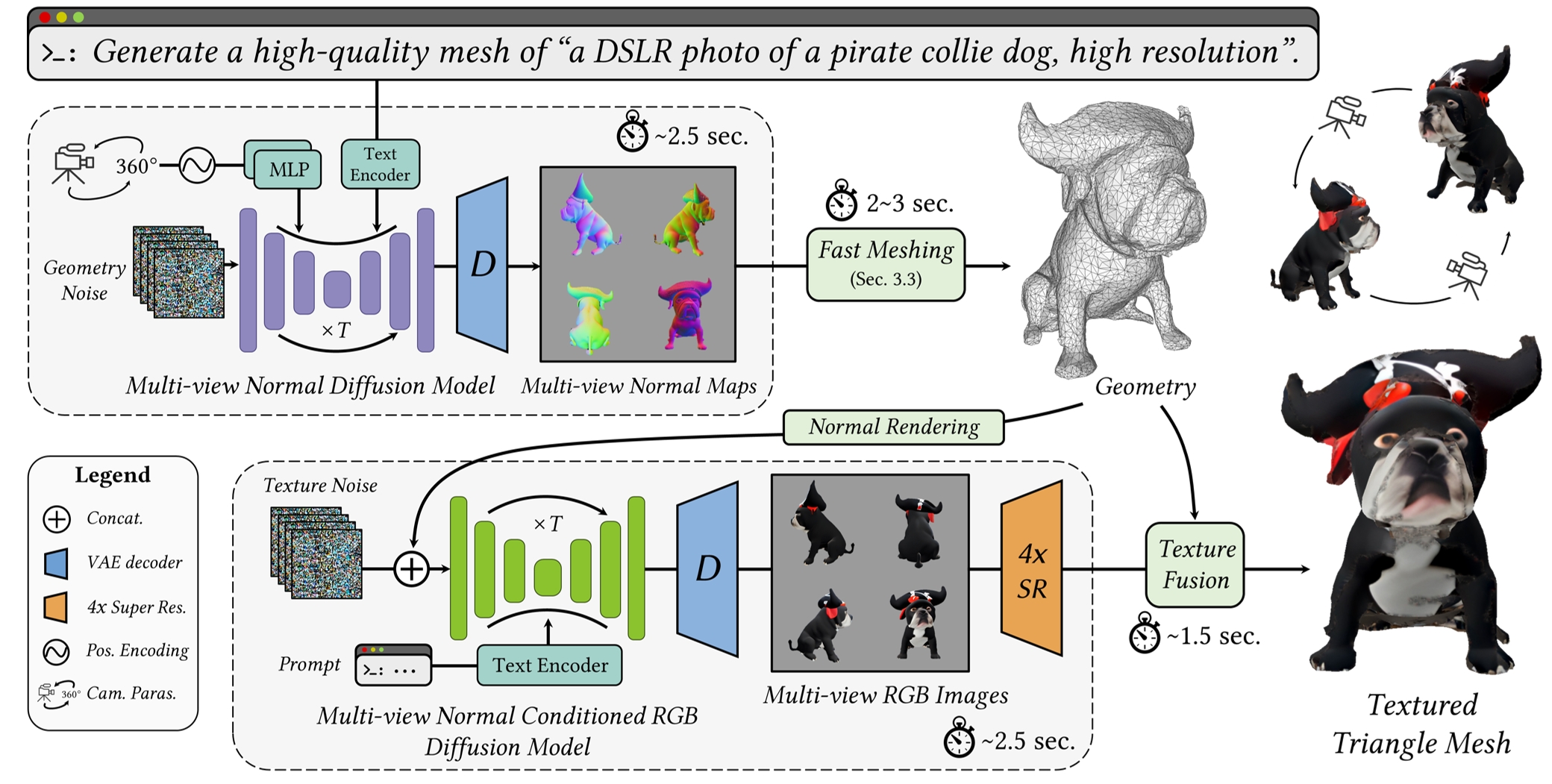

This is the official implementation of Direct2.5, a text-to-3D generation system that can produce diverse, Janus-free, and high-fidelity 3D content in only 10 seconds.

- This project was successfully tested on Ubuntu 20.04 with PyTorch 2.1 (Python 3.10). We recommend creating a new environment and installing the necessary dependencies:

      conda create -y -n py310 python=3.10
      conda activate py310
      pip install torch==2.1.0 torchvision
      pip install -r requirements.txt

- Install `torch-scatter` and `pytorch3d` according to your CUDA version. For example, you could run the following if you have `cu118`:

      # torch-scatter
      pip install torch-scatter -f https://data.pyg.org/whl/torch-2.1.0+cu118.html
      # pytorch3d
      conda install -c fvcore -c iopath -c conda-forge fvcore iopath
      conda install -c bottler nvidiacub
      pip install fvcore iopath
      pip install --no-index --no-cache-dir pytorch3d -f https://dl.fbaipublicfiles.com/pytorch3d/packaging/wheels/py310_cu118_pyt210/download.html

- Install `nvdiffrast` following the official instructions.

- (Optional) Install `Real-ESRGAN` if you use super-resolution:

      pip install git+https://github.com/sberbank-ai/Real-ESRGAN.git
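For CUDA or PyTorch versions other than the `cu118` example, the two wheel index URLs can be derived from the same naming pattern. The following is a minimal sketch, assuming the URL layouts shown in the commands above stay the same for other versions; `wheel_sources` is a hypothetical helper and not part of this repository, and a wheel is not guaranteed to exist for every combination.

```python
def wheel_sources(python_version: str, torch_version: str, cuda_version: str) -> dict:
    """Build wheel index URLs following the pattern of the cu118 example.

    Assumption: other versions use the same URL layout as the commands
    in this README. This does not check that the wheels actually exist.
    """
    py_major, py_minor = python_version.split(".")[:2]
    # "11.8" -> "cu118"
    cuda_tag = "cu" + "".join(cuda_version.split(".")[:2])
    # Drop a local suffix such as "+cu118" if torch.__version__ was passed in.
    torch_base = torch_version.split("+")[0]
    # "2.1.0" -> "pyt210"
    pyt_tag = "pyt" + torch_base.replace(".", "")
    return {
        "torch_scatter": (
            f"https://data.pyg.org/whl/torch-{torch_base}+{cuda_tag}.html"
        ),
        "pytorch3d": (
            "https://dl.fbaipublicfiles.com/pytorch3d/packaging/wheels/"
            f"py{py_major}{py_minor}_{cuda_tag}_{pyt_tag}/download.html"
        ),
    }


# Reproduces the URLs used in the cu118 example.
for name, url in wheel_sources("3.10", "2.1.0", "11.8").items():
    print(f"{name}: {url}")
```

The returned URLs are meant to be passed to `pip install ... -f <url>` as in the commands above.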

- Download the checkpoints: mvnormal_finetune_sd21b and mvrgb_normalcond_sd21b

- Replace the checkpoint paths in the config file `configs/default-256-iter.yaml` (L4 and L5) with your `$ckpt_folder`:

      mvnorm_pretrain_path: '$ckpt_folder/mvnormal_finetune_sd21b'
      mvtexture_pretrain_path: '$ckpt_folder/mvrgb_normalcond_sd21b'

- Run the following command to generate a few examples. Note that the variance across generations is quite large, so a good result may take a few tries.

      python text-to-3d.py --num_repeats 2 --prompts configs/example_prompts.txt

  Results can be found under the `results` folder. The corresponding prompts can be found in the txt file.

  You could also try a single prompt using a flag like `--prompts "A bald eagle carved out of wood"`.

- You can opt to upsample the images with Real-ESRGAN before texturing by modifying L17 of the configuration file. This adds around 1 second to the generation time.
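The checkpoint-path replacement described above can also be scripted instead of edited by hand. This is a minimal sketch, assuming each of the two keys sits on its own line in the YAML file with its value on the same line; `set_ckpt_paths` is a hypothetical helper, not part of this repository, and the sample text below only imitates the two config lines quoted above.

```python
import re

# Keys and checkpoint folder names as given in this README.
CKPT_SUBFOLDERS = {
    "mvnorm_pretrain_path": "mvnormal_finetune_sd21b",
    "mvtexture_pretrain_path": "mvrgb_normalcond_sd21b",
}


def set_ckpt_paths(config_text: str, ckpt_folder: str) -> str:
    """Return config_text with both checkpoint paths pointing into ckpt_folder.

    Assumption: each key appears exactly once, as "key: value" on one line.
    """
    folder = ckpt_folder.rstrip("/")
    for key, subfolder in CKPT_SUBFOLDERS.items():
        # Keep the indentation and "key: " prefix, replace the rest of the line.
        pattern = re.compile(
            rf"^([ \t]*{re.escape(key)}[ \t]*:[ \t]*).*$", flags=re.MULTILINE
        )
        new_value = f"'{folder}/{subfolder}'"
        config_text, count = pattern.subn(
            lambda m: m.group(1) + new_value, config_text
        )
        if count != 1:
            raise ValueError(f"expected exactly one '{key}' entry, found {count}")
    return config_text


# Illustrative input only; the real file contains more settings.
sample = (
    "mvnorm_pretrain_path: '$ckpt_folder/mvnormal_finetune_sd21b'\n"
    "mvtexture_pretrain_path: '$ckpt_folder/mvrgb_normalcond_sd21b'\n"
)
print(set_ckpt_paths(sample, "/path/to/checkpoints"))
```

To apply it to the real file, read `configs/default-256-iter.yaml` as text, pass it through `set_ckpt_paths`, and write the result back. Plain text substitution is used here rather than a YAML parser so that comments and line order in the config stay untouched.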

This sample code is released under the LICENSE terms.

    @article{lu2024direct2,
      title={Direct2.5: Diverse Text-to-3d Generation via Multi-view 2.5D Diffusion},
      author={Lu, Yuanxun and Zhang, Jingyang and Li, Shiwei and Fang, Tian and McKinnon, David and Tsin, Yanghai and Quan, Long and Cao, Xun and Yao, Yao},
      journal={Computer Vision and Pattern Recognition (CVPR)},
      year={2024}
    }