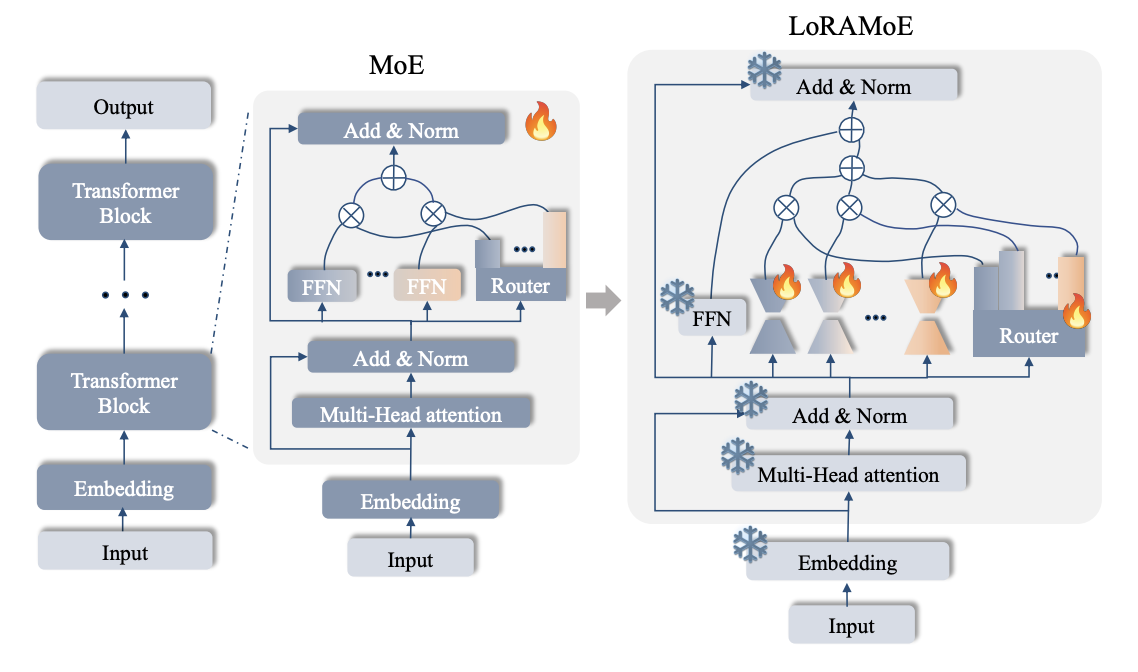

LoRAMoE: Revolutionizing Mixture of Experts for Maintaining World Knowledge in Language Model Alignment

This is the repository for LoRAMoE: Revolutionizing Mixture of Experts for Maintaining World Knowledge in Language Model Alignment.

You can quickly set up the environment with the following command:

    conda env create -f environment.yml

or

    conda create -n loramoe python=3.10 -y
    pip install -r requirements.txt

We do not install peft, to avoid conflicts with the local peft package.

We provide a tiny dataset that demonstrates the data format used in the training and inference phases and lets you verify that the code runs correctly.

data/

|--tiny_data/

|--train/train.json

|--test.json

    bash run_loramoe.sh

| blc weight | blc alpha | LoRA rank | LoRA alpha | LoRA trainable | LoRA dropout | LoRA num |
|---|---|---|---|---|---|---|
| the strength of localized balance constraints | degree of imbalance | rank of LoRA experts | LoRA scale | where the LoRA layers are added | dropout rate in LoRA | number of experts |
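To make the roles of `blc weight` and `blc alpha` more concrete, here is a minimal sketch of one plausible form of a localized balance penalty. It assumes that each expert and each sample carries a group label, that router weights are scaled by `1 + blc_alpha` when the labels match and by `1 - blc_alpha` otherwise, and that the penalty is the variance of the scaled weights divided by their mean, multiplied by `blc_weight`. The function name, the argument layout, and the exact formula are assumptions made for illustration; the computation in this repository may differ.

```python
from statistics import mean, pvariance


def balance_penalty(router_weights, expert_types, sample_types,
                    blc_alpha, blc_weight):
    """Illustrative balance penalty (hypothetical helper, not the repo's code).

    router_weights[m][n] is the router weight of expert n for sample m.
    expert_types[n] and sample_types[m] are assumed integer group labels.
    """
    scaled = []
    for n, expert_type in enumerate(expert_types):
        for m, weights in enumerate(router_weights):
            # Assumed coefficient: 1 + alpha for matching groups, 1 - alpha otherwise.
            if expert_type == sample_types[m]:
                coeff = 1.0 + blc_alpha
            else:
                coeff = 1.0 - blc_alpha
            scaled.append(coeff * weights[n])
    # Dispersion of the scaled importance values, weighted by blc_weight.
    return blc_weight * pvariance(scaled) / mean(scaled)


if __name__ == "__main__":
    uniform = [[0.5, 0.5], [0.5, 0.5]]
    print(balance_penalty(uniform, [0, 1], [0, 1], blc_alpha=0.0, blc_weight=1.0))
    print(balance_penalty(uniform, [0, 1], [0, 1], blc_alpha=0.5, blc_weight=1.0))
```

In this sketch, `blc_alpha = 0` reduces the penalty to a plain dispersion measure over all router weights, while a larger `blc_alpha` makes the scaled values for matching and non-matching groups differ more.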

In transformers, we mainly change `modeling_llama.py` to introduce a new parameter, `task_types`.

In peft, we replace the original LoRA class with a mixture-of-experts architecture.
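As a rough illustration of what a mixture of LoRA experts computes, the sketch below combines a frozen base projection with several low-rank experts whose outputs are mixed by a softmax router and scaled by `lora_alpha / lora_rank`. It is written in plain Python for readability and is not the code in the modified peft package; the function names, argument shapes, and the dense softmax routing are assumptions.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def lora_moe_forward(x, base_weight, router_weight, experts,
                     lora_alpha, lora_rank):
    """Hypothetical forward pass of one linear layer with LoRA experts.

    base_weight:   frozen matrix of shape (out, in)
    router_weight: matrix of shape (num_experts, in)
    experts:       list of (A, B) pairs, A is (rank, in), B is (out, rank)
    """
    out = matvec(base_weight, x)               # frozen base projection
    gates = softmax(matvec(router_weight, x))  # one mixing weight per expert
    scale = lora_alpha / lora_rank             # standard LoRA scaling
    for gate, (a, b) in zip(gates, experts):
        delta = matvec(b, matvec(a, x))        # low-rank update B(Ax)
        out = [o + scale * gate * d for o, d in zip(out, delta)]
    return out


if __name__ == "__main__":
    x = [1.0, 2.0]
    identity = [[1.0, 0.0], [0.0, 1.0]]
    expert = ([[1.0, 1.0]], [[1.0], [0.0]])    # rank-1 expert
    print(lora_moe_forward(x, identity, [[0.0, 0.0]], [expert], 2.0, 1))
```

With the `B` matrices initialized to zero, as is common for LoRA, the layer reproduces the frozen base output at the start of training regardless of the router.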

We use opencompass for evaluation. To run LoRAMoE on opencompass:

- In `opencompass/opencompass/models/huggingface.py`, add:

    import sys
    sys.path.insert(0, 'path_to_your_current_dir_containing_changed_peft&transformers')

- In the config file:

    models = [
        dict(
            type=HuggingFaceCausalLM,
            abbr='',
            path="path_to_base_model",
            tokenizer_path='path_to_tokenizer',
            peft_path='path_to_loramoe',
            ...
        )
    ]

If you find this useful in your research, please consider citing:

    @misc{dou2024loramoe,
          title={LoRAMoE: Revolutionizing Mixture of Experts for Maintaining World Knowledge in Language Model Alignment},
          author={Shihan Dou and Enyu Zhou and Yan Liu and Songyang Gao and Jun Zhao and Wei Shen and Yuhao Zhou and Zhiheng Xi and Xiao Wang and Xiaoran Fan and Shiliang Pu and Jiang Zhu and Rui Zheng and Tao Gui and Qi Zhang and Xuanjing Huang},
          year={2023},
          eprint={2312.09979},
          archivePrefix={arXiv},
          primaryClass={cs.CL}
    }