Scrape PDF files produced by the US Department of Energy (DOE) Office of Science Workforce Development for Teachers and Scientists (WDTS) for information about DOE-run STEM pipeline programs. The information is taken from WDTS, the US Census Bureau, and the US Department of Education.

The current list of STEM pipeline programs whose information can be gathered and parsed is:

- Community College Internships (CCI)

- Office of Science Graduate Student Research (SCGSR) Program

- Science Undergraduate Laboratory Internships (SULI)

- Visiting Faculty Program (VFP)

To report a bug or request a feature, please file an issue.

- Installation

- Command Line Interface

- Using Docker

- Examples

- Dependencies

- Contributing

- Acknowledgments / Contributors

If you wish to install the dependencies yourself and only need to check out the code and download the input data, you may use the following commands:

git clone git@github.com:aperloff/WDTSscraper.git

cd WDTSscraper

data/download.sh

If the necessary input files were downloaded correctly, you should see output that looks like:

Creating the directory data/us_map ... DONE

Creating the directory data/schools ... DONE

Creating the directory data/CCI ... DONE

Creating the directory data/SCGSR ... DONE

Creating the directory data/SULI ... DONE

Creating the directory data/VFP ... DONE

Downloading the US map information ... DONE, VERIFIED

Extracting the US map shapefiles ... DONE

Downloading information about US postsecondary schools ... DONE, VERIFIED

Extracting the school information ... DONE

Downloading the list of CCI participants ... DONE, VERIFIED

Downloading the list of SCGSR participants ... DONE, VERIFIED

Downloading the list of SULI participants ... DONE, VERIFIED

Downloading the list of VFP participants ... DONE, VERIFIED

SUCCESS::All files downloaded!

If you'd rather use a clean environment, a set of Docker images (aperloff/wdtsscraper) is available with the following tags:

- latest: Contains all of the necessary dependencies and the WDTSscraper code. This image does not contain any of the necessary input data.

- latest-data: Contains everything in the latest image, but also contains the input data.

Further information about using these images will be given below (see Using Docker).

To produce a map using one of the provided configuration files, run:

python3 python/WDTSscraper.py -C python/configs/config_all-programs_2021.py

The output will be an image file containing the map.

The command line options available are:

- -d, --debug: Show extra information in order to debug this program (default = False)

- -f, --files [files]: The absolute paths to the files to scrape

- -F, --formats [formats]: List of formats in which to save the resulting map (choices = [png, pdf, ps, eps, svg], default = [png])

- -i, --interactive: Show the plot during program execution

- -n, --no-lines: Do not plot the lines connecting the home institutions and the national laboratories

- -N, --no-draw: Do not create or save the resulting map

- -O, --output-path=OUTPUTPATH: Directory in which to save the resulting maps (default = os.getcwd())

- -S, --strict-filtering: Filter participants more tightly by removing those whose topic is unknown

- -t, --types [types]: A list of the types of files being processed (choices = [VFP, SULI, CCI, SCGSR])

- -T, --filter-by-topic: Filter the participants by topic if the topic is available

- -y, --years [years]: A list of years to help determine how to process each file

The number of files, types, and years must be equal (e.g. you can't specify 10 input files and 10 years, but only 9 program types).
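As an illustration of the equal-length rule, here is a minimal argparse sketch covering a few of the options above. It is a hypothetical reconstruction, not the parser in python/WDTSscraper.py; in particular, treating years as integers is an assumption.

```python
import argparse

# Choices copied from the option list above; the parser itself is a
# hypothetical sketch, not the actual WDTSscraper implementation.
PROGRAM_TYPES = ["VFP", "SULI", "CCI", "SCGSR"]
FORMATS = ["png", "pdf", "ps", "eps", "svg"]


def build_parser():
    parser = argparse.ArgumentParser(description="Sketch of the WDTSscraper CLI")
    parser.add_argument("-f", "--files", nargs="+", default=[],
                        help="absolute paths to the files to scrape")
    # With nargs="+", argparse checks every value against the choices.
    parser.add_argument("-t", "--types", nargs="+", default=[],
                        choices=PROGRAM_TYPES, help="program type of each file")
    # Assumption: years are parsed as integers.
    parser.add_argument("-y", "--years", nargs="+", type=int, default=[],
                        help="year of each file")
    parser.add_argument("-F", "--formats", nargs="+", default=["png"],
                        choices=FORMATS, help="output formats for the map")
    return parser


def parse_and_validate(argv):
    """Parse argv and reject inputs whose files/types/years counts differ."""
    parser = build_parser()
    args = parser.parse_args(argv)
    # Each file needs exactly one matching type and one matching year.
    if not len(args.files) == len(args.types) == len(args.years):
        # parser.error prints the usage message and exits with status 2.
        parser.error(
            f"got {len(args.files)} files, {len(args.types)} types, "
            f"and {len(args.years)} years; these counts must be equal"
        )
    return args
```

For example, `parse_and_validate(["-f", "a.pdf", "b.pdf", "-t", "VFP", "SULI", "-y", "2020", "2021"])` succeeds, while dropping one of the two types makes the sketch exit with a usage error.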

There are two tools used for managing the input data collected from the US government:

- data/download.sh: This is used for downloading and extracting the entire collection of needed input files. It gathers US map information from the Census Bureau, postsecondary school information from the Department of Education, and DOE program participation from WDTS.

- data/clean.sh: This will remove all sub-directories within the data/ directory. This effectively wipes the slate clean. Make sure not to store any valuable files within these sub-directories.
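Before running the scraper, it can help to confirm that the download step left the expected directories in place. The sketch below is a hypothetical helper, not part of the repository; it only checks that each sub-directory printed by data/download.sh exists and is non-empty, and does not verify file contents.

```python
from pathlib import Path

# Sub-directories that data/download.sh reports creating in its output.
EXPECTED_SUBDIRS = ["us_map", "schools", "CCI", "SCGSR", "SULI", "VFP"]


def missing_data_dirs(data_dir="data"):
    """Return the expected sub-directories of data_dir that are absent or empty."""
    root = Path(data_dir)
    missing = []
    for name in EXPECTED_SUBDIRS:
        subdir = root / name
        # A directory only counts as present if it exists and has at least one entry.
        if not subdir.is_dir() or not any(subdir.iterdir()):
            missing.append(name)
    return missing
```

Run from the repository's base directory, an empty result from `missing_data_dirs()` suggests the download step completed; a non-empty result suggests rerunning data/download.sh.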

There are three workflows available when using the Docker images:

1. You may choose to use the latest image as a clean environment, but download up-to-date input files. In this case, you will need to run the data/download.sh script manually.

2. You may choose to use the latest-data image, which already contains the input data. In this case, you will only need to run python/WDTSscraper.py.

3. Alternatively, you can check out the code and download the input data locally, but use one of the Docker images to provide the necessary dependencies. In this case, the code and input data within the image will automatically be replaced by your local versions using a bind mount. This method is most useful for people who want to develop a new feature for the repository without installing the dependencies on their local machine. The resulting images will be copied back to the host machine. To run in this mode, a helper script wraps up all of the Docker complexities. Simply run:

.docker/run.sh -C python/configs/config_all_programs_2021.py

Note: To use this running mode, you will need to have permission to bind mount the local directory and the local user will need permission to write to that directory as well. This is typically not a problem unless the repository has been checked out inside a restricted area of the operating system or the permissions on the directory have been changed.
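To make the bind-mount mode more concrete, here is a hypothetical Python sketch that assembles an equivalent docker run command. It is not the contents of .docker/run.sh: the mount target /WDTSscraper is taken from the ShellCheck example later in this README, and passing --workdir is an assumption made so that relative paths resolve from the repository root.

```python
import os

IMAGE = "aperloff/wdtsscraper:latest"
CONTAINER_DIR = "/WDTSscraper"  # mount point used in the ShellCheck docker example


def docker_command(config, host_dir=None, image=IMAGE):
    """Return a `docker run` argument list that bind mounts host_dir over the
    repository copy inside the image and runs the scraper with `config`."""
    # Resolve the local checkout; default to the current working directory.
    host_dir = os.path.abspath(host_dir or os.getcwd())
    return [
        "docker", "run", "--rm", "-t",
        # The bind mount replaces the image's code and data with the local copy,
        # so maps saved to the default output path land directly in host_dir.
        "--mount", f"type=bind,source={host_dir},target={CONTAINER_DIR}",
        # Assumption: run from the repository root so python/... paths resolve.
        "--workdir", CONTAINER_DIR,
        image,
        "python3", "python/WDTSscraper.py", "-C", config,
    ]
```

From the repository's base directory, the list could be executed with `subprocess.run(docker_command("python/configs/config_all_programs_2021.py"), check=True)`. The same permission caveats from the note above apply.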

Here are some examples of the types of images which can be created.

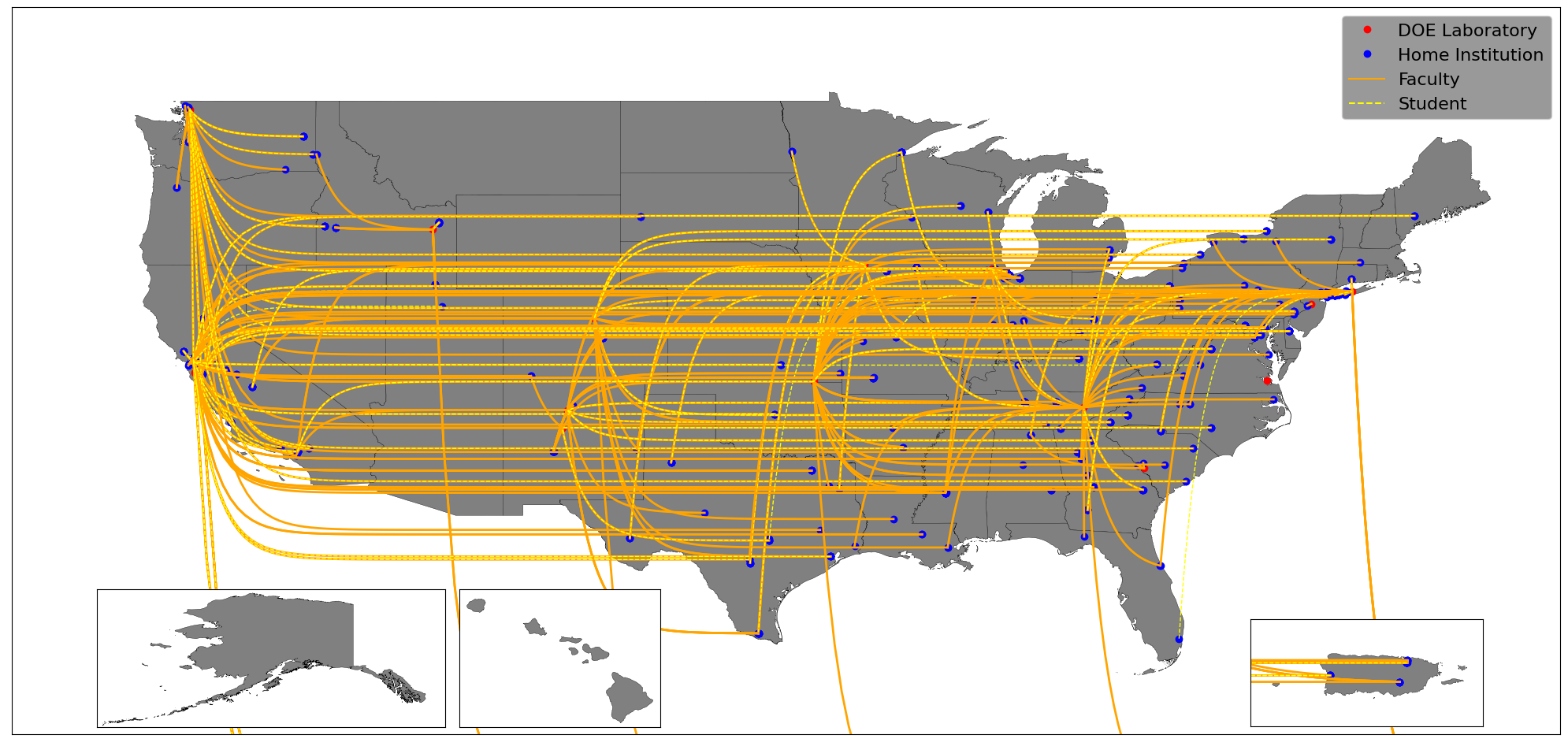

The first one displays the DOE national laboratories involved in the VFP, the home institutions for the faculty and students involved, and the connections between those home institutions and the national laboratories. The plot covers the years 2015 through 2021 and includes all research areas.

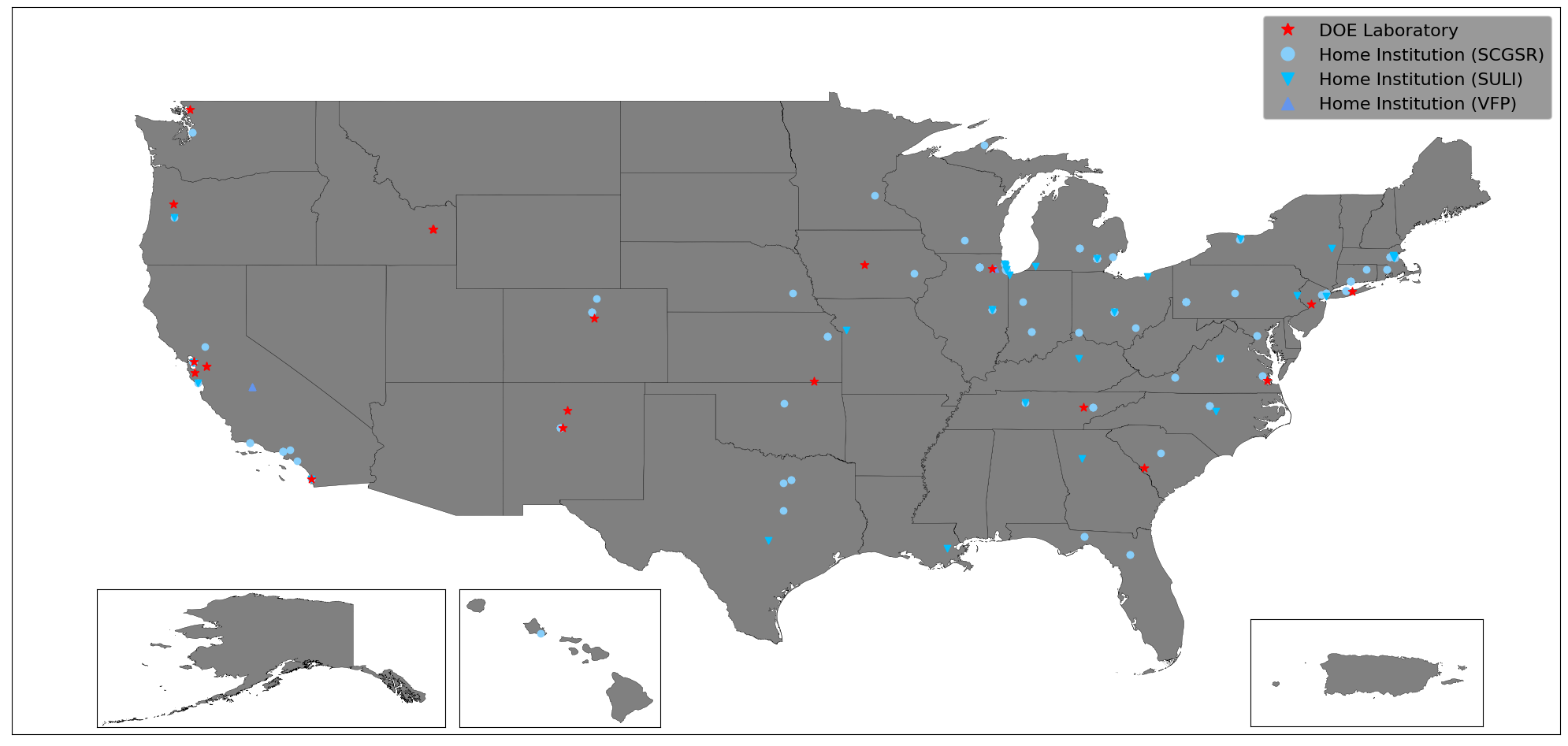

The second image shows the DOE national laboratories and home institutions involved in the CCI, SCGSR, SULI, and VFP programs, but only for students and faculty verifiably involved in high energy physics (HEP) research. Because there was no information about the type of research being done by CCI participants, there are no markers on the plot for that program. Once again, this plot covers the years 2015 through 2021.

Required dependencies:

- Python 3

- pdfplumber (GitHub): Used to extract text and tables from PDFs.
  - Can be installed using the command: pip3 install --no-cache-dir pdfplumber
  - This program has several of its own optional dependencies. More information can be found in the pdfplumber documentation.
    - ImageMagick: see the ImageMagick installation instructions.
    - ghostscript: see the ghostscript installation instructions.
    - For Mac users: both programs can optionally be installed using the command: brew install freetype imagemagick ghostscript

- jsons (GitHub): Used to serialize and deserialize the class objects.
  - Can be installed using the command: pip3 install --no-cache-dir jsons

- magiconfig (GitHub): Used to read Python configuration files.
  - Can be installed using the command: pip3 install --no-cache-dir magiconfig

- matplotlib (PyPI): Used for plotting the scraped data.
  - Installation instructions can be found in the matplotlib documentation.
  - TLDR: Install using the command: pip3 install --no-cache-dir matplotlib

- mpl_toolkits: Should be installed when you install matplotlib. In other words, you shouldn't need to do anything extra.

- GeoPandas (PyPI): This library is used to parse geographic data and to visualize it using matplotlib.
  - Installation instructions can be found in the GeoPandas documentation.
  - TLDR: You can install it using the command: pip3 install --no-cache-dir numpy pandas shapely fiona pyproj rtree GeoAlchemy2 geopy mapclassify matplotlib geopandas

There is a script available to make sure all of the needed dependencies are installed:

python3 python/check_for_dependencies.py

Optional dependencies:

- ShellCheck (GitHub): Used for linting shell scripts.

- PyLint (GitHub, PyPI): Used for linting Python modules.

- bats (GitHub): Used for unit testing shell scripts.

- pytest (GitHub, PyPI): Used for unit testing Python modules.
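The dependency check done by python/check_for_dependencies.py can be approximated as follows. This is a rough sketch of the idea, not that script's actual contents: it uses importlib.util.find_spec to see whether the required Python packages are importable and shutil.which to look for executables. The module list and the executable names gs and magick are assumptions (ImageMagick 6 installs convert rather than magick).

```python
import importlib.util
import shutil

# Assumed import names of the required Python packages listed above.
REQUIRED_MODULES = ["pdfplumber", "jsons", "magiconfig", "matplotlib",
                    "mpl_toolkits", "geopandas"]
# Assumed executables: ghostscript ("gs") and ImageMagick 7 ("magick").
REQUIRED_EXECUTABLES = ["gs", "magick"]


def missing_modules(names=REQUIRED_MODULES):
    """Return the top-level module names that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def missing_executables(names=REQUIRED_EXECUTABLES):
    """Return the program names that are not on the PATH."""
    return [name for name in names if shutil.which(name) is None]
```

Printing `missing_modules()` and `missing_executables()` gives a quick list of what still needs to be installed.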

Pull requests are welcome, but please submit a proposal issue first, as the library is in active development.

Current maintainers:

- Alexx Perloff

Unit testing is performed using bats for shell scripts and PyTest for the Python modules. You are welcome to install these programs locally; however, helper shell scripts have been set up to make this procedure as easy as possible.

The Bats tests are currently set up to test the data/download.sh and data/clean.sh shell scripts. These tests rely on a stable internet connection and on the government servers being healthy.

First, the Bats software needs to be set up. This only needs to happen once. To set up the software, run the following command from within the repository's base directory:

test/bats_control.sh -s

Once the software is set up, you can run the tests using:

./test/bats_control.sh

If everything is working correctly, the output will be:

✓ Check download

✓ Check clean

2 tests, 0 failures

To remove the Bats software, run:

./test/bats_control.sh -r

To run the Python unit/integration tests, you will need to have PyTest installed. To create a local virtual environment with PyTest installed, use the following command from within the repository's base directory:

./test/pytest_control.sh -s

You only have to run that command the first time you set up the virtual environment. You can then run the tests using the command:

./test/pytest_control.sh

You should see output similar to:

======================================================== test session starts ========================================================

platform darwin -- Python 3.9.10, pytest-7.0.1, pluggy-1.0.0

rootdir: <path to WDTSscraper>

collected 2 items

test/test.py .. [100%]

======================================================== 2 passed in 13.34s =========================================================

You can pass additional options to PyTest using the -o flag. For example, you could run the following command to increase the verbosity of PyTest:

./test/pytest_control.sh -o '--verbosity=3'

Other helpful pytest options include:

- -rP: Show the output of successful tests. This is necessary because, by default, PyTest captures all of the output from the tests.

- -rf: Show the summary of failed tests (enabled by default, together with errors). Note that -rx instead reports tests marked as expected failures (xfail).

- -k <testname>: Limit the tests run to just the test(s) specified. The <testname> can be a class of tests or the name of a specific unit test function.
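To illustrate how -k selects tests by class or function name, here is a hypothetical test module. The file name test_input_counts.py and the helper counts_match are invented for this example and are not part of the repository's test/test.py.

```python
# test_input_counts.py: hypothetical example module, not part of the repository.


def counts_match(files, types, years):
    """Hypothetical helper: True when every input file has one type and one year."""
    return len(files) == len(types) == len(years)


class TestInputCounts:
    def test_equal_lengths_pass(self):
        assert counts_match(["a.pdf", "b.pdf"], ["VFP", "SULI"], [2020, 2021])

    def test_missing_type_fails(self):
        # Ten files and ten years but only nine program types should be rejected.
        files = [f"file{i}.pdf" for i in range(10)]
        years = list(range(2012, 2022))
        types = ["SULI"] * 9
        assert not counts_match(files, types, years)
```

With this file, `python3 -m pytest -k TestInputCounts test_input_counts.py` would run both tests in the class, while `-k test_missing_type_fails` would run only the second one; adding `-rP` would also show any captured output from the passing tests.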

To remove the virtual environment use the command:

./test/pytest_control.sh -r

This will simply remove the test/venv directory.

Linting is done using ShellCheck for the Bash scripts and PyLint for the Python code. The continuous integration jobs on GitHub run these linters as part of the PR validation process, so running them in advance will help shorten the code review cycle. PyLint can be run as part of the Python unit testing process using the command:

test/pytest_control.sh -l

Unfortunately, ShellCheck is not installable via PyPI. If you install ShellCheck locally, you can run it using the command:

find ./ -type f -regex '.*.sh$' -not -path './/test/venv/*' -exec shellcheck -s bash -e SC2162 -e SC2016 -e SC2126 {} +

ShellCheck is also installed inside the Docker image, so you can run it using the bind-mount approach described as method (3) in the Using Docker section. In that case, you would need to run:

docker run --rm -t --mount type=bind,source=${PWD},target=/WDTSscraper aperloff/wdtsscraper:latest /bin/bash -c "find ./ -type f -regex '.*.sh$' -not -path './/test/venv/*' -exec shellcheck -s bash -e SC2162 -e SC2016 -e SC2126 {} +"

Many thanks to the following users who've contributed ideas, features, and fixes:

- Michael Cook

- Adam Lyon

- Kevin Pedro