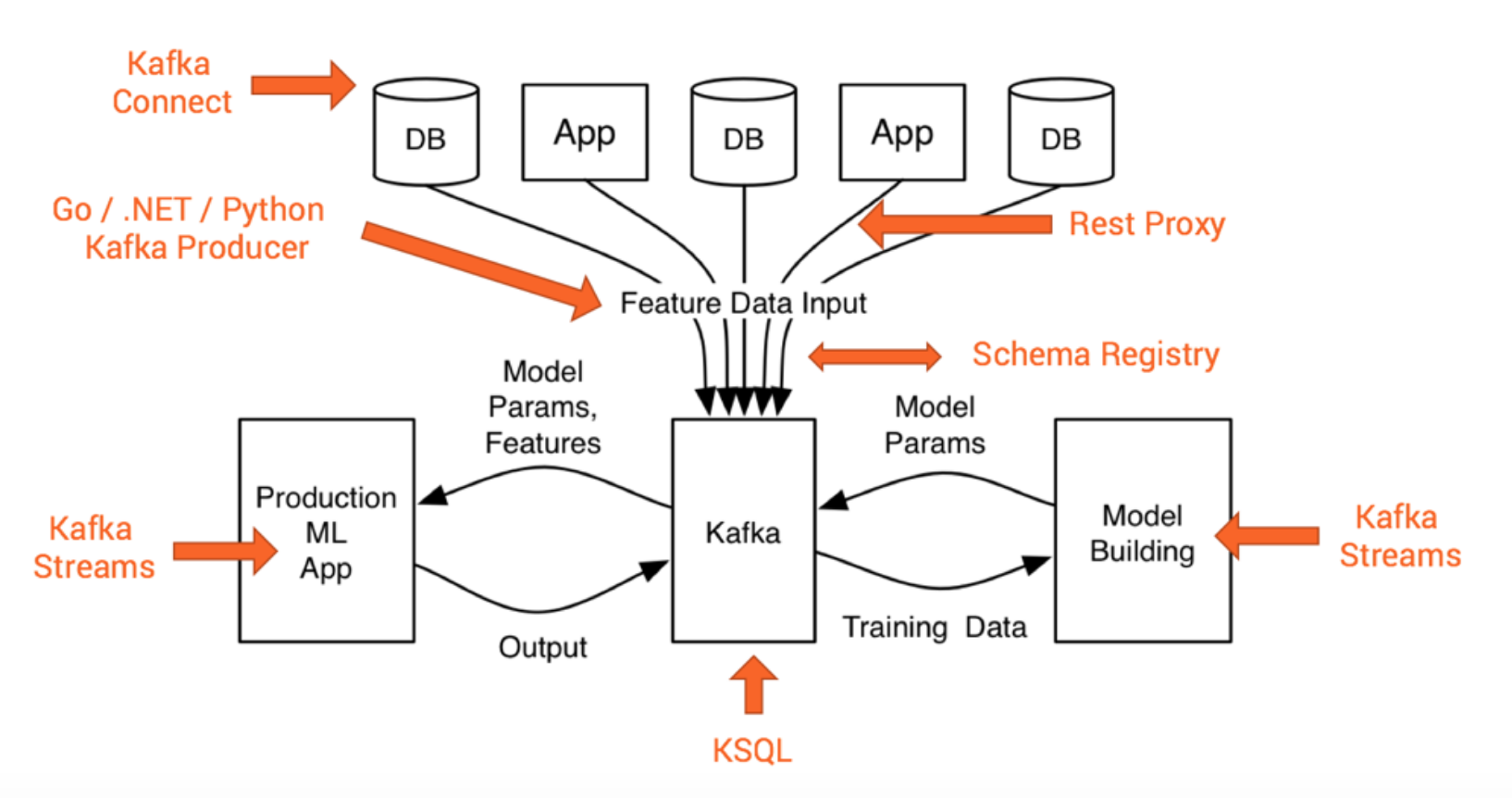

This project contains examples which demonstrate how to deploy analytic models to mission-critical, scalable production leveraging Apache Kafka and its Streams API. Examples will include analytic models built with TensorFlow, Keras, H2O, Python, DeepLearning4J and other technologies.

Here is some material about this topic if you want to read and listen to the theory instead of just doing hands-on:

- Blog Post: How to Build and Deploy Scalable Machine Learning in Production with Apache Kafka

- Slide Deck: Apache Kafka + Machine Learning => Intelligent Real Time Applications

- Slide Deck: Deep Learning at Extreme Scale (in the Cloud) with the Apache Kafka Open Source Ecosystem

- Video Recording: Deep Learning in Mission Critical and Scalable Real Time Applications with Open Source Frameworks

- Blog Post: Using Apache Kafka to Drive Cutting-Edge Machine Learning - Hybrid ML Architectures, AutoML, and more...

- Deployment of a H2O GBM model to a Kafka Streams application for prediction of flight delays

- Deployment of a H2O Deep Learning model to a Kafka Streams application for prediction of flight delays

- Deployment of a pre-built TensorFlow CNN model for image recognition

- Deployment of a DL4J model to predict the species of Iris flowers

- Deployment of a Keras model (trained with TensorFlow backend) using the Import Model API from DeepLearning4J

More sophisticated use cases around Kafka Streams and other technologies will be added over time in this or a related GitHub project. Some ideas:

- Image Recognition with H2O and TensorFlow (to show the difference of using H2O instead of using just low level TensorFlow APIs)

- Anomaly Detection with Autoencoders leveraging DeepLearning4J.

- Cross Selling and Customer Churn Detection using classical Machine Learning algorithms but also Deep Learning

- Stateful Stream Processing to combine different model execution steps into a more powerful workflow instead of "just" inferencing single events (a good example might be a streaming process with sliding or session windows).

- Keras to build different models with Python, TensorFlow, Theano and other Deep Learning frameworks under the hood + Kafka Streams as generic Machine Learning infrastructure to deploy, execute and monitor these different models.

- Deep Learning UDF for KSQL: Streaming Anomaly Detection of MQTT IoT Sensor Data using an Autoencoder

- End-to-End ML Integration Demo: Continuous Health Checks with Anomaly Detection using KSQL, Kafka Connect, Deep Learning and Elasticsearch

- TensorFlow Serving + gRPC + Kafka Streams on GitHub => Stream Processing and RPC / Request-Response concepts combined: Model inference with Apache Kafka, Kafka Streams and a TensorFlow model deployed on a TensorFlow Serving model server

The code is developed and tested on Mac and Linux operating systems. It has not been tested on Windows at all, because Kafka is not well supported there.

Java 8 and Maven 3 are required. Maven will download all required dependencies.

Just download the project and run

mvn clean package

Apache Kafka 2.1 is currently used. The code is also compatible with Kafka and Kafka Streams 1.1 and 2.0.

Please make sure to run the Maven build without any changes first. If it works without errors, you can change library versions, Java version, etc. and see if it still works or if you need to adjust code.

Every example includes an implementation and a unit test. The examples are very simple and lightweight; no further configuration is needed to build and run them. For this reason, the generated models are also included, which increases the download size of the project.

The unit tests use some Kafka helper classes like EmbeddedSingleNodeKafkaCluster in package com.github.megachucky.kafka.streams.machinelearning.test.utils so that you can run them without any other configuration or Kafka setup. If you want to run an implementation of a main class in package com.github.megachucky.kafka.streams.machinelearning, you need to start a Kafka cluster (with at least one ZooKeeper node and one Kafka broker running) and also create the required topics. So check out the unit tests first.

Use Case

Gradient Boosting Method (GBM) to predict flight delays. An H2O-generated GBM Java model (POJO) is instantiated and used in a Kafka Streams application to do inference on new events.

Machine Learning Technology

- H2O

- Check the H2O demo to understand the test and how the model was built

- You can re-use the generated Java model attached to this project (gbm_pojo_test.java) or build your own model using R, Python, Flow UI or any other technology supported by the H2O framework.

Source Code

MachineLearning_H2O_Example.java

Unit Test

MachineLearning_H2O_Example_IntegrationTest.java

Manual Testing

You can easily test this by yourself. Here are the steps:

- Start Kafka, e.g. with Confluent CLI:

      confluent start kafka

- Create topics AirlineInputTopic and AirlineOutputTopic:

      kafka-topics --zookeeper localhost:2181 --create --topic AirlineInputTopic --partitions 3 --replication-factor 1
      kafka-topics --zookeeper localhost:2181 --create --topic AirlineOutputTopic --partitions 3 --replication-factor 1

- Start the Kafka Streams app:

      java -cp target/kafka-streams-machine-learning-examples-1.0-SNAPSHOT-jar-with-dependencies.jar com.github.megachucky.kafka.streams.machinelearning.Kafka_Streams_MachineLearning_H2O_GBM_Example

- Send messages, e.g. with kafkacat:

      echo -e "1987,10,14,3,741,730,912,849,PS,1451,NA,91,79,NA,23,11,SAN,SFO,447,NA,NA,0,NA,0,NA,NA,NA,NA,NA,YES,YES" | kafkacat -b localhost:9092 -P -t AirlineInputTopic

- Consume predictions:

      kafka-console-consumer --bootstrap-server localhost:9092 --topic AirlineOutputTopic --from-beginning

Find more details in the unit test...
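To make the message format used in the manual test more tangible, here is a minimal sketch of how such a comma-separated airline record could be split into named fields before handing selected values to a model. The class name AirlineRecordParser is hypothetical, and the column names are an assumption based on the layout of the public airline on-time dataset (31 columns); verify them against the features your own model expects.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class AirlineRecordParser {

    // Assumed column layout (31 columns) of the airline on-time dataset.
    // These names are not taken from this project; adjust them to your model.
    public static final String[] COLUMNS = {
        "Year", "Month", "DayofMonth", "DayOfWeek", "DepTime", "CRSDepTime",
        "ArrTime", "CRSArrTime", "UniqueCarrier", "FlightNum", "TailNum",
        "ActualElapsedTime", "CRSElapsedTime", "AirTime", "ArrDelay", "DepDelay",
        "Origin", "Dest", "Distance", "TaxiIn", "TaxiOut", "Cancelled",
        "CancellationCode", "Diverted", "CarrierDelay", "WeatherDelay",
        "NASDelay", "SecurityDelay", "LateAircraftDelay", "IsArrDelayed",
        "IsDepDelayed"
    };

    // Splits one record into a column-name -> value map, keeping column order.
    public static Map<String, String> parse(String line) {
        // Limit -1 keeps trailing empty fields instead of dropping them.
        String[] values = line.split(",", -1);
        if (values.length != COLUMNS.length) {
            throw new IllegalArgumentException(
                "Expected " + COLUMNS.length + " fields but got " + values.length);
        }
        Map<String, String> row = new LinkedHashMap<>();
        for (int i = 0; i < COLUMNS.length; i++) {
            row.put(COLUMNS[i], values[i].trim());
        }
        return row;
    }

    public static void main(String[] args) {
        Map<String, String> row = parse(
            "1987,10,14,3,741,730,912,849,PS,1451,NA,91,79,NA,23,11,SAN,SFO,447,"
            + "NA,NA,0,NA,0,NA,NA,NA,NA,NA,YES,YES");
        System.out.println(row.get("Origin") + " -> " + row.get("Dest"));
    }
}
```

Rejecting records with an unexpected field count keeps malformed messages from silently shifting values into the wrong feature.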

H2O Deep Learning instead of H2O GBM Model

The project includes another example with similar code that uses an H2O Deep Learning model instead of the H2O GBM model: Kafka_Streams_MachineLearning_H2O_DeepLearning_Example_IntegrationTest.java. This shows how you can easily test or replace different analytic models for one use case, or even use them for A/B testing.
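As an illustration of the A/B testing idea, the following sketch routes each event to one of two models based on its key. It is not part of this project: the class ModelAbRouter, the percentage split and the lambda stand-ins for the two models are all hypothetical, and a real application would call the generated H2O models instead.

```java
import java.util.function.Function;

public class ModelAbRouter {

    private final Function<String, String> modelA;
    private final Function<String, String> modelB;
    private final int percentToB;

    // percentToB is the share of keys (0 to 100) that should be scored by model B.
    public ModelAbRouter(Function<String, String> modelA,
                         Function<String, String> modelB,
                         int percentToB) {
        if (percentToB < 0 || percentToB > 100) {
            throw new IllegalArgumentException("percentToB must be between 0 and 100");
        }
        this.modelA = modelA;
        this.modelB = modelB;
        this.percentToB = percentToB;
    }

    // Hash-based bucketing: the same key always lands in the same bucket,
    // so one entity is consistently scored by the same model.
    public boolean usesModelB(String key) {
        return Math.floorMod(key.hashCode(), 100) < percentToB;
    }

    public String predict(String key, String event) {
        return usesModelB(key) ? modelB.apply(event) : modelA.apply(event);
    }

    public static void main(String[] args) {
        // Placeholder models that only tag the event with the model name.
        Function<String, String> gbm = event -> "gbm:" + event;
        Function<String, String> deepLearning = event -> "dl:" + event;

        ModelAbRouter router = new ModelAbRouter(gbm, deepLearning, 20);
        System.out.println(router.predict("flight-1451", "some-event"));
    }
}
```

Bucketing by key rather than at random keeps the assignment stable across restarts, which makes the results of the two models easier to compare.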

Use Case

Convolutional Neural Network (CNN) for image recognition. A prebuilt TensorFlow CNN model is instantiated and used in a Kafka Streams application to recognize new JPEG images. A Kafka input topic receives the location of a new image (another option would be to send the image itself in the Kafka message instead of just a link to it). The application infers the content of the picture via the TensorFlow model and sends the result to a Kafka output topic.

Machine Learning Technology

- TensorFlow

- Leverages TensorFlow for Java. These APIs are particularly well-suited for loading models created in Python and executing them within a Java application. Please note: The Java API doesn't yet include convenience functions (which you might know from Keras), thus a private helper class is used in the example for construction and execution of the pre-built TensorFlow model.

- Check the official TensorFlow demo LabelImage to understand this image recognition example

- You can re-use the pre-trained TensorFlow model attached to this project tensorflow_inception_graph.pb or add your own model.

- The 'images' folder contains images which were used for training the model (trained_airplane_1.jpg, trained_airplane_2.jpg, trained_butterfly.jpg) but also a new picture (new_airplane.jpg) which is not known by the model and has a different resolution than the others. Feel free to add your own pictures. Their content needs to be among the trained classes (see the list in the file imagenet_comp_graph_label_strings.txt); otherwise the model will return 'unknown'.
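The last step of the image recognition flow, turning the model output into a readable label, can be sketched as follows. This assumes the model returns one probability per line of the label file, in the same order; the class LabelLookup and the three labels used in main are placeholders, not the contents of imagenet_comp_graph_label_strings.txt.

```java
import java.util.Arrays;
import java.util.List;

public class LabelLookup {

    // Returns the index of the highest probability (first one wins on ties).
    public static int maxIndex(float[] probabilities) {
        if (probabilities.length == 0) {
            throw new IllegalArgumentException("probabilities must not be empty");
        }
        int best = 0;
        for (int i = 1; i < probabilities.length; i++) {
            if (probabilities[i] > probabilities[best]) {
                best = i;
            }
        }
        return best;
    }

    // Maps the most likely class index to its label.
    public static String bestLabel(float[] probabilities, List<String> labels) {
        if (probabilities.length != labels.size()) {
            throw new IllegalArgumentException(
                "Got " + probabilities.length + " probabilities for "
                + labels.size() + " labels");
        }
        return labels.get(maxIndex(probabilities));
    }

    public static void main(String[] args) {
        // Placeholder labels and scores for illustration only.
        List<String> labels = Arrays.asList("airliner", "butterfly", "teapot");
        float[] probabilities = {0.85f, 0.10f, 0.05f};
        System.out.println("Best match: " + bestLabel(probabilities, labels));
    }
}
```

In a streaming application, the returned label (possibly together with its probability) would be the value written to the output topic.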

Source Code

Kafka_Streams_TensorFlow_Image_Recognition_Example.java

Unit Test

Kafka_Streams_TensorFlow_Image_Recognition_Example_IntegrationTest.java

Use Case

Iris Species Prediction using a Neural Network. This is a famous example: Prediction of the Iris Species, implemented with many different ML algorithms. Here I use DeepLearning4J (DL4J) to build a neural network using the Iris dataset.

Machine Learning Technology

- DeepLearning4J

- Pretty simple example to demo how to build, save and load neural networks with DL4J. MultiLayerNetwork and INDArray are the key APIs to look at if you want to understand the details.

- The model is created via DeepLearning4J_CSV_Model.java and stored in the resources: DL4J_Iris_Model.zip. No need to re-train, just for reference. Kudos to Adam Gibson who created this example as part of the DL4J project.

Unit Test

Kafka_Streams_MachineLearning_DL4J_DeepLearning_Iris_IntegrationTest.java

Use Case

Development of an analytic model trained with Python, Keras and TensorFlow and deployment to the Java and Kafka ecosystem. No business case, just a technical demonstration of a simple 'Hello World' Keras model. Feel free to replace the model with any other Keras model trained with your backend of choice. You just need to replace the model binary (and use a model which is compatible with DeepLearning4J's model importer).

Machine Learning Technology

- Python

- DeepLearning4J

- Keras - a high-level neural networks API, written in Python and capable of running on top of TensorFlow, CNTK, or Theano.

- TensorFlow - used as backend under the hood of Keras

- DeepLearning4J's KerasModelImport feature is used for importing the Keras / TensorFlow model into Java. The model used is its 'Hello World' model example.

- The Keras model was trained with this Python script.

Unit Test