CNN models trained to detect whether an image has been manipulated (deepfake).

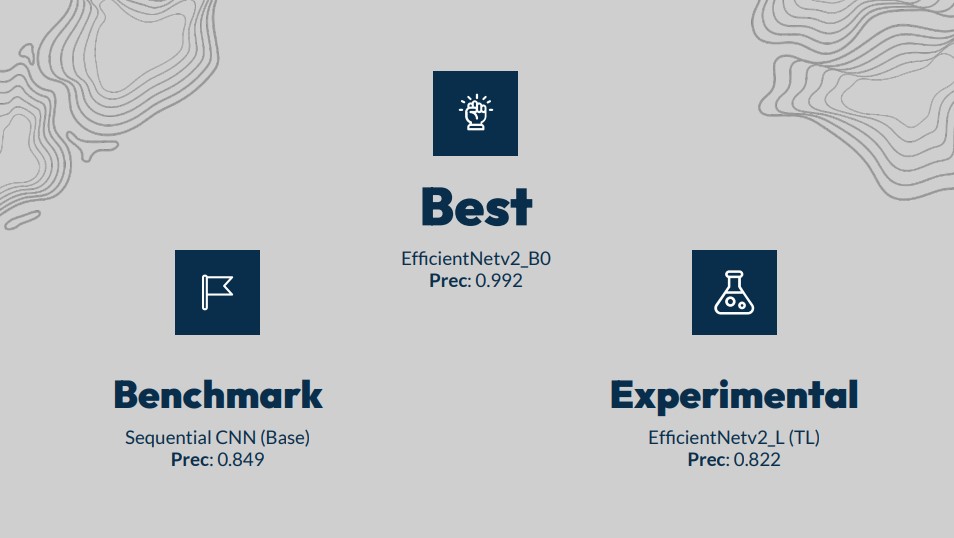

To combat the rising risks of accessible generative AI, in particular deepfakes, this project trains and compares 11 convolutional neural networks (CNNs) to build a strong deepfake detector. Data was taken from OpenForensics (an open-source dataset of labeled real and fake images), preprocessed, and used to train two types of CNN architectures: Sequential models and EfficientNet models. The best model, EfficientNet_v2B0 (located in the pretrained models folder), reached a validation accuracy of 0.965 and a validation precision of 0.992, so this project recommends it for distinguishing deepfakes from real photographs.

This project provides pretrained models as out-of-the-box solutions for business needs, saving users the time and compute of training their own!

Download the prerequisites:

pip install streamlit

pip install tensorflow

Clone the repo:

git clone https://github.com/cdenq/deepfake-image-detector.git

Navigate to the models folder and unzip the pretrained model:

cd deepfake-image-detector/code/PretrainedModel/

unzip dffnetv2B0.zip

Navigate to the streamlit folder:

cd streamlit_deepfake_detector

Activate the virtual environment into which you installed the prerequisites, then launch the app:

source activate your_envs

streamlit run multipage_app.py

You can now choose between the Detector and Game modes to upload images and check whether they are deepfakes!

NOTE: This detector was trained on real and altered photos of people, so it struggles with cartoons and drawn images! It performs best on photorealistic images.

The rapid evolution of generative artificial intelligence (GPAI, LLMs) has greatly increased the public's access to powerful, deceptive tools. One such concern is the growing prevalence of deepfake images, which pose a significant threat to public trust and undermine the epistemic integrity of visual media. These manipulated images can be used to spread false information, manipulate public opinion, and polarize communities, with serious consequences for both social and political discourse.

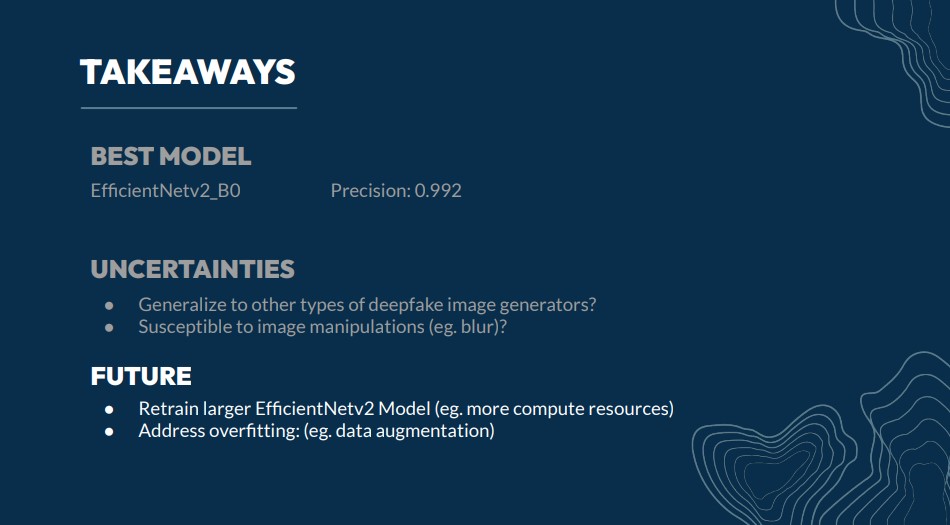

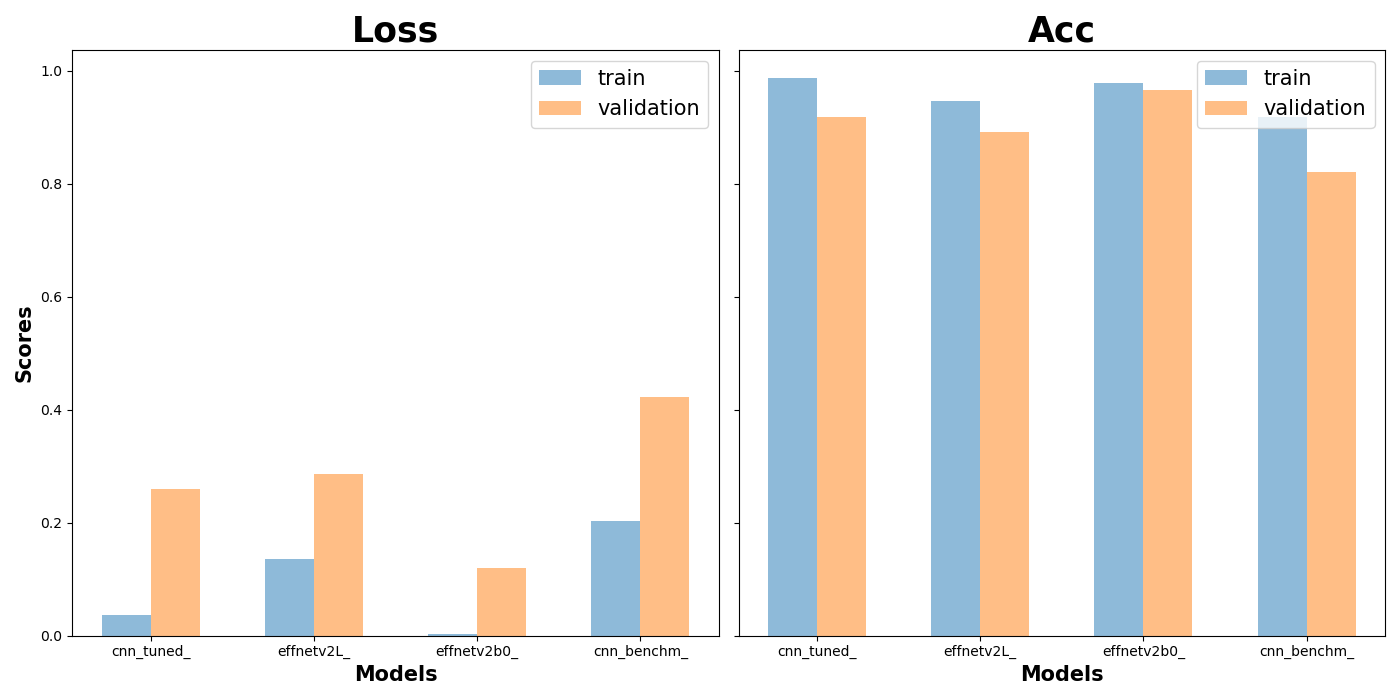

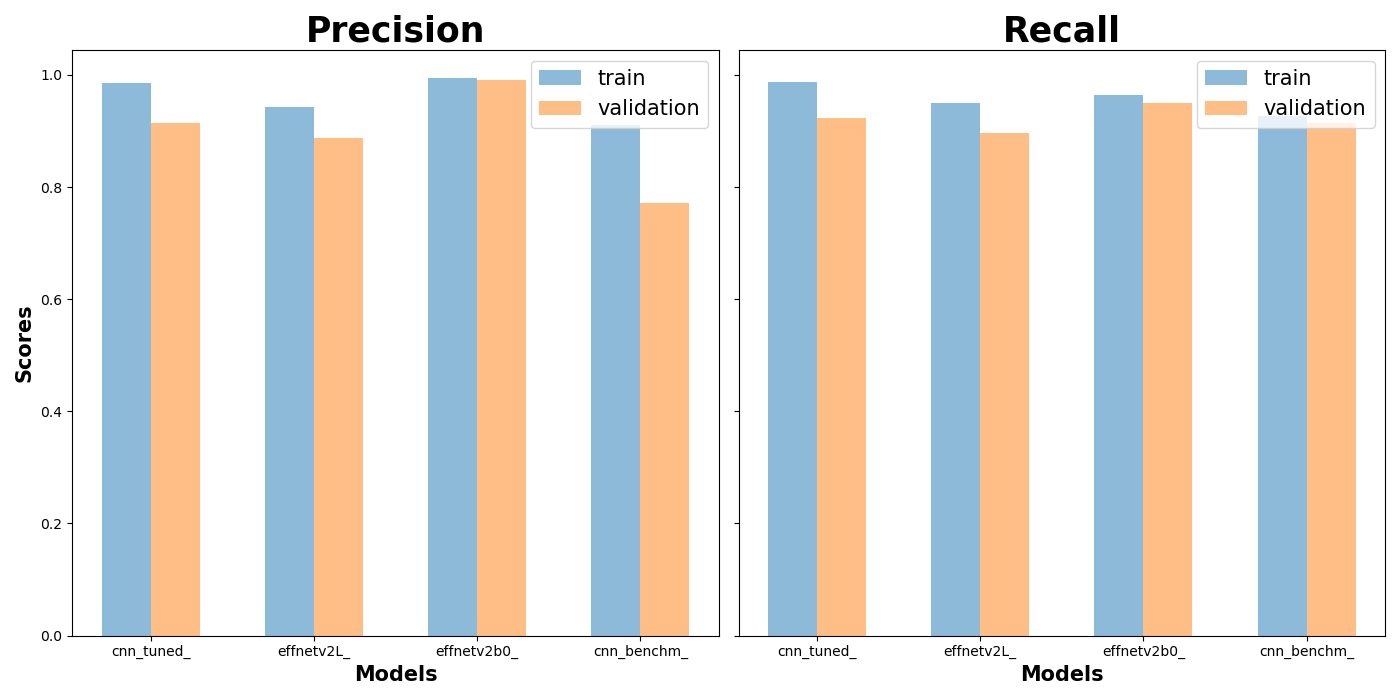

This project aims to mitigate these risks by developing a deep learning model that distinguishes deepfakes from real images. Models were evaluated primarily by validation precision: treating real images as the positive class, a false positive (a deepfake labeled as real) was judged the more serious error, and precision directly penalizes false positives.
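To make the metric choice concrete, here is a minimal Python sketch that computes accuracy and precision from binary labels. It assumes a hypothetical encoding of 1 = real (the positive class) and 0 = deepfake, which may differ from the project's code. Under that encoding, precision = TP / (TP + FP), so every deepfake passed off as real lowers the score.

```python
def accuracy_and_precision(y_true, y_pred, positive=1):
    """Return (accuracy, precision) for binary labels.

    Assumed (hypothetical) encoding: 1 = real (positive class), 0 = deepfake,
    so a false positive is a deepfake predicted as real.
    """
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equal in length")
    pairs = list(zip(y_true, y_pred))
    correct = sum(t == p for t, p in pairs)
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    accuracy = correct / len(pairs)
    # Precision is undefined when nothing is predicted positive; return 0.0 by convention.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    return accuracy, precision

# Toy labels, not project data: one deepfake is mistaken for a real image.
y_true = [1, 1, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0]
print(accuracy_and_precision(y_true, y_pred))  # (0.8, 0.6666666666666666)
```

The single false positive costs 20 points of accuracy but a third of the precision, which is why precision is the stricter measure of the error this project cares most about.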

Data was taken from OpenForensics, an open-source dataset introduced in the paper "Multi-Face Forgery Detection And Segmentation In-The-Wild Dataset" by Trung-Nghia Le et al. Little cleaning was needed beyond splitting the data with train_test_split.
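A split like this can be sketched with scikit-learn's `train_test_split`, assuming that is the function referred to above. The file names, the 80/20 ratio, and the seed below are hypothetical; stratifying on the labels keeps the real/fake ratio the same in both subsets.

```python
from sklearn.model_selection import train_test_split

# Hypothetical file list and labels (1 = real, 0 = deepfake); in practice these
# would be gathered from the OpenForensics image folders.
paths = [f"img_{i:03d}.jpg" for i in range(100)]
labels = [i % 2 for i in range(100)]

train_paths, val_paths, train_labels, val_labels = train_test_split(
    paths,
    labels,
    test_size=0.2,     # assumed 80/20 split; the project's ratio is not stated
    stratify=labels,   # preserve the real/fake ratio in both subsets
    random_state=42,   # fixed seed so the split is reproducible
)
print(len(train_paths), len(val_paths))  # 80 20
```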

Preprocessing consisted of resizing images to (256, 256) and rescaling pixel values, both done inside the model's layers. For some models, this project also applied data augmentation (rotation, flipping, etc.), likewise implemented as layers.
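Because resizing, rescaling, and augmentation live inside the model, a Keras preprocessing stack of this kind might look like the sketch below. The helper name `build_preprocessing`, the horizontal flip mode, and the rotation factor are hypothetical choices, not taken from the project's code.

```python
import numpy as np
import tensorflow as tf

IMG_SIZE = (256, 256)

def build_preprocessing(augment: bool = False, rescale: bool = True) -> tf.keras.Sequential:
    """Resize, and optionally rescale and augment, images inside the model graph."""
    layers = [tf.keras.layers.Resizing(*IMG_SIZE)]
    if rescale:
        # Map pixel values from [0, 255] to [0, 1].
        layers.append(tf.keras.layers.Rescaling(1.0 / 255))
    if augment:
        # Random layers only transform inputs when called with training=True.
        layers += [
            tf.keras.layers.RandomFlip("horizontal"),
            tf.keras.layers.RandomRotation(0.1),  # up to +/-10% of a full turn (36 degrees)
        ]
    return tf.keras.Sequential(layers)

# A fake batch of two 300x400 RGB images with values in [0, 255].
batch = np.random.randint(0, 256, size=(2, 300, 400, 3)).astype("float32")
out = build_preprocessing(augment=True)(batch, training=True)
print(out.shape)  # (2, 256, 256, 3)
```

One caveat: Keras's EfficientNetV2 application models rescale their inputs internally by default (`include_preprocessing=True`) and expect values in [0, 255], so for those models `rescale=False` would likely be the right setting.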

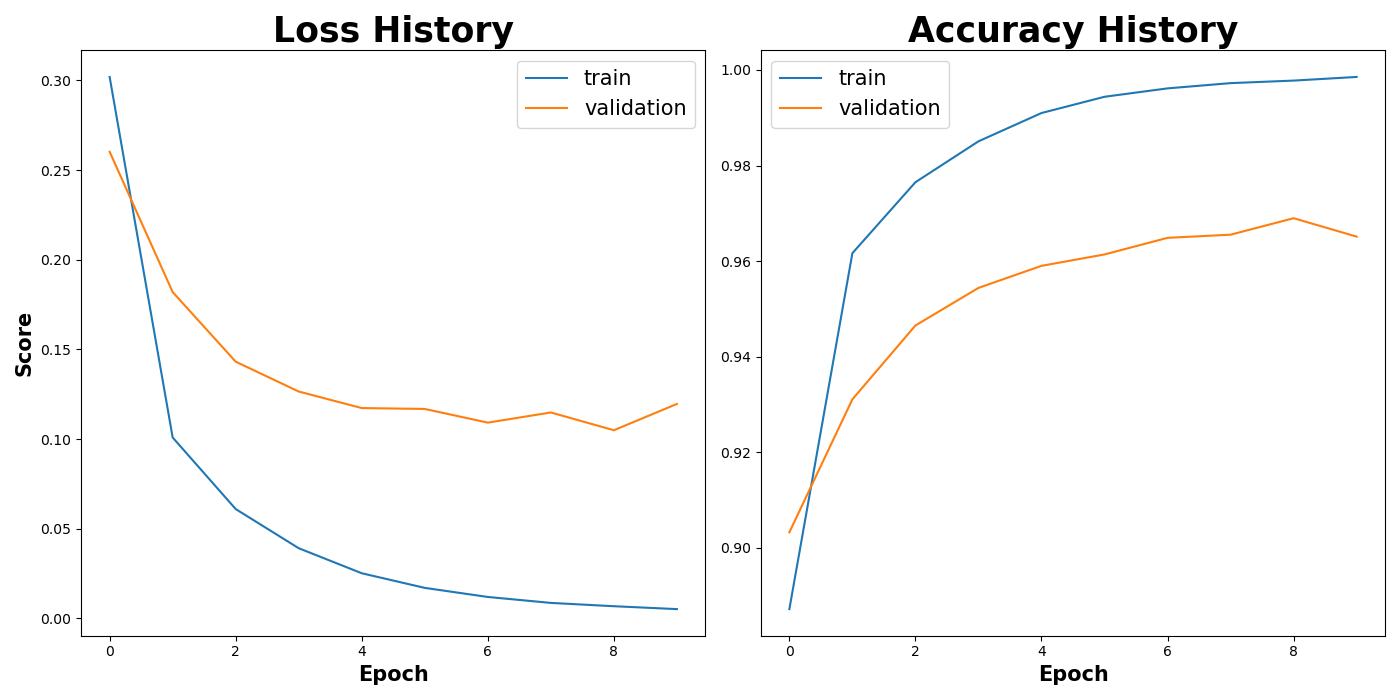

A total of 11 CNNs were trained across local and Google Colab instances. The results were aggregated and compared, and a subset was selected as the main models shown in this repo.
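The 11 CNNs belong to the two architecture families named in the overview, Sequential models and EfficientNet models. The following hedged Keras sketch builds one binary real/fake classifier of each kind. The layer sizes, the frozen backbone, the optimizer, and the helper names are assumptions for illustration, not the project's actual configurations.

```python
import numpy as np
import tensorflow as tf

INPUT_SHAPE = (256, 256, 3)

def build_sequential_cnn() -> tf.keras.Model:
    """A small from-scratch CNN (hypothetical layer sizes)."""
    return tf.keras.Sequential([
        tf.keras.Input(shape=INPUT_SHAPE),
        tf.keras.layers.Rescaling(1.0 / 255),
        tf.keras.layers.Conv2D(32, 3, activation="relu"),
        tf.keras.layers.MaxPooling2D(),
        tf.keras.layers.Conv2D(64, 3, activation="relu"),
        tf.keras.layers.MaxPooling2D(),
        tf.keras.layers.GlobalAveragePooling2D(),
        tf.keras.layers.Dense(1, activation="sigmoid"),  # probability of the positive class
    ])

def build_efficientnet(weights="imagenet") -> tf.keras.Model:
    """EfficientNetV2B0 backbone with a binary classification head."""
    backbone = tf.keras.applications.EfficientNetV2B0(
        include_top=False,
        weights=weights,
        input_shape=INPUT_SHAPE,
        include_preprocessing=True,  # the backbone rescales [0, 255] inputs itself
    )
    backbone.trainable = False  # assumption: freeze the backbone, train only the head
    inputs = tf.keras.Input(shape=INPUT_SHAPE)
    x = backbone(inputs, training=False)
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    outputs = tf.keras.layers.Dense(1, activation="sigmoid")(x)
    return tf.keras.Model(inputs, outputs)

def compile_model(model: tf.keras.Model) -> tf.keras.Model:
    """Binary cross-entropy loss, tracking accuracy and precision."""
    model.compile(
        optimizer="adam",
        loss="binary_crossentropy",
        metrics=["accuracy", tf.keras.metrics.Precision(name="precision")],
    )
    return model

# weights=None avoids downloading ImageNet weights for this quick shape check.
model = compile_model(build_efficientnet(weights=None))
print(model(np.zeros((1, *INPUT_SHAPE), dtype="float32")).shape)  # (1, 1)
```

Training would then be a call such as `model.fit(train_ds, validation_data=val_ds, epochs=10)`, where `train_ds` and `val_ds` are hypothetical `tf.data` datasets of 256 × 256 images and the epoch count is arbitrary. Tracking `Precision` as a metric makes validation precision directly comparable across runs.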

Based on these findings, this project recommends the EfficientNet_v2B0 model, the best-performing model. This out-of-the-box solution achieved the highest scores: a validation accuracy of 0.965 and a validation precision of 0.992.
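Because the models were ranked primarily by validation precision, picking the recommended model from a set of training runs can be sketched as below. `RunResult` and `pick_best` are hypothetical names, and breaking ties on validation accuracy is an assumption. The numbers in the usage lines are toy values, not this project's results.

```python
from typing import NamedTuple, Sequence

class RunResult(NamedTuple):
    name: str
    val_accuracy: float
    val_precision: float

def pick_best(results: Sequence[RunResult]) -> RunResult:
    """Return the run with the highest validation precision, breaking ties on accuracy."""
    if not results:
        raise ValueError("no results to compare")
    return max(results, key=lambda r: (r.val_precision, r.val_accuracy))

# Toy numbers for illustration only, not results from this project.
runs = [
    RunResult("model_a", 0.90, 0.95),
    RunResult("model_b", 0.92, 0.95),
    RunResult("model_c", 0.94, 0.93),
]
print(pick_best(runs).name)  # model_b
```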

The training data contained no alterations beyond the stock deepfake images (e.g., the training set included no color tints, high contrast, or blur), whereas the test data did. Including such alterations in the data augmentation step could therefore improve performance. Likewise, longer training and more compute could produce more robust models.
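As a hedged illustration of that suggestion, the NumPy sketch below applies the kinds of alterations found in the test data (color tints, contrast changes, blur) as random augmentations. The function names, probabilities, and parameter ranges are hypothetical and not part of the project. It assumes float images in [0, 1] with shape (height, width, 3).

```python
import numpy as np

def color_tint(img, strength, rng):
    """Add a random per-channel offset (a color cast) of up to +/-strength."""
    shift = rng.uniform(-strength, strength, size=(1, 1, 3))
    return np.clip(img + shift, 0.0, 1.0)

def adjust_contrast(img, factor):
    """Scale each channel's deviation from its mean; factor > 1 raises contrast."""
    mean = img.mean(axis=(0, 1), keepdims=True)
    return np.clip((img - mean) * factor + mean, 0.0, 1.0)

def box_blur(img, k=3):
    """Average each pixel over a k x k window (odd k; edges padded by reflection)."""
    pad = k // 2
    padded = np.pad(img, ((pad, pad), (pad, pad), (0, 0)), mode="reflect")
    h, w = img.shape[:2]
    out = np.zeros(img.shape, dtype=np.float64)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)

def augment(img, rng):
    """Apply tint, contrast, and blur, each independently with probability 0.5."""
    if rng.random() < 0.5:
        img = color_tint(img, 0.1, rng)
    if rng.random() < 0.5:
        img = adjust_contrast(img, rng.uniform(0.7, 1.5))
    if rng.random() < 0.5:
        img = box_blur(img, k=3)
    return img

rng = np.random.default_rng(0)
image = rng.random((256, 256, 3))  # stand-in for a real image scaled to [0, 1]
augmented = augment(image, rng)
print(augmented.shape)  # (256, 256, 3)
```

In a Keras pipeline, `tf.keras.layers.RandomContrast` covers the contrast case as a built-in layer; the tint and blur steps would need custom code along the lines of the functions above.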

If you wish to contact me, Christopher Denq, please reach out via LinkedIn.

If you're curious about more projects, check out my website or GitHub.