This repository contains the code for the "Audio Scene Classification" project. The project uses audio recorded in the surrounding environment to classify the sound sources in a scene (for example, a car horn or a dog barking) without any visual input.

PROJECT STATUS: Ongoing

To resample WAVE audio files from 44.1 kHz or 48 kHz to 16 kHz PCM WAVE files, run the following commands from the folder that contains the audio files (replace `path_to_destination_folder` with your output folder):

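```bash
mkdir -p path_to_destination_folder
for f in *.wav; do
    # Resample to 16 kHz; quoting "$f" keeps file names with spaces intact
    ffmpeg -i "$f" -ar 16000 "path_to_destination_folder/$f"
done
```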
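The same conversion can also be scripted from Python, for example as one step of a preprocessing pipeline. This is a minimal sketch that assumes `ffmpeg` is on the `PATH`; the helper names `build_ffmpeg_command` and `resample_folder` are hypothetical and not part of this repository.

```python
import subprocess
from pathlib import Path
from typing import List


def build_ffmpeg_command(src: Path, dst_dir: Path, rate: int = 16000) -> List[str]:
    """Return the ffmpeg argument list that resamples `src` to `rate` Hz."""
    # Passing a list (not a shell string) avoids quoting problems with spaces
    return ["ffmpeg", "-i", str(src), "-ar", str(rate), str(dst_dir / src.name)]


def resample_folder(src_dir: Path, dst_dir: Path, rate: int = 16000) -> None:
    """Resample every .wav file in `src_dir` into `dst_dir` (hypothetical helper)."""
    dst_dir.mkdir(parents=True, exist_ok=True)
    for wav in sorted(src_dir.glob("*.wav")):
        # check=True raises CalledProcessError if ffmpeg fails on a file
        subprocess.run(build_ffmpeg_command(wav, dst_dir, rate), check=True)
```

For example, `resample_folder(Path("audio"), Path("audio_16k"))` would write 16 kHz copies of every `.wav` file in `audio` to `audio_16k` (both folder names are placeholders).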

The project requires the following software:

1. Python 3.6

2. Librosa 0.6 [Audio Processing Library]

   `pip3 install librosa --upgrade`

3. Matplotlib

   `pip3 install matplotlib --upgrade`

4. Keras

   `pip3 install keras --upgrade`

5. TensorFlow

   `pip3 install tensorflow --upgrade`

   or

   `pip3 install tensorflow-gpu --upgrade`

   NOTE: The GPU build of TensorFlow also requires NVIDIA CUDA and cuDNN.

6. Pickle (part of the Python standard library; no installation needed)

7. TQDM [for progress bars]

   `pip3 install tqdm --upgrade`
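Once the packages above are installed, a quick check can confirm that Python can find them. This is a minimal standard-library sketch; the module list is an assumption based on the packages named above (`tensorflow-gpu` is still imported as `tensorflow`), and `check_modules` is a hypothetical helper.

```python
import importlib.util
from typing import Dict, Iterable

# Import names of the dependencies listed above (pickle ships with Python)
REQUIRED_MODULES = ("librosa", "matplotlib", "keras", "tensorflow", "pickle", "tqdm")


def check_modules(names: Iterable[str] = REQUIRED_MODULES) -> Dict[str, bool]:
    """Map each module name to whether it can be found, without importing it."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, found in check_modules().items():
        print(f"{name:12s} {'OK' if found else 'MISSING'}")
```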

The dataset I am using for this project is the "UrbanSound dataset".

Download the dataset from the link below and place it inside the `dataset` folder.

https://serv.cusp.nyu.edu/projects/urbansounddataset/
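If you use the UrbanSound8K variant from that page, each file name encodes its class as `<fsID>-<classID>-<occurrenceID>-<sliceID>.wav`, so labels can be recovered from the path alone. The sketch below assumes that naming scheme and UrbanSound8K's published class IDs; `label_from_filename` is a hypothetical helper, and the original UrbanSound release names its files differently.

```python
from pathlib import Path

# Class IDs 0-9 as documented for UrbanSound8K (assumption: that variant is used)
URBANSOUND8K_CLASSES = (
    "air_conditioner", "car_horn", "children_playing", "dog_bark", "drilling",
    "engine_idling", "gun_shot", "jackhammer", "siren", "street_music",
)


def label_from_filename(path: str) -> str:
    """Return the class name encoded in an UrbanSound8K file name."""
    # Expected stem: "<fsID>-<classID>-<occurrenceID>-<sliceID>"
    parts = Path(path).stem.split("-")
    if len(parts) != 4:
        raise ValueError(f"unexpected UrbanSound8K file name: {path}")
    class_id = int(parts[1])
    if not 0 <= class_id < len(URBANSOUND8K_CLASSES):
        raise ValueError(f"class ID out of range in: {path}")
    return URBANSOUND8K_CLASSES[class_id]
```

For example, `label_from_filename("dataset/fold1/100032-3-0-0.wav")` returns `"dog_bark"`.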

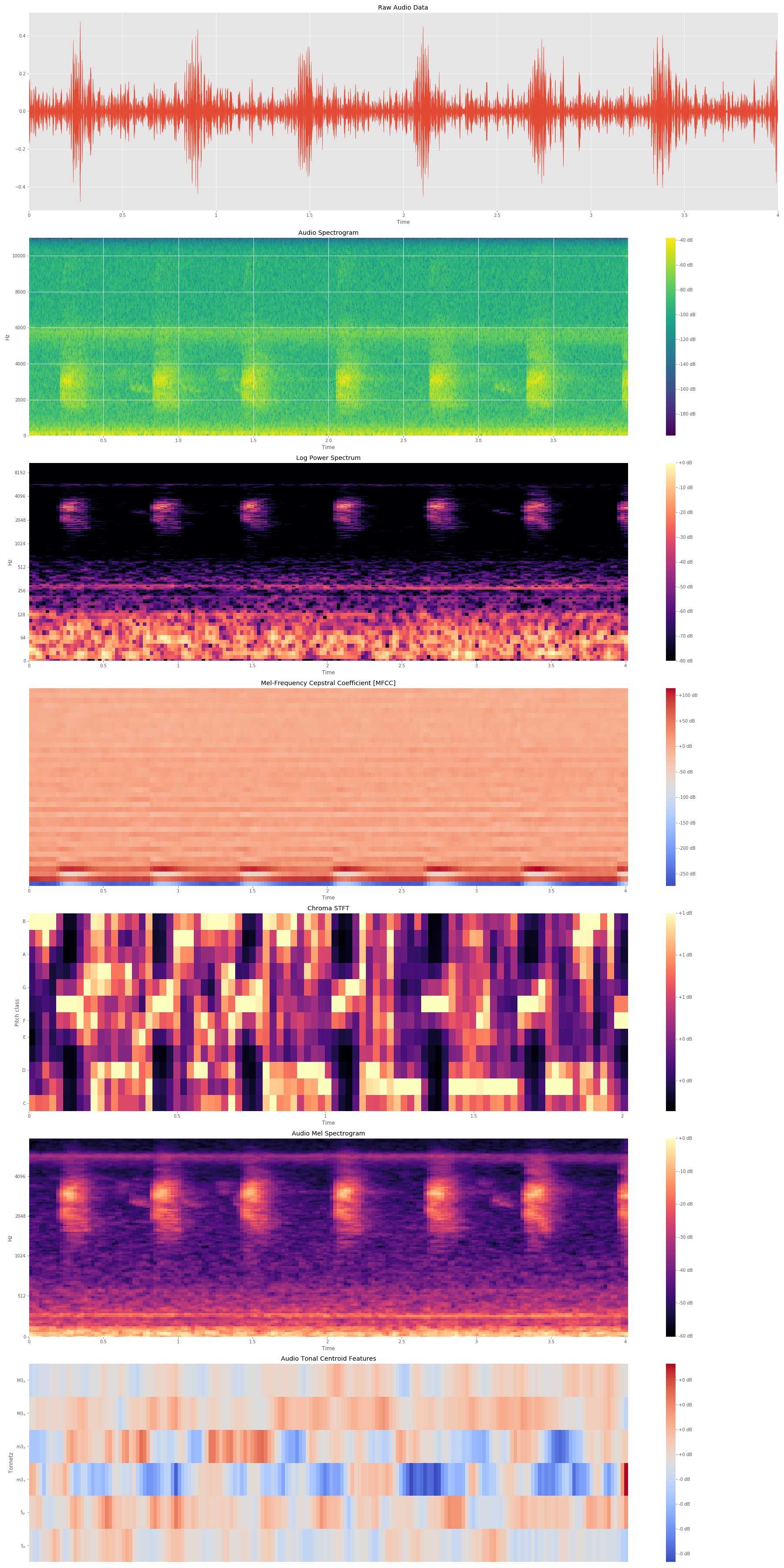

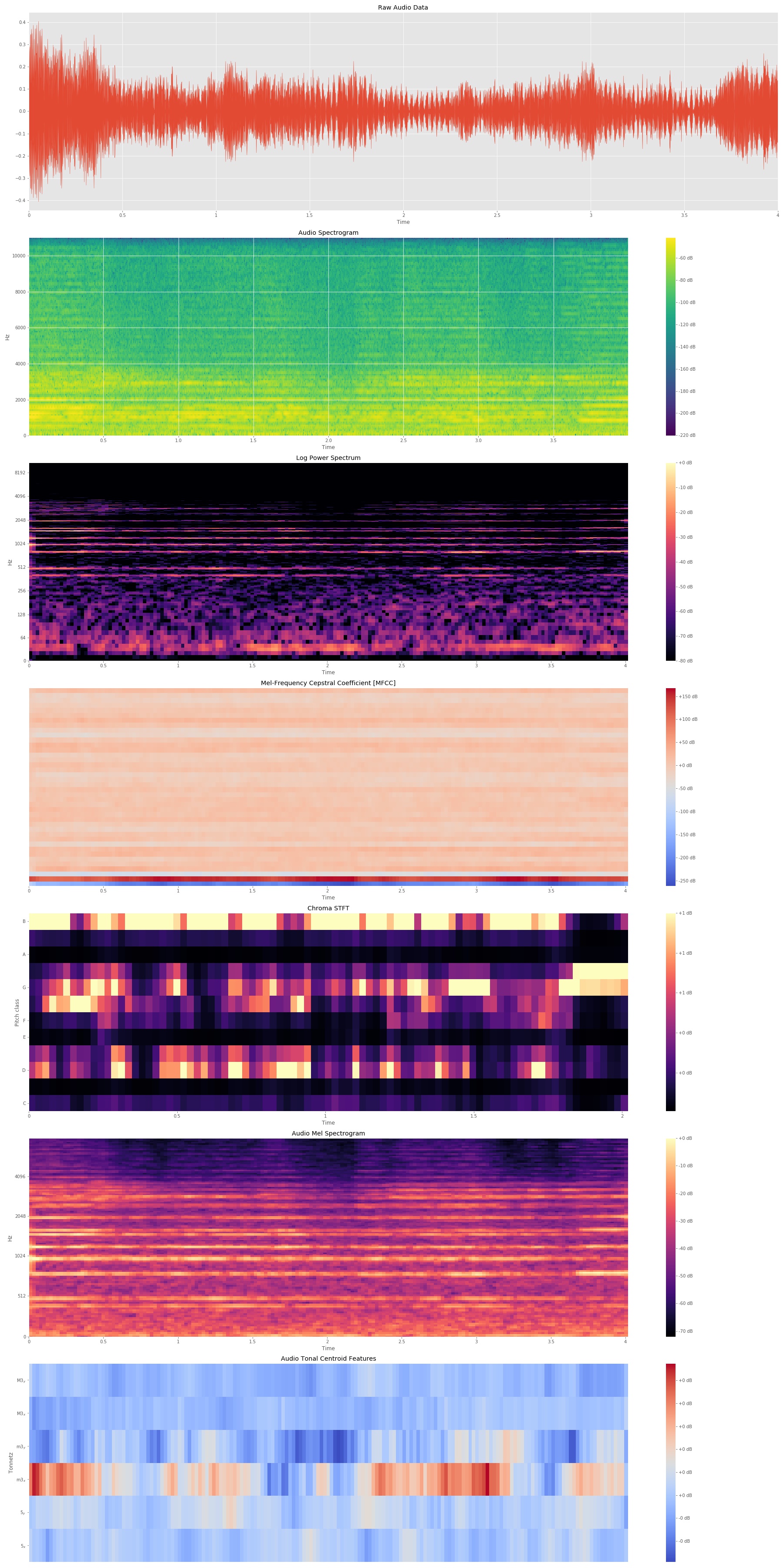

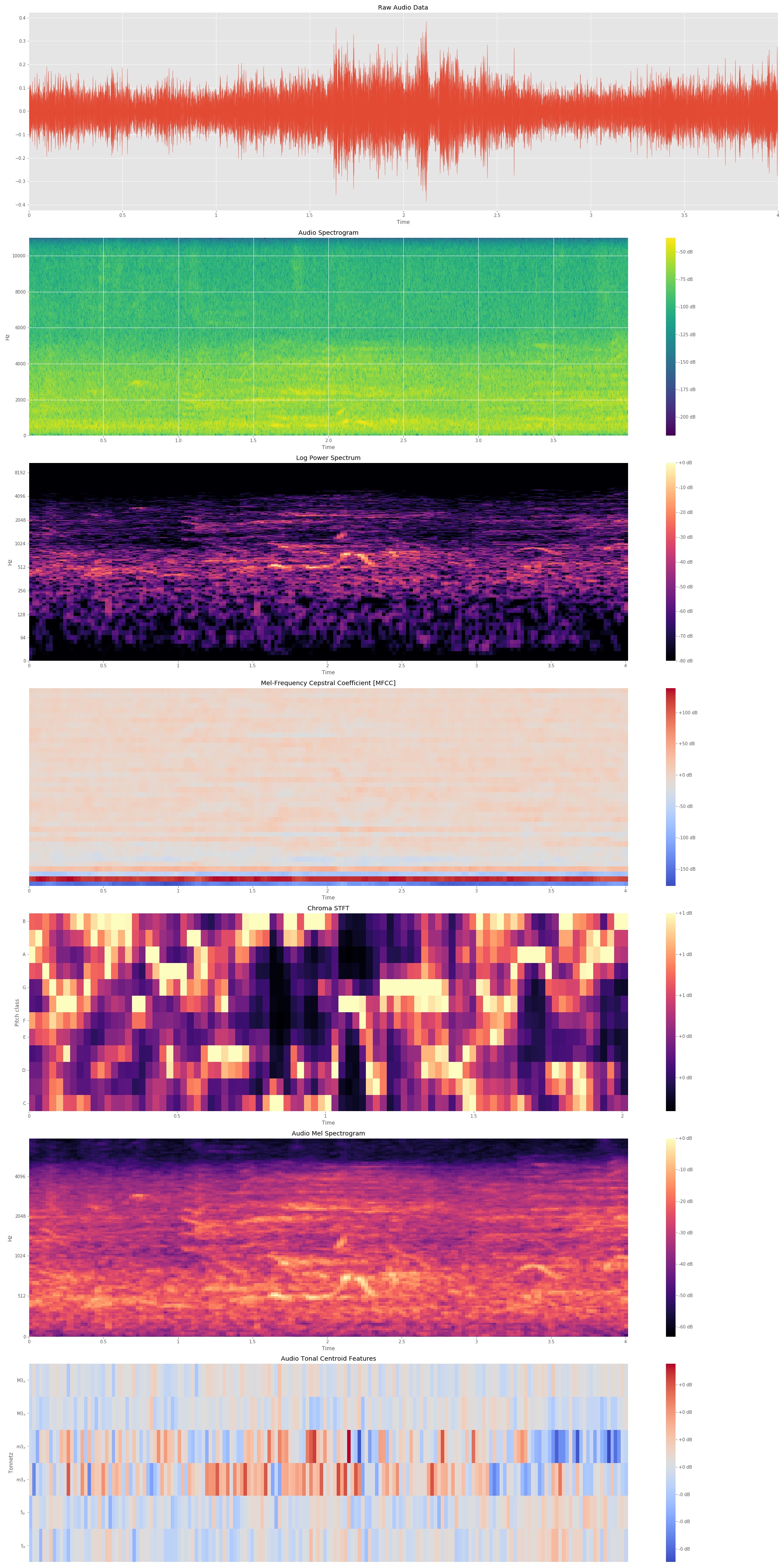

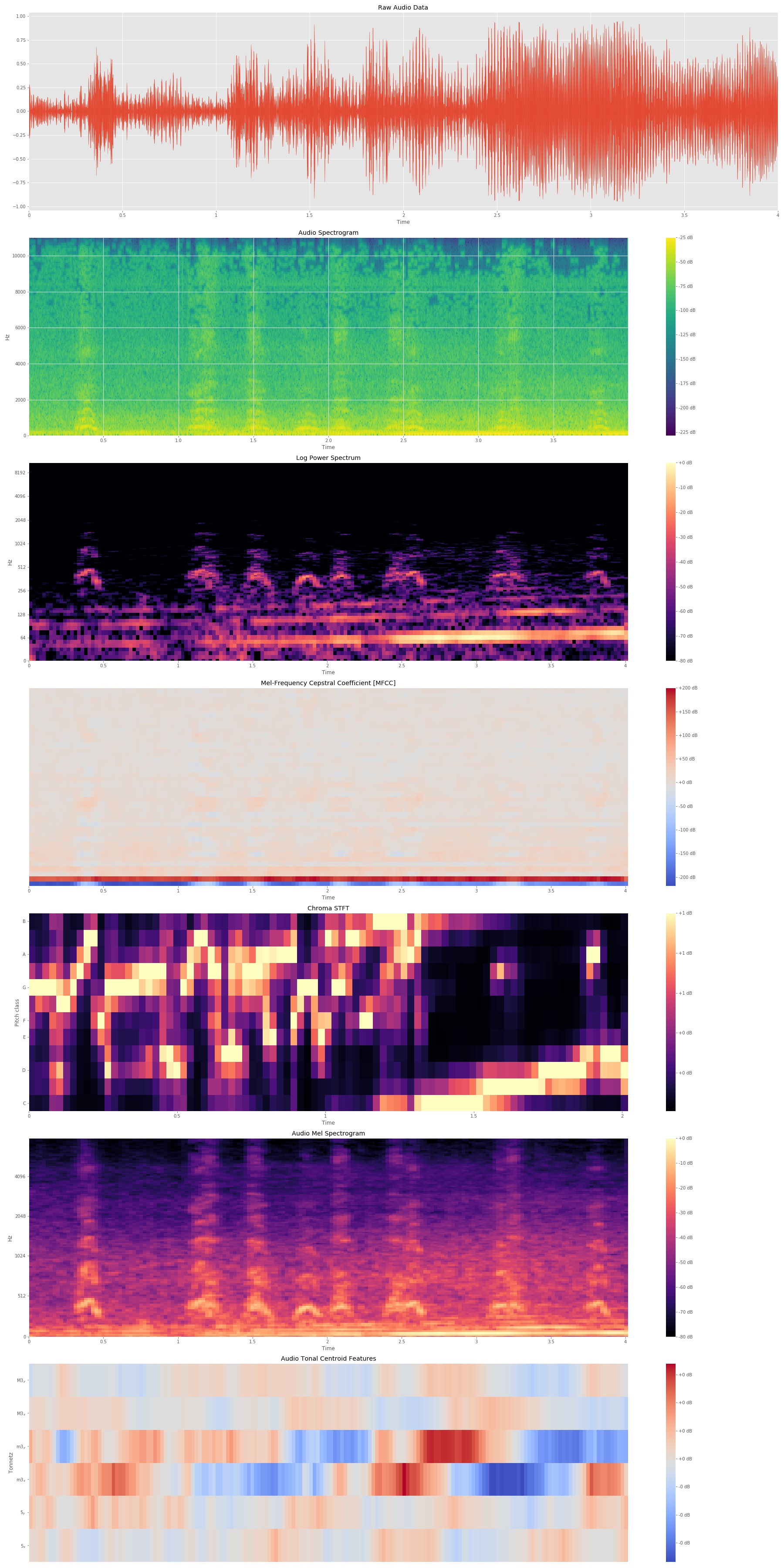

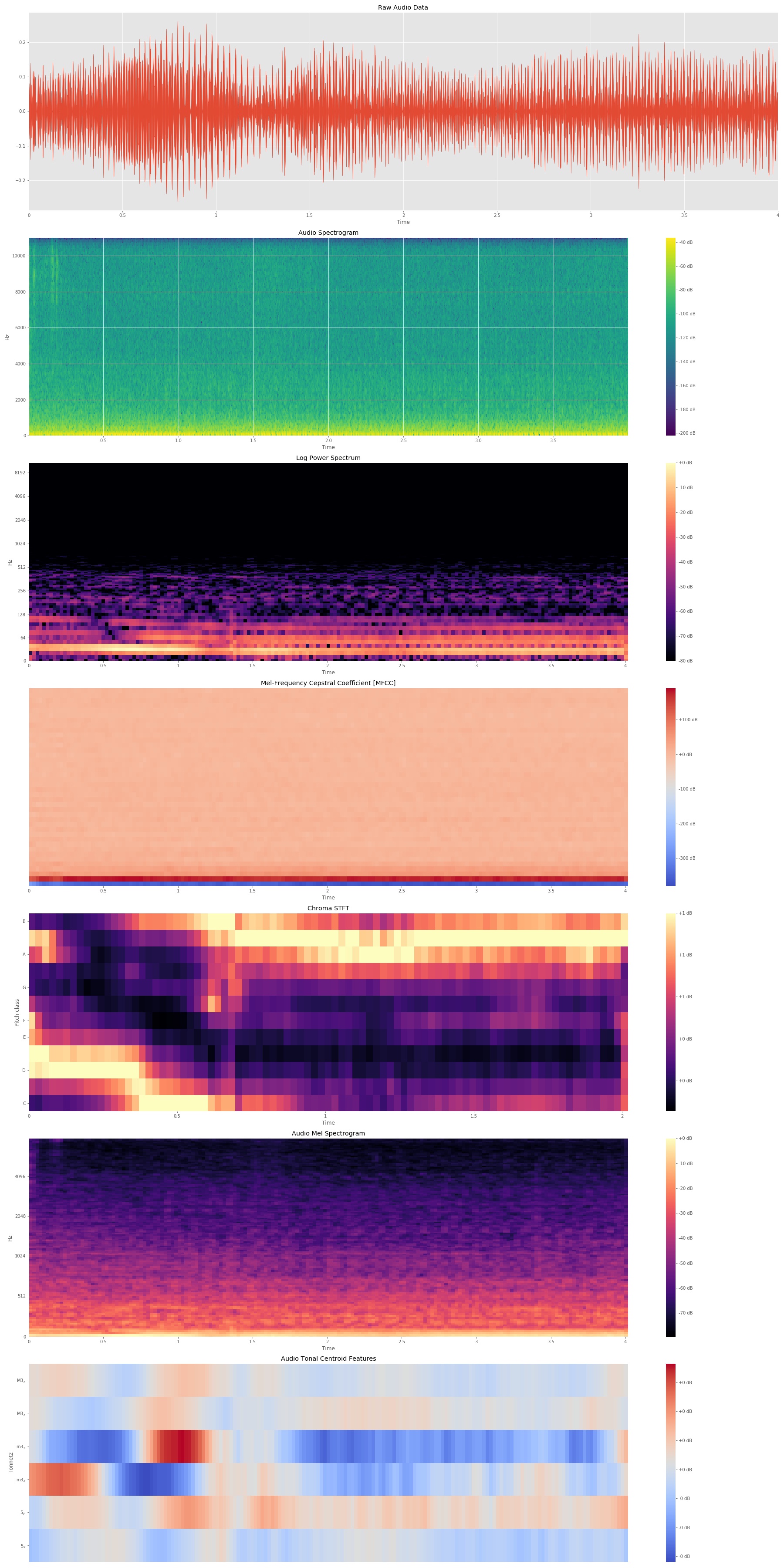

The main extracted features from the audio are:

a). Mel Spectrogram: Mel-scaled Power Spectrogram

b). MFCC: Mel-Frequency Cepstral Coefficients

c). Chroma STFT: a chromagram (energy in each of the 12 pitch classes) computed from a waveform or power spectrogram

d). Spectral Contrast: the difference in energy between spectral peaks and valleys in each frequency sub-band

e). Tonnetz: tonal centroid features

The following are the extracted features for some of the audio files:

1. Air Conditioner Audio Features

2. Car Horn Audio Features

3. Children Playing Audio Features

4. Dog Barking Audio Features

5. Idle Engine Audio Features