A model to generate a caption for any image in natural language (English). The model architecture is inspired by Vinyals et al. [1], and the module is built with Keras, the deep learning library.

This repository serves two purposes:

- present and discuss my model and the results I obtained

- provide a simple architecture for image captioning to the community

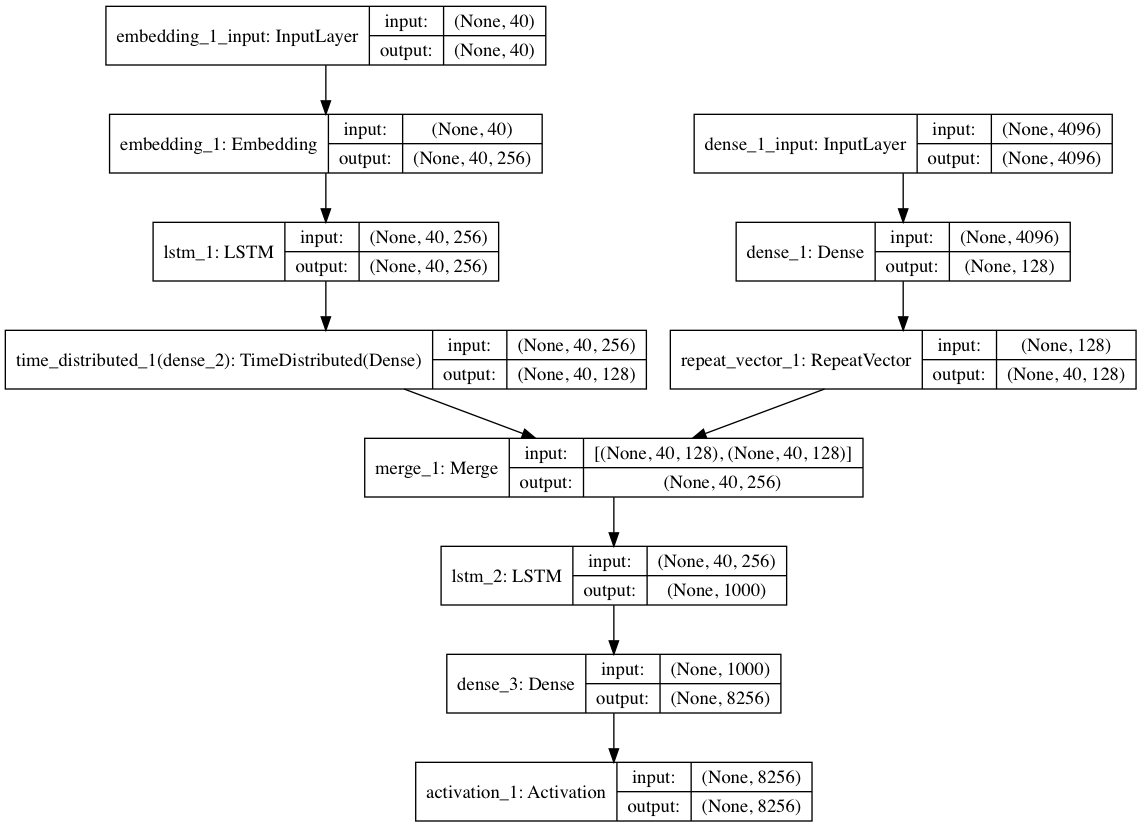

The image captioning model has been implemented using the Sequential API of Keras. It consists of three components:

- An encoder CNN model: A pre-trained CNN is used to encode an image into its features. In this implementation, the VGG16 model [d] is used as the encoder, with its pre-trained weights loaded. The final softmax layer of VGG16 is removed, and a feature vector of dimension (4096,) is taken from the second-to-last layer.

  To speed up training, I pre-encoded each image into its feature vector. This is done in the `prepare_data.py` file, which produces the pickle file `encoded_images.p`. In the current version, the image model takes the (4096,)-dimensional encoded image vector as input. This can be overridden by uncommenting the VGG model lines in `caption_generator.py`. The current version does not fine-tune VGG16, but fine-tuning could be implemented.

- A word embedding model: Since the number of unique words can be large, one-hot encoding the words is not a good idea. Instead, an embedding model is trained that takes a word and outputs an embedding vector of dimension (1, 128).

  Pre-trained word embeddings can also be used.

- A decoder RNN model: An LSTM network is employed to generate captions. At each timestep, it takes the image vector and the partial caption as input and outputs the next most probable word.
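To make the decoder's input concrete, here is one common way to expand a single caption into (partial caption, next word) training pairs, the kind of data a batch generator such as the one in `caption_generator.py` would feed the LSTM alongside the (4096,) image vector. This is a minimal sketch under assumptions: the `<start>`/`<end>` tokens, the helper names `build_word_index` and `caption_to_pairs`, whitespace tokenization, and right-padding with 0 are hypothetical choices, not necessarily what this repository does.

```python
from typing import Dict, List, Tuple

# Hypothetical boundary tokens; the repository may use different markers.
START, END = "<start>", "<end>"


def build_word_index(captions: List[str]) -> Dict[str, int]:
    """Map every word to an integer id, reserving 0 for padding."""
    vocab = sorted({w for c in captions for w in c.lower().split()} | {START, END})
    return {word: i + 1 for i, word in enumerate(vocab)}


def caption_to_pairs(caption: str, word_index: Dict[str, int],
                     max_len: int) -> List[Tuple[List[int], int]]:
    """Expand one caption into (padded partial caption, next word id) pairs."""
    ids = [word_index[w] for w in [START] + caption.lower().split() + [END]]
    if len(ids) - 1 > max_len:
        raise ValueError("caption is longer than max_len")
    pairs = []
    for t in range(1, len(ids)):
        partial = ids[:t]  # words seen so far, beginning with START
        padded = partial + [0] * (max_len - len(partial))  # right-pad with 0
        pairs.append((padded, ids[t]))  # target: the word that comes next
    return pairs


# Each pair would later be combined with the same (4096,) image vector.
word_index = build_word_index(["A dog runs"])
for partial, target in caption_to_pairs("A dog runs", word_index, max_len=6):
    print(partial, "->", target)
```

A caption of n words yields n + 1 pairs, which is why training data grows quickly and is usually produced by a generator rather than held in memory all at once.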

The overall architecture of the model is shown in the following picture, which also gives the input and output dimensions of each layer.

The model has been trained and tested on the Flickr8k dataset [2]. Many other datasets can be used as well, such as:

- Flickr30k

- MS COCO

- SBU

- Pascal

The model has been trained for 20 epochs on 6000 training samples of the Flickr8k dataset. It achieves a BLEU-1 score of ~0.59 on 1000 test samples.
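BLEU-1 is the clipped unigram precision of a generated caption against its reference captions, scaled by a brevity penalty. The following is a minimal sentence-level sketch of that definition, using a hypothetical `bleu1` helper. The reported ~0.59 may have been computed differently (for example at corpus level, where counts are pooled over all 1000 test captions, or with a library implementation), so this is for intuition rather than for reproducing the number.

```python
import math
from collections import Counter
from typing import List


def bleu1(candidate: List[str], references: List[List[str]]) -> float:
    """Sentence-level BLEU-1: clipped unigram precision times a brevity penalty."""
    if not candidate or not references:
        return 0.0
    # Highest count of each word in any single reference caption.
    max_ref: Counter = Counter()
    for ref in references:
        for word, n in Counter(ref).items():
            max_ref[word] = max(max_ref[word], n)
    # Clip candidate counts so repeating a correct word is not rewarded.
    clipped = sum(min(n, max_ref[w]) for w, n in Counter(candidate).items())
    precision = clipped / len(candidate)
    # Brevity penalty against the reference length closest to the candidate's.
    c = len(candidate)
    r = min((abs(len(ref) - c), len(ref)) for ref in references)[1]
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return bp * precision


refs = [["a", "dog", "runs", "through", "the", "water"],
        ["a", "black", "dog", "is", "swimming"]]
print(bleu1(["a", "dog", "is", "running"], refs))
```

The clipping step is what stops a degenerate caption such as "the the the the" from scoring well just because "the" appears in a reference.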

The following packages are required:

- tensorflow

- keras

- numpy

- h5py

- progressbar2

These requirements can be easily installed with:

```
pip install -r requirements.txt
```

- `caption_generator.py`: The base script, containing functions for model creation, the batch data generator, etc.

- `prepare_data.py`: Extracts features from images using the VGG16 ImageNet model and prepares annotations for training. This script must be modified to use a new dataset.

- `train_model.py`: Module for training the caption generator.

- `eval_model.py`: Module for evaluating and testing the performance of the caption generator; it currently implements the BLEU metric.
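The pre-encoding step performed by `prepare_data.py` amounts to running each image through the encoder once and pickling the results, so training never has to run VGG16. A minimal sketch follows, assuming a `{image_name: (4096,) vector}` dictionary layout for `encoded_images.p`; `cache_features`, `load_features`, and the `encode` callable are hypothetical names. In a real setup, `encode` would wrap a truncated VGG16, for example a Keras model whose output is VGG16's `fc2` layer.

```python
import pickle
from typing import Callable, Dict, Iterable

import numpy as np


def cache_features(image_names: Iterable[str],
                   encode: Callable[[str], np.ndarray],
                   out_path: str = "encoded_images.p") -> Dict[str, np.ndarray]:
    """Encode each image once and pickle the {name: (4096,) vector} mapping.

    `encode` is a hypothetical stand-in for the truncated VGG16 encoder.
    """
    features = {}
    for name in image_names:
        vec = np.asarray(encode(name), dtype=np.float32).reshape(-1)
        if vec.shape != (4096,):
            raise ValueError(f"{name}: expected a (4096,) vector, got {vec.shape}")
        features[name] = vec
    with open(out_path, "wb") as f:
        pickle.dump(features, f)
    return features


def load_features(path: str = "encoded_images.p") -> Dict[str, np.ndarray]:
    """Load the cached feature dictionary written by cache_features."""
    with open(path, "rb") as f:
        return pickle.load(f)
```

Caching trades disk space for time: each image passes through VGG16 once, instead of once per epoch.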

- Download the pre-trained weights from releases.

- Move `model_weight.h5` to the `models` directory.

- Prepare the data using:

  ```
  python prepare_data.py
  ```

- For inference on an example image, run:

  ```
  python eval_model.py -i [img-path]
  ```
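Inference generates a caption one word at a time: starting from a start token, the model predicts the next word from the image vector and the words generated so far, and the loop stops at an end token or a length limit. The sketch below shows greedy decoding, which always keeps the single most probable word. It is an assumption that `eval_model.py` decodes this way (beam search is a common alternative); `greedy_caption` and `predict_next` are hypothetical names, with `predict_next` standing in for a call such as `model.predict` on the image vector and the padded partial caption, and the `<start>`/`<end>` tokens are assumed.

```python
from typing import Callable, Dict, List, Sequence

import numpy as np


def greedy_caption(image_vec: np.ndarray,
                   predict_next: Callable[[np.ndarray, List[int]], Sequence[float]],
                   word_index: Dict[str, int],
                   max_len: int = 20,
                   start: str = "<start>", end: str = "<end>") -> str:
    """Generate a caption word by word, always taking the most probable word.

    `predict_next` is a hypothetical stand-in for the trained model: given the
    image vector and the word ids so far, it returns one probability per id.
    """
    index_word = {i: w for w, i in word_index.items()}
    ids = [word_index[start]]
    words = []
    for _ in range(max_len):
        probs = predict_next(image_vec, ids)
        next_id = int(np.argmax(probs))  # greedy choice
        if index_word.get(next_id) == end:
            break
        words.append(index_word.get(next_id, "<unk>"))  # id 0 (padding) -> <unk>
        ids.append(next_id)
    return " ".join(words)
```

Greedy decoding is the simplest choice; it can commit early to a word that leads to a worse overall caption, which is the weakness beam search addresses.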

After the requirements have been installed, the process from training to testing is fairly easy. The commands to run:

```
python prepare_data.py
python train_model.py
python eval_model.py
```

After training, evaluation on an example image can be done by running:

```
python eval_model.py -m [model-checkpoint] -i [img-path]
```

| Image | Caption |
|---|---|
| | Generated Caption: A white and black dog is running through the water |
| | Generated Caption: man is skiing on snowy hill |
| | Generated Caption: man in red shirt is walking down the street |

[1] Oriol Vinyals, Alexander Toshev, Samy Bengio, Dumitru Erhan. Show and Tell: A Neural Image Caption Generator

[2] Kelvin Xu, Jimmy Ba, Ryan Kiros, Kyunghyun Cho, Aaron Courville, Ruslan Salakhutdinov, Richard Zemel, Yoshua Bengio. Show, Attend and Tell: Neural Image Caption Generation with Visual Attention