This test suite tests the necessary s3compat APIs and measures simple performance stats.

This test suite requires Java 1.8 or higher, and is built using Maven.

- Check out the source code from GitHub by running:

git clone git@github.com:snowflakedb/snowflake-s3compat-api-test-suite.git

Build the test suite

The spf4j-ui dependency used in this repo is a fork build hosted on GitHub Packages, which requires authorized access. See GitHub Maven registry.

Add the block below to the <servers> section of your ~/.m2/settings.xml file. A token scoped to "read:packages" is sufficient.

<server>

<id>github</id>

<username>your github username</username>

<password>your github access token</password>

</server>

Build the test suite by running:

cd snowflake-s3compat-api-test-suite && mvn clean install -DskipTests

| Variable | Description |
| --- | --- |
| BUCKET_NAME_1 | Name of the bucket used for testing. It must be located in REGION_1 and is expected to have versioning enabled. |
| REGION_1 | Region of the above bucket, for example us-east-1. |
| REGION_2 | A region different from REGION_1, for example us-west-2. |
| S3COMPAT_ACCESS_KEY | Access key used to access the above bucket. |
| S3COMPAT_SECRET_KEY | Secret key used to access the above bucket. |
| END_POINT | Endpoint that can route operations to the provided bucket. |
| NOT_ACCESSIBLE_BUCKET | A bucket in REGION_1 that is not accessible with the provided keys. |
| PREFIX_FOR_PAGE_LISTING | Prefix in BUCKET_NAME_1 used to test listing a large number of objects. It must contain more than 1000 objects. |
| PAGE_LISTING_TOTAL_SIZE | Total size of the objects under the above prefix, PREFIX_FOR_PAGE_LISTING. |

Example of setting an environment variable:

export REGION_1=<region_1_for_bucket_1>

The test suite accepts either environment variables or CLI arguments.
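When you rely on environment variables rather than CLI arguments, a missing variable only surfaces once a test runs, so it can help to check the environment first. The following is a minimal sketch and not part of the test suite: the helper name `missing_s3compat_vars` is hypothetical, and the variable names are copied from the variable list above.

```shell
# Variable names copied from the variable list in this README.
REQUIRED_VARS="BUCKET_NAME_1 REGION_1 REGION_2 S3COMPAT_ACCESS_KEY S3COMPAT_SECRET_KEY END_POINT NOT_ACCESSIBLE_BUCKET PREFIX_FOR_PAGE_LISTING PAGE_LISTING_TOTAL_SIZE"

# Hypothetical helper: prints each required variable that is unset or empty,
# one per line, and returns non-zero if at least one is missing.
missing_s3compat_vars() {
  _missing=0
  for _name in $REQUIRED_VARS; do
    # Look up the value of the variable whose name is stored in _name.
    eval "_value=\${$_name:-}"
    if [ -z "$_value" ]; then
      echo "$_name"
      _missing=1
    fi
  done
  return "$_missing"
}

# Usage: report what is still missing before running mvn test.
# missing_s3compat_vars || echo "set the variables listed above first"
```

The check only tests for empty values; it does not validate that a region or bucket actually exists.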

Navigate to the target folder:

cd s3compatapi

Test a specific API, e.g. getBucketLocation:

mvn test -Dtest=S3CompatApiTest#getBucketLocation

Test all APIs using the environment variables that are already set up:

mvn test -Dtest=S3CompatApiTest

Note that running all tests may take more than 2 minutes, because one putObject test uploads a file of up to 5 GB.

Test using CLI variables (if the environment variables are not set up yet):

mvn test -Dtest=S3CompatApiTest -DREGION_1=us-east-1 -DREGION_2=us-west-2 -D...

mvn test -Dtest=S3CompatApiTest#getObject -DREGION_1=us-east-1 -DREGION_2=us-west-2 -D...

Collect perf stats with the defaults (all APIs run 20 times):

java -jar target/snowflake-s3compat-api-tests-1.0-SNAPSHOT.jar

Collect perf stats by passing arguments:

-a: comma-separated list of APIs; -t: how many times to run the APIs.

java -jar target/snowflake-s3compat-api-tests-1.0-SNAPSHOT.jar -a getObject,putObject -t 10

The above command collects perf stats for 10 runs of getObject and putObject.

Use the UI below to open the generated .tsdb2 file:

java -jar ../spf4jui/target/dependency-jars/spf4j-ui-8.9.5.jar

Open the .tsdb2 file, choose one of the generated API data sets, and click Plot to see the charts.

Perf data is generated and stored in the .tsdb2 file in binary form.

Perf data is also stored in a .txt file for other processing if necessary.

Below is the list of APIs called in this repo:

getBucketLocation

getObject

getObjectMetadata

putObject

listObjectsV2

deleteObject

deleteObjects

copyObject

generatePresignedUrl
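Because the -a flag takes a comma-separated list of API names, a typo may only show up after a run has started. Below is a minimal sketch of a pre-check, assuming -a accepts exactly the names listed above (this README only shows getObject and putObject being passed). `unknown_apis` is a hypothetical helper, not part of the suite.

```shell
# API names copied from the list above.
SUPPORTED_APIS="getBucketLocation getObject getObjectMetadata putObject listObjectsV2 deleteObject deleteObjects copyObject generatePresignedUrl"

# Hypothetical helper: takes a comma-separated list of API names, prints
# every name that is not in SUPPORTED_APIS, and returns non-zero if any
# unknown name was found.
unknown_apis() {
  _unknown=0
  for _api in $(printf '%s' "$1" | tr ',' ' '); do
    # Surrounding spaces force a whole-word match, so "getObject" does not
    # match inside "getObjectMetadata".
    case " $SUPPORTED_APIS " in
      *" $_api "*) ;;
      *) echo "$_api"; _unknown=1 ;;
    esac
  done
  return "$_unknown"
}

# Usage: only start the perf run if every name is recognized.
# apis="getObject,putObject"
# unknown_apis "$apis" && java -jar target/snowflake-s3compat-api-tests-1.0-SNAPSHOT.jar -a "$apis" -t 10
```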

If all of your APIs pass the tests in this repo, please refer to our public documentation on using this feature in Snowflake deployments:

Working With Amazon S3-compatible Storage

Using On-Premises Data in Place with Snowflake

We expect storage vendors to provide S3-compatible APIs that behave like the S3 APIs. However, we have observed that differences between storage vendors remain. Below are some troubleshooting tips. When your tests fail, please refer to the source code to see what the test cases do.

- getBucketLocation tests fail

We call getObjectMetadata() and read the "x-amz-bucket-region" header to retrieve the region of a bucket, because the region is required for SigV4. If you have confirmed that your service ignores the bucket location (region) in the SigV4 signature of the request, you can ignore the getBucketLocation test failures. If your service requires SigV4, it should either return the "x-amz-bucket-region" header in the getObjectMetadata() response or support getBucketLocation().

- negative tests fail

For some negative test cases, AWS S3 returns 404 or 403 while your APIs return 400 or another error code, so the test fails because the error code differs from what the test case expects. This should be fine as long as your APIs return reasonable error codes and messages for those negative cases.
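For the getBucketLocation case, one way to see whether your service returns the region header is to inspect the raw response headers of a HEAD request. This is only a rough sketch: the test suite itself reads the header through getObjectMetadata(), an unauthenticated curl request may be rejected or answered differently by your service, and `bucket_region_from_headers` is a hypothetical helper.

```shell
# Hypothetical helper: reads raw HTTP response headers on stdin and prints
# the value of x-amz-bucket-region if present. Header names are
# case-insensitive and HTTP lines end in CRLF, so the CR is stripped first.
bucket_region_from_headers() {
  tr -d '\r' | awk -F': *' 'tolower($1) == "x-amz-bucket-region" { print $2 }'
}

# Usage (assumes curl is installed and END_POINT is a bare host name):
# curl -sS -I "https://${BUCKET_NAME_1}.${END_POINT}" | bucket_region_from_headers
```

Empty output means the header was not present in that response.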


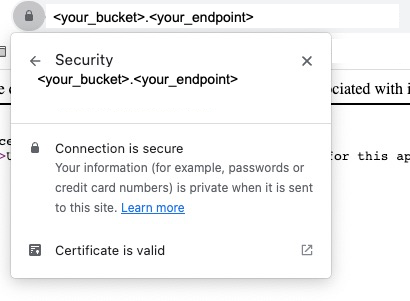

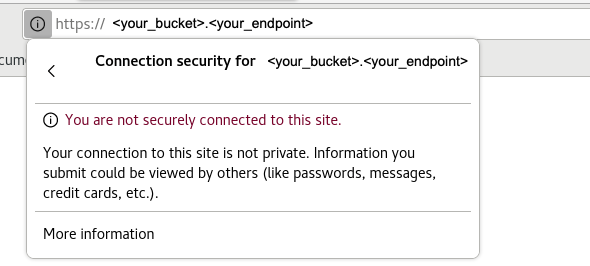

After you finish the tests, please verify that your endpoint has a valid SSL certificate. Construct a host-style URL in the format https://<your_bucket>.<your_endpoint>, paste it into your browser, click the padlock icon on the left side of the address bar, and then click Connection or Certificate.

If you have a valid SSL certificate, you should see an indication such as "Certificate is valid" (see the screenshots above).

If you do not have a valid SSL certificate, you will see something like "This site can’t be reached" or "Your connection to this site is not secure".

Please make sure you have a valid SSL certificate for your endpoint before you run any queries on the Snowflake platform.
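The same check can be scripted instead of done in a browser. Below is a minimal sketch, assuming curl is installed and that END_POINT is a bare host name without a scheme. `host_style_url` and `check_endpoint_cert` are hypothetical helper names. curl verifies the server certificate by default and exits non-zero when verification fails.

```shell
# Builds the host-style URL described above: https://<bucket>.<endpoint>
host_style_url() {
  printf 'https://%s.%s\n' "$1" "$2"
}

# Returns 0 if curl connects, verifies the certificate, and receives an
# HTTP response. An HTTP error status (for example 403) does not fail the
# check because --fail is not used; connection and certificate errors do.
check_endpoint_cert() {
  curl --silent --show-error --head --output /dev/null "$(host_style_url "$1" "$2")"
}

# Usage:
# check_endpoint_cert "$BUCKET_NAME_1" "$END_POINT" && echo "certificate accepted"
```

A non-zero exit can also mean a DNS or connection problem, so read the error message curl prints before concluding that the certificate is at fault.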

Feel free to file an issue or submit a PR here for general cases. For official support, contact Snowflake support at: https://community.snowflake.com/s/article/How-To-Submit-a-Support-Case-in-Snowflake-Lodge