In this project, I implement a one-stage detection and classification model, based on the paper Focal Loss for Dense Object Detection, to detect and classify traffic signs. The model was trained on the Tsinghua-Tencent 100K dataset. After careful tuning, RetinaNet achieved 90 mAP on 42 traffic-sign classes on the test dataset, which is better than the previous benchmarks:

- Traffic-Sign Detection and Classification in the Wild

- TAD16K: An enhanced benchmark for autonomous driving

Visualization results show that the model works well for traffic signs of various sizes and perspectives.

- Python 2.7+

- PyTorch 0.3.0+

- PIL

- OpenCV-Python

- NumPy

- Matplotlib

- Clone this repository

- Download the pretrained model here

- `python test.py -m demo`: Runs inference on the images in /samples. You can also put your own images into this directory to run inference on them.

- `python test.py -m valid`: Runs inference on the entire validation dataset and outputs the evaluation result. You can change the backbone model with the -backbone flag. See the code for details.

- The training and testing dataset I used can be downloaded here

- Save the training and validation datasets into the /data directory

- `python train.py -exp model`: Trains the RetinaNet model from scratch. You can change the parameters in the config.py file and train on your own dataset in a similar way. The argument `model` specifies the directory in which the model is saved. See the code for more details.


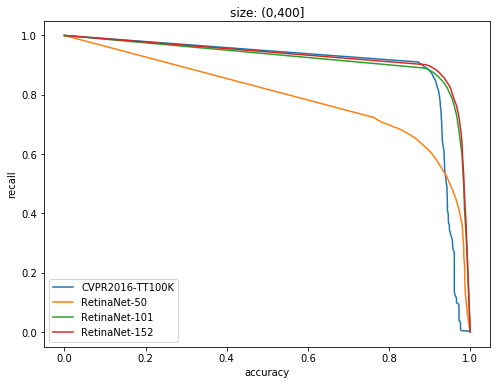

All the parameters are carefully tuned for traffic sign detection. Note that the size and number of anchors are extremely important for the following steps: every detectable target must be covered by at least one anchor. The size of traffic signs in the dataset ranges from 10 to 500 pixels. See `encode.py` for more details.
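The coverage requirement can be checked numerically. The sketch below is not the code from `encode.py`. It assumes square anchors and square signs centered on the same point, so that IoU reduces to a ratio of areas, and it assumes a matching threshold of 0.5. The helper names and the second set of base sizes are hypothetical. It compares the anchor sizes from the RetinaNet paper (32 to 512 pixels, three scales per level) with a set extended toward smaller anchors.

```python
def anchor_sizes(base_sizes, scales=(1.0, 2 ** (1 / 3), 2 ** (2 / 3))):
    """Side lengths of square anchors: every base size times every scale."""
    return sorted(b * s for b in base_sizes for s in scales)


def square_iou(a, b):
    """IoU of two concentric squares with side lengths a and b."""
    small, large = min(a, b), max(a, b)
    return (small * small) / (large * large)


def uncovered(target_sizes, anchors, iou_threshold=0.5):
    """Target sizes whose best-matching anchor falls below the threshold."""
    return [
        t for t in target_sizes
        if max(square_iou(t, a) for a in anchors) < iou_threshold
    ]


# Sign sizes from the text: 10 to 500 pixels.
targets = range(10, 501)

# Anchor sizes used in the RetinaNet paper.
paper_defaults = anchor_sizes([32, 64, 128, 256, 512])
# Hypothetical set shifted toward small objects (an assumption, not the repo's values).
smaller = anchor_sizes([8, 16, 32, 64, 128, 256])

missed_default = uncovered(targets, paper_defaults)
missed_small = uncovered(targets, smaller)
print("uncovered with paper defaults:", missed_default)
print("uncovered with smaller anchors:", missed_small)
```

Under these assumptions the paper's default anchors leave signs smaller than 23 pixels without a match, while the extended set covers the whole range.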

The original images in the Tsinghua-Tencent 100K dataset are very large (2048×2048), which makes training extremely memory-consuming. I therefore cropped the images to 512×512 pixels and applied slight data augmentation to the training set. All classes that appear fewer than 100 times in the dataset were omitted, leaving 42 classes. Classes that appear fewer than 1000 times were manually augmented to approximately 1000 samples each. Augmentation methods included random cropping, illumination change, and contrast change. The final training dataset contains 37,212 images.
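The class filtering and balancing rule can be sketched as follows. The function name and the per-class counts are made up for illustration; only the thresholds of 100 and 1000 come from the text, and the actual preprocessing code may differ.

```python
def plan_augmentation(counts, min_count=100, target=1000):
    """Map each kept class to the number of extra samples it needs.

    Classes with fewer than `min_count` instances are dropped.
    Classes below `target` are topped up to `target` by augmentation.
    """
    plan = {}
    for name, n in counts.items():
        if n < min_count:
            continue  # too rare: omitted from the dataset
        plan[name] = max(0, target - n)
    return plan


# Hypothetical instance counts per class (not the real dataset statistics).
example_counts = {"pl40": 2500, "w57": 400, "pm55": 60}
augmentation_plan = plan_augmentation(example_counts)
print(augmentation_plan)
```

Each extra sample would then be produced by one of the augmentation methods listed above.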


I use Group Normalization to accelerate training. Since the ResNet+RetinaNet model is a very large neural network, only a very small batch size (e.g. 4 or 8) can be used on a common GPU. According to Yuxin Wu and Kaiming He's paper, Group Normalization performs better than Batch Normalization in such situations.
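To illustrate why Group Normalization does not depend on the batch size, here is a minimal pure-Python sketch of the normalization step for a single sample. It is not the layer used in this repository: the function name is hypothetical, the learnable scale and shift are left out, and a sample is represented as a plain list of channels. Recent PyTorch versions provide this operation as `torch.nn.GroupNorm`.

```python
import math


def group_norm(sample, num_groups, eps=1e-5):
    """Normalize one sample given as a list of channels (lists of floats).

    Mean and variance are computed per group of channels within this
    sample only, so the result is independent of the batch size.
    """
    num_channels = len(sample)
    assert num_channels % num_groups == 0, "channels must divide into groups"
    per_group = num_channels // num_groups
    out = []
    for g in range(num_groups):
        channels = sample[g * per_group:(g + 1) * per_group]
        values = [v for ch in channels for v in ch]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        scale = 1.0 / math.sqrt(var + eps)
        out.extend([[(v - mean) * scale for v in ch] for ch in channels])
    return out


# Four channels with two values each, split into two groups of two channels.
sample = [[1.0, 2.0], [3.0, 4.0], [10.0, 20.0], [30.0, 40.0]]
normalized = group_norm(sample, num_groups=2)
print(normalized)
```

After normalization, the values in each group have approximately zero mean and unit variance, regardless of how many other samples are in the batch.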

All models were trained on two GTX 1080 Ti GPUs for 18 epochs with a batch size of 8. I use the Adam optimizer with a learning rate of 1e-4, decayed to 1e-5 for the last 6 epochs. It generally takes 20 hours for a RetinaNet model to converge.
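The learning rate schedule described above can be written as a small function. This is a sketch with a hypothetical function name and zero-based epoch indices, not the code from `train.py`.

```python
def learning_rate(epoch, total_epochs=18, decay_epochs=6,
                  base_lr=1e-4, final_lr=1e-5):
    """Learning rate for a zero-based epoch index.

    The base rate is used until the last `decay_epochs` epochs,
    which run at the lower final rate.
    """
    if not 0 <= epoch < total_epochs:
        raise ValueError("epoch out of range")
    if epoch >= total_epochs - decay_epochs:
        return final_lr
    return base_lr


schedule = [learning_rate(e) for e in range(18)]
print(schedule)
```

In PyTorch, a step decay of this kind is available as `torch.optim.lr_scheduler.MultiStepLR`; whether the training script uses it is not stated here.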

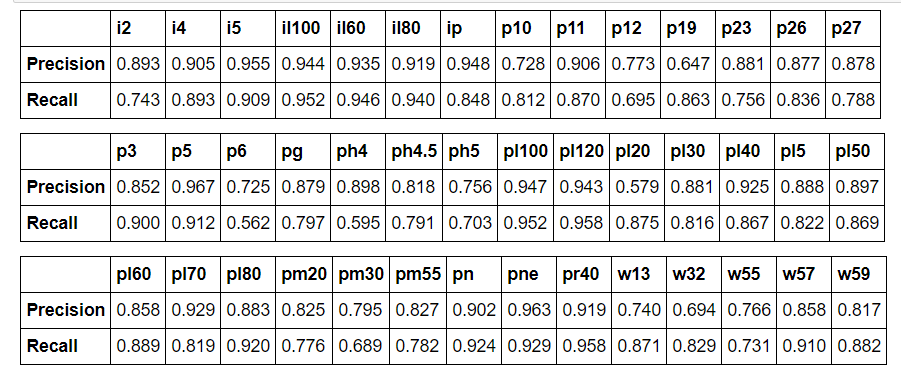

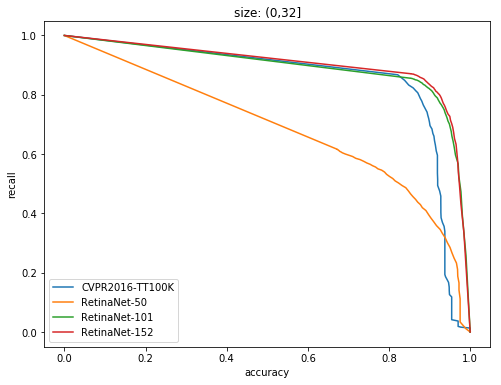

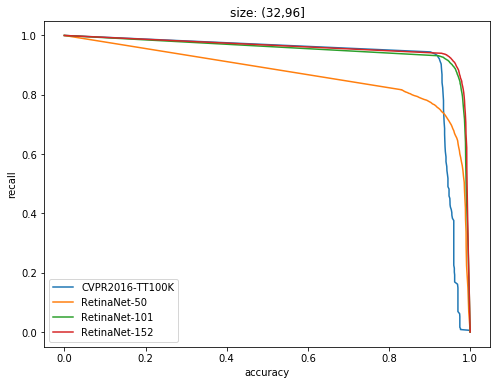

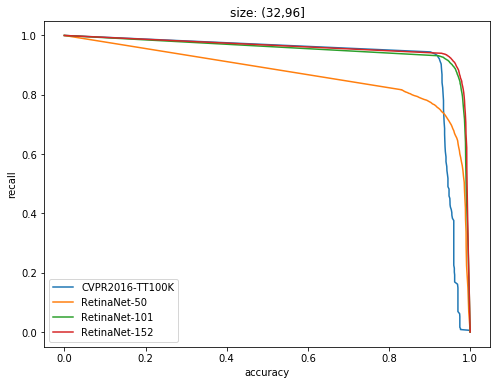

As shown below, the RetinaNet-101 and RetinaNet-152 models achieve 92.03 and 92.80 mAP on the Tsinghua-Tencent 100K dataset, respectively, outperforming the previous benchmark for simultaneous traffic sign detection and classification. See evaluate/eval_check.ipynb for more details.

| Models | CVPR-2016 TT100K | RetinaNet-50 | RetinaNet-101 | RetinaNet-152 |
|---|---|---|---|---|
| mAP | 0.8979 | 0.7939 | 0.9203 | 0.9280 |

1. Focal Loss for Dense Object Detection

2. Traffic-Sign Detection and Classification in the Wild

3. TAD16K: An enhanced benchmark for autonomous driving

4. Group Normalization

5. andreaazzini's implementation

6. fizyr's implementation