ChatGPT for FinTech: a list of resources to use ChatGPT for FinTech

Financial reinforcement learning (FinRL) (Document website) is the first open-source framework for financial reinforcement learning. FinRL has evolved into an ecosystem:

| Dev Roadmap | Stage | Users | Project | Description |
|---|---|---|---|---|
| 0.0 (Preparation) | entrance | practitioners | FinRL-Meta | gym-style market environments |
| 1.0 (Proof-of-Concept) | full-stack | developers | this repo | automatic pipeline |
| 2.0 (Professional) | profession | experts | ElegantRL | algorithms |
| 3.0 (Production) | service | hedge funds | Podracer | cloud-native deployment |

- Overview

- File Structure

- Supported Data Sources

- Installation

- Status Update

- Tutorials

- Publications

- News

- Citing FinRL

- Welcome Contributions

- Sponsorship

- LICENSE

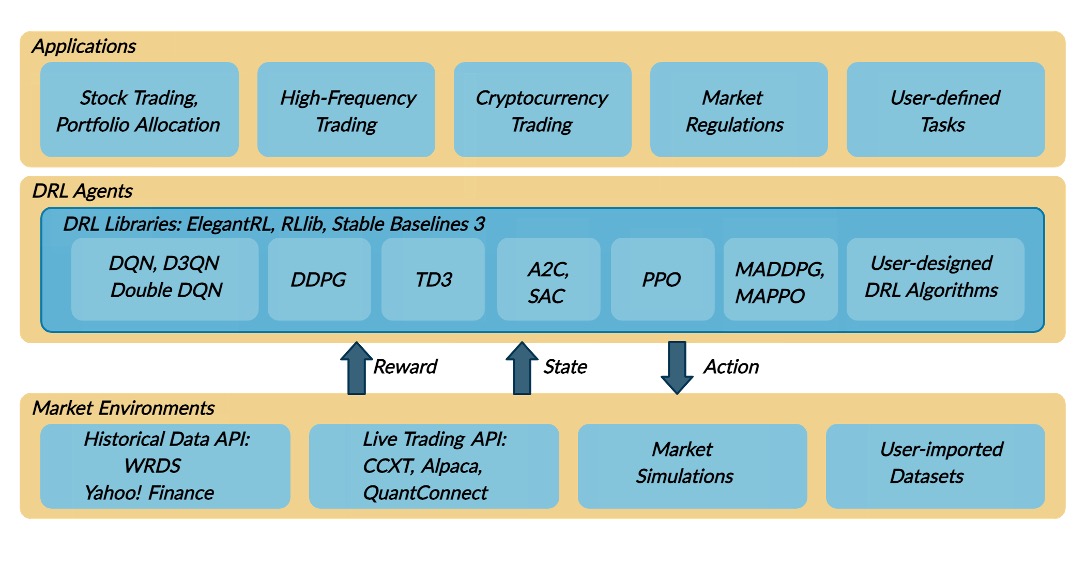

FinRL has three layers: market environments, agents, and applications. For a trading task (on the top), an agent (in the middle) interacts with a market environment (at the bottom), making sequential decisions.
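The interaction between an agent and a market environment can be sketched as a gym-style loop. This is a minimal illustration, not FinRL's implementation: `ToyMarketEnv`, `RandomAgent`, and `run_episode` are hypothetical names, the environment holds a single asset on a fixed price path, and the reward is assumed to be the change in portfolio value per step.

```python
import random


class ToyMarketEnv:
    """Hypothetical gym-style environment: one asset, a fixed price path.

    Requires at least two prices. This is not a FinRL class.
    """

    def __init__(self, prices, initial_cash=1000.0):
        self.prices = prices
        self.initial_cash = initial_cash

    def reset(self):
        self.t = 0
        self.cash = self.initial_cash
        self.shares = 0
        return self._state()

    def _state(self):
        return (self.prices[self.t], self.cash, self.shares)

    def portfolio_value(self):
        return self.cash + self.shares * self.prices[self.t]

    def step(self, action):
        """action: +1 buys one share, -1 sells one share, 0 holds."""
        price = self.prices[self.t]
        value_before = self.portfolio_value()
        if action == 1 and self.cash >= price:
            self.cash -= price
            self.shares += 1
        elif action == -1 and self.shares > 0:
            self.cash += price
            self.shares -= 1
        self.t += 1  # the market moves to the next price
        reward = self.portfolio_value() - value_before
        done = self.t >= len(self.prices) - 1
        return self._state(), reward, done


class RandomAgent:
    """Placeholder policy; a DRL agent would learn this mapping instead."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)

    def act(self, state):
        return self.rng.choice([-1, 0, 1])


def run_episode(env, agent):
    """Sequential decision loop: observe state, act, receive reward."""
    state = env.reset()
    total_reward, done = 0.0, False
    while not done:
        action = agent.act(state)
        state, reward, done = env.step(action)
        total_reward += reward
    return total_reward


env = ToyMarketEnv([10.0, 11.0, 12.0, 11.0, 13.0])
print(run_episode(env, RandomAgent(seed=0)))
```

Because each reward is a change in portfolio value, the episode's total reward equals the final portfolio value minus the initial cash.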

A quick start: Stock_NeurIPS2018.ipynb. FinRL videos are available on the AI4Finance YouTube channel.

The main folder finrl has three subfolders: applications, agents, and meta. We employ a train-test-trade pipeline with three files: train.py, test.py, and trade.py.

FinRL
├── finrl (main folder)
│   ├── applications
│   │   ├── cryptocurrency_trading
│   │   ├── high_frequency_trading
│   │   ├── portfolio_allocation
│   │   └── stock_trading
│   ├── agents
│   │   ├── elegantrl
│   │   ├── rllib
│   │   └── stablebaseline3
│   ├── meta
│   │   ├── data_processors
│   │   ├── env_cryptocurrency_trading
│   │   ├── env_portfolio_allocation
│   │   ├── env_stock_trading
│   │   ├── preprocessor
│   │   ├── data_processor.py
│   │   ├── meta_config_tickers.py
│   │   └── meta_config.py
│   ├── config.py
│   ├── config_tickers.py
│   ├── main.py
│   ├── plot.py
│   ├── train.py
│   ├── test.py
│   └── trade.py
│
├── examples
├── unit_tests (unit tests to verify codes on env & data)
│   ├── environments
│   │   └── test_env_cashpenalty.py
│   └── downloaders
│       ├── test_yahoodownload.py
│       └── test_alpaca_downloader.py
├── setup.py
├── requirements.txt
└── README.md
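The idea behind a train-test-trade pipeline can be sketched as a chronological split of the data into three disjoint periods. This is an illustration only and does not reproduce what train.py, test.py, or trade.py do: the helper `split_by_date`, the sample rows, and the chosen date boundaries are all assumptions.

```python
from datetime import date


def split_by_date(rows, start, end):
    """Return the rows whose 'date' falls in the half-open range [start, end)."""
    return [row for row in rows if start <= row["date"] < end]


# Hypothetical data: one closing price on the first day of each month of 2021.
rows = [{"date": date(2021, m, 1), "close": 100.0 + m} for m in range(1, 13)]

# Each stage sees a later, non-overlapping window, so no future data
# leaks into training.
train = split_by_date(rows, date(2021, 1, 1), date(2021, 7, 1))
test = split_by_date(rows, date(2021, 7, 1), date(2021, 10, 1))
trade = split_by_date(rows, date(2021, 10, 1), date(2022, 1, 1))

print(len(train), len(test), len(trade))
```

Half-open ranges are used so that a boundary date belongs to exactly one period.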

| Data Source | Type | Range and Frequency | Request Limits | Raw Data | Preprocessed Data |
|---|---|---|---|---|---|
| Akshare | CN Securities | 2015-now, 1 day | Account-specific | OHLCV | Prices&Indicators |
| Alpaca | US Stocks, ETFs | 2015-now, 1 min | Account-specific | OHLCV | Prices&Indicators |
| Baostock | CN Securities | 1990-12-19-now, 5 min | Account-specific | OHLCV | Prices&Indicators |
| Binance | Cryptocurrency | API-specific, 1 s, 1 min | API-specific | Tick-level daily aggregated trades, OHLCV | Prices&Indicators |
| CCXT | Cryptocurrency | API-specific, 1 min | API-specific | OHLCV | Prices&Indicators |
| EODhistoricaldata | US Securities | Frequency-specific, 1 min | API-specific | OHLCV | Prices&Indicators |
| IEXCloud | NMS US securities | 1970-now, 1 day | 100 per second per IP | OHLCV | Prices&Indicators |
| JoinQuant | CN Securities | 2005-now, 1 min | 3 requests each time | OHLCV | Prices&Indicators |
| QuantConnect | US Securities | 1998-now, 1 s | NA | OHLCV | Prices&Indicators |
| RiceQuant | CN Securities | 2005-now, 1 ms | Account-specific | OHLCV | Prices&Indicators |
| Tushare | CN Securities, A share | -now, 1 min | Account-specific | OHLCV | Prices&Indicators |
| WRDS | US Securities | 2003-now, 1 ms | 5 requests each time | Intraday Trades | Prices&Indicators |
| YahooFinance | US Securities | Frequency-specific, 1 min | 2,000/hour | OHLCV | Prices&Indicators |

OHLCV: open, high, low, and close prices, plus volume. adjusted_close: adjusted close price.
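To make the OHLCV format concrete, the sketch below aggregates individual trades into fixed-width bars. It is a generic illustration rather than any data source's API: the function `trades_to_ohlcv`, the `(timestamp, price, size)` tuple layout, and the sample trades are assumptions.

```python
def trades_to_ohlcv(trades, bar_seconds=60):
    """Aggregate time-sorted trades into OHLCV bars.

    trades: iterable of (timestamp_in_seconds, price, size) tuples.
    Returns a dict keyed by each bar's start timestamp.
    """
    bars = {}
    for ts, price, size in trades:
        bucket = ts - ts % bar_seconds  # start time of the bar containing ts
        bar = bars.get(bucket)
        if bar is None:
            # First trade in the bar sets open, high, low, and close.
            bars[bucket] = {
                "open": price,
                "high": price,
                "low": price,
                "close": price,
                "volume": size,
            }
        else:
            bar["high"] = max(bar["high"], price)
            bar["low"] = min(bar["low"], price)
            bar["close"] = price  # the last trade seen so far closes the bar
            bar["volume"] += size
    return bars


# Hypothetical trades: three in the first minute, one in the second.
sample_trades = [(0, 10.0, 1), (30, 12.0, 2), (59, 9.0, 1), (60, 11.0, 5)]
for start, bar in trades_to_ohlcv(sample_trades).items():
    print(start, bar)
```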

Technical indicators: 'macd', 'boll_ub', 'boll_lb', 'rsi_30', 'dx_30', 'close_30_sma', 'close_60_sma'. Users can also add new features.
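As a rough sketch of how such features are derived from close prices, the example below computes a simple moving average and Bollinger bands in plain Python. It assumes, from the names alone, that 'close_30_sma' is a 30-period simple moving average of the close and that 'boll_ub' and 'boll_lb' are upper and lower Bollinger bands. The 20-period window, the factor of 2 standard deviations, and the use of the population standard deviation are conventional choices assumed here; libraries differ, and this is not FinRL's preprocessing code.

```python
from statistics import mean, pstdev


def sma(values, window):
    """Simple moving average; None until `window` values are available."""
    return [
        mean(values[i - window + 1 : i + 1]) if i + 1 >= window else None
        for i in range(len(values))
    ]


def bollinger_bands(values, window=20, num_std=2.0):
    """Return (upper, lower) bands: rolling mean +/- num_std * rolling std."""
    upper, lower = [], []
    for i in range(len(values)):
        if i + 1 < window:
            upper.append(None)
            lower.append(None)
            continue
        chunk = values[i - window + 1 : i + 1]
        mid, sd = mean(chunk), pstdev(chunk)
        upper.append(mid + num_std * sd)
        lower.append(mid - num_std * sd)
    return upper, lower


# Hypothetical close prices; real inputs would come from a data source above.
closes = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
print(sma(closes, 3))
print(bollinger_bands(closes, window=8))
```

A new feature can follow the same pattern: a function from the price series to a list of the same length, with None wherever the window is not yet full.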

- Installation instructions for all operating systems (macOS, Ubuntu, Windows 10)

- FinRL for Quantitative Finance: Install and Setup Tutorial for Beginners

Version History

- 2022-06-25 0.3.5: Formal release of FinRL; neo_finrl is renamed to FinRL-Meta, with related files in the directory meta.

- 2021-08-25 0.3.1: PyTorch version with a three-layer architecture: apps (financial tasks), drl_agents (DRL algorithms), and neo_finrl (gym env)

- 2020-12-14: Upgraded to PyTorch with stable-baselines3; removed TensorFlow 1.0 for now, with TensorFlow 2.0 support under development

- 2020-11-27 0.1: Beta version with TensorFlow 1.5

- [Towardsdatascience] Deep Reinforcement Learning for Automated Stock Trading

A complete list is available at blogs.

| Title | Conference | Link | Citations | Year |
|---|---|---|---|---|
| FinRL-Meta: Market Environments and Benchmarks for Data-Driven Financial Reinforcement Learning | NeurIPS 2022 | paper, code | 1 | 2022 |
| FinRL: Deep reinforcement learning framework to automate trading in quantitative finance | ACM International Conference on AI in Finance (ICAIF) | paper | 22 | 2021 |
| FinRL-Podracer: High performance and scalable deep reinforcement learning for quantitative finance | ACM International Conference on AI in Finance (ICAIF) | paper, code | 8 | 2021 |
| Explainable deep reinforcement learning for portfolio management: An empirical approach | ACM International Conference on AI in Finance (ICAIF) | paper, code | 3 | 2021 |
| FinRL: A deep reinforcement learning library for automated stock trading in quantitative finance | NeurIPS 2020 Deep RL Workshop | paper | 50 | 2020 |
| Deep reinforcement learning for automated stock trading: An ensemble strategy | ACM International Conference on AI in Finance (ICAIF) | paper, code | 98 | 2020 |
| Practical deep reinforcement learning approach for stock trading | NeurIPS 2018 Workshop on Challenges and Opportunities for AI in Financial Services | paper, code | 129 | 2018 |

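- [央广网 (CNR)] 2021 IDEA Conference concludes successfully in Futian: leading experts discuss AI, with multiple project launches among the highlights

- [央广网 (CNR)] 2021 IDEA Conference opens an AI feast; Chairman Harry Shum (沈向洋) unveils six cutting-edge products

- [IDEA News] FinRL-Meta, a product released at the 2021 IDEA Conference: a data-driven reinforcement learning system for financial risk simulation

- [知乎 (Zhihu)] FinRL-Meta: a data-driven reinforcement learning financial metaverse

- [量化投资与机器学习] A deep reinforcement learning framework for stock trading strategies (code + documentation)

- [运筹OR帷幄] Guided Reading Program No. 10 | Quantitative trading robots based on deep reinforcement learning: the evolution from AlphaGo to FinRL

- [深度强化实验室] [Highly recommended] Columbia University open-sources "FinRL": a deep reinforcement learning library for automated trading in quantitative finance

- [商业新知] FinTech lecture recap | AI4Finance: from AlphaGo to FinRL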

- [Kaggle] Jane Street Market Prediction

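- [矩池云 Matpool] How to run the FinRL stock trading strategy framework on Matpool

- [财智无界] Chen Xuebin, executive director of the Finance Society: applications of deep reinforcement learning in financial asset management

- [Neurohive] FinRL: deep reinforcement learning for trading

- [ICHI.PRO] FinRL for quantitative finance: a tutorial for single stock trading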

@article{liu2022finrl_meta,

title={FinRL-Meta: Market Environments and Benchmarks for Data-Driven Financial Reinforcement Learning},

author={Liu, Xiao-Yang and Xia, Ziyi and Rui, Jingyang and Gao, Jiechao and Yang, Hongyang and Zhu, Ming and Wang, Christina Dan and Wang, Zhaoran and Guo, Jian},

journal={NeurIPS},

year={2022}

}

@article{liu2021finrl,

author = {Liu, Xiao-Yang and Yang, Hongyang and Gao, Jiechao and Wang, Christina Dan},

title = {{FinRL}: Deep reinforcement learning framework to automate trading in quantitative finance},

journal = {ACM International Conference on AI in Finance (ICAIF)},

year = {2021}

}

@article{finrl2020,

author = {Liu, Xiao-Yang and Yang, Hongyang and Chen, Qian and Zhang, Runjia and Yang, Liuqing and Xiao, Bowen and Wang, Christina Dan},

title = {{FinRL}: A deep reinforcement learning library for automated stock trading in quantitative finance},

journal = {Deep RL Workshop, NeurIPS 2020},

year = {2020}

}

@article{liu2018practical,

title={Practical deep reinforcement learning approach for stock trading},

author={Liu, Xiao-Yang and Xiong, Zhuoran and Zhong, Shan and Yang, Hongyang and Walid, Anwar},

journal={NeurIPS Workshop on Deep Reinforcement Learning},

year={2018}

}

Our published FinRL papers are listed on Google Scholar. Earlier papers are given in the list.

Welcome to the AI4Finance community!

Discuss FinRL via the AI4Finance mailing list and the AI4Finance Slack channel.

Please check the Contributing Guidelines.

Thank you!

Donations to support AI4Finance, a non-profit community, are welcome.

Network: USDT-TRC20

MIT License

Disclaimer: Nothing herein is financial advice or a recommendation to trade real money. Please use common sense and always consult a professional before trading or investing.