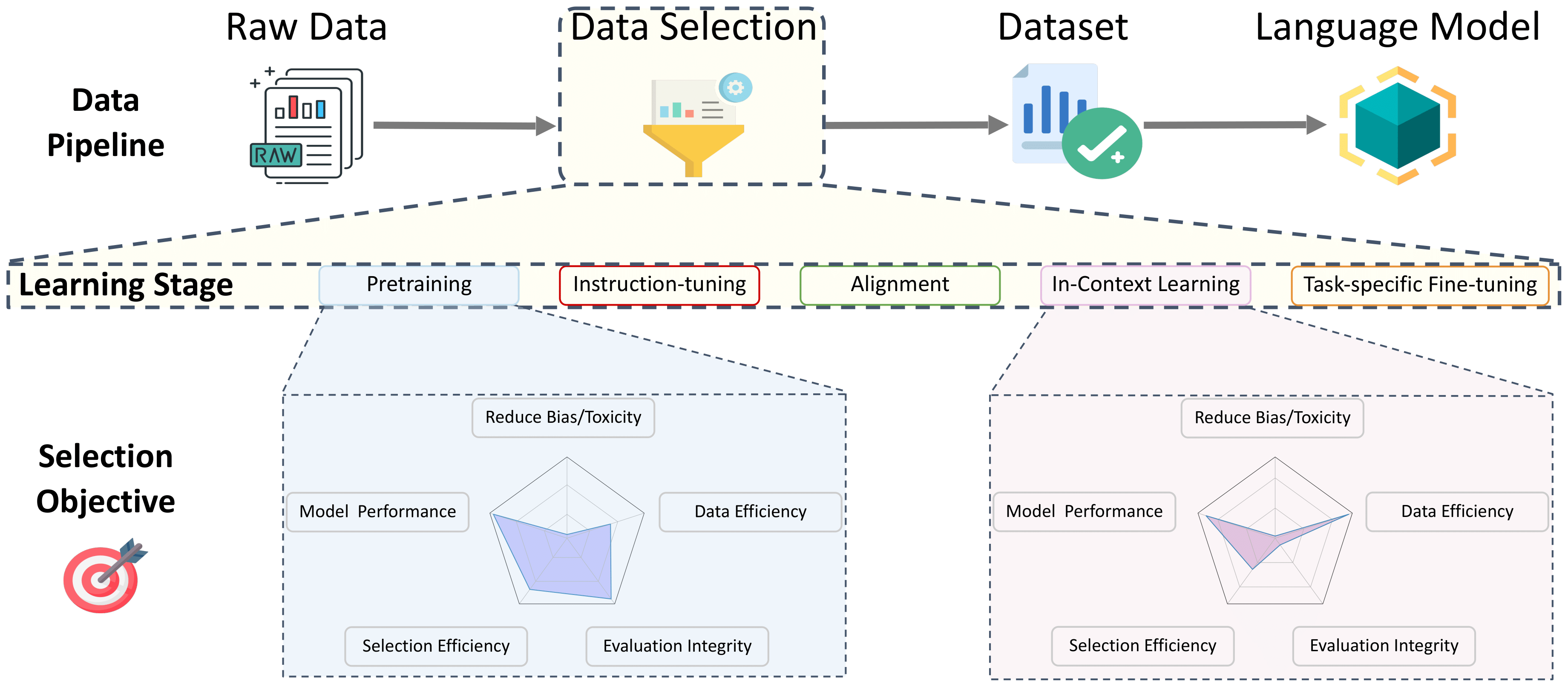

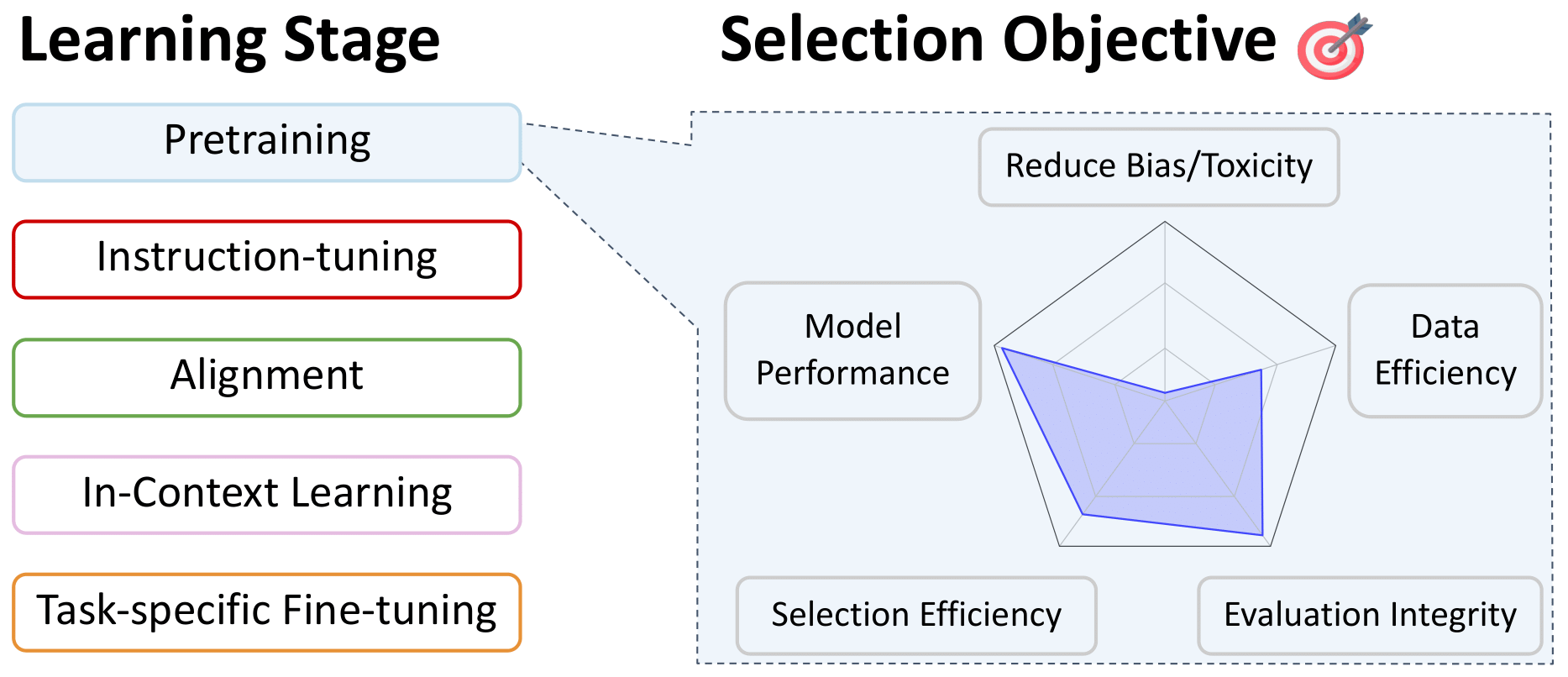

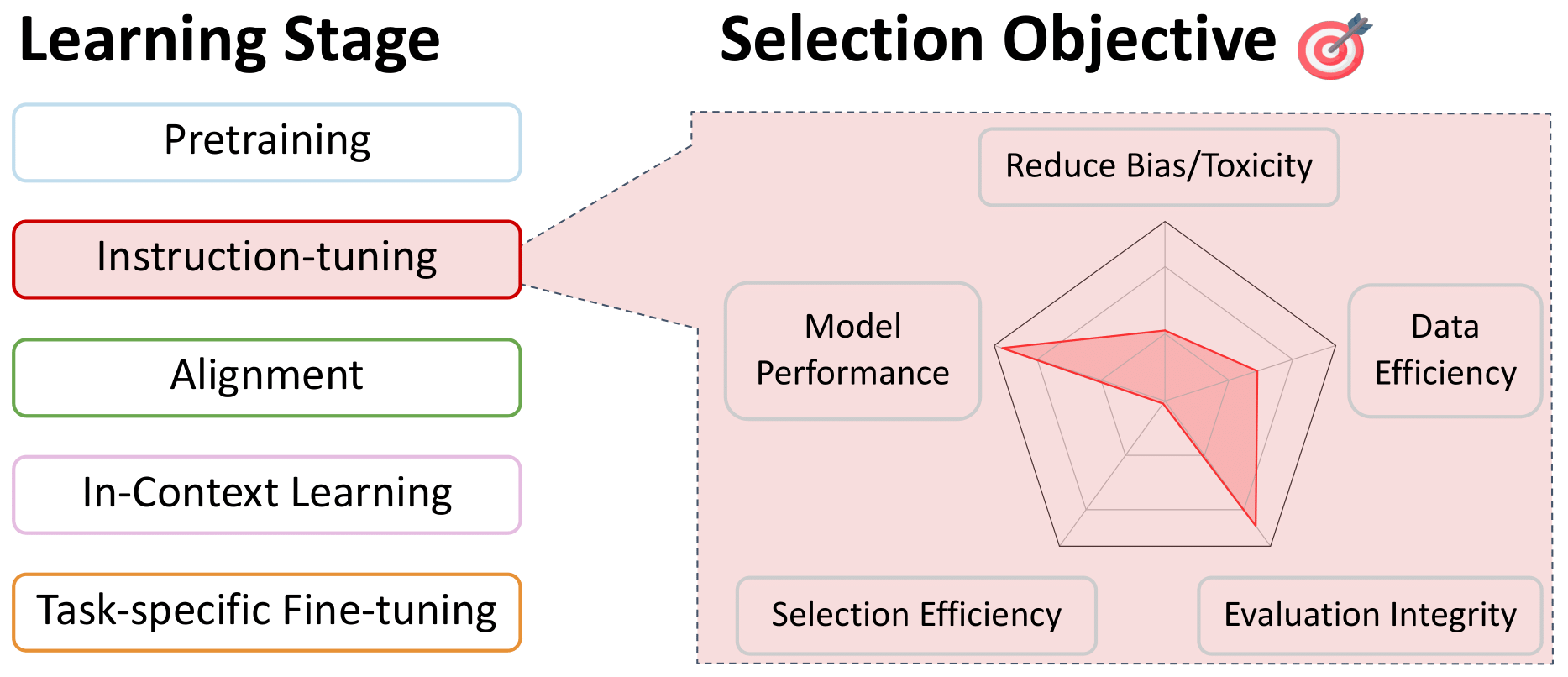

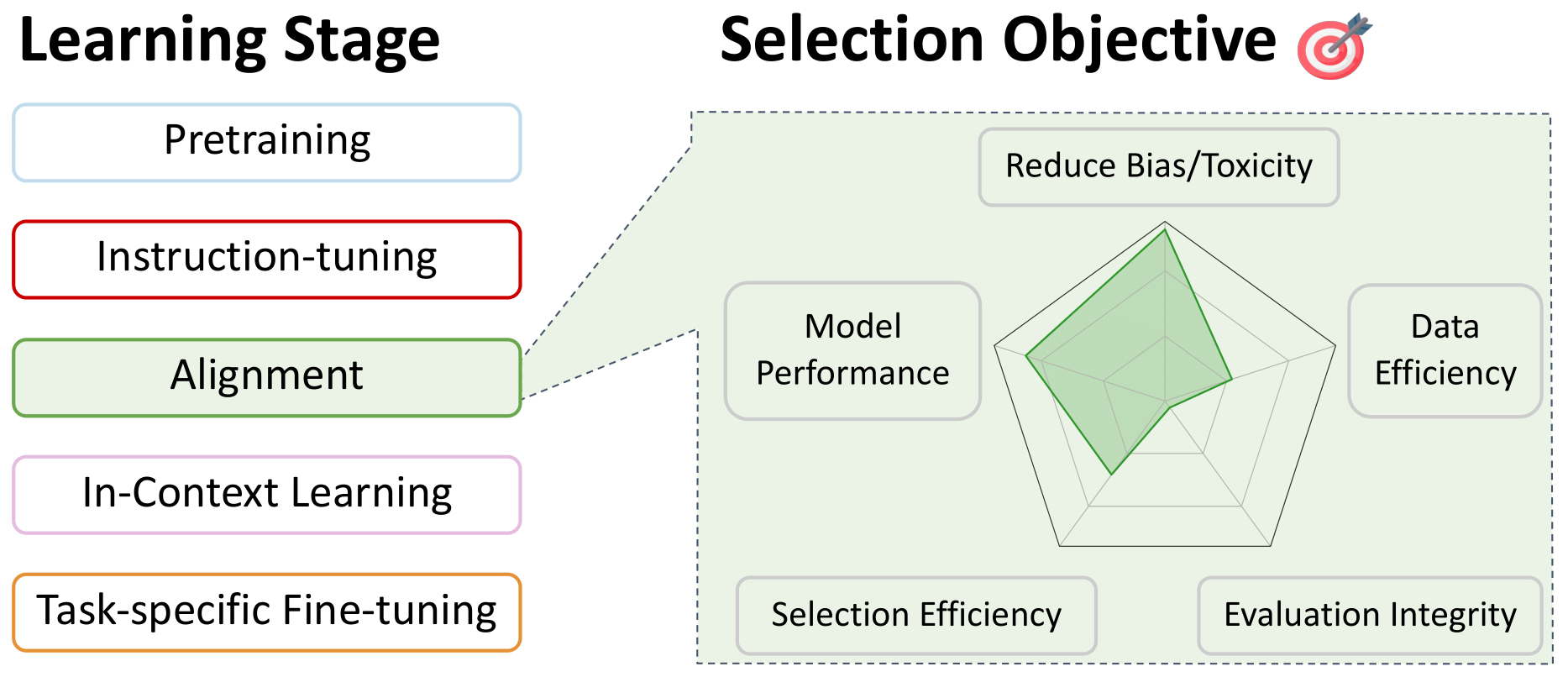

This repo is a convenient listing of papers relevant to data selection for language models at all stages of training. It is meant to be a resource for the community, so please contribute if you see anything missing!

For more detail on these and other works, see our survey paper, A Survey on Data Selection for Language Models, by this incredible team: Alon Albalak, Yanai Elazar, Sang Michael Xie, Shayne Longpre, Nathan Lambert, Xinyi Wang, Niklas Muennighoff, Bairu Hou, Liangming Pan, Haewon Jeong, Colin Raffel, Shiyu Chang, Tatsunori Hashimoto, William Yang Wang

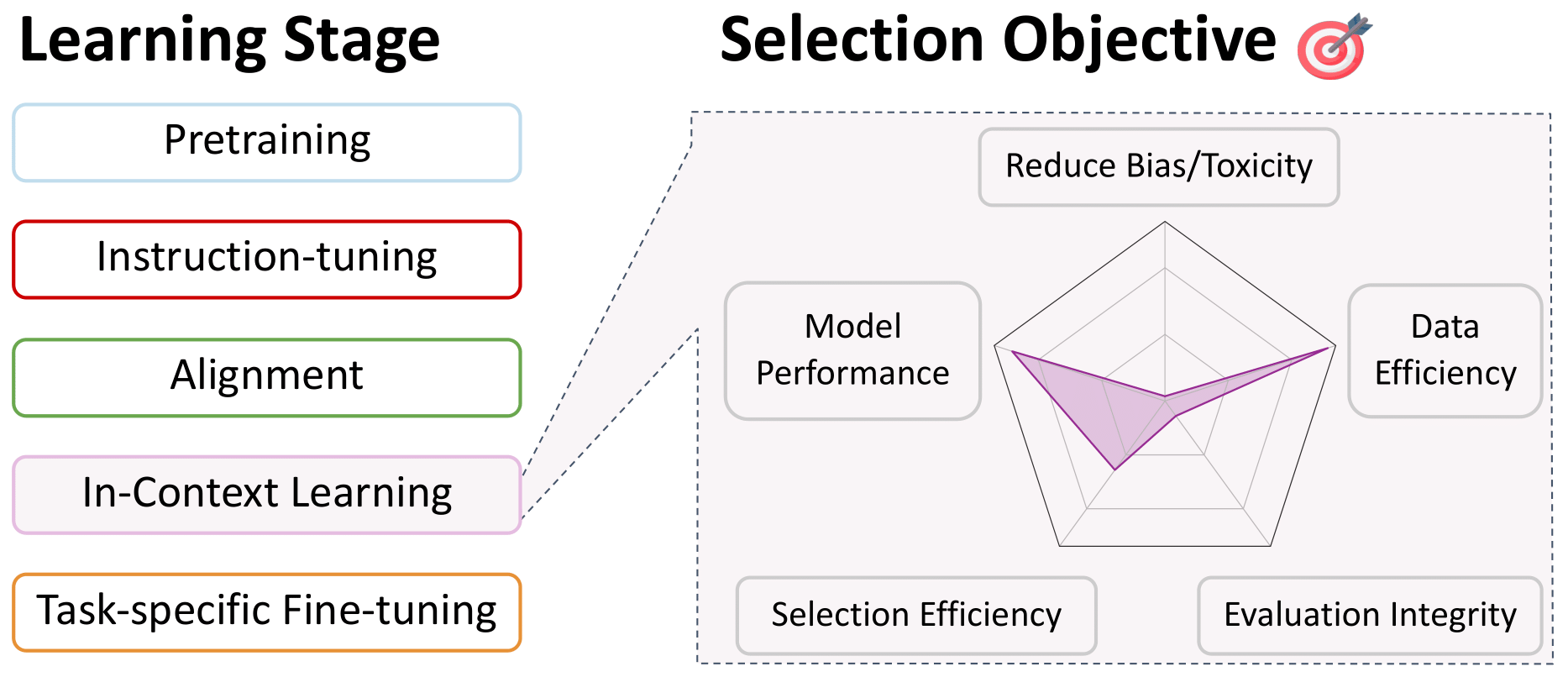

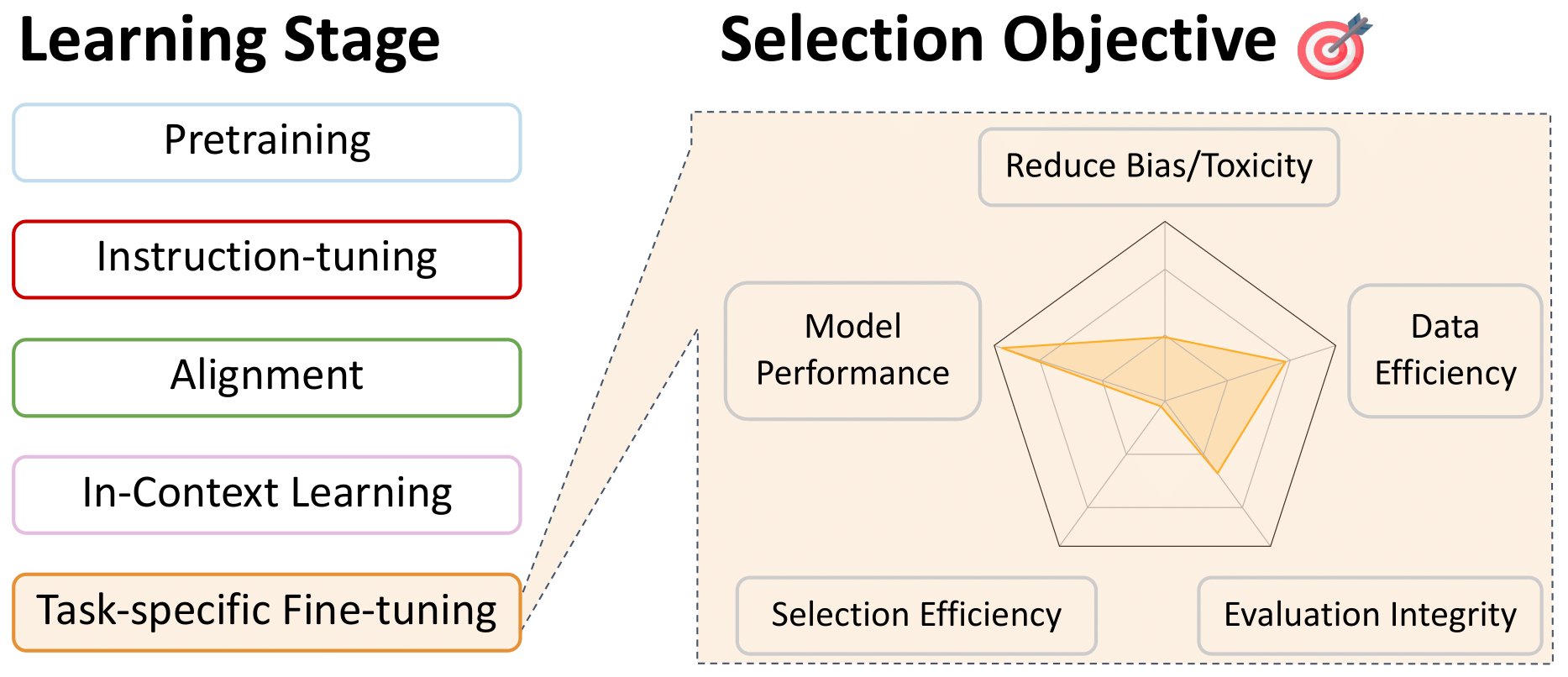

- Data Selection for Pretraining

- Data Selection for Instruction-Tuning and Multitask Training

- Data Selection for Preference Fine-tuning: Alignment

- Data Selection for In-Context Learning

- Data Selection for Task-specific Fine-tuning

- FastText.zip: Compressing text classification models: 2016

Armand Joulin and Edouard Grave and Piotr Bojanowski and Matthijs Douze and Hervé Jégou and Tomas Mikolov

- Learning Word Vectors for 157 Languages: 2018

Grave, Edouard and Bojanowski, Piotr and Gupta, Prakhar and Joulin, Armand and Mikolov, Tomas

- Cross-lingual Language Model Pretraining: 2019

Conneau, Alexis and Lample, Guillaume

- Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer: 2020

Raffel, Colin and Shazeer, Noam and Roberts, Adam... 3 hidden ... Zhou, Yanqi and Li, Wei and Liu, Peter J.

- Language ID in the wild: Unexpected challenges on the path to a thousand-language web text corpus: 2020

Caswell, Isaac and Breiner, Theresa and van Esch, Daan and Bapna, Ankur

- Unsupervised Cross-lingual Representation Learning at Scale: 2020

Conneau, Alexis and Khandelwal, Kartikay and Goyal, Naman... 4 hidden ... Ott, Myle and Zettlemoyer, Luke and Stoyanov, Veselin

- CCNet: Extracting High Quality Monolingual Datasets from Web Crawl Data: 2020

Wenzek, Guillaume and Lachaux, Marie-Anne and Conneau, Alexis... 1 hidden ... Guzmán, Francisco and Joulin, Armand and Grave, Edouard

- A reproduction of Apple's bi-directional LSTM models for language identification in short strings: 2021

Toftrup, Mads and Asger Sørensen, Søren and Ciosici, Manuel R. and Assent, Ira

- Evaluating Large Language Models Trained on Code: 2021

Mark Chen and Jerry Tworek and Heewoo Jun... 52 hidden ... Sam McCandlish and Ilya Sutskever and Wojciech Zaremba

- mT5: A Massively Multilingual Pre-trained Text-to-Text Transformer: 2021

Xue, Linting and Constant, Noah and Roberts, Adam... 2 hidden ... Siddhant, Aditya and Barua, Aditya and Raffel, Colin

- Competition-level code generation with AlphaCode: 2022

Li, Yujia and Choi, David and Chung, Junyoung... 20 hidden ... de Freitas, Nando and Kavukcuoglu, Koray and Vinyals, Oriol

- PaLM: Scaling Language Modeling with Pathways: 2022

Aakanksha Chowdhery and Sharan Narang and Jacob Devlin... 61 hidden ... Jeff Dean and Slav Petrov and Noah Fiedel

- The BigScience ROOTS Corpus: A 1.6TB Composite Multilingual Dataset: 2022

Laurençon, Hugo and Saulnier, Lucile and Wang, Thomas... 48 hidden ... Mitchell, Margaret and Luccioni, Sasha Alexandra and Jernite, Yacine

- Writing System and Speaker Metadata for 2,800+ Language Varieties: 2022

van Esch, Daan and Lucassen, Tamar and Ruder, Sebastian and Caswell, Isaac and Rivera, Clara

- FinGPT: Large Generative Models for a Small Language: 2023

Luukkonen, Risto and Komulainen, Ville and Luoma, Jouni... 5 hidden ... Muennighoff, Niklas and Piktus, Aleksandra and others

- MC^2: A Multilingual Corpus of Minority Languages in China: 2023

Zhang, Chen and Tao, Mingxu and Huang, Quzhe and Lin, Jiuheng and Chen, Zhibin and Feng, Yansong

- Madlad-400: A multilingual and document-level large audited dataset: 2023

Kudugunta, Sneha and Caswell, Isaac and Zhang, Biao... 5 hidden ... Stella, Romi and Bapna, Ankur and others

- The RefinedWeb Dataset for Falcon LLM: Outperforming Curated Corpora with Web Data, and Web Data Only: 2023

Guilherme Penedo and Quentin Malartic and Daniel Hesslow... 3 hidden ... Baptiste Pannier and Ebtesam Almazrouei and Julien Launay

- Dolma: an Open Corpus of Three Trillion Tokens for Language Model Pretraining Research: 2024

Luca Soldaini and Rodney Kinney and Akshita Bhagia... 30 hidden ... Dirk Groeneveld and Jesse Dodge and Kyle Lo

- Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer: 2020

Raffel, Colin and Shazeer, Noam and Roberts, Adam... 3 hidden ... Zhou, Yanqi and Li, Wei and Liu, Peter J.

- Language Models are Few-Shot Learners: 2020

Brown, Tom and Mann, Benjamin and Ryder, Nick... 25 hidden ... Radford, Alec and Sutskever, Ilya and Amodei, Dario

- The Pile: An 800GB Dataset of Diverse Text for Language Modeling: 2020

Leo Gao and Stella Biderman and Sid Black... 6 hidden ... Noa Nabeshima and Shawn Presser and Connor Leahy

- Evaluating Large Language Models Trained on Code: 2021

Mark Chen and Jerry Tworek and Heewoo Jun... 52 hidden ... Sam McCandlish and Ilya Sutskever and Wojciech Zaremba

- mT5: A Massively Multilingual Pre-trained Text-to-Text Transformer: 2021

Xue, Linting and Constant, Noah and Roberts, Adam... 2 hidden ... Siddhant, Aditya and Barua, Aditya and Raffel, Colin

- Scaling Language Models: Methods, Analysis & Insights from Training Gopher: 2022

Jack W. Rae and Sebastian Borgeaud and Trevor Cai... 74 hidden ... Demis Hassabis and Koray Kavukcuoglu and Geoffrey Irving

- The BigScience ROOTS Corpus: A 1.6TB Composite Multilingual Dataset: 2022

Laurençon, Hugo and Saulnier, Lucile and Wang, Thomas... 48 hidden ... Mitchell, Margaret and Luccioni, Sasha Alexandra and Jernite, Yacine

- HTLM: Hyper-Text Pre-Training and Prompting of Language Models: 2022

Armen Aghajanyan and Dmytro Okhonko and Mike Lewis... 1 hidden ... Hu Xu and Gargi Ghosh and Luke Zettlemoyer

- LLaMA: Open and Efficient Foundation Language Models: 2023

Hugo Touvron and Thibaut Lavril and Gautier Izacard... 8 hidden ... Armand Joulin and Edouard Grave and Guillaume Lample

- The RefinedWeb Dataset for Falcon LLM: Outperforming Curated Corpora with Web Data, and Web Data Only: 2023

Guilherme Penedo and Quentin Malartic and Daniel Hesslow... 3 hidden ... Baptiste Pannier and Ebtesam Almazrouei and Julien Launay

- The foundation model transparency index: 2023

Bommasani, Rishi and Klyman, Kevin and Longpre, Shayne... 2 hidden ... Xiong, Betty and Zhang, Daniel and Liang, Percy

- Dolma: an Open Corpus of Three Trillion Tokens for Language Model Pretraining Research: 2024

Luca Soldaini and Rodney Kinney and Akshita Bhagia... 30 hidden ... Dirk Groeneveld and Jesse Dodge and Kyle Lo

- KenLM: Faster and Smaller Language Model Queries: 2011

Heafield, Kenneth

- FastText.zip: Compressing text classification models: 2016

Armand Joulin and Edouard Grave and Piotr Bojanowski and Matthijs Douze and Hervé Jégou and Tomas Mikolov

- Learning Word Vectors for 157 Languages: 2018

Grave, Edouard and Bojanowski, Piotr and Gupta, Prakhar and Joulin, Armand and Mikolov, Tomas

- Language Models are Unsupervised Multitask Learners: 2019

Alec Radford and Jeff Wu and Rewon Child and David Luan and Dario Amodei and Ilya Sutskever

- Language Models are Few-Shot Learners: 2020

Brown, Tom and Mann, Benjamin and Ryder, Nick... 25 hidden ... Radford, Alec and Sutskever, Ilya and Amodei, Dario

- The Pile: An 800GB Dataset of Diverse Text for Language Modeling: 2020

Leo Gao and Stella Biderman and Sid Black... 6 hidden ... Noa Nabeshima and Shawn Presser and Connor Leahy

- CCNet: Extracting High Quality Monolingual Datasets from Web Crawl Data: 2020

Wenzek, Guillaume and Lachaux, Marie-Anne and Conneau, Alexis... 1 hidden ... Guzmán, Francisco and Joulin, Armand and Grave, Edouard

- Detoxifying language models risks marginalizing minority voices: 2021

Xu, Albert and Pathak, Eshaan and Wallace, Eric and Gururangan, Suchin and Sap, Maarten and Klein, Dan

- PaLM: Scaling Language Modeling with Pathways: 2022

Aakanksha Chowdhery and Sharan Narang and Jacob Devlin... 61 hidden ... Jeff Dean and Slav Petrov and Noah Fiedel

- Scaling Language Models: Methods, Analysis & Insights from Training Gopher: 2022

Jack W. Rae and Sebastian Borgeaud and Trevor Cai... 74 hidden ... Demis Hassabis and Koray Kavukcuoglu and Geoffrey Irving

- Whose Language Counts as High Quality? Measuring Language Ideologies in Text Data Selection: 2022

Gururangan, Suchin and Card, Dallas and Dreier, Sarah... 2 hidden ... Wang, Zeyu and Zettlemoyer, Luke and Smith, Noah A.

- GLaM: Efficient Scaling of Language Models with Mixture-of-Experts: 2022

Du, Nan and Huang, Yanping and Dai, Andrew M... 21 hidden ... Wu, Yonghui and Chen, Zhifeng and Cui, Claire

- A Pretrainer's Guide to Training Data: Measuring the Effects of Data Age, Domain Coverage, Quality, & Toxicity: 2023

Shayne Longpre and Gregory Yauney and Emily Reif... 5 hidden ... Kevin Robinson and David Mimno and Daphne Ippolito

- Data Selection for Language Models via Importance Resampling: 2023

Sang Michael Xie and Shibani Santurkar and Tengyu Ma and Percy Liang

- The RefinedWeb Dataset for Falcon LLM: Outperforming Curated Corpora with Web Data, and Web Data Only: 2023

Guilherme Penedo and Quentin Malartic and Daniel Hesslow... 3 hidden ... Baptiste Pannier and Ebtesam Almazrouei and Julien Launay

- Dolma: an Open Corpus of Three Trillion Tokens for Language Model Pretraining Research: 2024

Luca Soldaini and Rodney Kinney and Akshita Bhagia... 30 hidden ... Dirk Groeneveld and Jesse Dodge and Kyle Lo

- Text data acquisition for domain-specific language models: 2006

Sethy, Abhinav and Georgiou, Panayiotis G. and Narayanan, Shrikanth

- Intelligent Selection of Language Model Training Data: 2010

Moore, Robert C. and Lewis, William

- Cynical Selection of Language Model Training Data: 2017

Amittai Axelrod

- Automatic Document Selection for Efficient Encoder Pretraining: 2022

Feng, Yukun and Xia, Patrick and Van Durme, Benjamin and Sedoc, João

- Data Selection for Language Models via Importance Resampling: 2023

Sang Michael Xie and Shibani Santurkar and Tengyu Ma and Percy Liang

- DsDm: Model-Aware Dataset Selection with Datamodels: 2024

Logan Engstrom and Axel Feldmann and Aleksander Madry

- Space/time trade-offs in hash coding with allowable errors: 1970

Bloom, Burton H.

- Suffix Arrays: A New Method for On-Line String Searches: 1993

Manber, Udi and Myers, Gene

- On the resemblance and containment of documents: 1997

Broder, A.Z.

- Similarity Estimation Techniques from Rounding Algorithms: 2002

Charikar, Moses S.

- URL normalization for de-duplication of web pages: 2009

Agarwal, Amit and Koppula, Hema Swetha and Leela, Krishna P.... 3 hidden ... Haty, Chittaranjan and Roy, Anirban and Sasturkar, Amit

- Asynchronous pipelines for processing huge corpora on medium to low resource infrastructures: 2019

Pedro Javier Ortiz Suárez and Benoît Sagot and Laurent Romary

- Language Models are Few-Shot Learners: 2020

Brown, Tom and Mann, Benjamin and Ryder, Nick... 25 hidden ... Radford, Alec and Sutskever, Ilya and Amodei, Dario

- The Pile: An 800GB Dataset of Diverse Text for Language Modeling: 2020

Leo Gao and Stella Biderman and Sid Black... 6 hidden ... Noa Nabeshima and Shawn Presser and Connor Leahy

- CCNet: Extracting High Quality Monolingual Datasets from Web Crawl Data: 2020

Wenzek, Guillaume and Lachaux, Marie-Anne and Conneau, Alexis... 1 hidden ... Guzmán, Francisco and Joulin, Armand and Grave, Edouard

- Beyond neural scaling laws: beating power law scaling via data pruning: 2022

Ben Sorscher and Robert Geirhos and Shashank Shekhar and Surya Ganguli and Ari S. Morcos

- Deduplicating Training Data Makes Language Models Better: 2022

Lee, Katherine and Ippolito, Daphne and Nystrom, Andrew... 1 hidden ... Eck, Douglas and Callison-Burch, Chris and Carlini, Nicholas

- MTEB: Massive text embedding benchmark: 2022

Muennighoff, Niklas and Tazi, Nouamane and Magne, Loïc and Reimers, Nils

- PaLM: Scaling Language Modeling with Pathways: 2022

Aakanksha Chowdhery and Sharan Narang and Jacob Devlin... 61 hidden ... Jeff Dean and Slav Petrov and Noah Fiedel

- Scaling Language Models: Methods, Analysis & Insights from Training Gopher: 2022

Jack W. Rae and Sebastian Borgeaud and Trevor Cai... 74 hidden ... Demis Hassabis and Koray Kavukcuoglu and Geoffrey Irving

- Sgpt: Gpt sentence embeddings for semantic search: 2022

Muennighoff, Niklas

- The BigScience ROOTS Corpus: A 1.6TB Composite Multilingual Dataset: 2022

Laurençon, Hugo and Saulnier, Lucile and Wang, Thomas... 48 hidden ... Mitchell, Margaret and Luccioni, Sasha Alexandra and Jernite, Yacine

- C-pack: Packaged resources to advance general chinese embedding: 2023

Xiao, Shitao and Liu, Zheng and Zhang, Peitian and Muennighoff, Niklas

- D4: Improving LLM Pretraining via Document De-Duplication and Diversification: 2023

Kushal Tirumala and Daniel Simig and Armen Aghajanyan and Ari S. Morcos

- Large-scale Near-deduplication Behind BigCode: 2023

Mou, Chenghao

- Paloma: A Benchmark for Evaluating Language Model Fit: 2023

Ian Magnusson and Akshita Bhagia and Valentin Hofmann... 10 hidden ... Noah A. Smith and Kyle Richardson and Jesse Dodge

- Quantifying Memorization Across Neural Language Models: 2023

Nicholas Carlini and Daphne Ippolito and Matthew Jagielski and Katherine Lee and Florian Tramer and Chiyuan Zhang

- SemDeDup: Data-efficient learning at web-scale through semantic deduplication: 2023

Abbas, Amro and Tirumala, Kushal and Simig, Dániel and Ganguli, Surya and Morcos, Ari S

- The RefinedWeb Dataset for Falcon LLM: Outperforming Curated Corpora with Web Data, and Web Data Only: 2023

Guilherme Penedo and Quentin Malartic and Daniel Hesslow... 3 hidden ... Baptiste Pannier and Ebtesam Almazrouei and Julien Launay

- What's In My Big Data?: 2023

Elazar, Yanai and Bhagia, Akshita and Magnusson, Ian... 5 hidden ... Soldaini, Luca and Singh, Sameer and others

- Dolma: an Open Corpus of Three Trillion Tokens for Language Model Pretraining Research: 2024

Luca Soldaini and Rodney Kinney and Akshita Bhagia... 30 hidden ... Dirk Groeneveld and Jesse Dodge and Kyle Lo

- Generative Representational Instruction Tuning: 2024

Muennighoff, Niklas and Su, Hongjin and Wang, Liang... 2 hidden ... Yu, Tao and Singh, Amanpreet and Kiela, Douwe

- Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer: 2020

Raffel, Colin and Shazeer, Noam and Roberts, Adam... 3 hidden ... Zhou, Yanqi and Li, Wei and Liu, Peter J.

- mT5: A Massively Multilingual Pre-trained Text-to-Text Transformer: 2021

Xue, Linting and Constant, Noah and Roberts, Adam... 2 hidden ... Siddhant, Aditya and Barua, Aditya and Raffel, Colin

- Perplexed by Quality: A Perplexity-based Method for Adult and Harmful Content Detection in Multilingual Heterogeneous Web Data: 2022

Tim Jansen and Yangling Tong and Victoria Zevallos and Pedro Ortiz Suarez

- Scaling Language Models: Methods, Analysis & Insights from Training Gopher: 2022

Jack W. Rae and Sebastian Borgeaud and Trevor Cai... 74 hidden ... Demis Hassabis and Koray Kavukcuoglu and Geoffrey Irving

- The BigScience ROOTS Corpus: A 1.6TB Composite Multilingual Dataset: 2022

Laurençon, Hugo and Saulnier, Lucile and Wang, Thomas... 48 hidden ... Mitchell, Margaret and Luccioni, Sasha Alexandra and Jernite, Yacine

- Whose Language Counts as High Quality? Measuring Language Ideologies in Text Data Selection: 2022

Gururangan, Suchin and Card, Dallas and Dreier, Sarah... 2 hidden ... Wang, Zeyu and Zettlemoyer, Luke and Smith, Noah A.

- A Pretrainer's Guide to Training Data: Measuring the Effects of Data Age, Domain Coverage, Quality, & Toxicity: 2023

Shayne Longpre and Gregory Yauney and Emily Reif... 5 hidden ... Kevin Robinson and David Mimno and Daphne Ippolito

- AI image training dataset found to include child sexual abuse imagery: 2023

David, Emilia

- Detecting Personal Information in Training Corpora: an Analysis: 2023

Subramani, Nishant and Luccioni, Sasha and Dodge, Jesse and Mitchell, Margaret

- GPT-4 Technical Report: 2023

OpenAI and Josh Achiam... 276 hidden ... Juntang Zhuang and William Zhuk and Barret Zoph

- SantaCoder: don't reach for the stars!: 2023

Allal, Loubna Ben and Li, Raymond and Kocetkov, Denis... 5 hidden ... Gu, Alex and Dey, Manan and others

- The RefinedWeb Dataset for Falcon LLM: Outperforming Curated Corpora with Web Data, and Web Data Only: 2023

Guilherme Penedo and Quentin Malartic and Daniel Hesslow... 3 hidden ... Baptiste Pannier and Ebtesam Almazrouei and Julien Launay

- The foundation model transparency index: 2023

Bommasani, Rishi and Klyman, Kevin and Longpre, Shayne... 2 hidden ... Xiong, Betty and Zhang, Daniel and Liang, Percy

- What's In My Big Data?: 2023

Elazar, Yanai and Bhagia, Akshita and Magnusson, Ian... 5 hidden ... Soldaini, Luca and Singh, Sameer and others

- Dolma: an Open Corpus of Three Trillion Tokens for Language Model Pretraining Research: 2024

Luca Soldaini and Rodney Kinney and Akshita Bhagia... 30 hidden ... Dirk Groeneveld and Jesse Dodge and Kyle Lo

- OLMo: Accelerating the Science of Language Models: 2024

Groeneveld, Dirk and Beltagy, Iz and Walsh, Pete... 5 hidden ... Magnusson, Ian and Wang, Yizhong and others

- Bloom: A 176b-parameter open-access multilingual language model: 2022

Workshop, BigScience and Scao, Teven Le and Fan, Angela... 5 hidden ... Luccioni, Alexandra Sasha and Yvon, François and others

- Quality at a Glance: An Audit of Web-Crawled Multilingual Datasets: 2022

Kreutzer, Julia and Caswell, Isaac and Wang, Lisa... 46 hidden ... Ahia, Oghenefego and Agrawal, Sweta and Adeyemi, Mofetoluwa

- The BigScience ROOTS Corpus: A 1.6TB Composite Multilingual Dataset: 2022

Laurençon, Hugo and Saulnier, Lucile and Wang, Thomas... 48 hidden ... Mitchell, Margaret and Luccioni, Sasha Alexandra and Jernite, Yacine

- What language model to train if you have one million gpu hours?: 2022

Scao, Teven Le and Wang, Thomas and Hesslow, Daniel... 5 hidden ... Muennighoff, Niklas and Phang, Jason and others

- Madlad-400: A multilingual and document-level large audited dataset: 2023

Kudugunta, Sneha and Caswell, Isaac and Zhang, Biao... 5 hidden ... Stella, Romi and Bapna, Ankur and others

- Scaling multilingual language models under constrained data: 2023

Scao, Teven Le

- Aya Dataset: An Open-Access Collection for Multilingual Instruction Tuning: 2024

Shivalika Singh and Freddie Vargus and Daniel Dsouza... 27 hidden ... Ahmet Üstün and Marzieh Fadaee and Sara Hooker

- The Nonstochastic Multiarmed Bandit Problem: 2002

Auer, Peter and Cesa-Bianchi, Nicolò and Freund, Yoav and Schapire, Robert E.

- Distributionally Robust Language Modeling: 2019

Oren, Yonatan and Sagawa, Shiori and Hashimoto, Tatsunori B. and Liang, Percy

- Distributionally Robust Neural Networks: 2020

Shiori Sagawa and Pang Wei Koh and Tatsunori B. Hashimoto and Percy Liang

- Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer: 2020

Raffel, Colin and Shazeer, Noam and Roberts, Adam... 3 hidden ... Zhou, Yanqi and Li, Wei and Liu, Peter J.

- The Pile: An 800GB Dataset of Diverse Text for Language Modeling: 2020

Leo Gao and Stella Biderman and Sid Black... 6 hidden ... Noa Nabeshima and Shawn Presser and Connor Leahy

- Scaling Language Models: Methods, Analysis & Insights from Training Gopher: 2022

Jack W. Rae and Sebastian Borgeaud and Trevor Cai... 74 hidden ... Demis Hassabis and Koray Kavukcuoglu and Geoffrey Irving

- GLaM: Efficient Scaling of Language Models with Mixture-of-Experts: 2022

Du, Nan and Huang, Yanping and Dai, Andrew M... 21 hidden ... Wu, Yonghui and Chen, Zhifeng and Cui, Claire

- Cross-Lingual Supervision improves Large Language Models Pre-training: 2023

Andrea Schioppa and Xavier Garcia and Orhan Firat

- DoGE: Domain Reweighting with Generalization Estimation: 2023

Simin Fan and Matteo Pagliardini and Martin Jaggi

- DoReMi: Optimizing Data Mixtures Speeds Up Language Model Pretraining: 2023

Sang Michael Xie and Hieu Pham and Xuanyi Dong... 4 hidden ... Quoc V Le and Tengyu Ma and Adams Wei Yu

- Efficient Online Data Mixing For Language Model Pre-Training: 2023

Alon Albalak and Liangming Pan and Colin Raffel and William Yang Wang

- LLaMA: Open and Efficient Foundation Language Models: 2023

Hugo Touvron and Thibaut Lavril and Gautier Izacard... 8 hidden ... Armand Joulin and Edouard Grave and Guillaume Lample

- Pythia: A Suite for Analyzing Large Language Models Across Training and Scaling: 2023

Biderman, Stella and Schoelkopf, Hailey and Anthony, Quentin Gregory... 7 hidden ... Skowron, Aviya and Sutawika, Lintang and Van Der Wal, Oskar

- Scaling Data-Constrained Language Models: 2023

Niklas Muennighoff and Alexander M Rush and Boaz Barak... 3 hidden ... Sampo Pyysalo and Thomas Wolf and Colin Raffel

- Sheared LLaMA: Accelerating Language Model Pre-training via Structured Pruning: 2023

Mengzhou Xia and Tianyu Gao and Zhiyuan Zeng and Danqi Chen

- Skill-it! A Data-Driven Skills Framework for Understanding and Training Language Models: 2023

Mayee F. Chen and Nicholas Roberts and Kush Bhatia... 1 hidden ... Ce Zhang and Frederic Sala and Christopher Ré

- The natural language decathlon: Multitask learning as question answering: 2018

McCann, Bryan and Keskar, Nitish Shirish and Xiong, Caiming and Socher, Richard

- Unifying question answering, text classification, and regression via span extraction: 2019

Keskar, Nitish Shirish and McCann, Bryan and Xiong, Caiming and Socher, Richard

- Multi-Task Deep Neural Networks for Natural Language Understanding: 2019

Liu, Xiaodong and He, Pengcheng and Chen, Weizhu and Gao, Jianfeng

- UnifiedQA: Crossing Format Boundaries with a Single QA System: 2020

Khashabi, Daniel and Min, Sewon and Khot, Tushar... 1 hidden ... Tafjord, Oyvind and Clark, Peter and Hajishirzi, Hannaneh

- Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer: 2020

Raffel, Colin and Shazeer, Noam and Roberts, Adam... 3 hidden ... Zhou, Yanqi and Li, Wei and Liu, Peter J.

- Muppet: Massive Multi-task Representations with Pre-Finetuning: 2021

Aghajanyan, Armen and Gupta, Anchit and Shrivastava, Akshat and Chen, Xilun and Zettlemoyer, Luke and Gupta, Sonal

- Finetuned language models are zero-shot learners: 2021

Wei, Jason and Bosma, Maarten and Zhao, Vincent Y.... 3 hidden ... Du, Nan and Dai, Andrew M. and Le, Quoc V.

- Cross-task generalization via natural language crowdsourcing instructions: 2021

Mishra, Swaroop and Khashabi, Daniel and Baral, Chitta and Hajishirzi, Hannaneh

- Nl-augmenter: A framework for task-sensitive natural language augmentation: 2021

Dhole, Kaustubh D and Gangal, Varun and Gehrmann, Sebastian... 5 hidden ... Shrivastava, Ashish and Tan, Samson and others

- Ext5: Towards extreme multi-task scaling for transfer learning: 2021

Aribandi, Vamsi and Tay, Yi and Schuster, Tal... 5 hidden ... Bahri, Dara and Ni, Jianmo and others

- Super-NaturalInstructions: Generalization via Declarative Instructions on 1600+ NLP Tasks: 2022

Wang, Yizhong and Mishra, Swaroop and Alipoormolabashi, Pegah... 29 hidden ... Patro, Sumanta and Dixit, Tanay and Shen, Xudong

- Scaling instruction-finetuned language models: 2022

Chung, Hyung Won and Hou, Le and Longpre, Shayne... 5 hidden ... Dehghani, Mostafa and Brahma, Siddhartha and others

- Bloom+1: Adding language support to bloom for zero-shot prompting: 2022

Yong, Zheng-Xin and Schoelkopf, Hailey and Muennighoff, Niklas... 5 hidden ... Kasai, Jungo and Baruwa, Ahmed and others

- OPT-IML: Scaling Language Model Instruction Meta Learning through the Lens of Generalization: 2022

Srinivasan Iyer and Xi Victoria Lin and Ramakanth Pasunuru... 12 hidden ... Asli Celikyilmaz and Luke Zettlemoyer and Ves Stoyanov

- MetaICL: Learning to Learn In Context: 2022

Min, Sewon and Lewis, Mike and Zettlemoyer, Luke and Hajishirzi, Hannaneh

- Unnatural Instructions: Tuning Language Models with (Almost) No Human Labor: 2022

Honovich, Or and Scialom, Thomas and Levy, Omer and Schick, Timo

- Crosslingual generalization through multitask finetuning: 2022

Muennighoff, Niklas and Wang, Thomas and Sutawika, Lintang... 5 hidden ... Yong, Zheng-Xin and Schoelkopf, Hailey and others

- Multitask Prompted Training Enables Zero-Shot Task Generalization: 2022

Victor Sanh and Albert Webson and Colin Raffel... 34 hidden ... Leo Gao and Thomas Wolf and Alexander M Rush

- Prometheus: Inducing fine-grained evaluation capability in language models: 2023

Kim, Seungone and Shin, Jamin and Cho, Yejin... 5 hidden ... Kim, Sungdong and Thorne, James and others

- SlimOrca: An Open Dataset of GPT-4 Augmented FLAN Reasoning Traces, with Verification: 2023

Wing Lian and Guan Wang and Bleys Goodson... 1 hidden ... Austin Cook and Chanvichet Vong and "Teknium"

- Is A.I. Art Stealing from Artists?: 2023

Chayka, Kyle

- Paul Tremblay, Mona Awad vs. OpenAI, Inc., et al.: 2023

Saveri, Joseph R. and Zirpoli, Cadio and Young, Christopher K.L. and McMahon, Kathleen J.

- Making Large Language Models Better Data Creators: 2023

Lee, Dong-Ho and Pujara, Jay and Sewak, Mohit and White, Ryen and Jauhar, Sujay

- The Flan Collection: Designing Data and Methods for Effective Instruction Tuning: 2023

Shayne Longpre and Le Hou and Tu Vu... 5 hidden ... Barret Zoph and Jason Wei and Adam Roberts

- Wizardlm: Empowering large language models to follow complex instructions: 2023

Xu, Can and Sun, Qingfeng and Zheng, Kai... 2 hidden ... Feng, Jiazhan and Tao, Chongyang and Jiang, Daxin

- LIMA: Less Is More for Alignment: 2023

Chunting Zhou and Pengfei Liu and Puxin Xu... 9 hidden ... Mike Lewis and Luke Zettlemoyer and Omer Levy

- Camels in a Changing Climate: Enhancing LM Adaptation with Tulu 2: 2023

Hamish Ivison and Yizhong Wang and Valentina Pyatkin... 5 hidden ... Noah A. Smith and Iz Beltagy and Hannaneh Hajishirzi

- Self-Instruct: Aligning Language Models with Self-Generated Instructions: 2023

Wang, Yizhong and Kordi, Yeganeh and Mishra, Swaroop... 1 hidden ... Smith, Noah A. and Khashabi, Daniel and Hajishirzi, Hannaneh

- What Makes Good Data for Alignment? A Comprehensive Study of Automatic Data Selection in Instruction Tuning: 2023

Liu, Wei and Zeng, Weihao and He, Keqing and Jiang, Yong and He, Junxian

- Instruction Tuning for Large Language Models: A Survey: 2023

Shengyu Zhang and Linfeng Dong and Xiaoya Li... 5 hidden ... Tianwei Zhang and Fei Wu and Guoyin Wang

- Stanford Alpaca: An Instruction-following LLaMA model: 2023

Rohan Taori and Ishaan Gulrajani and Tianyi Zhang... 2 hidden ... Carlos Guestrin and Percy Liang and Tatsunori B. Hashimoto

- How Far Can Camels Go? Exploring the State of Instruction Tuning on Open Resources: 2023

Yizhong Wang and Hamish Ivison and Pradeep Dasigi... 5 hidden ... Noah A. Smith and Iz Beltagy and Hannaneh Hajishirzi

- OpenAssistant Conversations--Democratizing Large Language Model Alignment: 2023

Köpf, Andreas and Kilcher, Yannic and von Rütte, Dimitri... 5 hidden ... Stanley, Oliver and Nagyfi, Richárd and others

- OctoPack: Instruction Tuning Code Large Language Models: 2023

Niklas Muennighoff and Qian Liu and Armel Zebaze... 4 hidden ... Xiangru Tang and Leandro von Werra and Shayne Longpre

- Self: Language-driven self-evolution for large language model: 2023

Lu, Jianqiao and Zhong, Wanjun and Huang, Wenyong... 3 hidden ... Wang, Weichao and Shang, Lifeng and Liu, Qun

- The Flan Collection: Designing Data and Methods for Effective Instruction Tuning: 2023

Longpre, Shayne and Hou, Le and Vu, Tu... 5 hidden ... Zoph, Barret and Wei, Jason and Roberts, Adam

- #InsTag: Instruction Tagging for Analyzing Supervised Fine-tuning of Large Language Models: 2023

Keming Lu and Hongyi Yuan and Zheng Yuan... 2 hidden ... Chuanqi Tan and Chang Zhou and Jingren Zhou

- Instruction Mining: When Data Mining Meets Large Language Model Finetuning: 2023

Yihan Cao and Yanbin Kang and Chi Wang and Lichao Sun

- Active Instruction Tuning: Improving Cross-Task Generalization by Training on Prompt Sensitive Tasks: 2023

Po-Nien Kung and Fan Yin and Di Wu and Kai-Wei Chang and Nanyun Peng

- The Data Provenance Initiative: A Large Scale Audit of Dataset Licensing & Attribution in AI: 2023

Longpre, Shayne and Mahari, Robert and Chen, Anthony... 5 hidden ... Kabbara, Jad and Perisetla, Kartik and others

- Aya Dataset: An Open-Access Collection for Multilingual Instruction Tuning: 2024

Shivalika Singh and Freddie Vargus and Daniel Dsouza... 27 hidden ... Ahmet Üstün and Marzieh Fadaee and Sara Hooker

- Astraios: Parameter-Efficient Instruction Tuning Code Large Language Models: 2024

Zhuo, Terry Yue and Zebaze, Armel and Suppattarachai, Nitchakarn... 1 hidden ... de Vries, Harm and Liu, Qian and Muennighoff, Niklas

- Aya Model: An Instruction Finetuned Open-Access Multilingual Language Model: 2024

Üstün, Ahmet and Aryabumi, Viraat and Yong, Zheng-Xin... 5 hidden ... Ooi, Hui-Lee and Kayid, Amr and others

- Smaller Language Models are capable of selecting Instruction-Tuning Training Data for Larger Language Models: 2024

Dheeraj Mekala and Alex Nguyen and Jingbo Shang

- Automated Data Curation for Robust Language Model Fine-Tuning: 2024

Jihai Chen and Jonas Mueller

- WebGPT: Browser-assisted question-answering with human feedback: 2021

Nakano, Reiichiro and Hilton, Jacob and Balaji, Suchir... 5 hidden ... Kosaraju, Vineet and Saunders, William and others

- Training a Helpful and Harmless Assistant with Reinforcement Learning from Human Feedback: 2022

Bai, Yuntao and Jones, Andy and Ndousse, Kamal... 5 hidden ... Ganguli, Deep and Henighan, Tom and others

- Understanding Dataset Difficulty with $\mathcal{V}$-Usable Information: 2022

Ethayarajh, Kawin and Choi, Yejin and Swayamdipta, Swabha

- Constitutional AI: Harmlessness from AI Feedback: 2022

Bai, Yuntao and Kadavath, Saurav and Kundu, Sandipan... 5 hidden ... Mirhoseini, Azalia and McKinnon, Cameron and others

- Prometheus: Inducing fine-grained evaluation capability in language models: 2023

Kim, Seungone and Shin, Jamin and Cho, Yejin... 5 hidden ... Kim, Sungdong and Thorne, James and others

- Notus: 2023

Alvaro Bartolome and Gabriel Martin and Daniel Vila

- UltraFeedback: Boosting Language Models with High-quality Feedback: 2023

Ganqu Cui and Lifan Yuan and Ning Ding... 3 hidden ... Guotong Xie and Zhiyuan Liu and Maosong Sun

- Exploration with Principles for Diverse AI Supervision: 2023

Liu, Hao and Zaharia, Matei and Abbeel, Pieter

- Wizardlm: Empowering large language models to follow complex instructions: 2023

Xu, Can and Sun, Qingfeng and Zheng, Kai... 2 hidden ... Feng, Jiazhan and Tao, Chongyang and Jiang, Daxin

- LIMA: Less Is More for Alignment: 2023

Chunting Zhou and Pengfei Liu and Puxin Xu... 9 hidden ... Mike Lewis and Luke Zettlemoyer and Omer Levy

- Shepherd: A Critic for Language Model Generation: 2023

Tianlu Wang and Ping Yu and Xiaoqing Ellen Tan... 4 hidden ... Luke Zettlemoyer and Maryam Fazel-Zarandi and Asli Celikyilmaz

- No Robots: 2023

Nazneen Rajani and Lewis Tunstall and Edward Beeching and Nathan Lambert and Alexander M. Rush and Thomas Wolf

- Starling-7B: Improving LLM Helpfulness & Harmlessness with RLAIF: 2023

Zhu, Banghua and Frick, Evan and Wu, Tianhao and Zhu, Hanlin and Jiao, Jiantao

- Scaling laws for reward model overoptimization: 2023

Gao, Leo and Schulman, John and Hilton, Jacob

- SALMON: Self-Alignment with Principle-Following Reward Models: 2023

Zhiqing Sun and Yikang Shen and Hongxin Zhang... 2 hidden ... David Cox and Yiming Yang and Chuang Gan

- Open Problems and Fundamental Limitations of Reinforcement Learning from Human Feedback: 2023

Stephen Casper and Xander Davies and Claudia Shi... 26 hidden ... David Krueger and Dorsa Sadigh and Dylan Hadfield-Menell

- Camels in a Changing Climate: Enhancing LM Adaptation with Tulu 2: 2023

Hamish Ivison and Yizhong Wang and Valentina Pyatkin... 5 hidden ... Noah A. Smith and Iz Beltagy and Hannaneh Hajishirzi

- Llama 2: Open Foundation and Fine-Tuned Chat Models: 2023

Hugo Touvron and Louis Martin and Kevin Stone... 62 hidden ... Robert Stojnic and Sergey Edunov and Thomas Scialom

- What Makes Good Data for Alignment? A Comprehensive Study of Automatic Data Selection in Instruction Tuning: 2023

Liu, Wei and Zeng, Weihao and He, Keqing and Jiang, Yong and He, Junxian

- HuggingFace H4 Stack Exchange Preference Dataset: 2023

Lambert, Nathan and Tunstall, Lewis and Rajani, Nazneen and Thrush, Tristan

- Textbooks Are All You Need: 2023

Gunasekar, Suriya and Zhang, Yi and Aneja, Jyoti... 5 hidden ... de Rosa, Gustavo and Saarikivi, Olli and others

- Quality-Diversity through AI Feedback: 2023

Herbie Bradley and Andrew Dai and Hannah Teufel... 4 hidden ... Kenneth Stanley and Grégory Schott and Joel Lehman

- Direct preference optimization: Your language model is secretly a reward model: 2023

Rafailov, Rafael and Sharma, Archit and Mitchell, Eric and Ermon, Stefano and Manning, Christopher D and Finn, Chelsea

- Scaling relationship on learning mathematical reasoning with large language models: 2023

Yuan, Zheng and Yuan, Hongyi and Li, Chengpeng and Dong, Guanting and Tan, Chuanqi and Zhou, Chang

- The History and Risks of Reinforcement Learning and Human Feedback: 2023

Lambert, Nathan and Gilbert, Thomas Krendl and Zick, Tom

- Zephyr: Direct distillation of lm alignment: 2023

Tunstall, Lewis and Beeching, Edward and Lambert, Nathan... 5 hidden ... Fourrier, Clémentine and Habib, Nathan and others

- Perils of Self-Feedback: Self-Bias Amplifies in Large Language Models: 2024

Wenda Xu and Guanglei Zhu and Xuandong Zhao and Liangming Pan and Lei Li and William Yang Wang

- Suppressing Pink Elephants with Direct Principle Feedback: 2024

Louis Castricato and Nathan Lile and Suraj Anand and Hailey Schoelkopf and Siddharth Verma and Stella Biderman

- West-of-N: Synthetic Preference Generation for Improved Reward Modeling: 2024

Alizée Pace and Jonathan Mallinson and Eric Malmi and Sebastian Krause and Aliaksei Severyn - Statistical Rejection Sampling Improves Preference Optimization: 2024

Liu, Tianqi and Zhao, Yao and Joshi, Rishabh... 1 hidden ... Saleh, Mohammad and Liu, Peter J and Liu, Jialu - Self-play fine-tuning converts weak language models to strong language models: 2024

Chen, Zixiang and Deng, Yihe and Yuan, Huizhuo and Ji, Kaixuan and Gu, Quanquan - Self-Rewarding Language Models: 2024

Weizhe Yuan and Richard Yuanzhe Pang and Kyunghyun Cho and Sainbayar Sukhbaatar and Jing Xu and Jason Weston - Theoretical guarantees on the best-of-n alignment policy: 2024

Beirami, Ahmad and Agarwal, Alekh and Berant, Jonathan... 1 hidden ... Eisenstein, Jacob and Nagpal, Chirag and Suresh, Ananda Theertha - KTO: Model Alignment as Prospect Theoretic Optimization: 2024

Ethayarajh, Kawin and Xu, Winnie and Muennighoff, Niklas and Jurafsky, Dan and Kiela, Douwe

- Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks: 2019

Reimers, Nils and Gurevych, Iryna

- Language Models are Few-Shot Learners: 2020

Brown, Tom and Mann, Benjamin and Ryder, Nick... 25 hidden ... Radford, Alec and Sutskever, Ilya and Amodei, Dario

- True Few-Shot Learning with Language Models: 2021

Ethan Perez and Douwe Kiela and Kyunghyun Cho

- Active Example Selection for In-Context Learning: 2022

Zhang, Yiming and Feng, Shi and Tan, Chenhao

- Careful Data Curation Stabilizes In-context Learning: 2022

Chang, Ting-Yun and Jia, Robin

- Learning To Retrieve Prompts for In-Context Learning: 2022

Rubin, Ohad and Herzig, Jonathan and Berant, Jonathan

- Fantastically Ordered Prompts and Where to Find Them: Overcoming Few-Shot Prompt Order Sensitivity: 2022

Lu, Yao and Bartolo, Max and Moore, Alastair and Riedel, Sebastian and Stenetorp, Pontus

- What Makes Good In-Context Examples for GPT-3?: 2022

Liu, Jiachang and Shen, Dinghan and Zhang, Yizhe and Dolan, Bill and Carin, Lawrence and Chen, Weizhu

- MetaICL: Learning to Learn In Context: 2022

Min, Sewon and Lewis, Mike and Zettlemoyer, Luke and Hajishirzi, Hannaneh

- Unified Demonstration Retriever for In-Context Learning: 2023

Li, Xiaonan and Lv, Kai and Yan, Hang... 3 hidden ... Xie, Guotong and Wang, Xiaoling and Qiu, Xipeng

- Which Examples to Annotate for In-Context Learning? Towards Effective and Efficient Selection: 2023

Mavromatis, Costas and Srinivasan, Balasubramaniam and Shen, Zhengyuan... 1 hidden ... Rangwala, Huzefa and Faloutsos, Christos and Karypis, George

- Large Language Models Are Latent Variable Models: Explaining and Finding Good Demonstrations for In-Context Learning: 2023

Xinyi Wang and Wanrong Zhu and Michael Saxon and Mark Steyvers and William Yang Wang

- Selective Annotation Makes Language Models Better Few-Shot Learners: 2023

Hongjin SU and Jungo Kasai and Chen Henry Wu... 5 hidden ... Luke Zettlemoyer and Noah A. Smith and Tao Yu

- In-context Example Selection with Influences: 2023

Nguyen, Tai and Wong, Eric

- Coverage-based Example Selection for In-Context Learning: 2023

Gupta, Shivanshu and Singh, Sameer and Gardner, Matt

- Compositional exemplars for in-context learning: 2023

Ye, Jiacheng and Wu, Zhiyong and Feng, Jiangtao and Yu, Tao and Kong, Lingpeng

- Take one step at a time to know incremental utility of demonstration: An analysis on reranking for few-shot in-context learning: 2023

Hashimoto, Kazuma and Raman, Karthik and Bendersky, Michael

- Ambiguity-aware in-context learning with large language models: 2023

Gao, Lingyu and Chaudhary, Aditi and Srinivasan, Krishna and Hashimoto, Kazuma and Raman, Karthik and Bendersky, Michael

- IDEAL: Influence-Driven Selective Annotations Empower In-Context Learners in Large Language Models: 2023

Zhang, Shaokun and Xia, Xiaobo and Wang, Zhaoqing... 1 hidden ... Liu, Jiale and Wu, Qingyun and Liu, Tongliang

- ScatterShot: Interactive In-context Example Curation for Text Transformation: 2023

Wu, Sherry and Shen, Hua and Weld, Daniel S and Heer, Jeffrey and Ribeiro, Marco Tulio

- Diverse Demonstrations Improve In-context Compositional Generalization: 2023

Levy, Itay and Bogin, Ben and Berant, Jonathan

- Finding supporting examples for in-context learning: 2023

Li, Xiaonan and Qiu, Xipeng

- Misconfidence-based Demonstration Selection for LLM In-Context Learning: 2024

Xu, Shangqing and Zhang, Chao

- In-context Learning with Retrieved Demonstrations for Language Models: A Survey: 2024

Xu, Xin and Liu, Yue and Pasupat, Panupong and Kazemi, Mehran and others

- A large annotated corpus for learning natural language inference: 2015

Bowman, Samuel R. and Angeli, Gabor and Potts, Christopher and Manning, Christopher D.

- GLUE: A Multi-Task Benchmark and Analysis Platform for Natural Language Understanding: 2018

Wang, Alex and Singh, Amanpreet and Michael, Julian and Hill, Felix and Levy, Omer and Bowman, Samuel

- A Broad-Coverage Challenge Corpus for Sentence Understanding through Inference: 2018

Williams, Adina and Nangia, Nikita and Bowman, Samuel

- Sentence Encoders on STILTs: Supplementary Training on Intermediate Labeled-data Tasks: 2019

Jason Phang and Thibault Févry and Samuel R. Bowman

- Distributionally Robust Neural Networks: 2020

Shiori Sagawa and Pang Wei Koh and Tatsunori B. Hashimoto and Percy Liang

- Dataset Cartography: Mapping and Diagnosing Datasets with Training Dynamics: 2020

Swayamdipta, Swabha and Schwartz, Roy and Lourie, Nicholas... 1 hidden ... Hajishirzi, Hannaneh and Smith, Noah A. and Choi, Yejin

- Intermediate-Task Transfer Learning with Pretrained Language Models: When and Why Does It Work?: 2020

Pruksachatkun, Yada and Phang, Jason and Liu, Haokun... 3 hidden ... Vania, Clara and Kann, Katharina and Bowman, Samuel R.

- On the Complementarity of Data Selection and Fine Tuning for Domain Adaptation: 2021

Dan Iter and David Grangier

- FETA: A Benchmark for Few-Sample Task Transfer in Open-Domain Dialogue: 2022

Albalak, Alon and Tuan, Yi-Lin and Jandaghi, Pegah... 3 hidden ... Getoor, Lise and Pujara, Jay and Wang, William Yang

- LoRA: Low-Rank Adaptation of Large Language Models: 2022

Edward J Hu and Yelong Shen and Phillip Wallis... 2 hidden ... Shean Wang and Lu Wang and Weizhu Chen

- Training Subset Selection for Weak Supervision: 2022

Lang, Hunter and Vijayaraghavan, Aravindan and Sontag, David

- On-Demand Sampling: Learning Optimally from Multiple Distributions: 2022

Haghtalab, Nika and Jordan, Michael and Zhao, Eric

- The Trade-offs of Domain Adaptation for Neural Language Models: 2022

Grangier, David and Iter, Dan

- Data Pruning for Efficient Model Pruning in Neural Machine Translation: 2023

Azeemi, Abdul and Qazi, Ihsan and Raza, Agha

- Skill-it! A Data-Driven Skills Framework for Understanding and Training Language Models: 2023

Mayee F. Chen and Nicholas Roberts and Kush Bhatia... 1 hidden ... Ce Zhang and Frederic Sala and Christopher Ré

- D2 Pruning: Message Passing for Balancing Diversity and Difficulty in Data Pruning: 2023

Adyasha Maharana and Prateek Yadav and Mohit Bansal

- Improving Few-Shot Generalization by Exploring and Exploiting Auxiliary Data: 2023

Alon Albalak and Colin Raffel and William Yang Wang

- Efficient Online Data Mixing For Language Model Pre-Training: 2023

Alon Albalak and Liangming Pan and Colin Raffel and William Yang Wang

- Data-Efficient Finetuning Using Cross-Task Nearest Neighbors: 2023

Ivison, Hamish and Smith, Noah A. and Hajishirzi, Hannaneh and Dasigi, Pradeep

- Make Every Example Count: On the Stability and Utility of Self-Influence for Learning from Noisy NLP Datasets: 2023

Bejan, Irina and Sokolov, Artem and Filippova, Katja

- LESS: Selecting Influential Data for Targeted Instruction Tuning: 2024

Mengzhou Xia and Sadhika Malladi and Suchin Gururangan and Sanjeev Arora and Danqi Chen

There are likely some amazing works in the field that we missed, so please contribute to the repo.

Feel free to open a pull request with new papers or create an issue and we can add them for you. Thank you in advance for your efforts!

We hope this work serves as inspiration for many impactful future works. If you found our work useful, please cite this paper as:

@article{albalak2024survey,
    title={A Survey on Data Selection for Language Models},
    author={Alon Albalak and Yanai Elazar and Sang Michael Xie and Shayne Longpre and Nathan Lambert and Xinyi Wang and Niklas Muennighoff and Bairu Hou and Liangming Pan and Haewon Jeong and Colin Raffel and Shiyu Chang and Tatsunori Hashimoto and William Yang Wang},
    year={2024},
    journal={arXiv preprint arXiv:2402.16827},
    note={\url{https://arxiv.org/abs/2402.16827}}
}