📑 Paper | 🤗 Leaderboard & 🤗 Dataset

```bash
HF_MODEL_ID="Magpie-Align/Llama-3-8B-Magpie-Align-v0.1" # example model id
MODEL_PRETTY_NAME="Llama-3-8B-Magpie-Align-v0.1" # example model name
NUM_GPUS=4 # depending on your hardware

# do inference on WildBench
bash scripts/_common_vllm.sh $HF_MODEL_ID $MODEL_PRETTY_NAME $NUM_GPUS

# submit to OpenAI for eval (WB-Score)
bash evaluation/run_score_eval_batch.sh ${MODEL_PRETTY_NAME}

# check the batch job status
python src/openai_batch_eval/check_batch_status_with_model_name.py ${MODEL_PRETTY_NAME}

# show the table
bash leaderboard/show_eval.sh score_only
```

Note

If your model is on Hugging Face and is supported by vLLM, please add the chat template to the tokenizer config and follow the Shortcut below. If your model is not supported by vLLM, you can still create an Issue and let us know how to run your model.


```bash
conda create -n zeroeval python=3.10
conda activate zeroeval
# pip install vllm -U # pip install -e vllm
pip install vllm==0.5.1
pip install -r requirements.txt
```

Shortcut

```bash
bash scripts/_common_vllm.sh [hf_model_id] [model_pretty_name] [num_gpus]
# bash scripts/_common_vllm.sh m-a-p/neo_7b_instruct_v0.1 neo_7b_instruct_v0.1 4 # example
# 1st arg is hf_name; 2nd is the pretty name; 3rd is the number of shards (gpus)
```

Case 1: Models supported by vLLM

You can take the files under `scripts` as a reference for adding a new model to the benchmark. For example, to add `Yi-1.5-9B-Chat.sh` to the benchmark, follow these steps:

1. Create a script named `Yi-1.5-9B-Chat.sh` under the `scripts` folder.
2. Copy and paste the contents of the most similar existing script into it; the file name should be `[model_pretty_name].sh`.
3. Change `model_name` and `model_pretty_name` to `01-ai/Yi-1.5-9B-Chat` and `Yi-1.5-9B-Chat`, respectively. Make sure that `model_name` is the same as the model name on the Hugging Face model hub, and that `model_pretty_name` is the same as the script name without the `.sh` extension.
4. Specify the conversation template for this model by modifying the code in `src/fastchat_conversation.py`, or set the `--use_hf_conv_template` argument if your Hugging Face model contains a conversation template in its tokenizer config.
5. Run your script to make sure it works. You can run it with `bash scripts/Yi-1.5-9B-Chat.sh` from the root folder.
6. Create a PR to add your script to the benchmark.

For Steps 3-5, you can also use the shortcut command above if your model is supported by vLLM and has a conversation template in its Hugging Face tokenizer config.
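The Remarks below state that `${MODEL_PRETTY_NAME}` must equal the script name without the `.sh` extension, and the examples in this README use the part of the Hugging Face id after the last `/` as the pretty name. The sketch below checks both conventions before you run a new script. The helper names `default_pretty_name` and `script_name_matches` are hypothetical and are not part of the repository; the "last path segment" rule is only the pattern the examples follow, not something the scripts enforce.

```python
from pathlib import Path


def default_pretty_name(hf_model_id: str) -> str:
    # Hypothetical convention: keep only the part after the last "/"
    # e.g. "01-ai/Yi-1.5-9B-Chat" -> "Yi-1.5-9B-Chat"
    return hf_model_id.rsplit("/", 1)[-1]


def script_name_matches(script_path: str, model_pretty_name: str) -> bool:
    # The script must be a .sh file whose name (minus ".sh") equals model_pretty_name
    path = Path(script_path)
    return path.suffix == ".sh" and path.stem == model_pretty_name


if __name__ == "__main__":
    hf_id = "01-ai/Yi-1.5-9B-Chat"
    pretty = default_pretty_name(hf_id)
    print(pretty)  # Yi-1.5-9B-Chat
    print(script_name_matches(f"scripts/{pretty}.sh", pretty))  # True
    print(script_name_matches(f"scripts/{pretty}.sh.py", pretty))  # False
```

A mismatch here is worth fixing before submitting eval jobs, since the later commands look results up by `${MODEL_PRETTY_NAME}`.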

Case 2: Models that are only supported by native HuggingFace API

Some new models may not be supported by vLLM yet. You can follow the same steps as above but use `--engine hf` in the script instead, and then test your script. Note that some models may need more specific configurations; you will need to read the code and modify it accordingly. In these cases, add name-checking conditions so that the model-specific changes are only applied to that model.
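A name-checking condition can be as simple as the sketch below: shared defaults for every model, plus an override that only fires for one model family. The function `model_load_kwargs` and the model id `my-org/special-model` are hypothetical placeholders, not code from the repository; adapt the idea to wherever the `hf` engine builds its model options.

```python
def model_load_kwargs(model_name: str) -> dict:
    # Options applied to every model (illustrative default, not taken from the repo)
    kwargs = {"trust_remote_code": False}
    # Name check: only the hypothetical "my-org/special-model" family gets the
    # extra option, so this change cannot affect how any other model is loaded.
    if model_name.startswith("my-org/special-model"):
        kwargs["trust_remote_code"] = True
    return kwargs


if __name__ == "__main__":
    print(model_load_kwargs("my-org/special-model-7b"))  # {'trust_remote_code': True}
    print(model_load_kwargs("meta-llama/Meta-Llama-3-8B-Instruct"))  # {'trust_remote_code': False}
```

With Hugging Face `transformers`, such a dict could be passed as `AutoModelForCausalLM.from_pretrained(model_name, **kwargs)`, since `trust_remote_code` is an accepted keyword there.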

Case 3: Private API-based Models

You will need to change the code to add these APIs, for example Gemini, Cohere, Claude, and Reka. You can refer to the `--engine openai` logic in the existing scripts when adding your own API-based models. Please make sure that you do not expose your API keys in the code. If your model is on the Together.AI platform, you can use the `--engine together` option to run it; see `scripts/dbrx-instruct@together.sh` for an example.
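One common way to keep API keys out of the code is to read them from environment variables at runtime, as in the sketch below. The helper `get_api_key` and the variable name `MY_PROVIDER_API_KEY` are hypothetical; check which variable your provider's client library expects (the official `openai` Python client, for instance, reads `OPENAI_API_KEY` by default).

```python
import os


def get_api_key(env_var: str) -> str:
    # Read the key from the environment so it never appears in the source code
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable before running")
    return key


if __name__ == "__main__":
    # e.g. run `export MY_PROVIDER_API_KEY=...` in your shell first
    api_key = get_api_key("MY_PROVIDER_API_KEY")
```

Failing loudly when the variable is missing is deliberate: it is easier to debug than a silent request with an empty key.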

Note

If you'd like to have your model results verified and published on our leaderboard, please create an Issue to let us know, and we'll do the inference and evaluation for you.


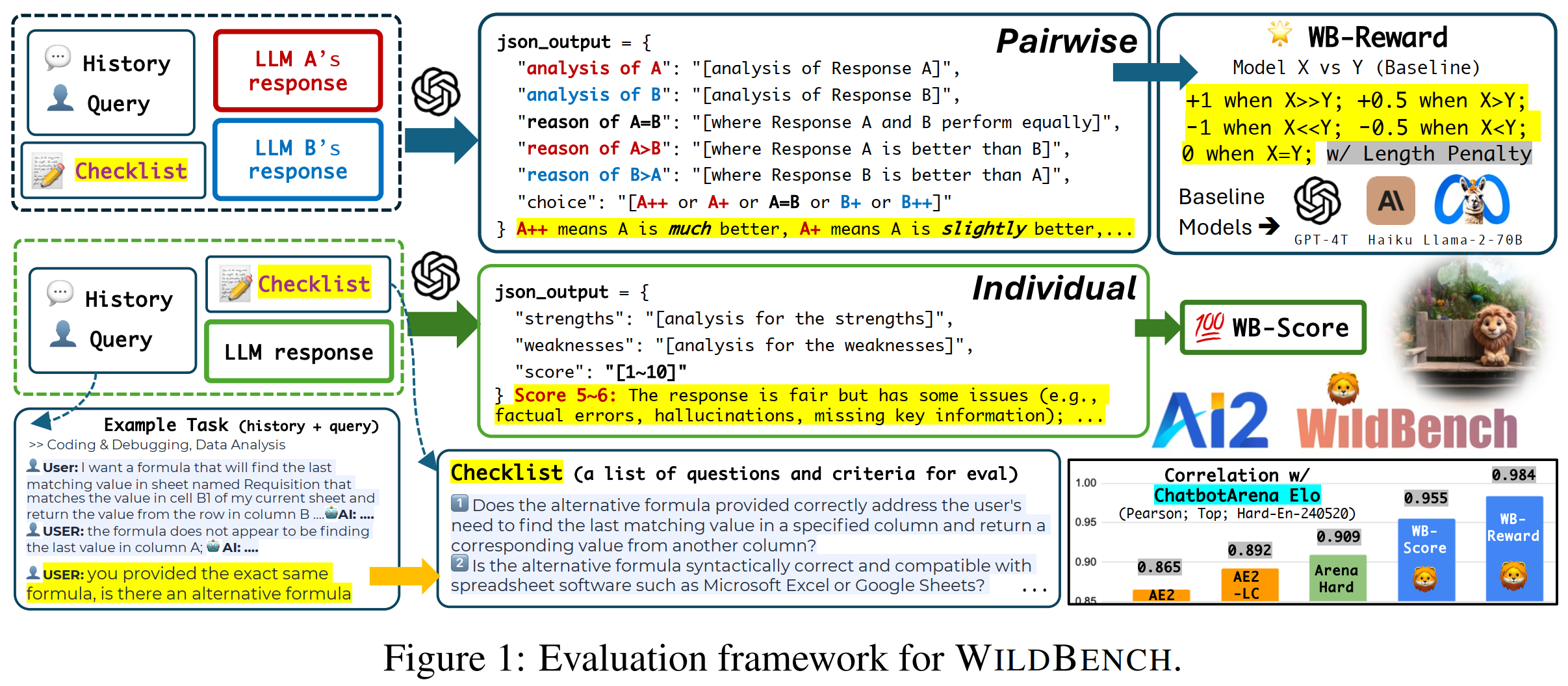

How do you evaluate the performance of LLMs on WildBench? (V2 Updates)

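For pairwise evaluation (WB-Reward), each comparison between the response of model A and that of model B is converted into a reward:

- Reward=100 if A is much better than B.

- Reward=50 if A is slightly better than B.

- Reward=0 if there is a tie.

- Reward=-50 if A is slightly worse than B.

- Reward=-100 if A is much worse than B.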
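The reward scale above can be sketched as a lookup table plus an average over all comparisons for a model. The judgment labels below are hypothetical stand-ins (the evaluation code may use different strings), and taking the plain mean over comparisons is an assumption about how per-example rewards are aggregated.

```python
# Reward for each pairwise judgment of A vs. B, following the scale listed above.
# The judgment labels are hypothetical; the repo's own labels may differ.
REWARD = {
    "A much better": 100,
    "A slightly better": 50,
    "tie": 0,
    "A slightly worse": -50,
    "A much worse": -100,
}


def average_reward(judgments: list[str]) -> float:
    # Mean reward of model A over all of its pairwise comparisons
    if not judgments:
        raise ValueError("need at least one judgment")
    return sum(REWARD[j] for j in judgments) / len(judgments)


if __name__ == "__main__":
    toy = ["A much better", "tie", "A slightly worse", "A slightly better"]
    print(average_reward(toy))  # 25.0
```

Because the scale is symmetric, a model that wins and loses equally often against B ends up near 0.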

We suggest using OpenAI's Batch Mode for evaluation, which is faster, cheaper, and more reliable.

You can:

1. Run `bash evaluation/run_all_eval_batch.sh ${MODEL_PRETTY_NAME}` to submit the eval jobs. Or, if you only want to do scoring, run `bash evaluation/run_score_eval_batch.sh ${MODEL_PRETTY_NAME}` to submit only the WB-Score eval jobs (about $5 per model).

2. Run `python src/openai_batch_eval/check_batch_status_with_model_name.py ${MODEL_PRETTY_NAME}` to track the status of the batch jobs.

3. Step 2 will download the results when the batch jobs are finished, and then you can view the results (see next section).
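If you want to wait for a batch programmatically instead of re-running the status check by hand, a polling loop like the sketch below is one option. `wait_for_batch` is a hypothetical helper, not part of this repository, and it only waits: it does not download results the way the status-check script does. The terminal statuses are those documented for the OpenAI Batch API.

```python
import time
from typing import Callable

# Statuses after which an OpenAI batch will not change any more
TERMINAL_STATUSES = {"completed", "failed", "expired", "cancelled"}


def wait_for_batch(
    get_status: Callable[[], str],
    poll_seconds: float = 60.0,
    timeout_seconds: float = 24 * 3600,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    # Poll get_status() until the batch reaches a terminal status or time runs out
    waited = 0.0
    while True:
        status = get_status()
        if status in TERMINAL_STATUSES:
            return status
        if waited >= timeout_seconds:
            raise TimeoutError(f"batch still {status!r} after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds
```

With the official `openai` Python client, `get_status` could be `lambda: client.batches.retrieve(batch_id).status`, where `batch_id` is the id of one submitted batch. Injecting `get_status` and `sleep` keeps the loop testable without network access.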

Remarks

- `${MODEL_PRETTY_NAME}` should be the same as the script name without the `.sh` extension.

- You can also track the progress of your batch jobs here: https://platform.openai.com/batches. The maximum turnaround time is 24 hours, but it is usually much faster depending on the queue and rate limits.

- If you'd like to have more control over the evaluation methods, the detailed steps are described in EVAL.md.

Once the batch results have been downloaded (Step 3 in the section above), you can view them by running the following commands:

```bash
bash leaderboard/show_eval.sh # run all and show the main leaderboard
python leaderboard/show_table.py --mode main # (optional) to show the main leaderboard w/o recomputing
```

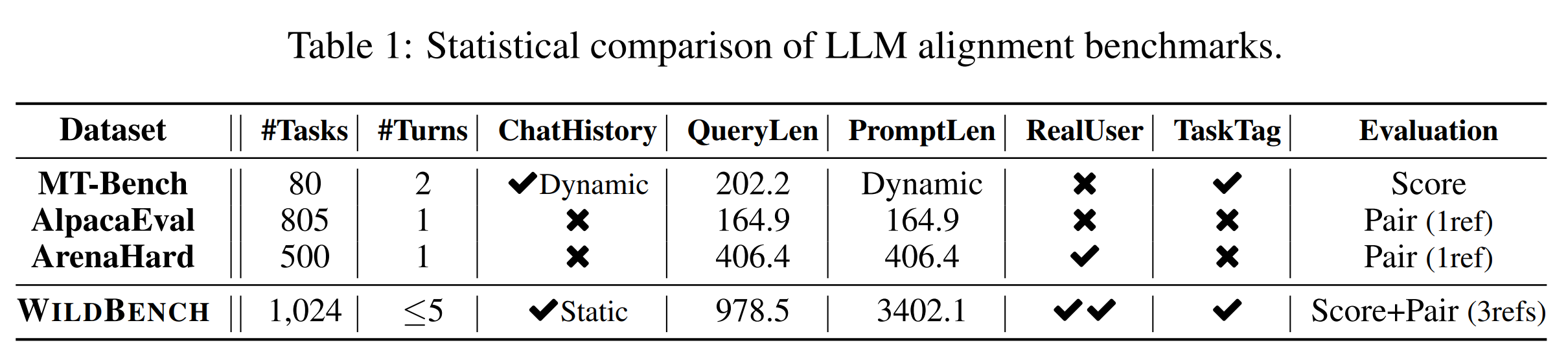

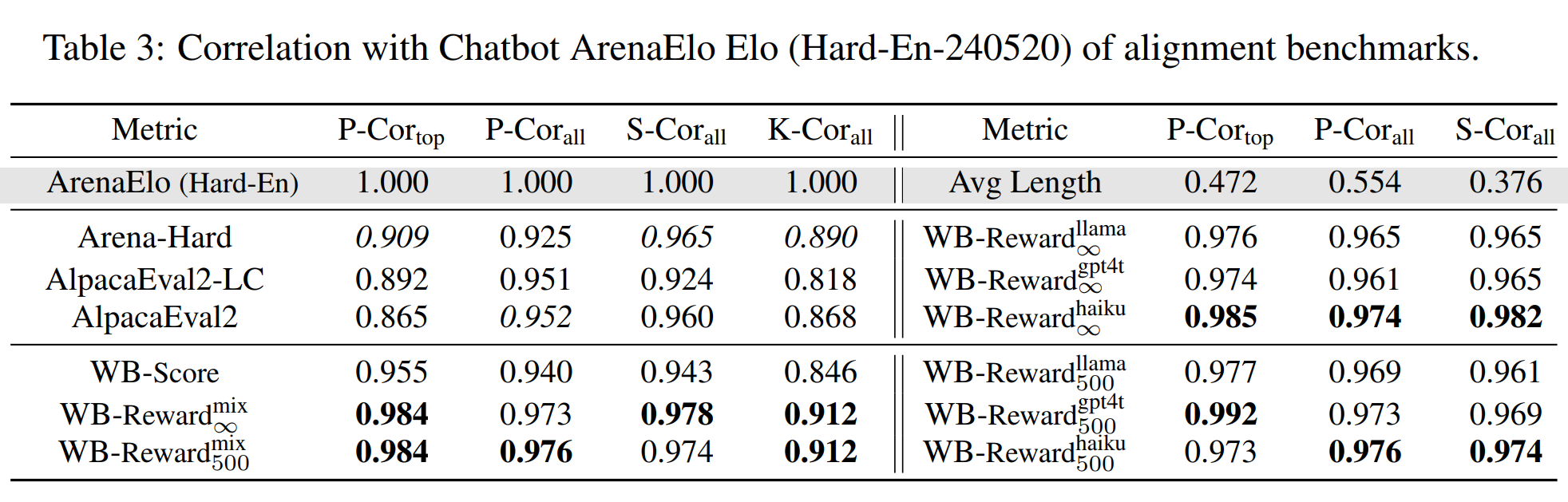

```bash
python leaderboard/show_table.py --mode taskwise_score # (optional) to show the task-wise scores
```

To analyze the correlation between WildBench (v2) and human evaluation, we compute the correlation between different metrics and the human-based Chatbot Arena Elo scores (as of 2024-05-20, on the Hard-English split). We find that WB Reward-Mix has the highest correlation. Please find the Pearson correlation coefficients below:

- Top Models: ['gpt-4-turbo-2024-04-09', 'claude-3-opus-20240229', 'Meta-Llama-3-70B-Instruct', 'claude-3-sonnet-20240229', 'mistral-large-2402', 'Meta-Llama-3-8B-Instruct']

- All Models: ['gpt-4-turbo-2024-04-09', 'claude-3-opus-20240229', 'Meta-Llama-3-70B-Instruct', 'Qwen1.5-72B-Chat', 'claude-3-sonnet-20240229', 'mistral-large-2402', 'dbrx-instruct@together', 'Mixtral-8x7B-Instruct-v0.1', 'Meta-Llama-3-8B-Instruct', 'tulu-2-dpo-70b', 'Llama-2-70b-chat-hf', 'Llama-2-7b-chat-hf', 'gemma-7b-it', 'gemma-2b-it']

- openchat/openchat-3.6-8b-20240522

- gemma-2

- SimPO-v0.2

- Qwen2-7B-Chat

- LLM360/K2-Chat

- DeepSeek-V2-Code

- Yi-large-preview

- THUDM/glm-4-9b-chat

- chujiezheng/neo_7b_instruct_v0.1-ExPO

- ZhangShenao/SELM-Llama-3-8B-Instruct-iter-3

- m-a-p/neo_7b_instruct_v0.1

- Reka Flash

- DeepSeekV2-Chat

- Reka Core

- Yi-Large (via OpenAI-like APIs)

- chujiezheng/Llama-3-Instruct-8B-SimPO-ExPO

- chujiezheng/Starling-LM-7B-beta-ExPO

- Gemini 1.5 series

- Qwen2-72B-Instruct

- ZhangShenao/SELM-Zephyr-7B-iter-3

- NousResearch/Hermes-2-Theta-Llama-3-8B

- princeton-nlp/Llama-3-Instruct-8B-SimPO

- Command-R-plus

- Phi-3 series

Create an Issue if you'd like to see a model added to our leaderboard!

- Support models via OpenAI-style APIs

- Show task-categorized results

```bibtex
@misc{lin2024wildbench,
  title={WildBench: Benchmarking LLMs with Challenging Tasks from Real Users in the Wild},
  author={Bill Yuchen Lin and Yuntian Deng and Khyathi Chandu and Faeze Brahman and Abhilasha Ravichander and Valentina Pyatkin and Nouha Dziri and Ronan Le Bras and Yejin Choi},
  year={2024},
  eprint={2406.04770},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  url={https://arxiv.org/abs/2406.04770}
}
```