This repository contains a demo of using Files in Repos functionality with Databricks Delta Live Tables (DLT) to perform unit & integration testing of DLT pipelines.

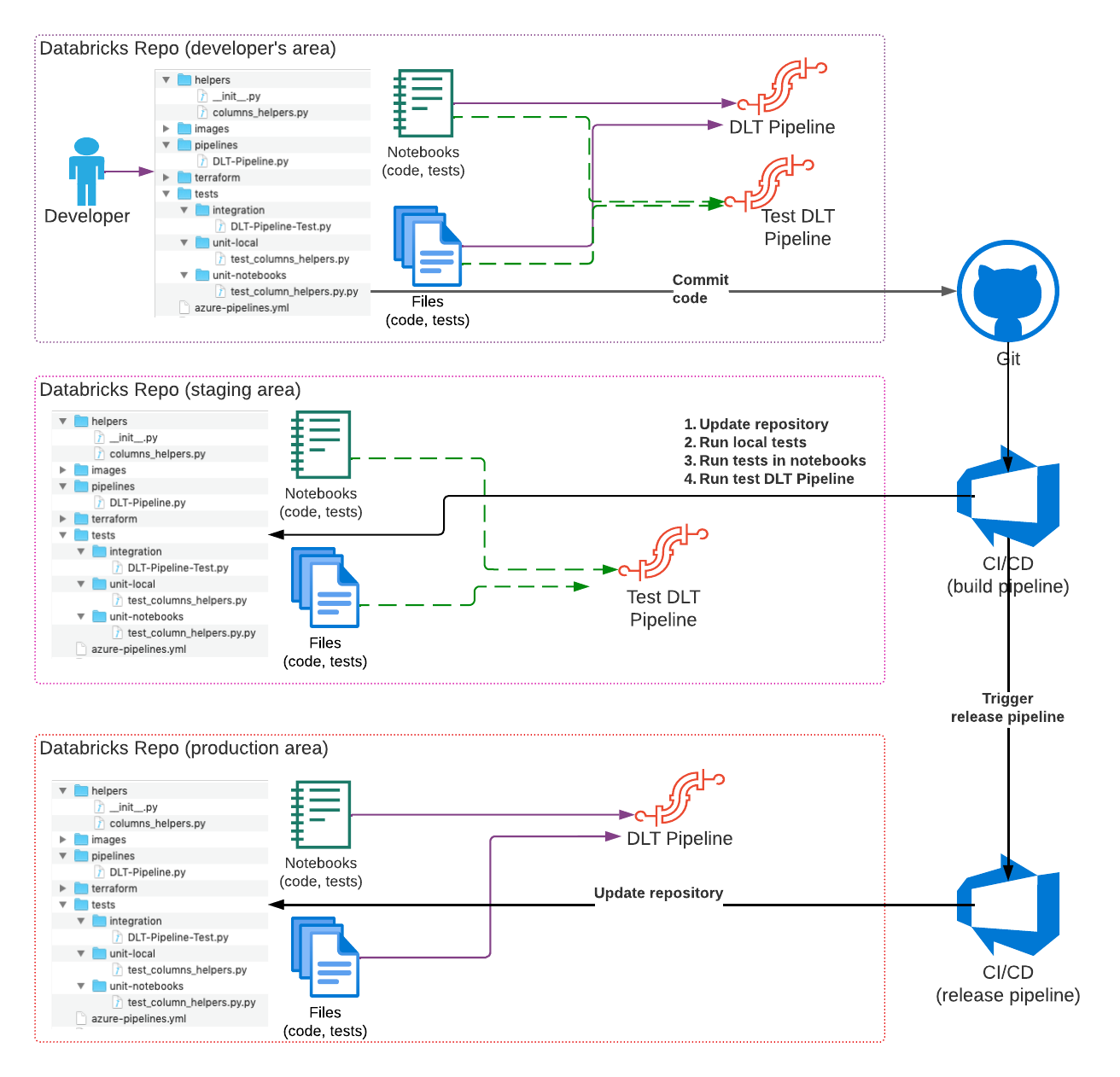

The development workflow is organized as on following image:

A more detailed description is available in the blog post *Applying software development & DevOps best practices to Delta Live Table pipelines*.

🚧 Work in progress...

There are two ways of setting up everything:

- using Terraform - it's the easiest way of getting everything configured in a short time. Just follow the instructions in the `terraform/azuredevops/` folder.

  ⚠️ This doesn't include creation of the release pipeline, as there is no REST API or Terraform resource for it.

- manually - follow the instructions below to create all necessary objects.

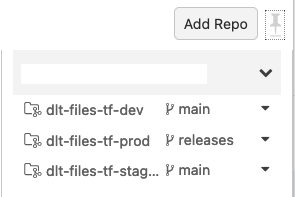

In this example we're using three checkouts of our sample repository:

- Development: used for actual development of new code, running tests before committing the code, etc.

- Staging: used to run tests on commits to branches and/or pull requests. This checkout is updated to the branch to which the commit happened. We're using a single checkout just for simplicity, but in real life such checkouts would need to be created automatically to allow multiple tests to run in parallel.

- Production: used to keep the production code. This checkout is always on the `releases` branch and is updated only when a commit happens to that branch and all tests have passed.
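Switching a checkout to a given branch, as the Staging and Production checkouts require, can be done through the Databricks Repos REST API (`PATCH /api/2.0/repos/{repo_id}`). The following is a minimal sketch that only builds the request and does not send it. The workspace URL, repo ID, and token are placeholder assumptions, and `build_repo_update_request` is a hypothetical helper, not something shipped in this repository.

```python
import json
import urllib.request


def build_repo_update_request(host, repo_id, branch, token):
    """Build (but do not send) a request that switches a repo checkout to `branch`."""
    url = f"{host.rstrip('/')}/api/2.0/repos/{repo_id}"
    body = json.dumps({"branch": branch}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PATCH",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


# Placeholder values, for illustration only (all of them are assumptions).
request = build_repo_update_request(
    host="https://example-workspace.azuredatabricks.net",
    repo_id=123,
    branch="releases",
    token="<personal-access-token>",
)
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request)
```

In a CI/CD run, the Staging checkout would receive the branch of the commit being tested, while the Production checkout would always receive `releases`.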

Here is an example of repos created with Terraform:

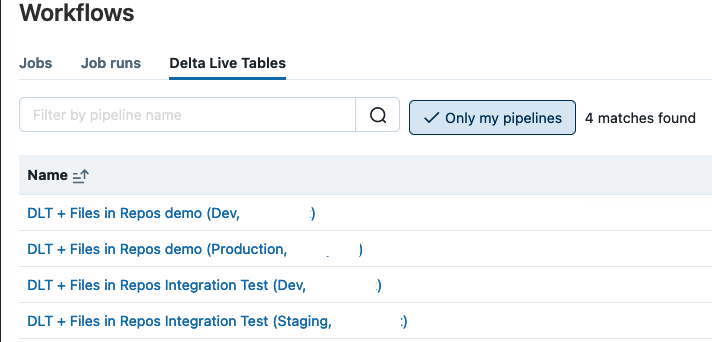

We need to create a few DLT pipelines for our work:

- for the main code that is used for development - use only the `pipelines/DLT-Pipeline.py` notebook from the development repository.

- (optional) for the integration test that could be run as part of development - from the development repository, use the main code notebook (`pipelines/DLT-Pipeline.py`) together with the integration test notebook (`tests/integration/DLT-Pipeline-Test.py`).

- for the integration test running as part of the CI/CD pipeline - similar to the previous item, but use the staging repository.

- for the production pipeline - use only the `pipelines/DLT-Pipeline.py` notebook from the production repository.
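The pipelines above differ only in which repository checkout they read from and whether the integration test notebook is included. A minimal sketch of the corresponding `libraries` section of the DLT pipeline settings follows. The `/Repos/...` locations and pipeline names are hypothetical, `pipeline_settings` is an illustrative helper, and the sketch assumes that notebooks are referenced by workspace path without the `.py` extension.

```python
import json

# Notebook locations relative to the repository root (from the list above).
MAIN_NOTEBOOK = "pipelines/DLT-Pipeline"
TEST_NOTEBOOK = "tests/integration/DLT-Pipeline-Test"


def pipeline_settings(name, repo_path, with_integration_test=False):
    """Return a partial DLT pipeline settings dict for one repository checkout."""
    notebooks = [MAIN_NOTEBOOK]
    if with_integration_test:
        notebooks.append(TEST_NOTEBOOK)
    return {
        "name": name,
        "libraries": [
            {"notebook": {"path": f"{repo_path}/{nb}"}} for nb in notebooks
        ],
    }


# Hypothetical checkout paths and pipeline names, for illustration only.
all_pipelines = [
    pipeline_settings("DLT Dev", "/Repos/Development/dlt-demo"),
    pipeline_settings("DLT Dev Integration Test", "/Repos/Development/dlt-demo", True),
    pipeline_settings("DLT CI Integration Test", "/Repos/Staging/dlt-demo", True),
    pipeline_settings("DLT Production", "/Repos/Production/dlt-demo"),
]
print(json.dumps(all_pipelines, indent=2))
```

Real pipeline settings also need other fields, such as the target and cluster configuration, which are omitted here.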

Here is an example of pipelines created with Terraform:

If you decide to run the notebooks with tests located in the `tests/unit-notebooks` directory, you will need to create a Databricks cluster that will be used by the Nutter library. To speed up tests, attach the `nutter` & `chispa` libraries to the created cluster.

If you don't want to run these tests, comment out the block with displayName "Execute Nutter tests" in `azure-pipelines.yml`.

🚧 Work in progress...

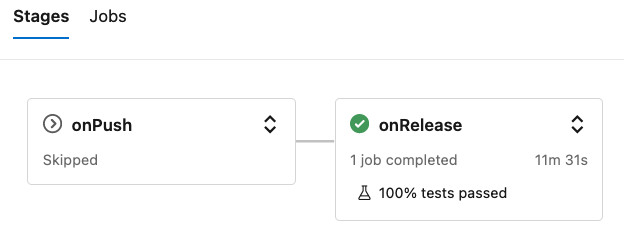

The ADO build pipeline consists of two stages:

- `onPush` is executed on push to any Git branch except the `releases` branch and version tags. This stage only runs & reports unit test results (both local & notebooks).

- `onRelease` is executed only on commits to the `releases` branch, and in addition to the unit tests it will execute a DLT pipeline with the integration test (see image).
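The stage selection rule can be modelled as a small function. This is only a sketch of the logic described above, not the actual conditions in `azure-pipelines.yml`. It assumes that version tags look like `refs/tags/v...` and that refs which are neither branches nor version tags trigger no stage; `stages_for_ref` is a hypothetical name.

```python
def stages_for_ref(ref):
    """Return the build stages that would run for a full Git ref name."""
    # Assumption: version tags are tags whose name starts with "v".
    if ref.startswith("refs/tags/v"):
        return []
    # Commits to the releases branch run unit tests plus the DLT integration test.
    if ref == "refs/heads/releases":
        return ["onRelease"]
    # Any other branch only runs and reports unit tests.
    if ref.startswith("refs/heads/"):
        return ["onPush"]
    # Assumption: other refs do not trigger a stage.
    return []


for ref in ("refs/heads/feature/new-table", "refs/heads/releases", "refs/tags/v0.1.0"):
    print(ref, stages_for_ref(ref))
```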

🚧 Work in progress...