Visual instruction tuning towards building large language and vision models with GPT-4 level capabilities in the biomedicine space.

[Paper]

LLaVA-Med: Training a Large Language-and-Vision Assistant for Biomedicine in One Day

Chunyuan Li*, Cliff Wong*, Sheng Zhang*, Naoto Usuyama, Haotian Liu, Jianwei Yang, Tristan Naumann, Hoifung Poon, Jianfeng Gao (*Equal Contribution)

Generated by GLIGEN using the grounded inpainting mode, with three boxes: white doctor coat, stethoscope, white doctor hat with a red cross sign.

- [June 1] 🔥 We released LLaVA-Med: Large Language and Vision Assistant for Biomedicine, a step towards building biomedical-domain large language and vision models with GPT-4 level capabilities. Check out the paper.

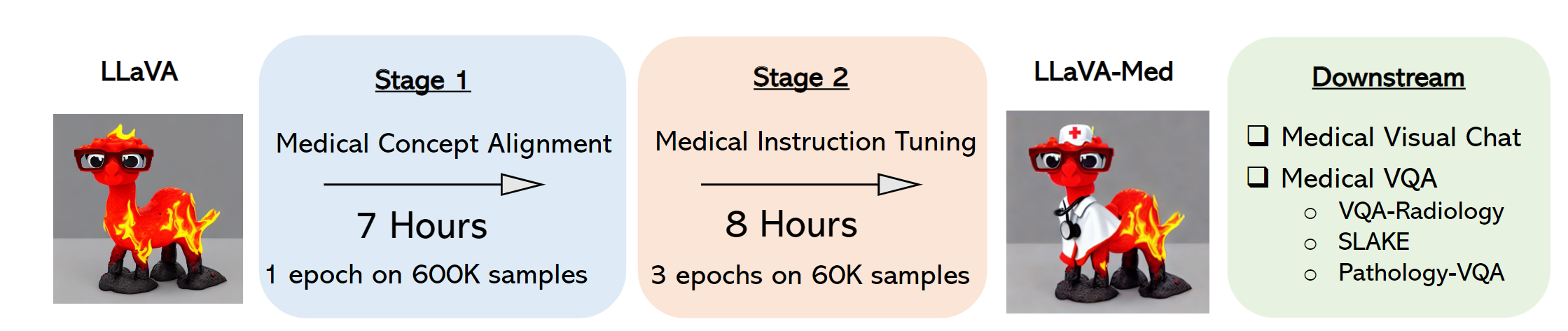

LLaVA-Med was initialized with the general-domain LLaVA and then continually trained in a curriculum learning fashion: first biomedical concept alignment, then full-blown instruction tuning. We evaluated LLaVA-Med on standard visual conversation and question answering tasks.
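The two-stage curriculum can be pictured as a simple sequential schedule in which each stage resumes from the checkpoint produced by the previous one. The sketch below is illustrative only: `Stage`, `run_curriculum`, `fake_train_stage`, the checkpoint strings, and the parameter-group names (`projector`, `language_model`) are hypothetical, and the choice of which groups are updated in each stage is an assumption not stated on this page.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass(frozen=True)
class Stage:
    """One curriculum stage (hypothetical structure, for illustration only)."""
    name: str
    trainable: frozenset  # assumed parameter groups updated in this stage


def run_curriculum(
    init_checkpoint: str,
    stages: Sequence[Stage],
    train_stage: Callable[[str, Stage], str],
) -> Tuple[str, List[Tuple[str, str]]]:
    """Run stages in order; each stage starts from the previous checkpoint."""
    checkpoint = init_checkpoint
    history = []
    for stage in stages:
        checkpoint = train_stage(checkpoint, stage)
        history.append((stage.name, checkpoint))
    return checkpoint, history


# Stage order follows the text: concept alignment first, then instruction tuning.
# The trainable groups listed here are assumptions.
STAGES = (
    Stage("concept_alignment", frozenset({"projector"})),
    Stage("instruction_tuning", frozenset({"projector", "language_model"})),
)


def fake_train_stage(checkpoint: str, stage: Stage) -> str:
    """Stand-in for a real training loop: only records which stage ran."""
    return f"{checkpoint}+{stage.name}"


final_checkpoint, history = run_curriculum("llava-general", STAGES, fake_train_stage)
```

In a real setup, `fake_train_stage` would be replaced by an actual training routine. The point of the sketch is only the ordering and the checkpoint hand-off between stages.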

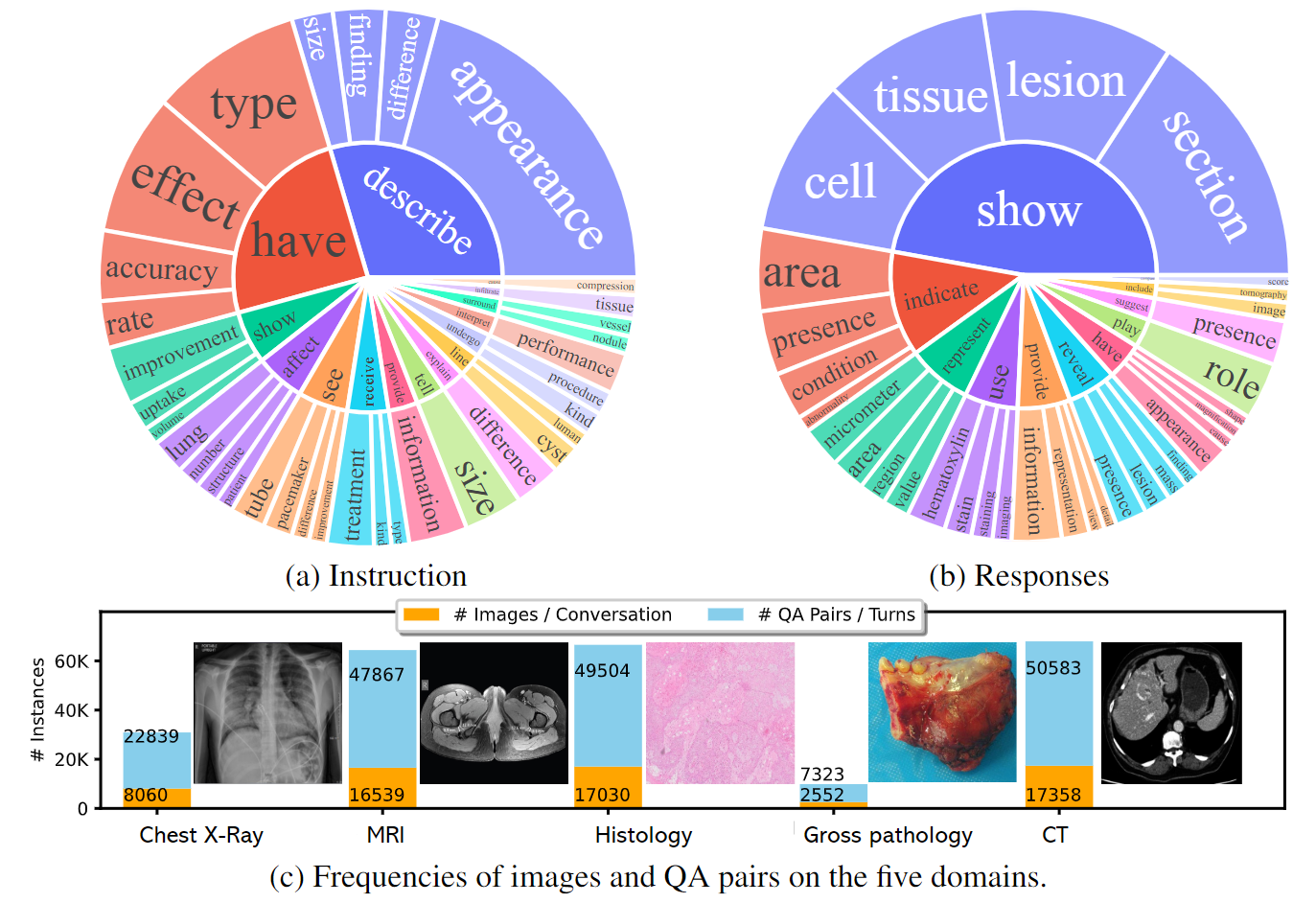

Statistics of the biomedical multimodal instruction-following data: (a, b) The root verb-noun pairs of instructions and responses, where the inner circle of each plot represents the root verb of the output response and the outer circle represents the direct nouns. (c) The distribution of images and QA pairs across the five domains; one image is shown per domain.

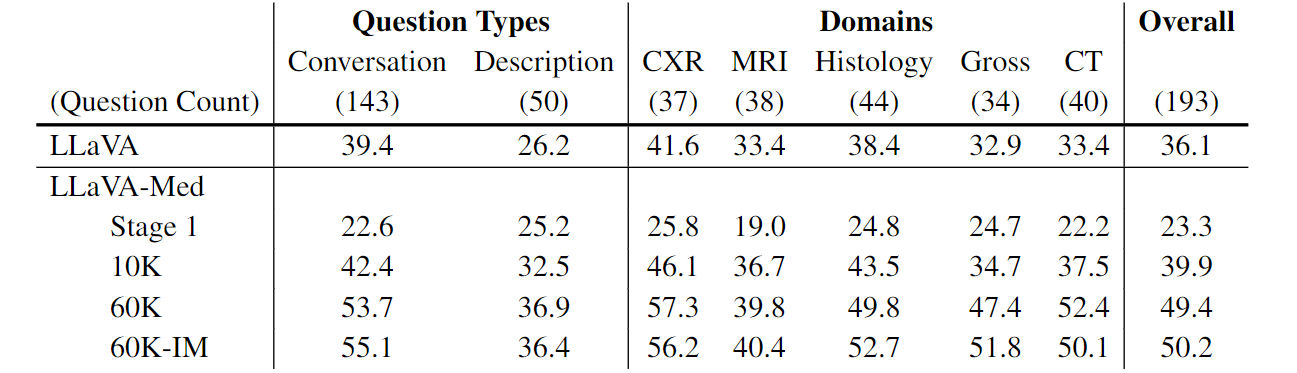

Performance comparison of multimodal chat instruction-following abilities, measured by the relative score under language-only GPT-4 evaluation.
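This page does not spell out how the relative score is computed. One plausible reading, sketched below purely as an assumption, is that a judge assigns a numeric score to each candidate answer and to the corresponding language-only GPT-4 reference answer, and the relative score is the candidate's mean score expressed as a percentage of the reference's mean score. The function name `relative_score` and the sample numbers are hypothetical.

```python
from typing import Sequence


def relative_score(
    candidate_scores: Sequence[float],
    reference_scores: Sequence[float],
) -> float:
    """Mean candidate score as a percentage of the mean reference score.

    Assumed definition for illustration; not confirmed by this page.
    """
    if not candidate_scores or len(candidate_scores) != len(reference_scores):
        raise ValueError("score lists must be non-empty and of equal length")
    candidate_mean = sum(candidate_scores) / len(candidate_scores)
    reference_mean = sum(reference_scores) / len(reference_scores)
    if reference_mean == 0:
        raise ValueError("reference mean must be non-zero")
    return 100.0 * candidate_mean / reference_mean


# Made-up judge scores for two questions (not real results).
example = relative_score([5.0, 6.0], [10.0, 10.0])
```

Under this reading, a value of 100 would mean the candidate matches the language-only GPT-4 reference on average.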

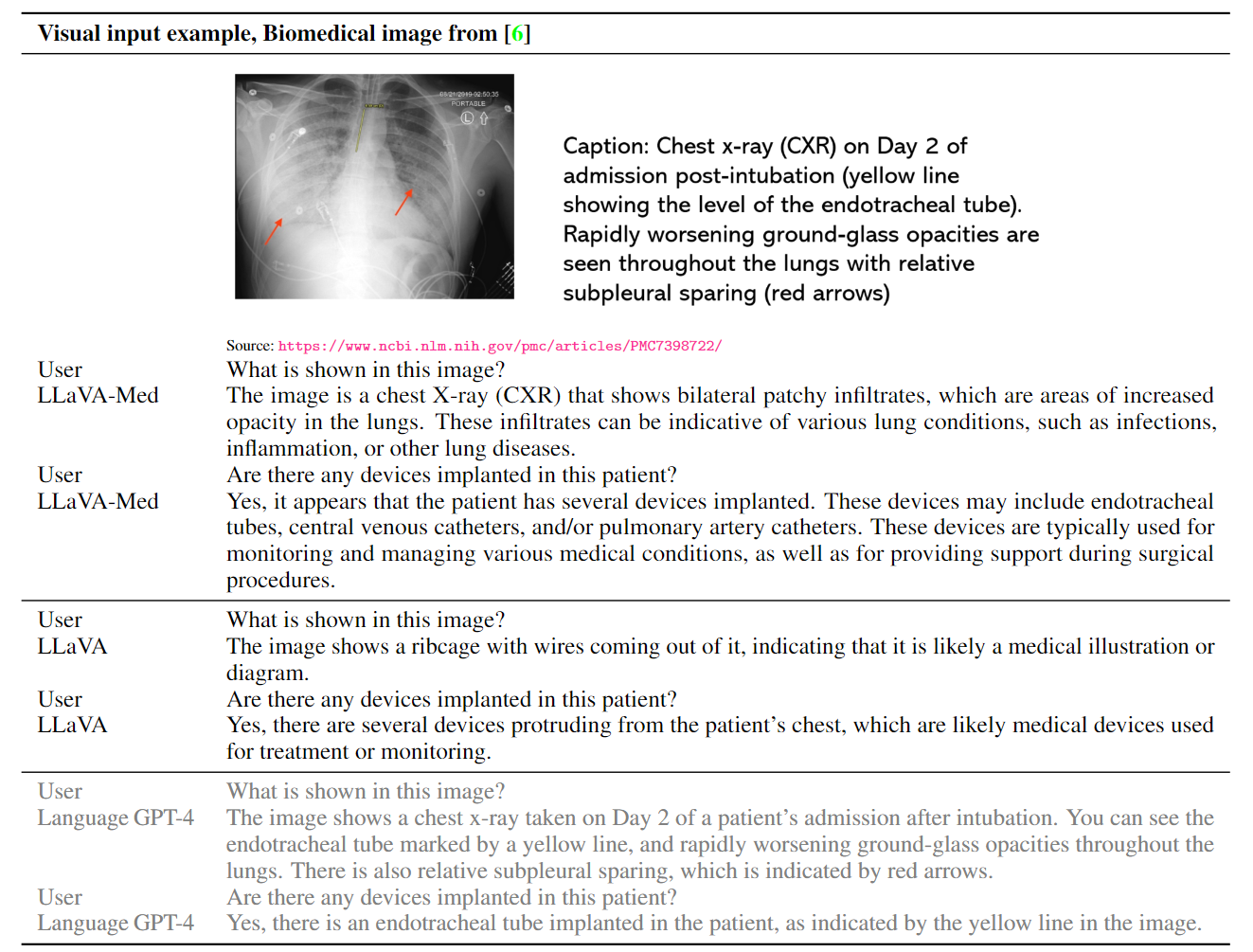

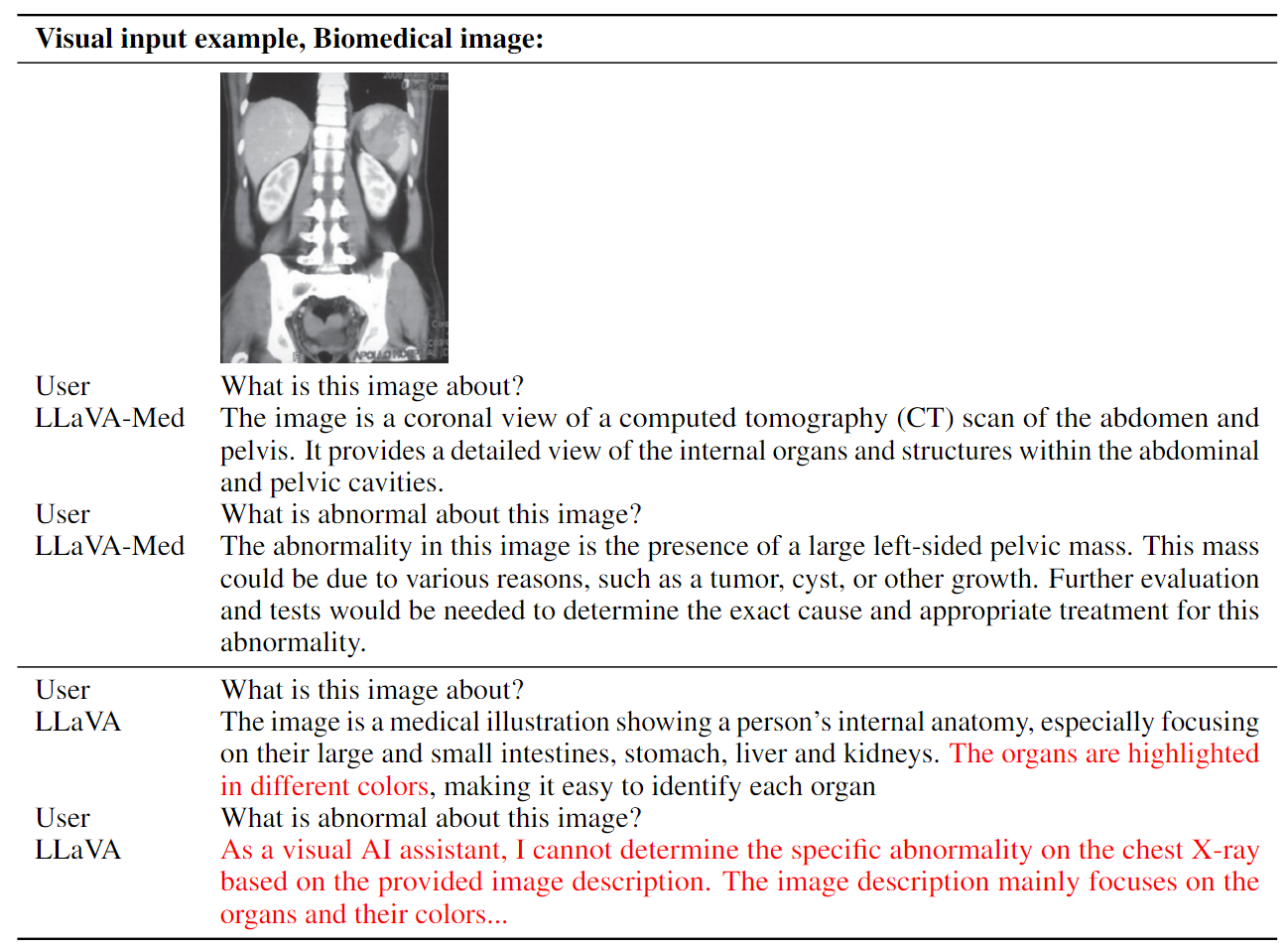

Example 1: comparison of medical visual chat. Language-only GPT-4 is treated as the performance upper bound, because the golden captions and inline mentions are fed into GPT-4 as context, so it does not need to understand the raw image.

Example 2: comparison of medical visual chat. LLaVA tends to hallucinate or refuse to provide domain-specific, knowledgeable responses.

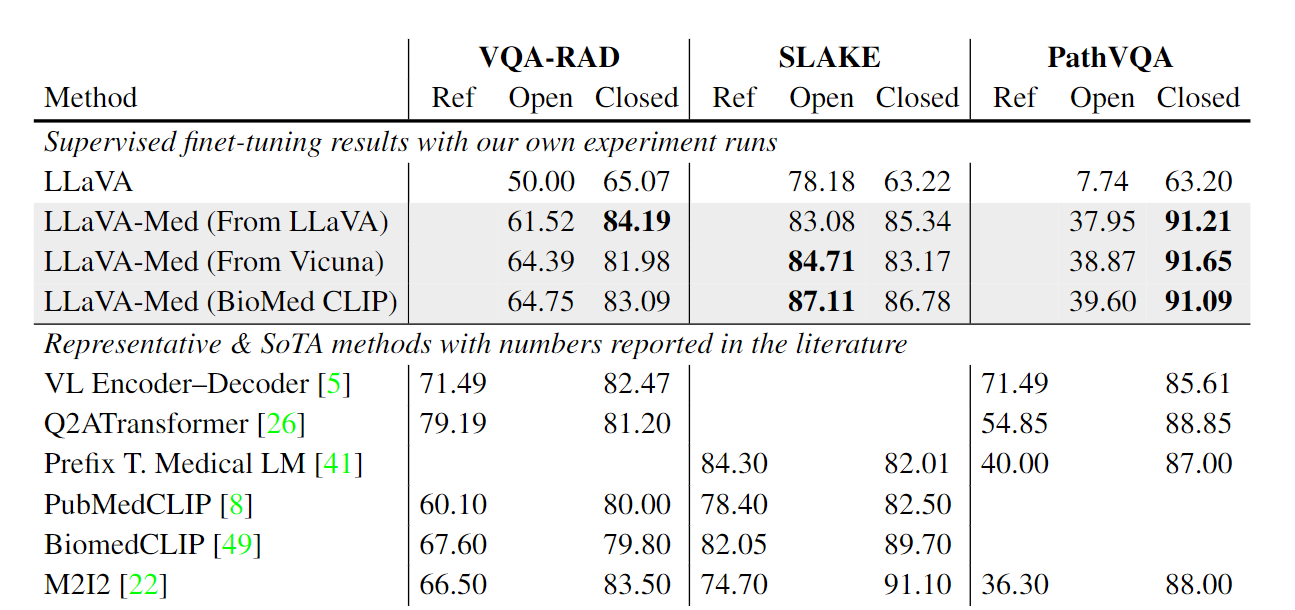

Performance comparison of fine-tuned LLaVA-Med on established medical VQA datasets.