Disentangled Representation Learning and Generative Adversarial Networks for Emotional Voice Cloning

- Given a recorded speech sample, we would like to generate new samples in which some qualitative aspects, such as the speaker's voice timbre, prosody or emotion, are altered.

- Naive application of state-of-the-art image style transfer GANs doesn't deliver good results, because these models are generally not well suited to sequential data such as speech.

- The goal is to design an adversarially trained network capable of generating high quality speech samples in our setting.

- SpeechSplit is an autoencoder neural network which decomposes speech into disentangled latent representations corresponding to four main perceptual aspects of speech: pitch, rhythm, lexical content and the speaker's voice timbre.

- The latents can be synthesized back into speech, hence it may be possible to perform style transfer by simply generating and substituting some of the latents to synthesize altered samples.

- The authors of SpeechSplit confirm that this method works if the latents are swapped between parallel utterances (i.e. actual recordings of different people uttering the same sentence).

- We show that some latents can also be successfully generated by a relatively simple GAN, which yields a significant improvement in sample quality over baseline end-to-end GANs in our voice style transfer task.

- In other words, our proposed model uses the SpeechSplit autoencoder to simplify the structure of the data so that it can be more easily captured by a GAN.
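
The latent substitution idea described above can be illustrated with a minimal sketch. The `Latents` container, its field names and `transfer_style` are hypothetical and are not part of SpeechSplit or this repository; plain lists stand in for the real latent tensors.

```python
from dataclasses import dataclass, replace
from typing import List, Tuple


# Hypothetical container for the four factors; field names are illustrative only.
@dataclass(frozen=True)
class Latents:
    content: List[float]
    rhythm: List[float]
    pitch: List[float]
    speaker: List[float]


def transfer_style(source: Latents, donor: Latents,
                   aspects: Tuple[str, ...] = ("pitch", "rhythm")) -> Latents:
    """Return a copy of `source` whose listed aspects are taken from `donor`."""
    allowed = {"content", "rhythm", "pitch", "speaker"}
    unknown = set(aspects) - allowed
    if unknown:
        raise ValueError(f"unknown aspects: {sorted(unknown)}")
    # Only the selected latents are substituted; everything else is kept.
    return replace(source, **{name: getattr(donor, name) for name in aspects})


# `donor` could come from a parallel utterance or, as proposed here, from a GAN.
source = Latents(content=[0.1, 0.2], rhythm=[1.0], pitch=[220.0], speaker=[0.5])
donor = Latents(content=[0.9, 0.8], rhythm=[2.0], pitch=[330.0], speaker=[0.7])
mixed = transfer_style(source, donor)
```

In this sketch the lexical content and speaker identity of `source` are preserved, while pitch and rhythm come from `donor`; the mixed latents would then be passed to the decoder.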

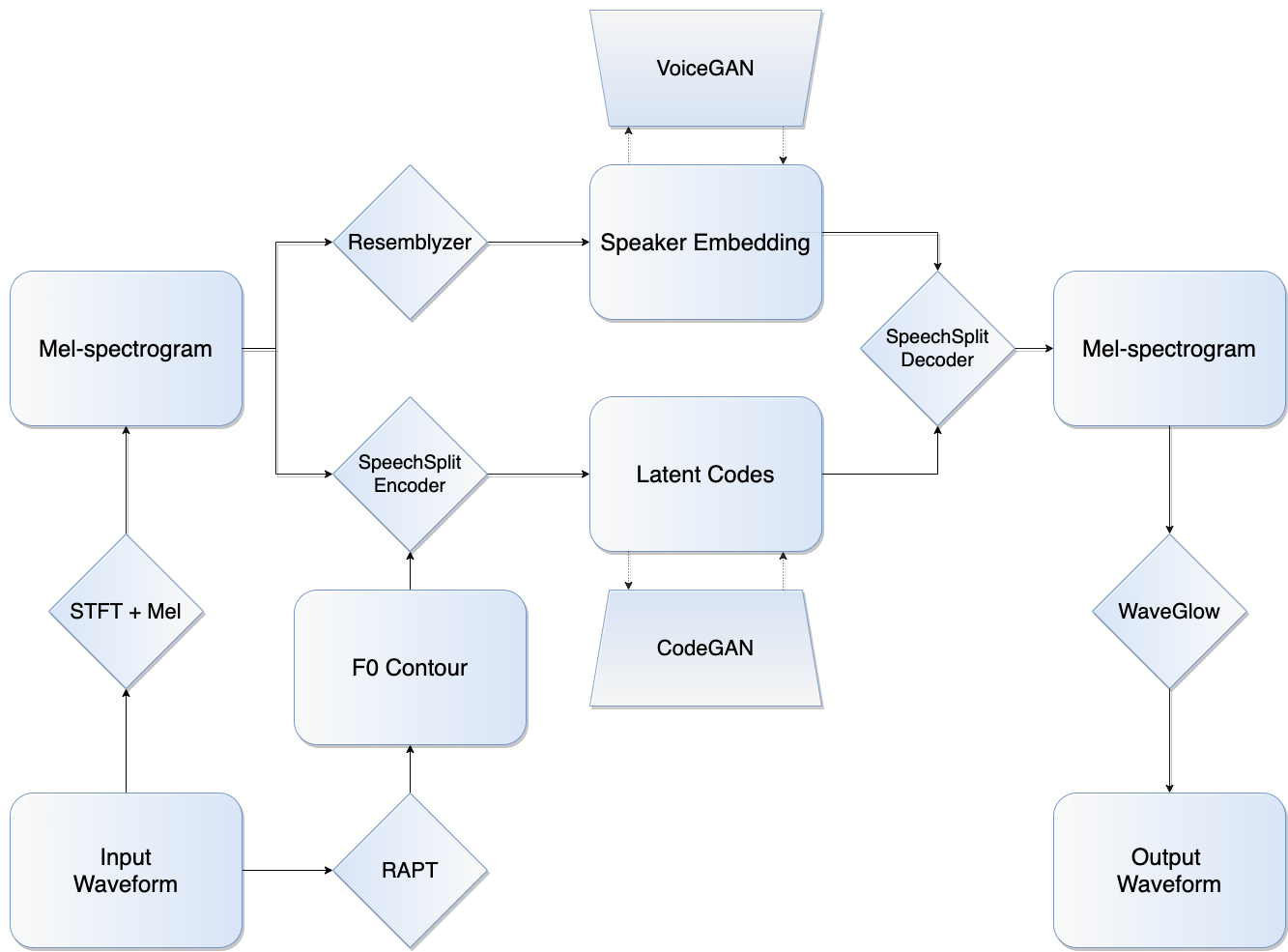

- Mel-spectrogram and pitch contour are extracted from raw waveform.

- Resemblyzer (an independent neural network trained on a speaker verification task) computes speaker embedding (a vector being a high-level representation of speaker's voice) from a mel-spectrogram.

- Style codes for pitch, rhythm and lexical content are provided by SpeechSplit encoder.

- New latent representations for pitch and rhythm are generated by CodeGAN, whereas new speaker embeddings are sampled from VoiceGAN.

- SpeechSplit decoder synthesizes output mel-spectrogram from speaker embedding and pitch, rhythm and content codes.

- Mel-spectrograms are converted to output waveform by WaveGlow.
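
The data flow through the stages listed above can be sketched as follows. This is only an outline under stated assumptions: `clone_voice`, the `stages` dictionary and its keys are hypothetical names, the real components have their own interfaces, and whether CodeGAN is conditioned on the original codes is an assumption of this sketch. Stubs replace the actual networks so that the example runs on its own.

```python
def clone_voice(waveform, stages, new_speaker=False):
    """Run the stages in the order described above (hypothetical interfaces)."""
    mel, f0 = stages["features"](waveform)     # mel-spectrogram and pitch contour
    speaker = stages["speaker_encoder"](mel)   # e.g. Resemblyzer
    codes = stages["encoder"](mel, f0)         # SpeechSplit encoder: pitch, rhythm, content
    generated = stages["code_gan"](codes)      # assumed to return new pitch and rhythm codes
    codes = {**codes, **generated}             # content code is left untouched
    if new_speaker:
        speaker = stages["voice_gan"]()        # sample a new speaker embedding
    out_mel = stages["decoder"](codes, speaker)  # SpeechSplit decoder
    return stages["vocoder"](out_mel)          # e.g. WaveGlow


# Stub stages that record the call order instead of running real models.
trace = []


def stub(name, result):
    def run(*args):
        trace.append(name)
        return result
    return run


stages = {
    "features": stub("features", ("mel", "f0")),
    "speaker_encoder": stub("speaker_encoder", "spk"),
    "encoder": stub("encoder", {"pitch": "p", "rhythm": "r", "content": "c"}),
    "code_gan": stub("code_gan", {"pitch": "p*", "rhythm": "r*"}),
    "voice_gan": stub("voice_gan", "spk*"),
    "decoder": lambda codes, speaker: (codes, speaker),
    "vocoder": lambda mel: mel,
}
result = clone_voice("wav", stages, new_speaker=True)
```

With the stubs above, `result` shows which codes and which speaker embedding reach the decoder, and `trace` lists the stages in the order they were called.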

See requirements.txt

git clone https://github.com/alexbrx/emo-clone.git

cd emo-clone

bash setup.sh

pip install -r requirements.txt

python utils/fake_cvoice_samples.py

- IEMOCAP

- VCTK

- Common Voice

- LJSpeech

- SpeechSplit is trained on VCTK and fine-tuned on IEMOCAP, after which its weights are frozen.

- SpeechSplit computes latent codes for the IEMOCAP samples, and CodeGAN is subsequently trained on these codes.

- A dataset of speaker embeddings is created from the Common Voice dataset, and VoiceGAN is subsequently trained on it.

- WaveGlow vocoder is independently trained on LJSpeech to convert mel-spectrograms into waveforms.
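
The ordering constraints between the training steps above can be written down as a small dependency graph. The stage names below are hypothetical labels rather than scripts in this repository; the sketch only uses `graphlib` from the Python standard library (3.9+) to derive one valid execution order.

```python
from graphlib import TopologicalSorter

# Each stage maps to the set of stages that must finish before it starts.
dependencies = {
    "speechsplit_train_vctk": set(),
    "speechsplit_finetune_iemocap": {"speechsplit_train_vctk"},
    "compute_iemocap_codes": {"speechsplit_finetune_iemocap"},  # encoder weights frozen
    "train_codegan": {"compute_iemocap_codes"},
    "build_commonvoice_embeddings": set(),
    "train_voicegan": {"build_commonvoice_embeddings"},
    "train_waveglow_ljspeech": set(),  # independent of all other stages
}

# One valid order; independent branches may appear in any relative position.
order = list(TopologicalSorter(dependencies).static_order())
print(order)
```

The graph makes explicit that the VoiceGAN and WaveGlow branches do not depend on SpeechSplit and could be trained in parallel with it.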