Source code for "Creating a fully serverless workflow management platform" talk from ServerlessDays Virtual by Alexandra Abbas.

Credit: This presentation was inspired by a Reddit post from wil19558.

A workflow management platform allows you to write, organise, execute, schedule and monitor workflows. A workflow is a collection of data processing steps that could form an ETL (Extract-Transform-Load) pipeline or a Machine Learning pipeline.

With the release of Workflows on Google Cloud, it became possible to create a fully serverless workflow manager that is easier to maintain and cheaper to run than self-hosted or managed services such as Apache Airflow or Luigi. Workflows can be a good alternative if you only need to manage a few pipelines.

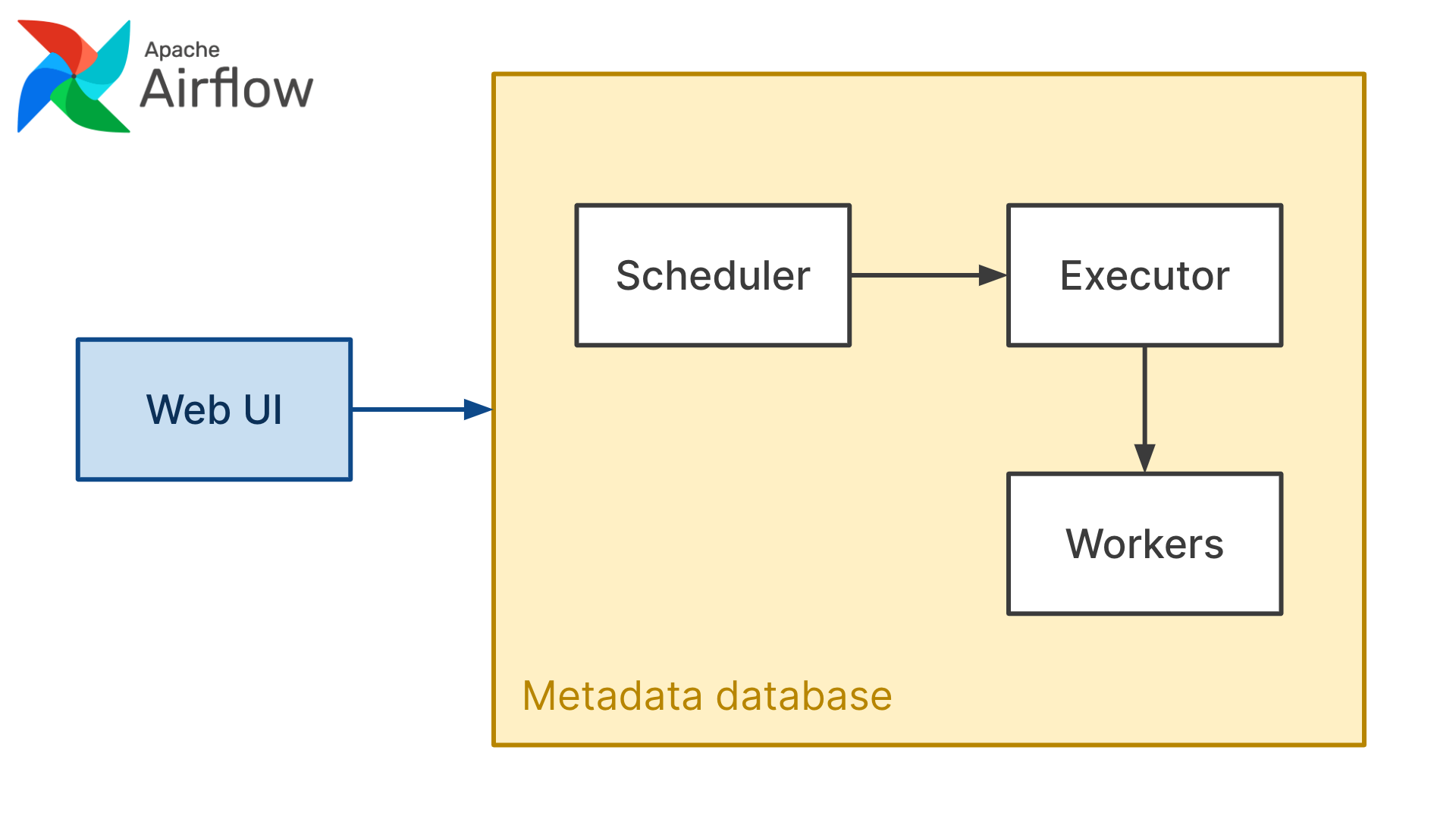

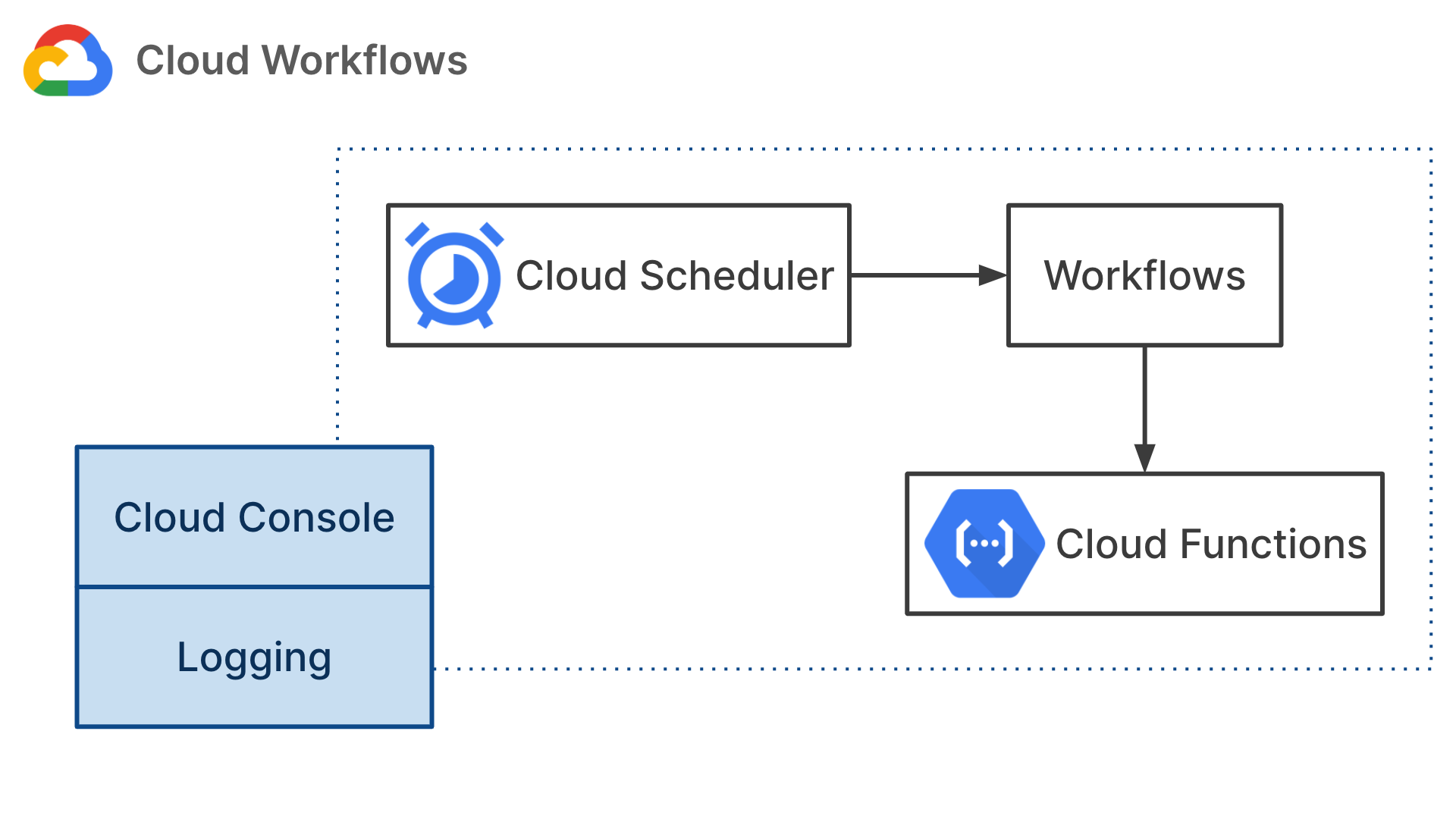

To create a serverless workflow manager, we can essentially copy the architecture of Apache Airflow and replace each of its components with a serverless alternative. The main components of Apache Airflow are the web server, scheduler, executor, metadata database and workers.

We could use Cloud Scheduler as the scheduler, which is responsible for triggering workflows at the right time. Google Cloud's new service, Workflows, can serve as the executor, which manages the steps in a workflow and triggers workers to run tasks. Cloud Functions can act as the workers that actually run the tasks.

In a serverless setup we don't need a metadata database. In Airflow, the metadata database is essentially shared state that all components can read from and write to. In a serverless architecture, if we want to share data between components, we send that data as JSON in the request body when calling the component. Think of each component as a stateless API.
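A minimal in-process sketch of that idea in Python (the language of this repository's Cloud Functions). A plain loop stands in for the executor and two hypothetical workers, `list_worker` and `count_worker`, stand in for Cloud Functions; this is an illustration under those assumptions, not how Workflows is implemented. The only data a worker sees is what arrives in its JSON request body.

```python
import json
from typing import Callable, Dict, List

# A worker is a stateless function: JSON request body in, JSON response body out.
Worker = Callable[[str], str]


def list_worker(body: str) -> str:
    """Hypothetical worker: pick the .csv names under a prefix from a given listing."""
    request = json.loads(body)
    files = [
        name
        for name in request["objects"]
        if name.startswith(request["prefix"]) and name.endswith(".csv")
    ]
    return json.dumps({"files": files})


def count_worker(body: str) -> str:
    """Hypothetical worker: report how many files it was asked to process."""
    request = json.loads(body)
    return json.dumps({"loaded": len(request["files"])})


def run_workflow(steps: List[Worker], args: Dict) -> Dict:
    """Toy executor: call each worker with the current data as a JSON body.

    Each step's JSON response is merged into the data passed to the next step,
    so the executor (not a shared database) carries data between steps.
    """
    data = dict(args)
    for step in steps:
        response = step(json.dumps(data))
        data.update(json.loads(response))
    return data


if __name__ == "__main__":
    result = run_workflow(
        [list_worker, count_worker],
        {"prefix": "landing/", "objects": ["landing/a.csv", "landing/b.txt", "backup/c.csv"]},
    )
    print(result["files"], result["loaded"])  # ['landing/a.csv'] 1
```

Because each worker depends only on its input, any step can be retried or redeployed independently without coordinating through a database.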

I compared these two architectures in my presentation. Have a look at the presentation slides for details.

P.S. Although I didn't mention it in my presentation, it's important to know that Cloud Functions have a maximum timeout of 540 seconds (the default is 60 seconds unless you set `--timeout` when deploying). Data processing jobs that take longer than that will fail; in that case I recommend using App Engine instead of Cloud Functions.

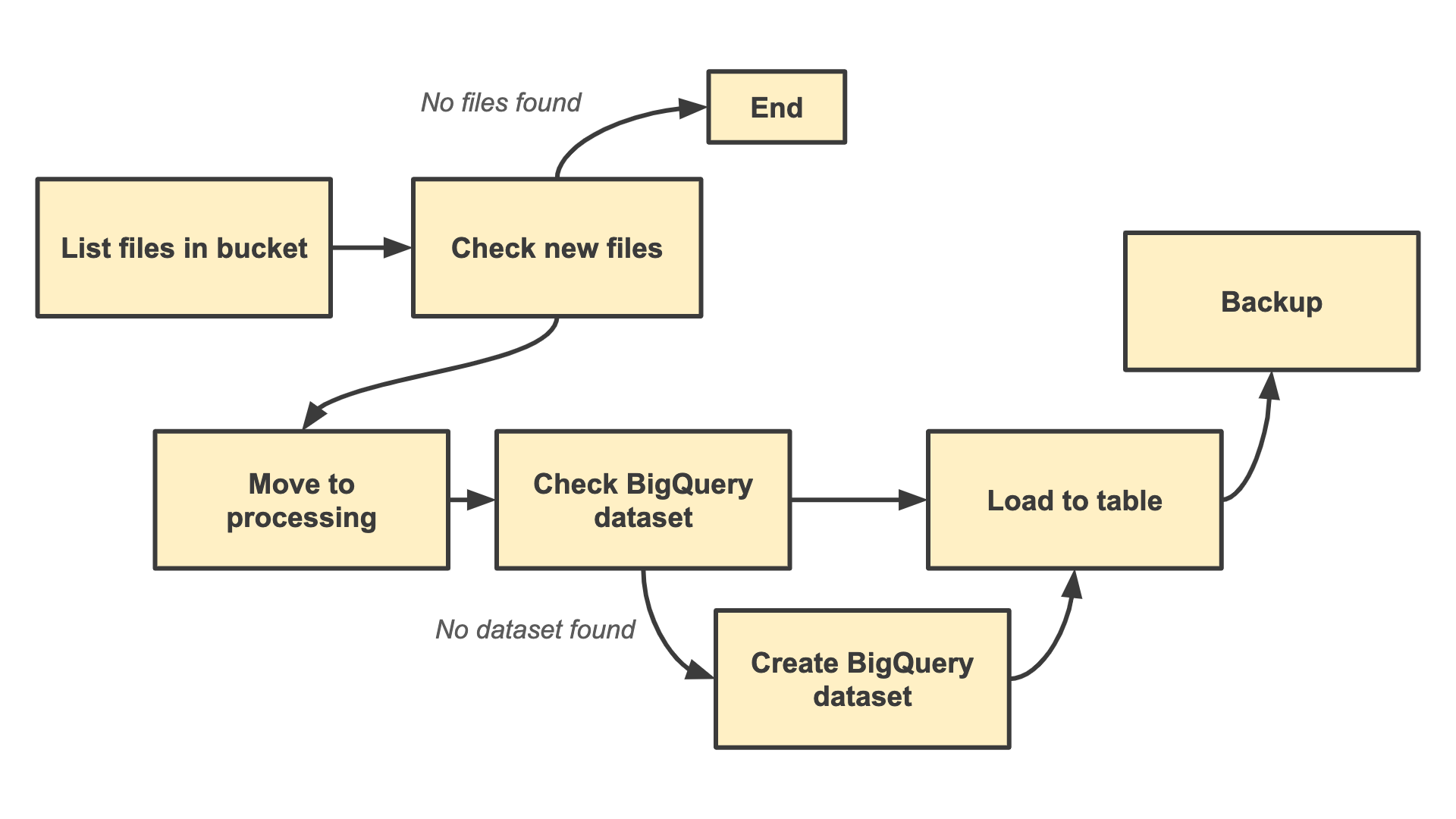

Let's imagine that we're working for a logistics company whose vehicles are equipped with sensors. These sensors collect data about the state of each vehicle and send CSV files to Cloud Storage every hour.

Our task is to load the CSV files from Cloud Storage into a BigQuery table so that analysts and data scientists can access historical data.

Create the following resources in Google Cloud in order to run this project.

- Create a Google Cloud project

- Create a Cloud Storage bucket

- Create 3 "folders" called landing, processing and backup in your Cloud Storage bucket
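Cloud Storage has a flat namespace, so these "folders" are really object-name prefixes; the console represents an empty folder as a zero-byte object whose name ends in `/`. If you prefer to script this step, here is a minimal sketch that assumes the `google-cloud-storage` Python client library and application default credentials; `placeholder_names` and `create_folders` are hypothetical helpers, not part of this repository.

```python
from typing import Iterable, List

# The three "folders" used by this project.
FOLDERS = ("landing", "processing", "backup")


def placeholder_names(folders: Iterable[str]) -> List[str]:
    """Object names for empty "folders": the folder name plus a trailing slash."""
    return [folder.rstrip("/") + "/" for folder in folders]


def create_folders(bucket_name: str, folders: Iterable[str] = FOLDERS) -> None:
    """Upload one zero-byte placeholder object per folder (needs GCP credentials)."""
    # Imported lazily so the helper above runs without the client library installed.
    from google.cloud import storage

    bucket = storage.Client().bucket(bucket_name)
    for name in placeholder_names(folders):
        bucket.blob(name).upload_from_string(b"")


if __name__ == "__main__":
    print(placeholder_names(FOLDERS))  # ['landing/', 'processing/', 'backup/']
```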

Take note of your project ID, bucket name and region, as we're going to use them later. Alternatively, export them as environment variables.

```bash
export PROJECT=<your-project-id>
export BUCKET=<your-bucket-name>
export REGION=<your-bucket-region>
```

Set the project that you just created as your default project using the `gcloud` command-line tool.

```bash
gcloud config set project ${PROJECT}
```

Run the following command to enable the Cloud Functions, Workflows, Cloud Build, Cloud Storage and Cloud Scheduler APIs.

```bash
gcloud services enable \
  cloudfunctions.googleapis.com \
  workflows.googleapis.com \
  cloudbuild.googleapis.com \
  storage.googleapis.com \
  cloudscheduler.googleapis.com
```

Upload the sample CSV files to the `landing` folder of your storage bucket.

```bash
cd data/
gsutil cp *.csv gs://${BUCKET}/landing/
```

This repository includes three Cloud Functions. These functions contain the business logic corresponding to the steps in our workflow.

- `list_files`: lists file names in a bucket or in a "folder" of a bucket
- `move_files`: renames the prefixes of objects in a bucket (moves objects from one "folder" to another)
- `load_files`: loads files from Cloud Storage into a BigQuery table
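Because "folders" are only prefixes, moving an object means giving it a new name. The sketch below shows that renaming logic under stated assumptions; it is not the repository's `move_files` code, and `new_name`, `plan_moves` and `move_with_gcs` are hypothetical helpers. `move_with_gcs` assumes the `google-cloud-storage` client, whose `Bucket.rename_blob` copies the object to the new name and then deletes the original.

```python
from typing import Dict, Iterable


def new_name(object_name: str, source_prefix: str, target_prefix: str) -> str:
    """Swap the leading "folder", e.g. landing/a.csv -> processing/a.csv."""
    if not object_name.startswith(source_prefix):
        raise ValueError(f"{object_name!r} does not start with {source_prefix!r}")
    return target_prefix + object_name[len(source_prefix):]


def plan_moves(names: Iterable[str], source_prefix: str, target_prefix: str) -> Dict[str, str]:
    """Map each object under source_prefix to its new name.

    Objects outside the prefix, and the zero-byte folder placeholder itself
    (a name equal to the prefix), are left alone.
    """
    return {
        name: new_name(name, source_prefix, target_prefix)
        for name in names
        if name.startswith(source_prefix) and name != source_prefix
    }


def move_with_gcs(bucket_name: str, source_prefix: str, target_prefix: str) -> Dict[str, str]:
    """Apply the plan to a real bucket (needs google-cloud-storage and credentials)."""
    from google.cloud import storage  # lazy import keeps the pure helpers dependency-free

    client = storage.Client()
    bucket = client.bucket(bucket_name)
    blobs = list(client.list_blobs(bucket_name, prefix=source_prefix))
    plan = plan_moves((blob.name for blob in blobs), source_prefix, target_prefix)
    for blob in blobs:
        if blob.name in plan:
            bucket.rename_blob(blob, plan[blob.name])
    return plan


if __name__ == "__main__":
    names = ["landing/a.csv", "landing/", "backup/b.csv"]
    print(plan_moves(names, "landing/", "processing/"))
    # {'landing/a.csv': 'processing/a.csv'}
```

Computing the plan separately from applying it makes the renaming rule easy to test without touching a real bucket.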

Each function has its own directory. Change to that directory and deploy each function using the following command.

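```bash
cd list_files/
gcloud functions deploy list_files \
  --runtime python37 \
  --trigger-http \
  --allow-unauthenticated \
  --region=${REGION}
```

Run the same command from each function's directory, replacing `list_files` with that function's name (`move_files`, `load_files`).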
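Functions deployed with `--trigger-http` on a Python runtime receive a Flask `request` object, and by default `gcloud functions deploy list_files` uses the Python function named `list_files` in `main.py` as the entry point. A minimal sketch of that shape follows; the request fields (`bucket`, `prefix`) and the helpers `list_csv_files` and `gcs_list_names` are assumptions for illustration, not necessarily what the repository's `list_files` expects.

```python
import json
from typing import Callable, Iterable, List, Tuple

JSON_HEADERS = {"Content-Type": "application/json"}


def list_csv_files(payload: dict, list_names: Callable[[str, str], Iterable[str]]) -> List[str]:
    """Pure core: sorted .csv object names under payload["prefix"] in payload["bucket"]."""
    names = list_names(payload["bucket"], payload.get("prefix", ""))
    return sorted(name for name in names if name.endswith(".csv"))


def gcs_list_names(bucket: str, prefix: str) -> Iterable[str]:
    """List object names with google-cloud-storage (needs credentials)."""
    from google.cloud import storage  # lazy import: only needed when deployed

    return [blob.name for blob in storage.Client().list_blobs(bucket, prefix=prefix)]


def list_files(request) -> Tuple[str, int, dict]:
    """HTTP entry point: Flask request in, (body, status, headers) out."""
    payload = request.get_json(silent=True) or {}
    if "bucket" not in payload:
        return json.dumps({"error": "missing 'bucket'"}), 400, JSON_HEADERS
    files = list_csv_files(payload, gcs_list_names)
    return json.dumps({"files": files}), 200, JSON_HEADERS
```

Keeping the Cloud Storage call behind a small function such as `gcs_list_names` lets you test the logic locally with a fake listing.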

Our workflow is defined in the `bigquery_data_load.yaml` file. Workflow definitions are written in YAML (JSON is also supported); I recommend reviewing the syntax reference for writing workflows. Workflows is not available in every region, so make sure that the region you're using is supported. Deploy the workflow by running the following command.

```bash
gcloud beta workflows deploy bigquery_data_load \
  --source=bigquery_data_load.yaml \
  --location=${REGION}
```

You can execute your workflow manually, either via the UI or from the command line. To execute it from the command line, run the following command.

```bash
gcloud beta workflows execute bigquery_data_load \
  --location=${REGION} \
  --data='{"bucket":"<your-bucket-name>","project":"<your-project-id>","region":"<your-bucket-region>"}'
```

Let's create a service account for Cloud Scheduler, which will be responsible for triggering workflows.

```bash
export SERVICE_ACCOUNT=scheduler-sa

gcloud iam service-accounts create ${SERVICE_ACCOUNT}

gcloud projects add-iam-policy-binding ${PROJECT} \
  --member="serviceAccount:${SERVICE_ACCOUNT}@${PROJECT}.iam.gserviceaccount.com" \
  --role="roles/workflows.invoker"
```

Let's create a job in Cloud Scheduler that triggers our workflow once every hour. The job uses the service account we've just created, and its request body contains the input arguments for our workflow.
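The Workflows Executions API reads the workflow's input from an `argument` field that holds a JSON-encoded string, so when Cloud Scheduler calls the API directly the workflow arguments are serialized twice. Escaping that by hand is error-prone; here is a small Python sketch (`execution_body` is a hypothetical helper, and the values are placeholders) that generates the string for `--message-body`.

```python
import json


def execution_body(workflow_args: dict) -> str:
    """Request body for the Workflows Executions API.

    The workflow input goes into the "argument" field as a JSON-encoded string,
    so the arguments are serialized twice: once on their own, once inside the body.
    """
    return json.dumps({"argument": json.dumps(workflow_args)})


if __name__ == "__main__":
    # Placeholder values; substitute your own bucket, project ID and region.
    args = {"bucket": "my-bucket", "project": "my-project", "region": "us-central1"}
    print(execution_body(args))
    # {"argument": "{\"bucket\": \"my-bucket\", \"project\": \"my-project\", \"region\": \"us-central1\"}"}
```

Wrap the printed string in single quotes when passing it to `--message-body`, as in the command below, so that the shell leaves the backslashes intact.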

```bash
gcloud scheduler jobs create http bigquery_data_load_hourly \
  --schedule="0 * * * *" \
  --uri="https://workflowexecutions.googleapis.com/v1beta/projects/${PROJECT}/locations/${REGION}/workflows/bigquery_data_load/executions" \
  --oauth-service-account-email="${SERVICE_ACCOUNT}@${PROJECT}.iam.gserviceaccount.com" \
  --message-body='{"argument": "{\"bucket\":\"<your-bucket-name>\",\"project\":\"<your-project-id>\",\"region\":\"<your-bucket-region>\"}"}'
```

Because the Executions API expects the workflow input as a JSON-encoded string in the `argument` field, the message body contains escaped quotes.

You can monitor your workflows in the Google Cloud Console. Unfortunately, the console doesn't generate a visual graph of your workflows yet. You can access all logs in the Workflows and Cloud Functions UIs; these logs allow you to configure metrics and alerts and to build monitoring dashboards.