- Description

- Installation

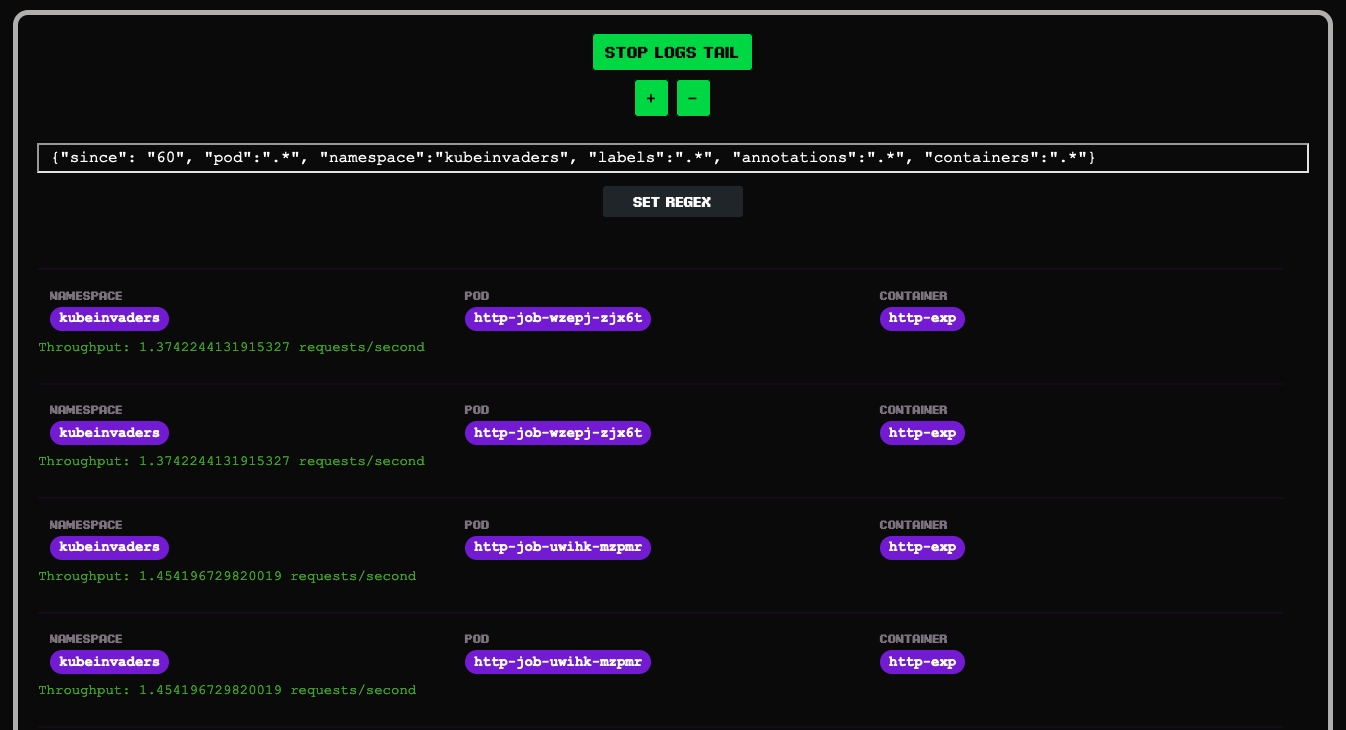

- Usage

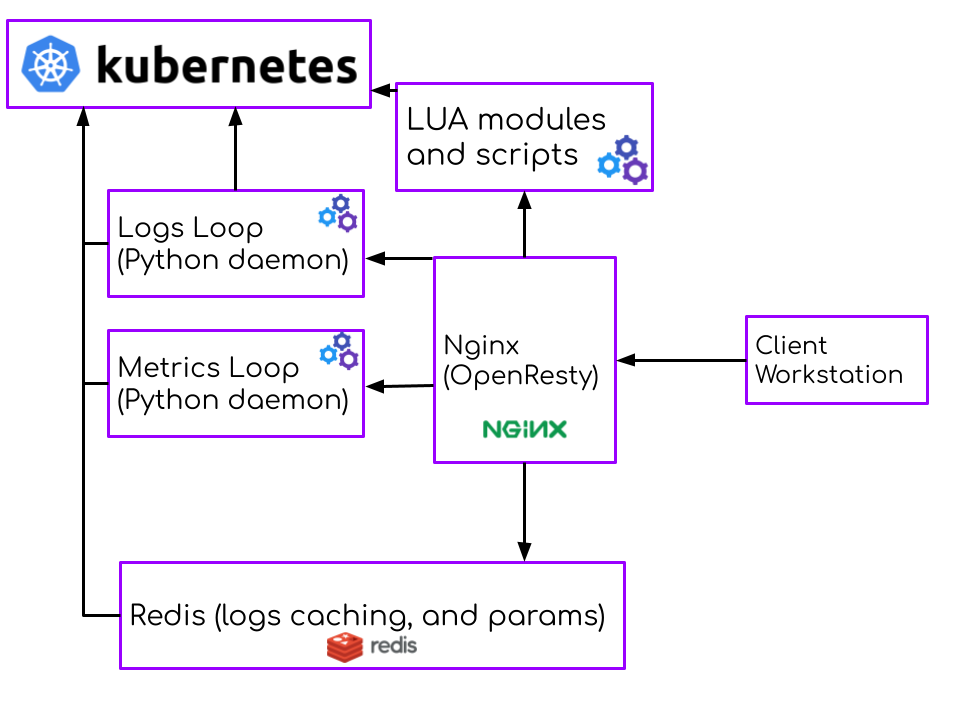

- Architecture

- Persistence

- Known Problems & Troubleshooting

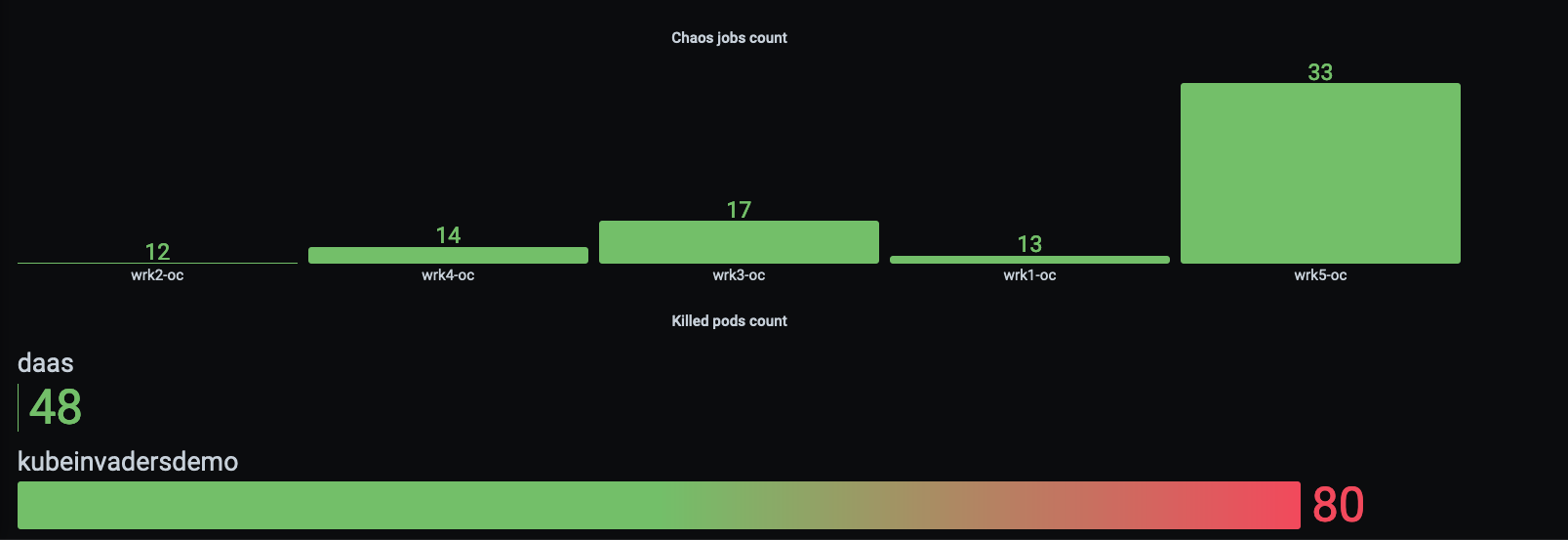

- Metrics

- Security

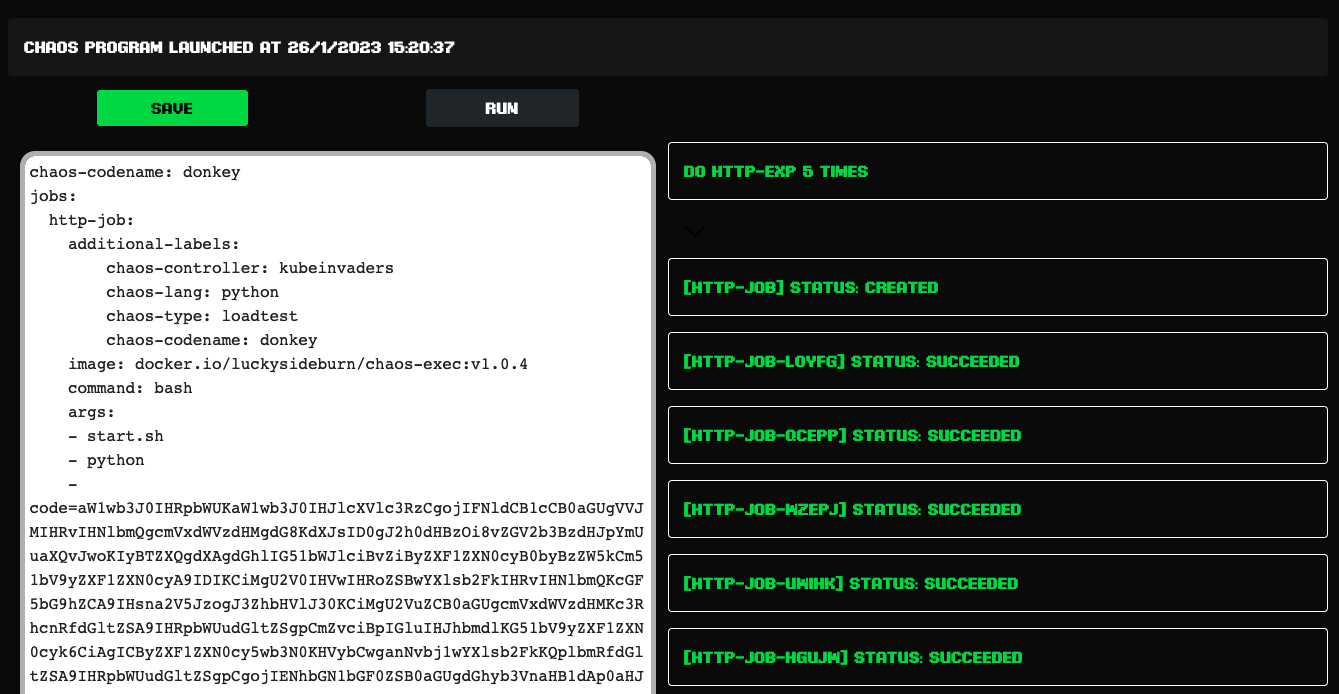

- Test Loading and Chaos Experiment Presets

- Community

- Community blogs and videos

- License

These are the slides from the Chaos Engineering talk I prepared for FOSDEM 2023. Unfortunately, I could not be present at my talk :D but I would still like to share them with the community.

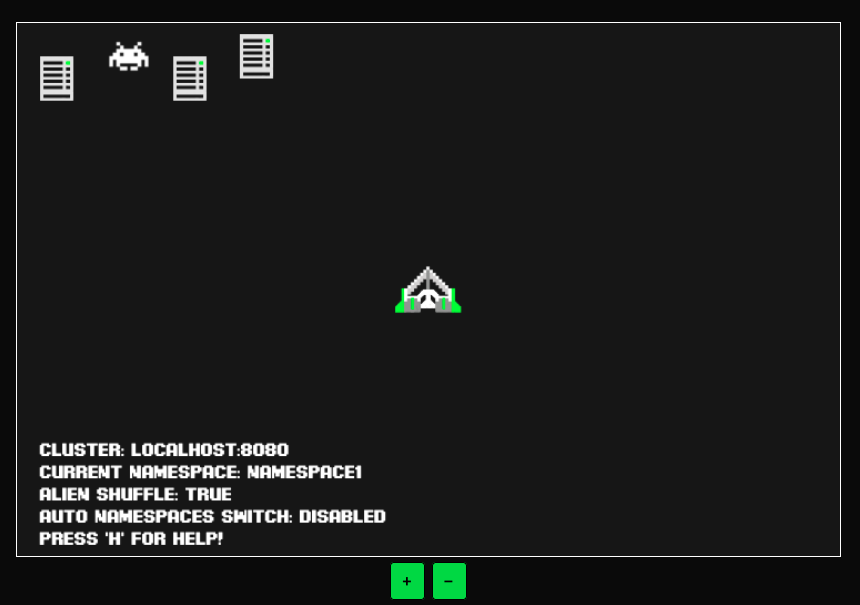

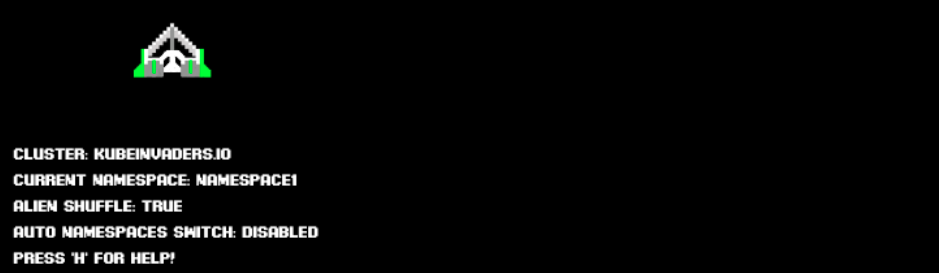

Through k-inv a.k.a. KubeInvaders you can stress a Kubernetes cluster in a fun way and check how resilient it is.

```bash
docker run -p 8080:8080 \
  --env K8S_TOKEN=<k8s_service_account_token> \
  --env ENDPOINT=localhost:8080 \
  --env INSECURE_ENDPOINT=true \
  --env KUBERNETES_SERVICE_HOST=<k8s_controlplane_host> \
  --env KUBERNETES_SERVICE_PORT_HTTPS=<k8s_controlplane_port> \
  --env NAMESPACE=<comma_separated_namespaces_to_stress> \
  luckysideburn/kubeinvaders:develop
```

```bash
helm repo add kubeinvaders https://lucky-sideburn.github.io/helm-charts/
helm repo update

kubectl create namespace kubeinvaders

helm install kubeinvaders --set-string config.target_namespace="namespace1\,namespace2" \
  -n kubeinvaders kubeinvaders/kubeinvaders --set ingress.enabled=true --set ingress.hostName=kubeinvaders.io --set deployment.image.tag=v1.9.6
```

```bash
oc adm policy add-scc-to-user anyuid -z kubeinvaders
```

I should add this to the helm chart...

apiVersion: route.openshift.io/v1

kind: Route

metadata:

name: kubeinvaders

namespace: "kubeinvaders"

spec:

host: "kubeinvaders.io"

to:

name: kubeinvaders

tls:

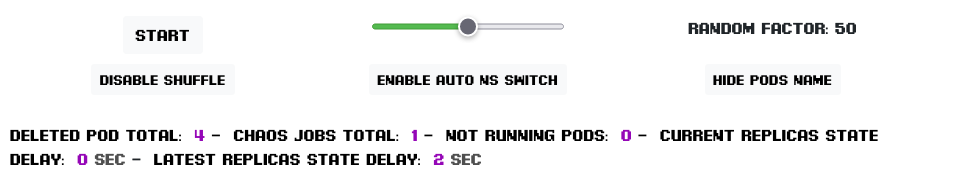

termination: EdgeAt the top you will find some metrics as described below:

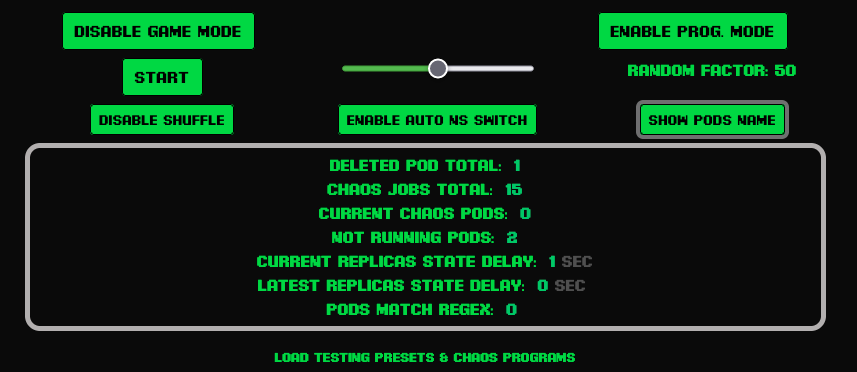

Current Replicas State Delay is a metric that shows how long the cluster takes to return to the desired number of pod replicas.
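One way to picture this metric is to take timestamped samples of the ready replica count and measure how long the count stays below the desired value. The sketch below is only an illustration under that assumption: the function name, the sample format and the numbers are hypothetical, and it is not necessarily how KubeInvaders computes the value internally.

```python
from typing import Iterable, Optional, Tuple


def replicas_recovery_delay(
    samples: Iterable[Tuple[float, int]], desired: int
) -> Optional[float]:
    """Seconds between the first drop below `desired` and the next sample
    where the ready count is back at `desired`.

    Returns None if the count never dropped or never recovered.
    """
    dropped_at = None
    for timestamp, ready in samples:
        if dropped_at is None:
            if ready < desired:
                dropped_at = timestamp  # first sample below the desired state
        elif ready >= desired:
            return timestamp - dropped_at  # back at the desired state
    return None


# Hypothetical samples: (seconds since start, ready replicas)
samples = [(0.0, 3), (1.0, 2), (2.0, 2), (4.5, 3), (5.0, 3)]
print(replicas_recovery_delay(samples, desired=3))
```

With these samples the count drops at second 1.0 and recovers at second 4.5, so the measured delay is 3.5 seconds.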

This is a control-plane you can use to switch off & on various features.

Press the button "Start" to start automatic pilot (button changes to "Stop" to disable this feature).

Press the button "Enable Shuffle" to randomly switch the positions of pods or k8s nodes (button changes to "Disable Shuffle" to disable this feature).

Press the button "Auto NS Switch" to randomly switch between namespaces (button changes to "Disable Auto NS Switch" to disable this feature).

Press the button "Hide Pods Name" to hide the name of the pods under the aliens (button changes to "Show Pods Name" to disable this feature).

As described below, on the game screen, near the spaceship, there are details about current cluster, namespace and some configurations.

Below the + and - buttons, a bar shows the most recent game events.

You can use kube-linter through KubeInvaders to scan resources for best practices or possible improvements.

Press 'h' or select 'Show Special Keys' from the menu.

Press the + or - buttons to enlarge or shrink the game screen.

- Select "Show Current Chaos Container for nodes" from the menu to see which container starts when you fire at a worker node (not an alien; aliens are pods).
- Select "Set Custom Chaos Container for nodes" from the menu to use your preferred image or configuration against nodes.

K-inv uses Redis to store and manage data. Redis is configured with "appendonly".

At the moment the helm chart does not support PersistentVolumes, but this task is on the to-do list...

- It seems that KubeInvaders does not work with EKS because of problems with ServiceAccount.

- At the moment, installing KubeInvaders into a namespace that is not named "kubeinvaders" is not supported

- I have only tested KubeInvaders with a Kubernetes cluster installed through KubeSpray

- If you don't see aliens, please follow these steps:
  - Open a terminal and run `kubectl logs <pod_of_kubeinvader> -n kubeinvaders -f`
  - From another terminal, run `curl "https://<your_kubeinvaders_url>/kube/pods?action=list&namespace=namespace1" -k`
  - Open an issue with the logs attached
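The same check as the curl command above can be scripted. This is a minimal sketch using only the Python standard library; the helper names are made up for illustration, `https://kubeinvaders.io` stands in for your own KubeInvaders URL, and the response is returned as raw text because its format is not described here.

```python
import ssl
import urllib.request
from urllib.parse import urlencode


def pods_list_url(base_url: str, namespace: str) -> str:
    """Build the pod-listing URL used in the troubleshooting step."""
    query = urlencode({"action": "list", "namespace": namespace})
    return f"{base_url.rstrip('/')}/kube/pods?{query}"


def fetch_pods(base_url: str, namespace: str, timeout: float = 10.0) -> str:
    """Fetch the pod list without verifying the TLS certificate (like curl -k)."""
    context = ssl.create_default_context()
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE
    url = pods_list_url(base_url, namespace)
    with urllib.request.urlopen(url, timeout=timeout, context=context) as response:
        return response.read().decode("utf-8", errors="replace")


# Placeholder host: replace with your own KubeInvaders URL
print(pods_list_url("https://kubeinvaders.io", "namespace1"))
```

Calling `fetch_pods(...)` while the `kubectl logs` command is running in another terminal should produce the log lines worth attaching to an issue.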

To experience KubeInvaders in action, try it out in this free O'Reilly Katacoda scenario, KubeInvaders.

KubeInvaders exposes metrics for Prometheus through the standard endpoint /metrics

This is an example of Prometheus configuration:

```yaml
scrape_configs:
- job_name: kubeinvaders
  static_configs:
  - targets:
    - kubeinvaders.kubeinvaders.svc.cluster.local:8080
```

Example of metrics:

| Metric | Description |
|---|---|
| chaos_jobs_node_count{node=workernode01} | Total number of chaos jobs executed per node |
| chaos_node_jobs_total | Total number of chaos jobs executed against all worker nodes |
| deleted_pods_total 16 | Total number of deleted pods |
| deleted_namespace_pods_count{namespace=myawesomenamespace} | Total number of deleted pods per namespace |
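To read these metrics from a script rather than from Prometheus, the text returned by /metrics can be parsed line by line. The sketch below assumes the usual Prometheus text exposition format; the sample text is hand-written for illustration (only `deleted_pods_total 16` comes from the table above, the other values are invented), and `parse_metrics` is a hypothetical helper, not part of KubeInvaders.

```python
import re

# Hand-written sample in Prometheus text format (values are illustrative)
SAMPLE = """\
# HELP deleted_pods_total Total number of deleted pods
# TYPE deleted_pods_total counter
deleted_pods_total 16
deleted_namespace_pods_count{namespace="myawesomenamespace"} 7
chaos_node_jobs_total 3
chaos_jobs_node_count{node="workernode01"} 3
"""

# metric_name, optional {labels}, then the sample value
LINE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)'
    r'(?:\{(?P<labels>[^}]*)\})?'
    r'\s+(?P<value>\S+)'
)


def parse_metrics(text):
    """Return {(metric_name, raw_label_string): float_value}."""
    metrics = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        match = LINE.match(line)
        if match is None:
            continue  # ignore lines this simple pattern does not understand
        key = (match.group("name"), match.group("labels") or "")
        metrics[key] = float(match.group("value"))
    return metrics


metrics = parse_metrics(SAMPLE)
print(metrics[("deleted_pods_total", "")])
```

For anything beyond a quick check, a real Prometheus client or parser library is a better fit than this regular expression.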

To restrict access to the KubeInvaders endpoint, add this annotation to the ingress.

```yaml
nginx.ingress.kubernetes.io/whitelist-source-range: <your_ip>/32
```

```python
from cassandra.cluster import Cluster
from random import randint
import time

def main():
    # Connect to a local Cassandra node
    cluster = Cluster(['127.0.0.1'])
    session = cluster.connect()

    # Create the keyspace and table used by the test
    session.execute("CREATE KEYSPACE IF NOT EXISTS test WITH REPLICATION = { 'class': 'SimpleStrategy', 'replication_factor': 1 }")
    session.execute("CREATE TABLE IF NOT EXISTS test.messages (id int PRIMARY KEY, message text)")

    # Insert 1000 rows with random messages, passing values as bound parameters
    for i in range(1000):
        session.execute("INSERT INTO test.messages (id, message) VALUES (%s, %s)", (i, str(randint(0, 1000))))
        time.sleep(0.001)

    cluster.shutdown()

if __name__ == "__main__":
    main()
```

```python
import time
import consul

# Connect to the Consul cluster
client = consul.Consul()

# Continuously register and deregister a service
while True:
    # Register the service
    client.agent.service.register(
        "stress-test-service",
        port=8080,
        tags=["stress-test"],
        check=consul.Check().tcp("localhost", 8080, "10s")
    )

    # Deregister the service
    client.agent.service.deregister("stress-test-service")

    time.sleep(1)
```

```python
import time
from elasticsearch import Elasticsearch

# Connect to the Elasticsearch cluster
es = Elasticsearch(["localhost"])

# Continuously index and delete documents
while True:
    # Index a document
    es.index(index="test-index", doc_type="test-type", id=1, body={"test": "test"})

    # Delete the document
    es.delete(index="test-index", doc_type="test-type", id=1)

    time.sleep(1)
```

```python
import time
import etcd3

# Connect to the etcd3 cluster
client = etcd3.client()

# Continuously set and delete keys
while True:
    # Set a key
    client.put("/stress-test-key", "stress test value")

    # Delete the key
    client.delete("/stress-test-key")

    time.sleep(1)
```

```python
import gitlab
import time

gl = gitlab.Gitlab('https://gitlab.example.com', private_token='my_private_token')

def create_project(index):
    # Use a unique name per project: GitLab rejects duplicate names in the same namespace
    project = gl.projects.create({'name': f'My Project {index}'})
    print("Created project: ", project.name)

def main():
    for i in range(1000):
        create_project(i)
        time.sleep(0.001)

if __name__ == "__main__":
    main()
```

```python
import time
import requests

# Set up the URL to send requests to
url = 'http://localhost:8080/'

# Set up the number of requests to send
num_requests = 10000

# Set up the payload to send
payload = {'key': 'value'}

# Send the requests
start_time = time.time()
for i in range(num_requests):
    requests.post(url, json=payload)
end_time = time.time()

# Calculate the throughput
throughput = num_requests / (end_time - start_time)
print(f'Throughput: {throughput} requests/second')
```

```python
import time
from jira import JIRA

# Connect to the Jira instance
jira = JIRA(
    server="https://jira.example.com",
    basic_auth=("user", "password")
)

# Continuously create and delete issues
while True:
    # Create an issue
    issue = jira.create_issue(
        project="PROJECT",
        summary="Stress test issue",
        description="This is a stress test issue.",
        issuetype={"name": "Bug"}
    )

    # Delete the issue (deletion is a method of the returned issue object)
    issue.delete()

    time.sleep(1)
```

```python
import time
from kafka import KafkaProducer

# Set up the Kafka producer
producer = KafkaProducer(bootstrap_servers=['localhost:9092'])

# Set up the topic to send messages to
topic = 'test'

# Set up the number of messages to send
num_messages = 10000

# Set up the payload to send
payload = b'a' * 1000000

# Send the messages
start_time = time.time()
for i in range(num_messages):
    producer.send(topic, payload)
# send() is asynchronous: flush so the timing covers delivery, not just queuing
producer.flush()
end_time = time.time()

# Calculate the throughput
throughput = num_messages / (end_time - start_time)
print(f'Throughput: {throughput} messages/second')

# Close the producer
producer.close()
```

```python
import time
import kubernetes

# Load credentials from the local kubeconfig
# (use kubernetes.config.load_incluster_config() when running inside a pod)
kubernetes.config.load_kube_config()

# Create a Kubernetes client
client = kubernetes.client.CoreV1Api()

# Continuously create and delete pods
while True:
    # Create a pod
    pod = kubernetes.client.V1Pod(
        metadata=kubernetes.client.V1ObjectMeta(name="stress-test-pod"),
        spec=kubernetes.client.V1PodSpec(
            containers=[kubernetes.client.V1Container(
                name="stress-test-container",
                image="nginx:latest"
            )]
        )
    )
    client.create_namespaced_pod(namespace="default", body=pod)

    # Delete the pod
    client.delete_namespaced_pod(name="stress-test-pod", namespace="default")

    time.sleep(1)
```

```python
import time
from pymongo import MongoClient

# Set up the MongoDB client
client = MongoClient('mongodb://localhost:27017/')

# Set up the database and collection to use
db = client['test']
collection = db['test']

# Set up the number of documents to insert
num_documents = 10000

# Set up the payload to insert
payload = {'key': 'a' * 1000000}

# Insert the documents
start_time = time.time()
for i in range(num_documents):
    # insert_one() adds an _id to the dict it receives, so insert a fresh copy
    # each time to avoid a duplicate key error on the second insert
    collection.insert_one(dict(payload))
end_time = time.time()

# Calculate the throughput
throughput = num_documents / (end_time - start_time)
print(f'Throughput: {throughput} documents/second')

# Close the client
client.close()
```

```python
import time
import mysql.connector

# Connect to the MySQL database
cnx = mysql.connector.connect(
    host="localhost",
    user="root",
    password="password",
    database="test"
)
cursor = cnx.cursor()

try:
    # Continuously insert rows into the "test_table" table
    while True:
        cursor.execute("INSERT INTO test_table (col1, col2) VALUES (%s, %s)", (1, 2))
        cnx.commit()
        time.sleep(1)
finally:
    # Close the database connection when the loop is interrupted
    cursor.close()
    cnx.close()
```

```python
import time
import nomad

# Create a Nomad client
client = nomad.Nomad()

# Create a batch of jobs to submit to Nomad
jobs = [{
    "ID": "stress-test-job",
    "Name": "stress-test-job",
    "Type": "batch",
    "Datacenters": ["dc1"],
    "TaskGroups": [{
        "Name": "stress-test-task-group",
        "Tasks": [{
            "Name": "stress-test-task",
            "Driver": "raw_exec",
            # raw_exec takes the binary and its arguments separately
            "Config": {
                "command": "sleep",
                "args": ["10"]
            },
            "Resources": {
                "CPU": 500,
                "MemoryMB": 512
            }
        }]
    }]
}]

# Continuously submit the batch of jobs to Nomad
while True:
    for job in jobs:
        # The Nomad jobs endpoint expects the definition wrapped in a "Job" key
        client.jobs.register_job({"Job": job})
    time.sleep(1)
```

```python
import time
import psycopg2

# Set up the connection parameters
params = {
    'host': 'localhost',
    'port': '5432',
    'database': 'test',
    'user': 'postgres',
    'password': 'password'
}

# Connect to the database
conn = psycopg2.connect(**params)

# Set up the cursor
cur = conn.cursor()

# Set up the table and payload to insert
table_name = 'test'
payload = 'a' * 1000000

# Set up the number of rows to insert
num_rows = 10000

# Insert the rows, passing the payload as a bound parameter
start_time = time.time()
for i in range(num_rows):
    cur.execute(f"INSERT INTO {table_name} (col) VALUES (%s)", (payload,))
conn.commit()
end_time = time.time()

# Calculate the throughput
throughput = num_rows / (end_time - start_time)
print(f'Throughput: {throughput} rows/second')

# Close the cursor and connection
cur.close()
conn.close()
```

```python
import time
import random
from prometheus_client import CollectorRegistry, Gauge, push_to_gateway

# Set up the metrics registry
registry = CollectorRegistry()

# Set up the metric to push
gauge = Gauge('test_gauge', 'A test gauge', registry=registry)

# Set up the push gateway URL
push_gateway = 'http://localhost:9091'

# Set up the number of pushes to send
num_pushes = 10000

# Set up the metric value to push
value = random.random()

# Push the metric
start_time = time.time()
for i in range(num_pushes):
    gauge.set(value)
    push_to_gateway(push_gateway, job='test_job', registry=registry)
end_time = time.time()

# Calculate the throughput
throughput = num_pushes / (end_time - start_time)
print(f'Throughput: {throughput} pushes/second')
```

```python
import pika
import time

def send_message(channel, message):
    channel.basic_publish(exchange='', routing_key='test_queue', body=message)
    print("Sent message: ", message)

def main():
    connection = pika.BlockingConnection(pika.ConnectionParameters('localhost'))
    channel = connection.channel()
    channel.queue_declare(queue='test_queue')

    for i in range(1000):
        send_message(channel, str(i))
        time.sleep(0.001)

    connection.close()

if __name__ == "__main__":
    main()
```

```python
import paramiko

# Define servers array
servers = ['server1', 'server2', 'server3']

# Key-based login needs the private key, loaded here from a file
private_key = paramiko.RSAKey.from_private_key_file('/path/to/your-private-key')

for server in servers:
    ssh = paramiko.SSHClient()
    ssh.set_missing_host_key_policy(paramiko.AutoAddPolicy())
    # Connect to each server in the list in turn
    ssh.connect(hostname=server, username='your-username', pkey=private_key)
    stdin, stdout, stderr = ssh.exec_command('your-command')
    print(stdout.read())
    ssh.close()
```

```python
import time
import hvac

# Connect to the Vault instance
client = hvac.Client()

# Log in with AppRole (auth method API of recent hvac releases)
client.auth.approle.login(role_id="approle-id", secret_id="secret-id")

# Continuously read and write secrets
while True:
    # Write a secret
    client.write("secret/stress-test", value="secret value")

    # Read the secret
    client.read("secret/stress-test")

    time.sleep(1)
```

Please reach out for news, bugs, feature requests, and other issues via:

- On Twitter: @kubeinvaders & @luckysideburn

- New features are published on YouTube too in this channel

- Kubernetes.io blog: KubeInvaders - Gamified Chaos Engineering Tool for Kubernetes

- acloudguru: cncf-state-of-the-union

- DevNation RedHat Developer: Twitter

- Flant: Open Source solutions for chaos engineering in Kubernetes

- Reeinvent: KubeInvaders - gamified chaos engineering

- Adrian Goins: K8s Chaos Engineering with KubeInvaders

- dbafromthecold: Chaos engineering for SQL Server running on AKS using KubeInvaders

- Pklinker: Gamification of Kubernetes Chaos Testing

- Openshift Commons Briefings: OpenShift Commons Briefing KubeInvaders: Chaos Engineering Tool for Kubernetes

- GitHub: awesome-kubernetes repo

- William Lam: Interesting Kubernetes application demos

- The Chief I/O: 5 Fun Ways to Use Kubernetes

- LuCkySideburn: Talk @ Codemotion

- Chaos Carnival: Chaos Engineering is fun!

- Kubeinvaders (old version) + OpenShift 4 Demo: YouTube_Video

- KubeInvaders (old version) Vs Openshift 4.1: YouTube_Video

- Chaos Engineering for SQL Server | Andrew Pruski | Conf42: Chaos Engineering: YouTube_Video

KubeInvaders is licensed under the Apache License, Version 2.0. See LICENSE for the full license text.