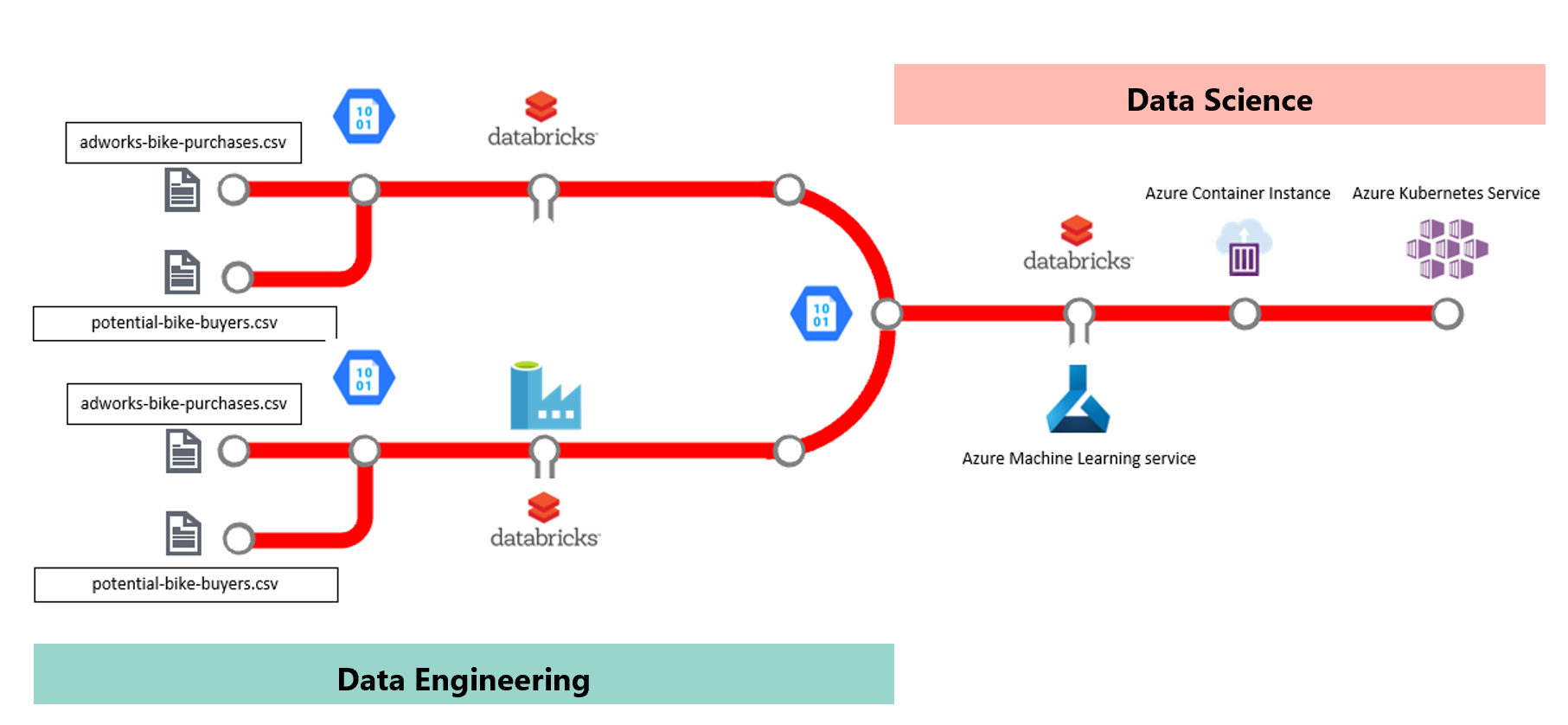

Welcome to a hands-on workshop for Machine Learning on Big Data using Azure Databricks, Azure Data Factory, and Azure Machine Learning service.

The datasets and base notebooks are built from the SQL Server 2017 AdventureWorks Data Warehouse backup (AdventureWorksDW2017.bak) and the Azure Machine Learning Notebooks.

Prerequisites

To deploy the Azure resources required for this lab, you will need:

- An Azure account

  Note: If you don't have an account, you can create a free Azure account.

- Microsoft Azure Storage Explorer

- Clone this GitHub repository using Git and the following command:

git clone https://github.com/DataSnowman/MLonBigData.git
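Before cloning, it can help to confirm that the command-line tools used in this workshop are installed. The following is a minimal sketch; `missing_tools` is a hypothetical helper name, not part of the repository.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints each named tool that is not on PATH and
# returns non-zero if any of them is missing.
missing_tools() {
  local status=0
  local tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "not found: $tool"
      status=1
    fi
  done
  return "$status"
}

# Usage: Git is needed for the clone; the Azure CLI (az) is only needed
# if you plan to provision from the command line.
#   missing_tools git az && git clone https://github.com/DataSnowman/MLonBigData.git
```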

Note that clicking the Deploy to Azure button, or alternatively deploying the ARM template and parameters file with the Azure CLI, will create a number of Azure resources in your Azure subscription.

Deploy Bike Buyer Template to Azure

Important: While Azure Data Factory Data Flows is in Preview, please use the Southeast Asia region to deploy this solution.

Note: If you encounter issues with resources, check them by running the following commands in the Azure CLI (more information on using the CLI is in the Provisioning using the Azure CLI section below):

az login

az account show

az account list-locations

az provider show --namespace Microsoft.Databricks --query "resourceTypes[?resourceType=='workspaces'].locations | [0]" --out table
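The last command above lists the regions where Databricks workspaces can be created. The sketch below automates the comparison against the region you plan to use. The helper names `normalize` and `region_supported` are hypothetical, and the usage comment assumes that `--out tsv` prints one location name per line.

```shell
#!/usr/bin/env bash
# Lowercase the input and strip spaces, so that "Southeast Asia"
# and "southeastasia" compare equal.
normalize() {
  tr '[:upper:]' '[:lower:]' | tr -d ' '
}

# Hypothetical helper: succeeds if region $1 appears in the list of
# location names read from stdin (one name per line).
region_supported() {
  local wanted
  wanted=$(printf '%s' "$1" | normalize)
  normalize | grep -qxF -- "$wanted"
}

# Usage against the real CLI (run az login first):
#   az provider show --namespace Microsoft.Databricks \
#     --query "resourceTypes[?resourceType=='workspaces'].locations | [0]" \
#     --out tsv | region_supported southeastasia && echo "region available"
```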

Choices for Provisioning

You can provision using the Deploy to Azure button above or by using the Azure CLI.

Provisioning using the Azure Portal

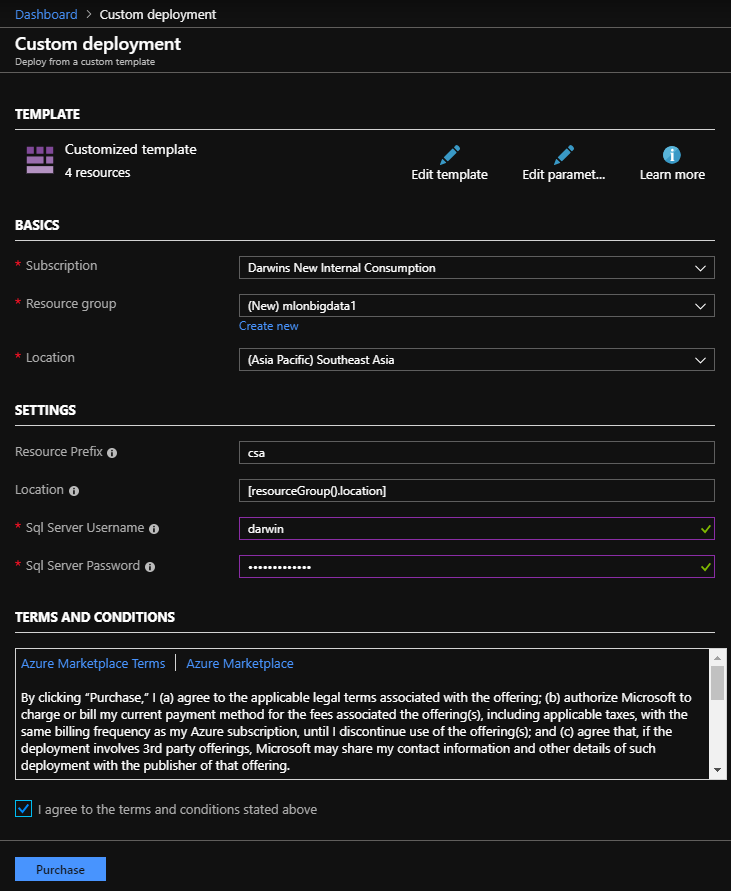

Choose your Subscription, and enter a Resource Group, Location (Southeast Asia for the ADF Data Flow Preview), SQL Server Username, and SQL Server Password, then agree to the Terms and Conditions. Then click the Purchase button.

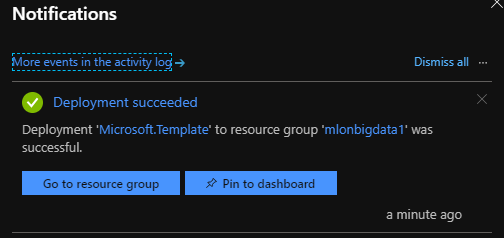

When the Deployment completes you will receive a notification in the Azure Portal. Click the Go to resource group button.

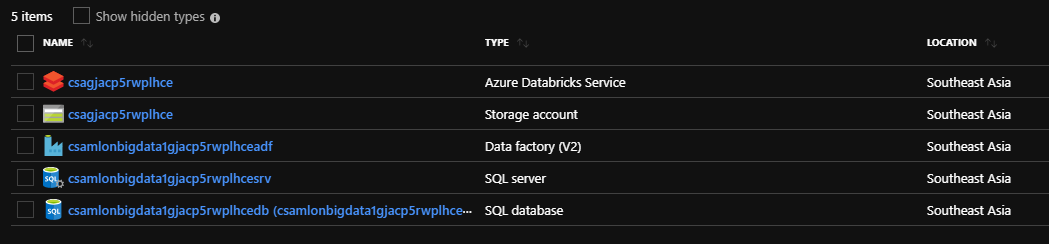

After you open the resource group in the Azure portal, you should see the deployed resources.

Provisioning using the Azure CLI

1. Download and install the Azure CLI Installer (MSI) for Windows, or the Azure CLI for Mac or Linux. Once the installation is complete, open the command prompt and run `az login`, then copy the access code returned. In a browser, open a private tab and enter the URL aka.ms/devicelogin. When prompted, paste in the access code from above. You will be prompted to authenticate using your Azure account. Go through the appropriate multifactor authentication.

2. Navigate to the folder `MLonBigData\setup`. If using Windows Explorer, you can launch the command prompt by going to the address bar and typing `cmd` (for the Windows command prompt) or `bash` (for the Linux command prompt, assuming it is installed already). Type `az --version` to check the installation. Look for the `parameters-mlbigdata.json` file you cloned during the Prerequisites above.

3. When you logged in to the CLI in step 1 above, you will have seen a JSON list of all the Azure accounts you have access to. Run `az account show` to see your current active account. Run `az account list -o table` if you want to see all of your Azure accounts in a table. If you would like to switch to another Azure account, run `az account set --subscription <your SubscriptionId>` to set the active subscription. Run `az group create -n mlbigdata -l southeastasia` to create a resource group called `mlbigdata`.

4. Next, run the following command to provision the Azure resources:

az group deployment create -g mlbigdata --template-file azureclideploy.json --parameters @parameters-mlbigdata.json
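The resource group and deployment commands above can be collected into one script. This is a sketch only: the `run` wrapper, the `deploy` function, and the `DRY_RUN`, `RESOURCE_GROUP`, and `LOCATION` variables are illustrative names that are not part of the repository, and it assumes you run it from the `setup` folder after `az login`. Newer Azure CLI releases mark `az group deployment create` as deprecated in favor of `az deployment group create`; the sketch keeps the command as written in this workshop.

```shell
#!/usr/bin/env bash
# Illustrative defaults taken from the commands in this workshop.
RESOURCE_GROUP="${RESOURCE_GROUP:-mlbigdata}"
LOCATION="${LOCATION:-southeastasia}"

# Print the command when DRY_RUN=1; otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Create the resource group, deploy the ARM template, then list
# what was created. Stops at the first failing step.
deploy() {
  run az group create -n "$RESOURCE_GROUP" -l "$LOCATION" || return 1
  run az group deployment create -g "$RESOURCE_GROUP" \
    --template-file azureclideploy.json \
    --parameters @parameters-mlbigdata.json || return 1
  run az resource list -g "$RESOURCE_GROUP" -o table
}

# Preview the commands without touching Azure:
#   DRY_RUN=1 deploy
# Run them for real:
#   deploy
```

The dry-run mode is there so the exact commands can be reviewed before any resources are created in your subscription.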

Once the provisioning is finished, run `az resource list -g mlbigdata -o table` to check which resources were launched. The listed resources include:

* 1 Storage account

* 1 Data Factory

* 1 Databricks workspace

* 1 SQL Server

* 1 SQL database.

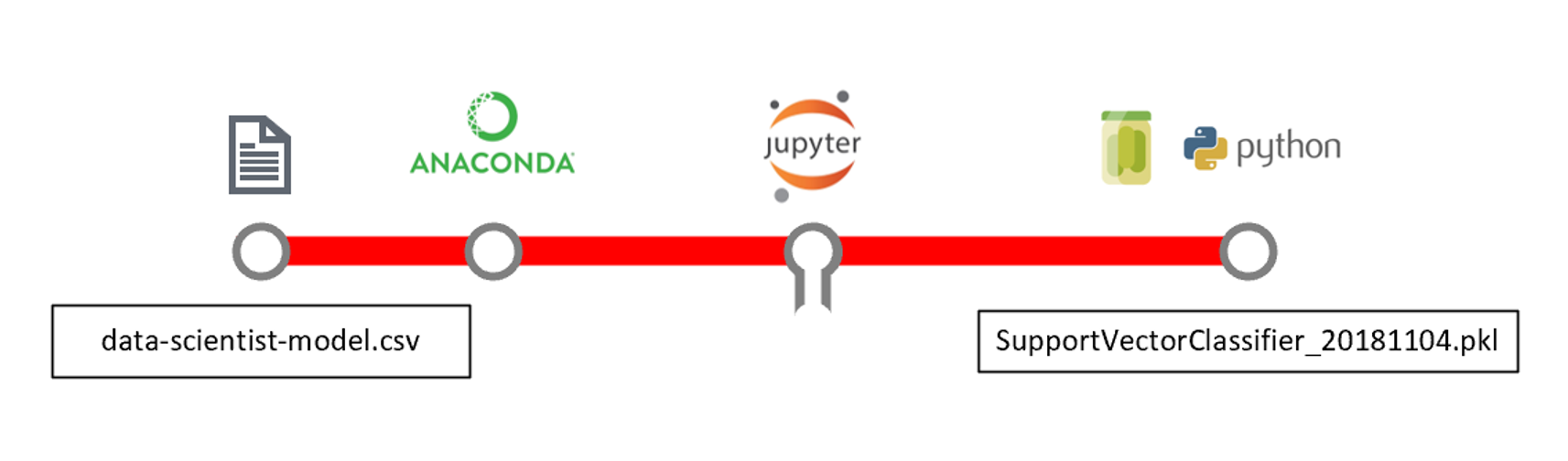
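To confirm that all five resources listed above were created, the resource types reported by the CLI can be compared against an expected list. The sketch below assumes the standard Azure resource type names for these services; `EXPECTED_TYPES` and `missing_types` are hypothetical names, and the usage comment assumes `-o tsv` prints one type per line.

```shell
#!/usr/bin/env bash
# Resource types corresponding to the five resources listed above.
EXPECTED_TYPES="Microsoft.Storage/storageAccounts
Microsoft.DataFactory/factories
Microsoft.Databricks/workspaces
Microsoft.Sql/servers
Microsoft.Sql/servers/databases"

# Hypothetical helper: reads deployed resource types from stdin (one per
# line) and prints every expected type that is absent.
# Returns non-zero if anything is missing.
missing_types() {
  local deployed
  local missing=0
  local t
  deployed=$(cat)
  for t in $EXPECTED_TYPES; do
    # -F: literal match, -x: whole line, -i: ignore case
    if ! printf '%s\n' "$deployed" | grep -qixF -- "$t"; then
      echo "missing: $t"
      missing=1
    fi
  done
  return "$missing"
}

# Usage against the real CLI:
#   az resource list -g mlbigdata --query "[].type" -o tsv | missing_types \
#     && echo "all expected resource types found"
```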

Data Scientist using Anaconda and Jupyter Notebooks

If you are interested in this scenario start here

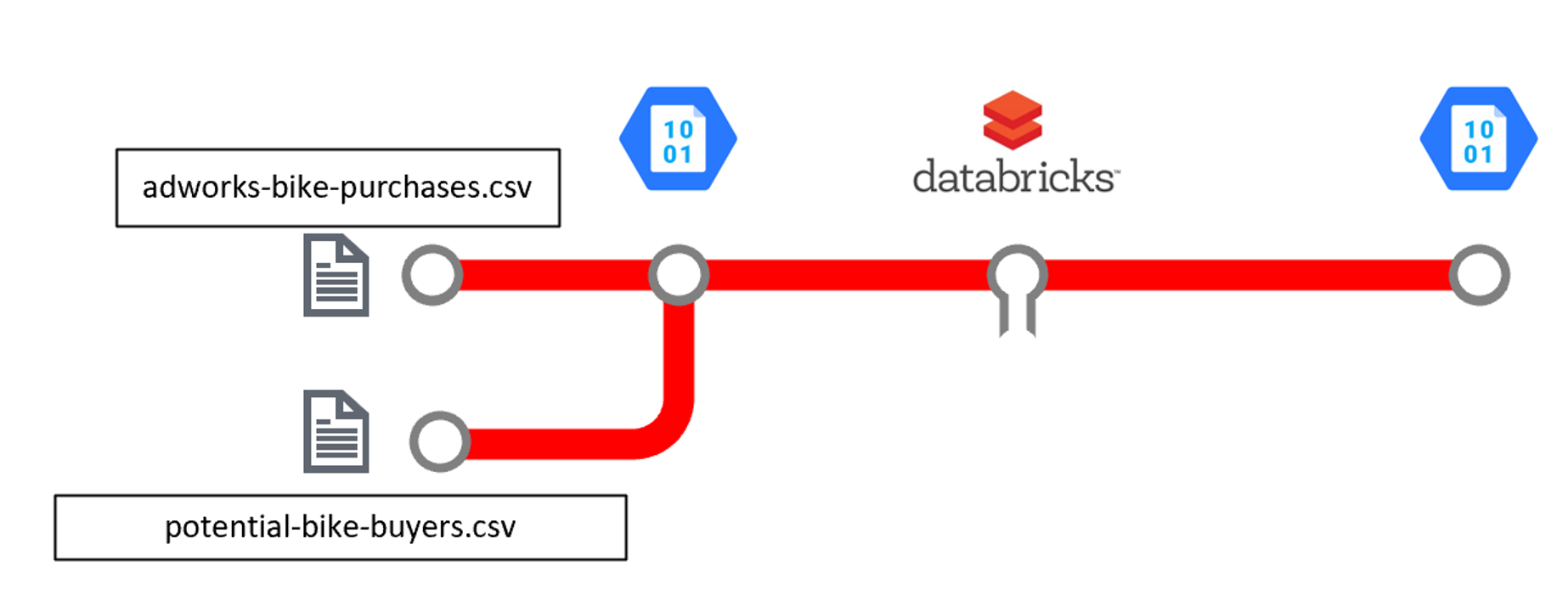

Data Engineer using Azure Databricks and Jupyter Notebook

If you are interested in this scenario start here

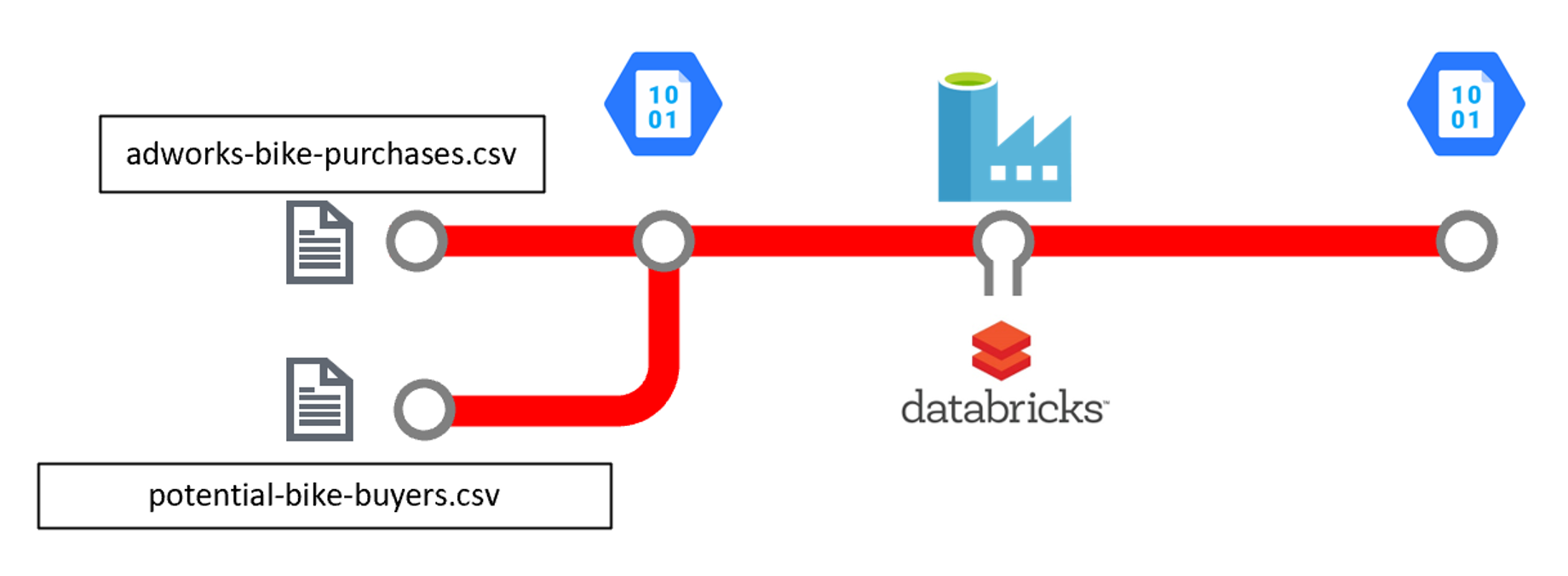

Data Engineer using Azure Data Factory Data Flow and Azure Databricks

If you are interested in this scenario start here

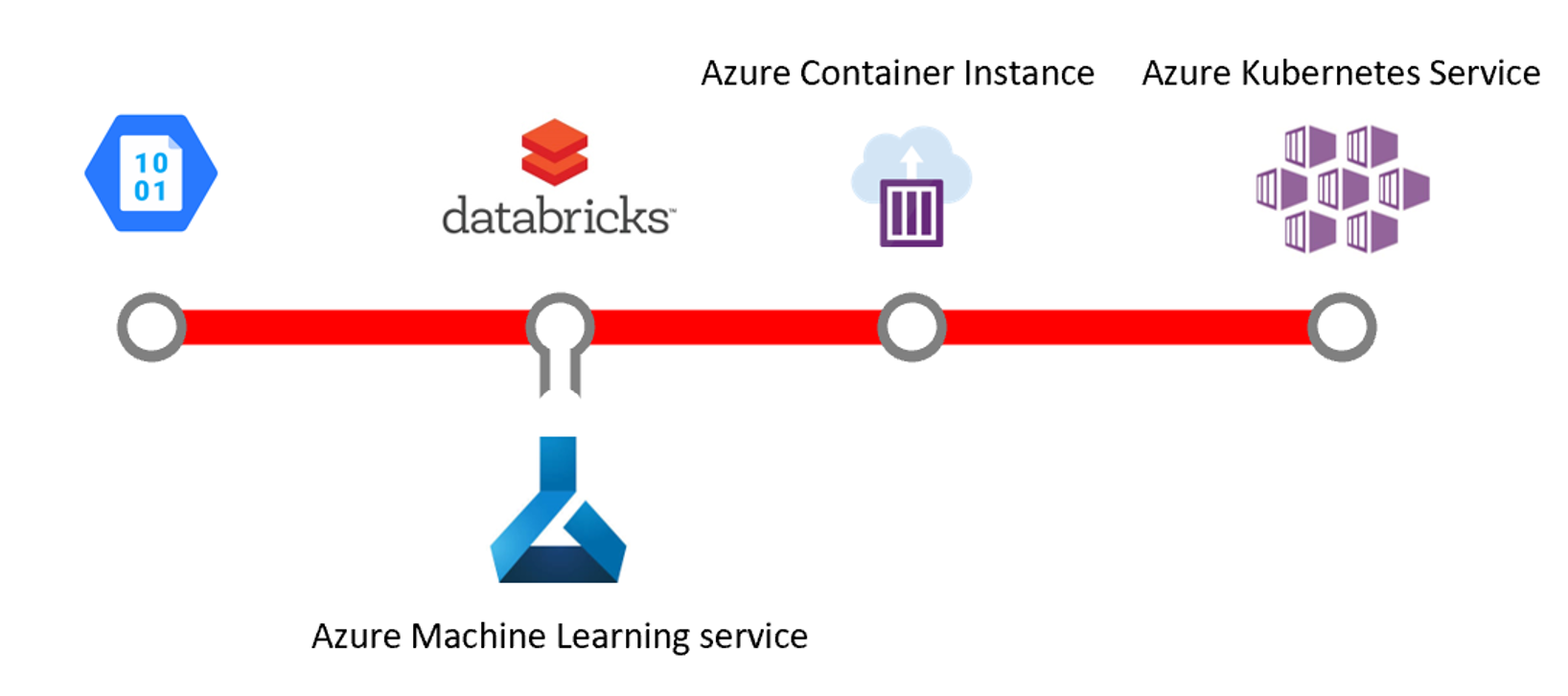

Data Scientist using Azure Databricks and Databricks Notebooks and Azure Machine Learning service SDK

If you are interested in this scenario start here

Hope you enjoyed this workshop.