pNLP-Mixer - Unofficial PyTorch Implementation

pNLP-Mixer: an Efficient all-MLP Architecture for Language

Implementation of pNLP-Mixer in PyTorch and PyTorch Lightning.

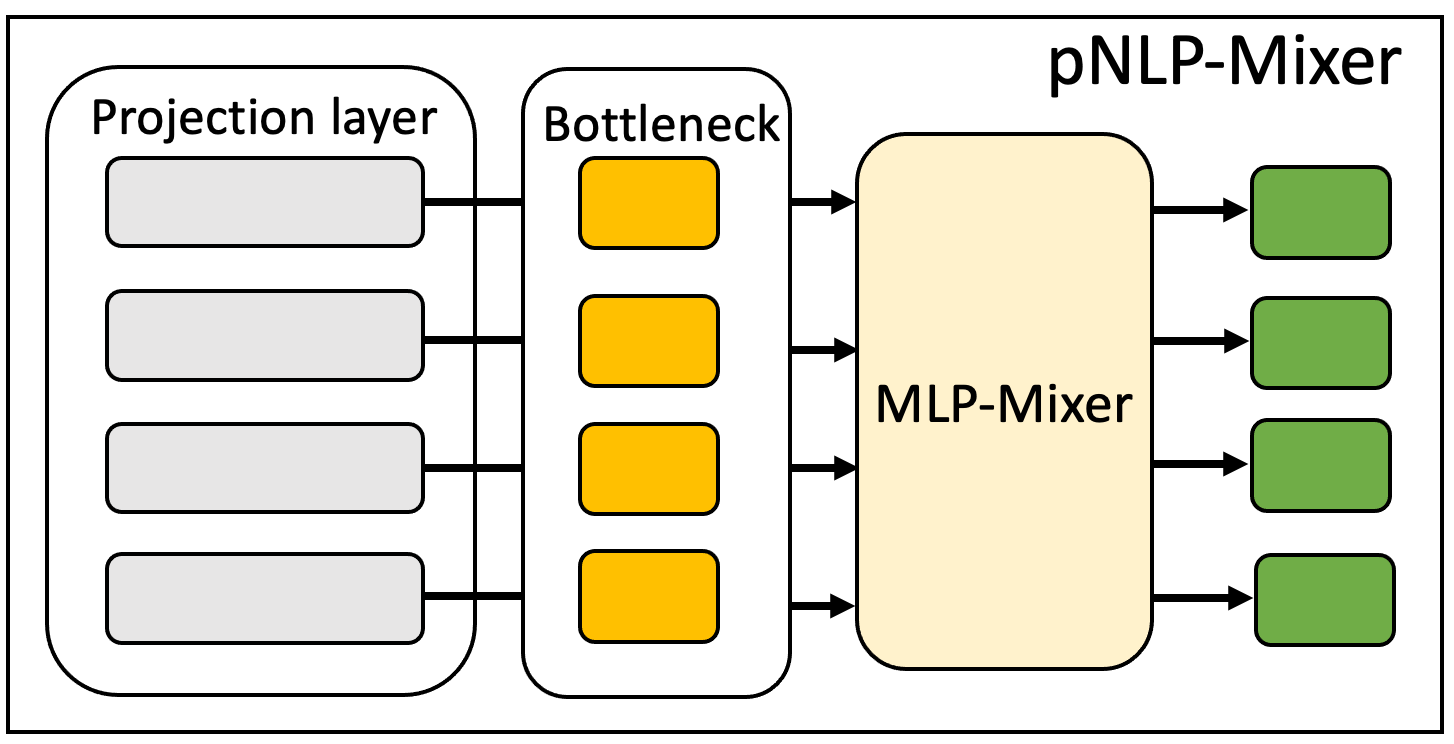

pNLP-Mixer is the first successful application of the MLP-Mixer architecture in NLP. With a novel embedding-free projection layer, pNLP-Mixer achieves performance comparable to transformer-based models (e.g. mBERT, RoBERTa) with a significantly smaller parameter count and without an expensive pretraining procedure.

Requirements

- Python >= 3.6.10

- PyTorch >= 1.8.0

- PyTorch Lightning >= 1.4.3

- All other requirements are listed in the `requirements.txt` file.

Configurations

See the example configurations, and the comments inside them, in the `cfg` directory.

Commands

Caching Vocab Hashes

`python projection.py -v VOCAB_FILE -c CFG_PATH -g NGRAM_SIZE -o OUTPUT_FILE`

- `VOCAB_FILE`: path to the vocab file

- `CFG_PATH`: path to the configurations file

- `NGRAM_SIZE`: size of n-grams used during hashing

- `OUTPUT_FILE`: path where the resulting `.npy` file will be stored
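
To give a rough idea of what caching vocab hashes with n-grams can look like, here is a minimal sketch. It assumes a MinHash-style fingerprint over character n-grams; the function names, the BLAKE2b hash and the number of hash functions are illustrative choices, not taken from `projection.py`, which may work differently. Saving the result as a `.npy` file is left out so the sketch needs only the standard library.

```python
import hashlib
from typing import List


def char_ngrams(token: str, n: int) -> List[str]:
    """Return the character n-grams of a token (the token itself if it is not longer than n)."""
    if len(token) <= n:
        return [token]
    return [token[i:i + n] for i in range(len(token) - n + 1)]


def hash_with_seed(text: str, seed: int) -> int:
    """Deterministic 32-bit hash of `text`; the seed selects one hash function of a family."""
    digest = hashlib.blake2b(
        text.encode("utf-8"),
        digest_size=4,
        salt=seed.to_bytes(8, "little"),
    ).digest()
    return int.from_bytes(digest, "little")


def minhash_fingerprint(token: str, n: int, num_hashes: int) -> List[int]:
    """For each hash function, keep the minimum hash value over the token's n-grams."""
    grams = char_ngrams(token, n)
    return [min(hash_with_seed(g, seed) for g in grams) for seed in range(num_hashes)]


def build_cache(vocab: List[str], n: int, num_hashes: int) -> List[List[int]]:
    """Compute one fingerprint per vocab entry so hashing is not repeated at training time."""
    return [minhash_fingerprint(token, n, num_hashes) for token in vocab]


# Hypothetical vocab entries, hashed with 3-grams and 8 hash functions.
cache = build_cache(["mixer", "language", "ab"], n=3, num_hashes=8)
```

Each row of `cache` depends only on the token and the hashing settings, which is why it can be computed once and reused.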

Training / Testing

`python run.py -c CFG_PATH -n MODEL_NAME -m MODE -p CKPT_PATH`

- `CFG_PATH`: path to the configurations file

- `MODEL_NAME`: model name to be used for PyTorch Lightning logging

- `MODE`: `train` or `test` (default: `train`)

- `CKPT_PATH`: (optional) checkpoint path to resume training from or to use for testing
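
The flags above could be parsed along the following lines. This is only a sketch built from the option descriptions in this README: the long option names (`--cfg`, `--name`, `--mode`, `--ckpt`) and the example file names are hypothetical, and the actual `run.py` may define its arguments differently.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build a parser mirroring the documented short flags (long names are hypothetical)."""
    parser = argparse.ArgumentParser(description="Train or test a model (illustrative sketch).")
    parser.add_argument("-c", "--cfg", required=True, help="path to the configurations file")
    parser.add_argument("-n", "--name", required=True, help="model name used for logging")
    parser.add_argument("-m", "--mode", choices=["train", "test"], default="train",
                        help="train or test (default: train)")
    parser.add_argument("-p", "--ckpt", default=None,
                        help="optional checkpoint to resume training from or to test with")
    return parser


# Omitting -m falls back to training; omitting -p leaves the checkpoint unset.
train_args = build_parser().parse_args(["-c", "example_cfg.yml", "-n", "demo"])

# Testing a saved checkpoint (hypothetical file name).
test_args = build_parser().parse_args(
    ["-c", "example_cfg.yml", "-n", "demo", "-m", "test", "-p", "demo.ckpt"]
)
```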

Results

The checkpoints used for evaluation are available here.

MTOP

| Model Size | Reported | Ours |
|---|---|---|
| pNLP-Mixer X-Small | 76.9% | 79.3% |
| pNLP-Mixer Base | 80.8% | 79.4% |
| pNLP-Mixer X-Large | 82.3% | 82.1% |

MultiATIS

| Model Size | Reported | Ours |
|---|---|---|
| pNLP-Mixer X-Small | 90.0% | 91.3% |
| pNLP-Mixer Base | 92.1% | 92.8% |
| pNLP-Mixer X-Large | 91.3% | 92.9% |

* Note that the paper reports performance on the MultiATIS dataset using an 8-bit quantized model, whereas our performance was measured using a 32-bit float model.
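
For readers unfamiliar with the distinction, the sketch below shows what storing 32-bit float values as 8-bit integers involves. It uses generic symmetric per-tensor quantization as an assumption; it is not the scheme used in the paper, it is not implemented in this repository, and the weight values are made up for illustration.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric quantization: map floats to integers in [-127, 127] with one shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the 8-bit integers."""
    return [q * scale for q in quantized]


# Made-up weights, for illustration only.
weights = [0.8131, -0.2504, 0.0049, -1.27]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding to the nearest integer step bounds the per-value error by half a step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

The rounding error is at most `scale / 2` per value, so a quantized model computes with slightly different weights than its float counterpart.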

IMDB

| Model Size | Reported | Ours |
|---|---|---|
| pNLP-Mixer X-Small | 81.9% | 81.5% |
| pNLP-Mixer Base | 78.6% | 82.2% |
| pNLP-Mixer X-Large | 82.9% | 82.9% |

Paper

@article{fusco2022pnlp,
  title={pNLP-Mixer: an Efficient all-MLP Architecture for Language},
  author={Fusco, Francesco and Pascual, Damian and Staar, Peter},
  journal={arXiv preprint arXiv:2202.04350},
  year={2022}
}

Contributors

- Tony Woo @ MINDsLab Inc. (shwoo@mindslab.ai)

Special thanks to:

- Hyoung-Kyu Song @ MINDsLab Inc.

- Kang-wook Kim @ MINDsLab Inc.

TODO

- 8-bit quantization