This is code for "Bridging the Gap between Classification and Localization for Weakly Supervised Object Localization."

ArXiv | CVF Open Access

This code is heavily borrowed from Evaluating Weakly Supervised Object Localization Methods Right.

This code is only allowed for non-commercial use.

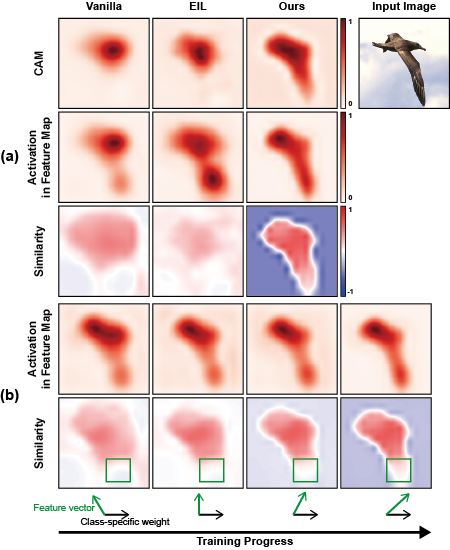

Weakly supervised object localization aims to find a target object region in a given image with only weak supervision, such as image-level labels. Most existing methods use a class activation map (CAM) to generate a localization map; however, a CAM identifies only the most discriminative parts of a target object rather than the entire object region. In this work, we find the gap between classification and localization in terms of the misalignment of the directions between an input feature and a class-specific weight. We demonstrate that the misalignment suppresses the activation of CAM in areas that are less discriminative but belong to the target object. To bridge the gap, we propose a method to align feature directions with a class-specific weight. The proposed method achieves a state-of-the-art localization performance on the CUB-200-2011 and ImageNet-1K benchmarks.

Fig.1 - (a) Examples of CAM and decomposed terms from the classifier trained with the vanilla method and with EIL. (b) Visualization of the changes of CAM and decomposed terms as training with our method progresses.
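The "decomposed terms" in the figure can be illustrated with a small sketch. It relies only on the identity that a dot product equals the product of the two norms and the cosine of the angle between the vectors, so a CAM value at one location splits into a feature norm, a weight norm, and a cosine similarity. The function name `decompose_cam`, the plain-list data layout, and the omission of a bias term are assumptions made for illustration; this is not the repository's implementation, and the paper's exact formulation may differ.

```python
import math


def decompose_cam(features, weight):
    """Split each CAM value into norm and direction terms.

    features: list of feature vectors, one per spatial location (hypothetical layout).
    weight:   class-specific weight vector of the same length.
    """
    w_norm = math.sqrt(sum(w * w for w in weight))
    terms = []
    for f in features:
        cam = sum(a * b for a, b in zip(f, weight))  # CAM value: dot product w . f
        f_norm = math.sqrt(sum(a * a for a in f))
        # The cosine is undefined for a zero vector; report 0.0 in that case.
        cosine = cam / (f_norm * w_norm) if f_norm > 0 and w_norm > 0 else 0.0
        terms.append(
            {"cam": cam, "feature_norm": f_norm, "weight_norm": w_norm, "cosine": cosine}
        )
    return terms


if __name__ == "__main__":
    # Two toy locations: one roughly aligned with the weight, one orthogonal to it.
    for t in decompose_cam([[3.0, 4.0], [0.0, 2.0]], [1.0, 0.0]):
        print(t)
```

In this toy input the second location has a non-zero feature norm but a cosine of zero, so its CAM value vanishes. This is the kind of suppression through misaligned directions that the abstract describes.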

python == 3.8

pytorch == 1.7.1 or 1.9.0

matplotlib == 3.4.3

opencv-python == 4.5.4.58

munch == 2.5.0

pyyaml == 5.4.1

tqdm == 4.62.3
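To check an environment against the versions listed above, a small script along these lines may help. It is a sketch, not part of the repository: `REQUIRED`, `installed_version`, and `find_mismatches` are hypothetical names, and it assumes the packages were installed with pip under the distribution names `torch` and `PyYAML`. It uses `importlib.metadata`, which is in the standard library from Python 3.8.

```python
from importlib import metadata

# Accepted versions, taken from the requirement list above.
REQUIRED = {
    "torch": ("1.7.1", "1.9.0"),
    "matplotlib": ("3.4.3",),
    "opencv-python": ("4.5.4.58",),
    "munch": ("2.5.0",),
    "PyYAML": ("5.4.1",),
    "tqdm": ("4.62.3",),
}


def installed_version(name):
    """Return the installed version string of a distribution, or None if absent."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None


def find_mismatches(required, get_version=installed_version):
    """Map each package whose version is missing or unexpected to what was found."""
    mismatches = {}
    for name, accepted in required.items():
        found = get_version(name)
        # Some builds carry a local suffix such as "+cu111"; compare without it.
        base = found.split("+")[0] if found else None
        if base not in accepted:
            mismatches[name] = found
    return mismatches


if __name__ == "__main__":
    for name, found in find_mismatches(REQUIRED).items():
        print(f"{name}: found {found}, expected one of {REQUIRED[name]}")
```

The script prints nothing when every listed package matches. The Python version itself is not checked here.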

For the dataset, please refer to the page. We use the 'train-fullsup' split that the work provides as the validation set. Place the dataset in the folder 'dataset'; otherwise, change 'data_root' in the config file to point to its location.

We do not include the metadata due to the capacity limit. Please copy the files in the "metadata" folder from the page into the local "metadata" folder.
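If the dataset lives elsewhere, the 'data_root' entry can also be changed programmatically. The sketch below edits the config as plain text, using only the standard library. It assumes the YAML file contains a single-line `data_root: <path>` entry, which is a guess about the config layout; the helper name `set_data_root`, the `epochs` key, and the example path are hypothetical.

```python
import re

# Matches a single-line "data_root: value" entry, keeping any indentation.
_DATA_ROOT = re.compile(r"^([ \t]*data_root[ \t]*:[ \t]*).*$", flags=re.MULTILINE)


def set_data_root(config_text, new_root):
    """Return config_text with the value of 'data_root' replaced by new_root."""
    # A function replacement avoids backslash-escape issues in paths.
    new_text, count = _DATA_ROOT.subn(lambda m: m.group(1) + new_root, config_text)
    if count == 0:
        raise KeyError("no 'data_root' entry found in the config text")
    return new_text


if __name__ == "__main__":
    # Hypothetical config content, for illustration only.
    example = "data_root: dataset\nepochs: 50\n"
    print(set_data_root(example, "/path/to/dataset"))
```

Editing the file by hand is just as valid. A text-level edit like this leaves the other lines of the config untouched.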

An example command line for the train+eval:

python main.py --config ./configs/config_exp.yaml --gpu {gpu_num}

After training, it will automatically evaluate the trained model on the test set.

An example command line only for the test:

python main.py --config {log_folder}/config_exp.yaml --gpu {gpu_num} --only_eval

If you want to save the CAMs, please add "--save_cam".