Cyclic learning rate schedules:

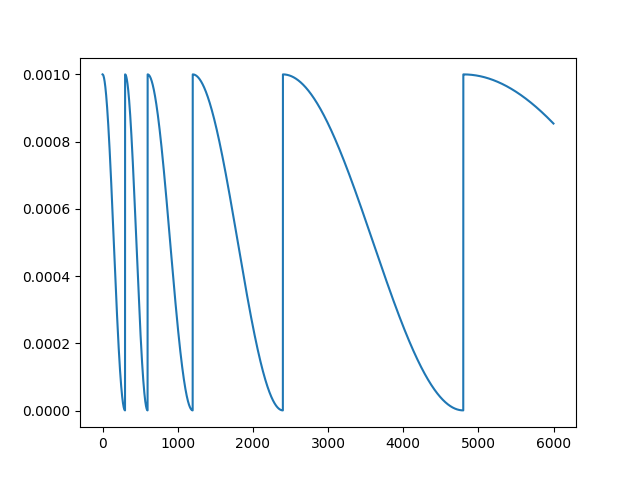

- cyclic cosine annealing - CyclicCosAnnealingLR()

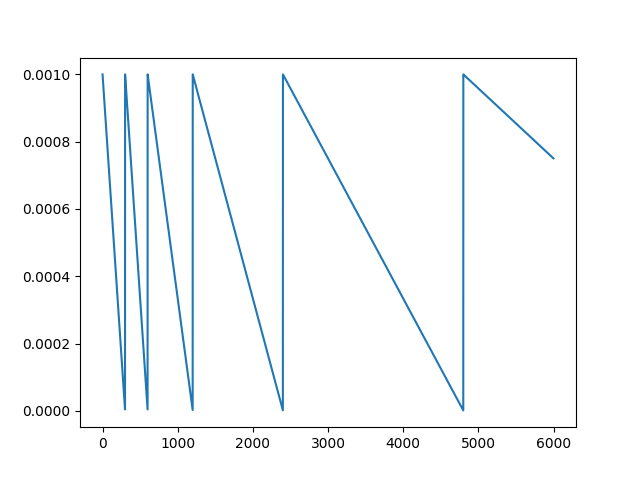

- cyclic linear decay - CyclicLinearLR()
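To make the two schedules concrete, here is a minimal, framework-free sketch of how a learning rate could be computed from `milestones` and `eta_min`. It assumes that each cycle runs from one milestone to the next, starts at the optimizer's base learning rate, decays to `eta_min` (cosine or linear), and that the rate stays at `eta_min` after the last milestone. The function name `cyclic_lr` and its `shape` argument are hypothetical, and this is not necessarily how `cyclicLR` implements the schedules internally.

```python
import math
from bisect import bisect_right


def cyclic_lr(epoch, base_lr, milestones, eta_min=1e-6, shape="cos"):
    """Learning rate at `epoch` for a cyclic schedule (illustrative sketch).

    Assumed semantics: each cycle spans [previous milestone, next milestone),
    starting at base_lr and decaying towards eta_min; after the last
    milestone the rate is held at eta_min.
    """
    if epoch >= milestones[-1]:
        return eta_min
    idx = bisect_right(milestones, epoch)         # index of the current cycle
    start = 0 if idx == 0 else milestones[idx - 1]
    width = milestones[idx] - start               # cycle length in steps
    progress = float(epoch - start) / width       # fraction of cycle done, in [0, 1)
    if shape == "cos":
        factor = 0.5 * (1 + math.cos(math.pi * progress))  # cosine annealing
    elif shape == "linear":
        factor = 1 - progress                                # linear decay
    else:
        raise ValueError("unknown shape: %r" % (shape,))
    return eta_min + (base_lr - eta_min) * factor
```

For example, `[cyclic_lr(e, 1e-3, [30, 80]) for e in range(100)]` traces two decaying cycles (epochs 0-29 and 30-79), each restarting at `1e-3`, followed by a flat tail at `eta_min`.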

Requirements:

- numpy

- Python >= 2.7

- PyTorch >= 0.4.0

Reference: SGDR: Stochastic Gradient Descent with Warm Restarts (Loshchilov and Hutter, ICLR 2017)
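For reference, the SGDR paper anneals the learning rate within restart cycle $i$ as shown below, where $T_{cur}$ counts epochs since the last restart and $T_i$ is the length of cycle $i$. The cyclic cosine annealing schedule (`CyclicCosAnnealingLR`) appears to follow this rule, with `eta_min` playing the role of $\eta_{\min}^{i}$ and the milestones marking the restarts; that mapping is an assumption about this repository, not something it states.

$$
\eta_t = \eta_{\min}^{i} + \frac{1}{2}\left(\eta_{\max}^{i} - \eta_{\min}^{i}\right)\left(1 + \cos\left(\frac{T_{cur}}{T_i}\pi\right)\right)
$$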

Sample usage (`CyclicLinearLR` is used the same way):

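```python
import torch
from cyclicLR import CyclicCosAnnealingLR

model = torch.nn.Linear(10, 1)  # placeholder; use your own torch.nn.Module
optimizer = torch.optim.SGD(model.parameters(), lr=1e-3)
scheduler = CyclicCosAnnealingLR(optimizer, milestones=[30, 80], eta_min=1e-6)

for epoch in range(100):
    train(...)     # your training loop for one epoch (calls optimizer.step())
    validate(...)  # your validation loop
    # With PyTorch >= 1.1, scheduler.step() should come after the optimizer updates.
    scheduler.step()
```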

Note: in the sample above, `scheduler.step()` is called once per epoch. It can also be called once per batch; in that case, specify `milestones` in number of batches rather than number of epochs.
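When stepping once per batch, epoch-based milestones can be rescaled by the number of batches per epoch. A small helper for that conversion might look like the sketch below; `epochs_to_batch_milestones` and `batches_per_epoch` are hypothetical names, not part of `cyclicLR` (with a PyTorch `DataLoader`, `len(train_loader)` gives the number of batches per epoch).

```python
def epochs_to_batch_milestones(milestones, batches_per_epoch):
    """Convert epoch-based milestones to batch-based ones (hypothetical helper)."""
    if batches_per_epoch <= 0:
        raise ValueError("batches_per_epoch must be positive")
    # A milestone at epoch m is reached after m full epochs' worth of batches.
    return [m * batches_per_epoch for m in milestones]


# Example: with 500 batches per epoch, epoch milestones [30, 80]
# become batch milestones [15000, 40000].
print(epochs_to_batch_milestones([30, 80], 500))
```

The converted list would then be passed as `milestones=` when constructing the scheduler, with `scheduler.step()` moved inside the batch loop.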