To do:

- Comment on the results

- Try other sentence embedding models or transformer models

Wikinews and CNN passage datasets, with code to train a segmenter model that splits continuous text into passages.

| Table of contents |
|---|
| Dataset |
| Task |
| My Model |
| Results |
| Getting started |
| License |

The dataset is composed of 18997 Wikinews articles segmented into passages as written by the author of each article. All the data is in the wikinews.data.jsonl file (download). The data/ folder contains scripts to convert the .jsonl file into train and test files.

The wikinews.data.jsonl file contains one article per line. Each article is stored in JSON format:

{"title": "Title of the article", "date": "Weekday, Month Day, Year", "passages": ["Passage 1.", "Passage 2.", ...]}
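Because each line is a standalone JSON object, the file can be read line by line without loading it all at once. The following is a minimal sketch, not code from this repository; the function name `read_articles` is hypothetical.

```python
import json
from typing import Iterator


def read_articles(path: str) -> Iterator[dict]:
    """Yield one article dict per non-empty line of a .jsonl file."""
    with open(path, encoding="utf-8") as handle:
        for line in handle:
            line = line.strip()
            if line:  # tolerate blank lines between records
                yield json.loads(line)


# Usage (assumes the file has been downloaded to data/):
# for article in read_articles("data/wikinews.data.jsonl"):
#     print(article["title"], len(article["passages"]))
```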

You can create your own wikinews.data.jsonl file by running the following command:

cd data/

python create_data.py --num 40000 \
    --output "wikinews.data.jsonl"

Remark: --num is the number of Wikinews articles to use. The final number of articles is lower than this number because some articles are deprecated.

To make training easier, I recommend converting the wikinews.data.jsonl file into train.txt, test.data.txt and test.gold.txt by running the following command:

cd data/

python create_train_test_data.py --input "wikinews.data.jsonl" \
    --train_output "train.txt" \
    --test_data_output "test.data.txt" \
    --test_gold_output "test.gold.txt"

Remark: 80/20 training/test split

These files contain one sentence per line with the corresponding label (1 if the sentence is the beginning of a passage, 0 otherwise). The passages are segmented into sentences using sent_tokenize from the nltk library. Articles are separated by \n\n. Sentences are separated by \n. Elements of a sentence are separated by \t.

Article 1

Sentence 1 Text of the sentence 1. label

Sentence 2 Text of the sentence 2. label

...

Article 2

Sentence 1 Text of the sentence 1. label

Sentence 2 Text of the sentence 2. label

...

...
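The labelling rule described above (1 for the first sentence of a passage, 0 otherwise) can be sketched as follows. This is an illustration, not the code of create_train_test_data.py. Two assumptions are made: `naive_sent_split` is a simple regex stand-in for nltk's sent_tokenize so the example runs without extra downloads, and the first field of each line is taken to be a 1-based sentence number.

```python
import re
from typing import Callable, List, Tuple


def naive_sent_split(text: str) -> List[str]:
    """Stand-in splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def label_sentences(
    passages: List[str],
    split: Callable[[str], List[str]] = naive_sent_split,
) -> List[Tuple[str, int]]:
    """Return (sentence, label) pairs; label is 1 for a passage's first sentence."""
    pairs = []
    for passage in passages:
        for index, sentence in enumerate(split(passage)):
            pairs.append((sentence, 1 if index == 0 else 0))
    return pairs


def to_lines(pairs: List[Tuple[str, int]]) -> List[str]:
    """Format pairs as tab-separated lines: number, text, label (assumed layout)."""
    return [f"{i}\t{text}\t{label}" for i, (text, label) in enumerate(pairs, start=1)]
```

To use nltk instead, pass `split=nltk.tokenize.sent_tokenize` to `label_sentences`.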

train.txt contains all elements of sentences.

test.data.txt does not contain the label element of sentences.

test.gold.txt does not contain the text element of sentences.
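Reading train.txt back follows directly from the separators listed above. A minimal sketch, assuming each sentence line carries three tab-separated fields with the text second to last and the label last; lines with fewer fields (for example an article header, if present) are skipped. The function name is hypothetical.

```python
from typing import List, Tuple


def parse_labelled_text(content: str) -> List[List[Tuple[str, int]]]:
    """Parse train.txt-style content into a list of articles.

    Each article is a list of (sentence text, label) pairs.
    """
    articles = []
    for block in content.strip().split("\n\n"):  # articles are separated by \n\n
        sentences = []
        for line in block.split("\n"):  # sentences are separated by \n
            fields = line.split("\t")  # elements are separated by \t
            if len(fields) < 3:
                continue  # not a full sentence line
            sentences.append((fields[-2], int(fields[-1])))
        if sentences:
            articles.append(sentences)
    return articles
```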

The dataset is composed of more than 93000 CNN news articles segmented into passages as written by the author of each article. Stories are from the DeepMind Q&A dataset. All the data is in the cnn.data.jsonl file (download). The data/ folder contains scripts to convert the .jsonl file into train and test files.

The cnn.data.jsonl file contains one article per line. Each article is stored in JSON format:

{"title": "", "date": "", "passages": ["Passage 1.", "Passage 2.", ...]}

Remark: The stories in the DeepMind Q&A dataset have no title or date, so the "title" and "date" fields are always empty. I keep them so that the format matches wikinews.data.jsonl.

To create cnn.data.jsonl, first download all the stories here and extract the archive in the data/ folder. Then run:

cd data/

python create_data_cnn.py --directory path/to/the/stories/directory \
    --output "cnn.data.jsonl"

To create the train and test files, use the same command as for Wikinews, but pass cnn.data.jsonl as --input.

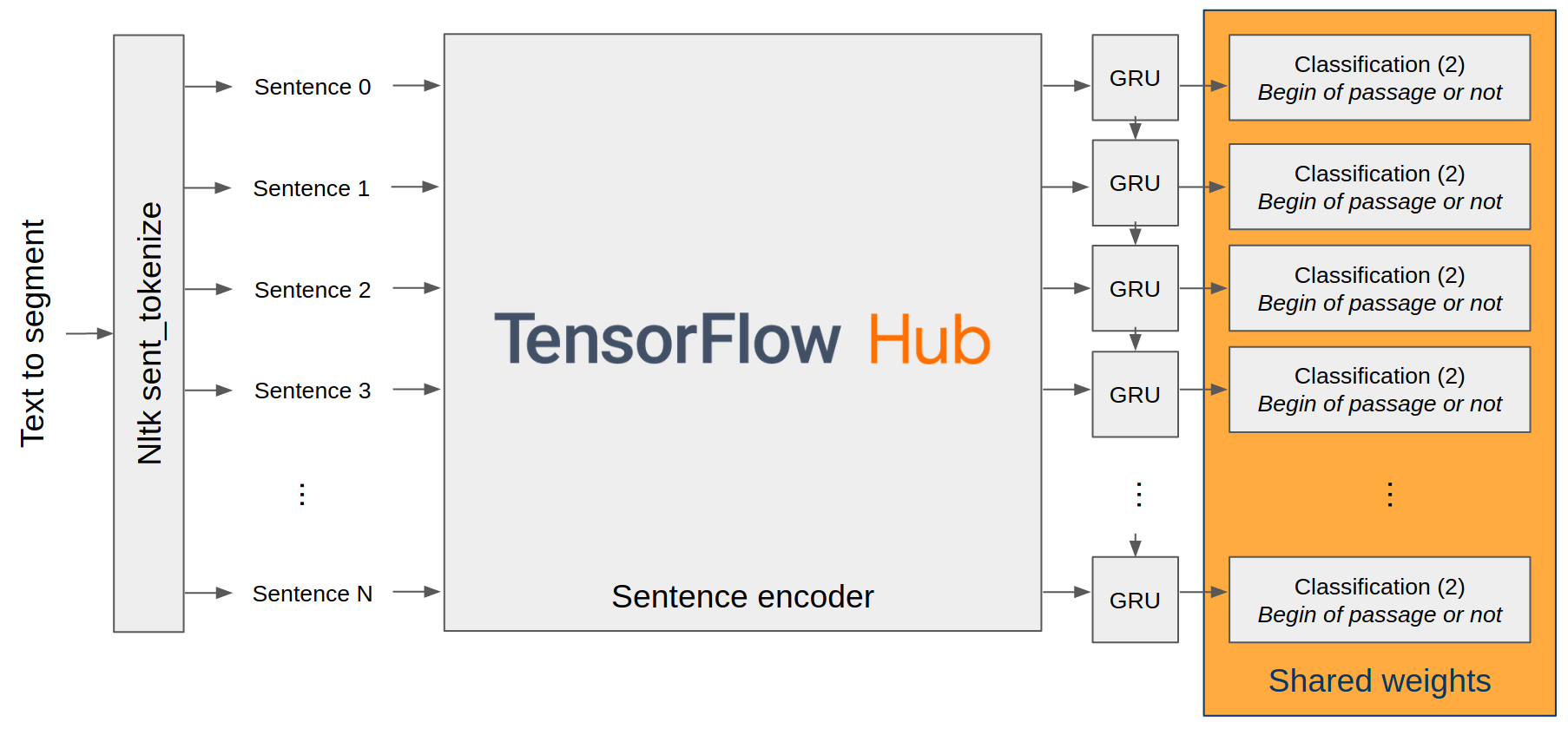

Given a continuous text composed of sentences S1, S2, S3, ..., the segmenter model has to find which sentences begin a passage. Its output is therefore a sequence of passages P1, P2, P3, ... made up of the same sentences in the same order.

The objective is for each passage to contain one piece of information. In the best case, passages are self-contained and do not require external context to be understood.
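Once each sentence has a 0/1 boundary label, rebuilding the passages is a simple grouping step. A minimal sketch with a hypothetical function name; it assumes that a leading sentence labelled 0 still opens the first passage.

```python
from typing import List, Sequence


def group_into_passages(sentences: Sequence[str], labels: Sequence[int]) -> List[str]:
    """Join sentences into passages; a label of 1 starts a new passage."""
    if len(sentences) != len(labels):
        raise ValueError("sentences and labels must have the same length")
    passages: List[List[str]] = []
    for sentence, label in zip(sentences, labels):
        if label == 1 or not passages:
            passages.append([sentence])  # open a new passage
        else:
            passages[-1].append(sentence)  # continue the current passage
    return [" ".join(passage) for passage in passages]
```

For example, sentences S1 to S4 with labels 1, 0, 1, 0 give two passages: "S1 S2" and "S3 S4".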

The model is composed of a sentence encoder (a pre-trained sentence encoder from TF Hub or a transformer from HuggingFace) followed by a recurrent layer (simple or bidirectional) over the sequence of sentences, and then a classification layer.

The results and the model weights were obtained after training with the following parameters:

- learning_rate = 0.001
- batch_size = 12
- epochs = 8
- max_sentences = 64 (maximum number of sentences per article)
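To make the data flow concrete, here is a NumPy sketch of the forward pass for the bidirectional variant: sentence vectors go through a forward and a backward tanh RNN, and a sigmoid layer gives one boundary probability per sentence. This is not the repository's model code. The weights are random and untrained, the sentence embeddings are supplied by the caller in place of a real encoder, and all names and dimensions are assumptions chosen for illustration.

```python
import numpy as np


def simple_rnn(inputs, w_in, w_rec, bias):
    """Run a tanh RNN over the rows of `inputs`; return one state per row."""
    state = np.zeros(w_rec.shape[0])
    states = []
    for vector in inputs:
        state = np.tanh(w_in @ vector + w_rec @ state + bias)
        states.append(state)
    return np.stack(states)


def random_params(embed_dim, hidden_dim, seed=0):
    """Build untrained weights for two RNN directions and the output layer."""
    rng = np.random.default_rng(seed)

    def rnn_weights():
        return (
            rng.normal(scale=0.1, size=(hidden_dim, embed_dim)),
            rng.normal(scale=0.1, size=(hidden_dim, hidden_dim)),
            np.zeros(hidden_dim),
        )

    return {
        "forward": rnn_weights(),
        "backward": rnn_weights(),
        "out_weights": rng.normal(scale=0.1, size=2 * hidden_dim),
        "out_bias": 0.0,
    }


def boundary_probabilities(embeddings, params):
    """Return P(sentence starts a passage) for each row of `embeddings`."""
    forward = simple_rnn(embeddings, *params["forward"])
    # Run the backward direction on the reversed sequence, then restore order.
    backward = simple_rnn(embeddings[::-1], *params["backward"])[::-1]
    features = np.concatenate([forward, backward], axis=1)
    logits = features @ params["out_weights"] + params["out_bias"]
    return 1.0 / (1.0 + np.exp(-logits))
```

Each sentence's prediction depends on its neighbours in both directions, which is the point of placing a recurrent layer between the encoder and the classifier.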

| | Precision | Recall | Fscore | Saved weights |
|---|---|---|---|---|
| wikinews | 0.761 | 0.757 | 0.758 | here |
| cnn | xxx | xxx | xxx | xxx |

| | Precision | Recall | Fscore | Saved weights |
|---|---|---|---|---|
| wikinews | 0.769 | 0.767 | 0.767 | here |
| cnn | 0.781 | 0.783 | 0.782 | here |

| | Precision | Recall | Fscore | Saved weights |
|---|---|---|---|---|
| wikinews | 0.784 | 0.781 | 0.782 | here |
| cnn | 0.786 | 0.790 | 0.788 | here |
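For reference, precision, recall and F-score on boundary labels can be computed as below. This is a sketch under one assumption: scores are computed for the boundary class (label 1) only. This README does not state how the reported figures were averaged, so the function may not reproduce them exactly.

```python
from typing import Sequence, Tuple


def boundary_scores(gold: Sequence[int], predicted: Sequence[int]) -> Tuple[float, float, float]:
    """Return (precision, recall, F-score) for label 1."""
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must have the same length")
    pairs = list(zip(gold, predicted))
    true_pos = sum(1 for g, p in pairs if g == 1 and p == 1)
    false_pos = sum(1 for g, p in pairs if g == 0 and p == 1)
    false_neg = sum(1 for g, p in pairs if g == 1 and p == 0)
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    total = precision + recall
    fscore = 2 * precision * recall / total if total else 0.0
    return precision, recall, fscore
```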

python3 -m venv textsegmentation_env

source textsegmentation_env/bin/activate

pip install -r requirements.txt

You can download the wikinews.data.jsonl file here or the cnn.data.jsonl file here. Otherwise you can recreate the .jsonl file (see above). Then move the file to data/ and run create_train_test_data.py with the correct --input (see above).

python train.py --learning_rate 0.001 \
    --max_sentences 64 \
    --epochs 8 \
    --train_path "data/train.txt"

To see full usage of train.py, run python train.py --help.

Remark: During training the reported accuracy can be very low. This is because the model is not trained on padding sentences, so its predictions for these padding sentences are poor.
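The effect can be illustrated with a small sketch that compares accuracy over all positions with accuracy over real sentences only. This is an illustration, not the metric used by train.py; the helper names, the padding label 0 and the mask convention (1 for a real sentence, 0 for padding) are assumptions.

```python
from typing import List, Optional, Sequence


def pad(values: Sequence[int], length: int, fill: int) -> List[int]:
    """Truncate or right-pad `values` to exactly `length` items."""
    return list(values[:length]) + [fill] * max(0, length - len(values))


def accuracy(gold: Sequence[int], predicted: Sequence[int],
             mask: Optional[Sequence[int]] = None) -> float:
    """Share of matching labels, counting only positions where mask is 1."""
    if mask is None:
        mask = [1] * len(gold)
    kept = [(g, p) for g, p, m in zip(gold, predicted, mask) if m]
    if not kept:
        return 0.0
    return sum(1 for g, p in kept if g == p) / len(kept)


gold = pad([1, 0, 0, 1], 8, fill=0)       # 4 real sentences, 4 padding slots
mask = pad([1, 1, 1, 1], 8, fill=0)       # 1 = real sentence, 0 = padding
predicted = [1, 0, 0, 1, 1, 1, 1, 1]      # arbitrary output on padding slots

print(accuracy(gold, predicted))          # all positions: 0.5
print(accuracy(gold, predicted, mask))    # real sentences only: 1.0
```

In this example the model is perfect on the real sentences, yet the unmasked figure is only 0.5 because the padding positions are counted.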