This repository contains my solution to a data challenge organised at Telecom Paris at the end of a Machine Learning course, before delving deeper into Deep Learning. It includes:

- A Jupyter notebook detailing my classification algorithm and the choices made while developing it

- A presentation of the approach in PDF format, used to explain my reasoning to the whole class at the end of the challenge

- The images used as the dataset for the challenge

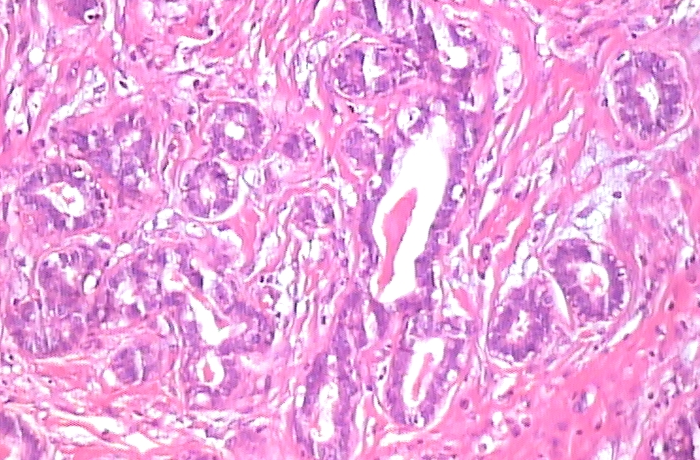

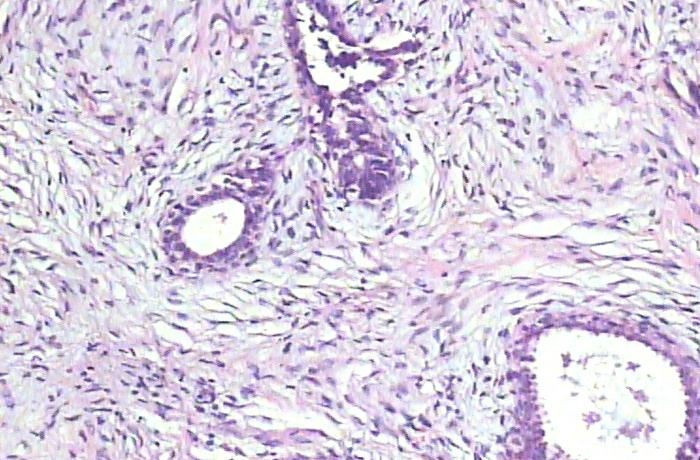

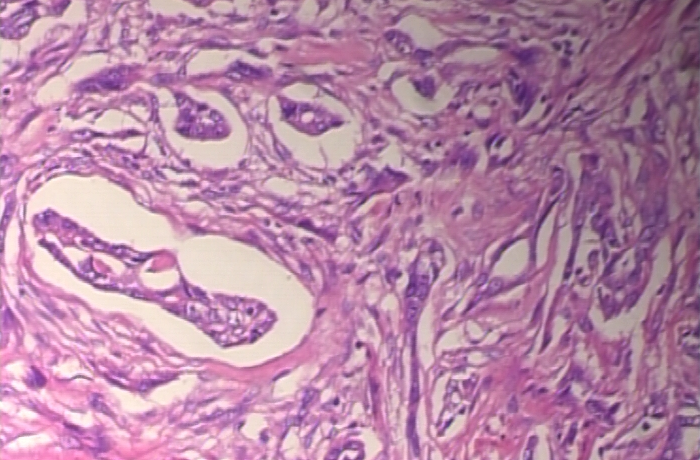

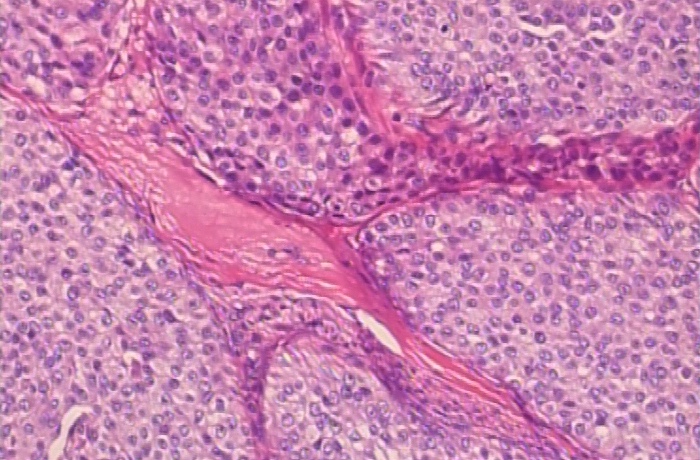

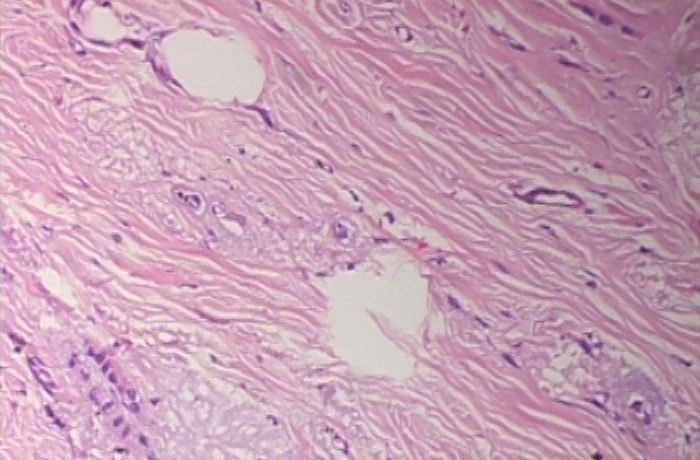

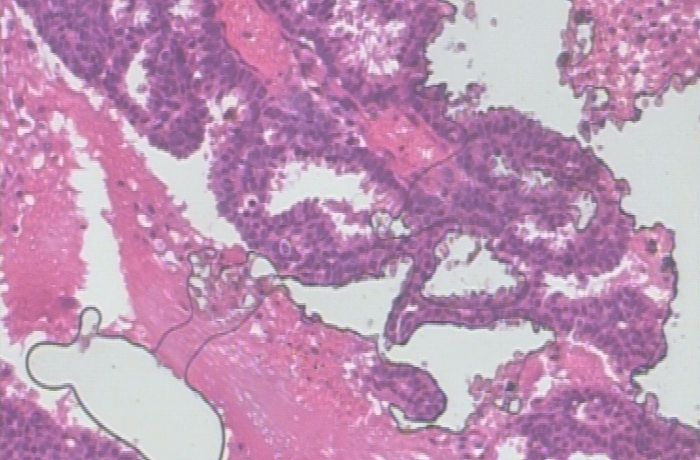

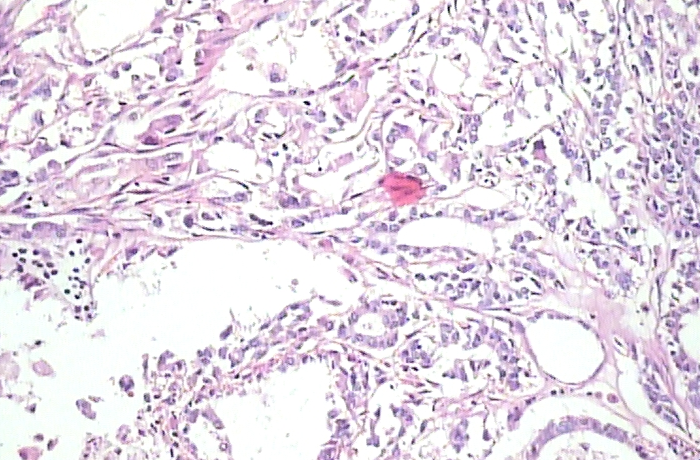

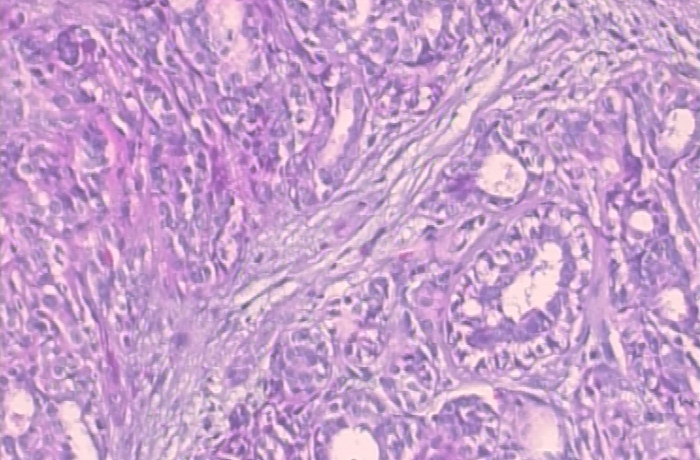

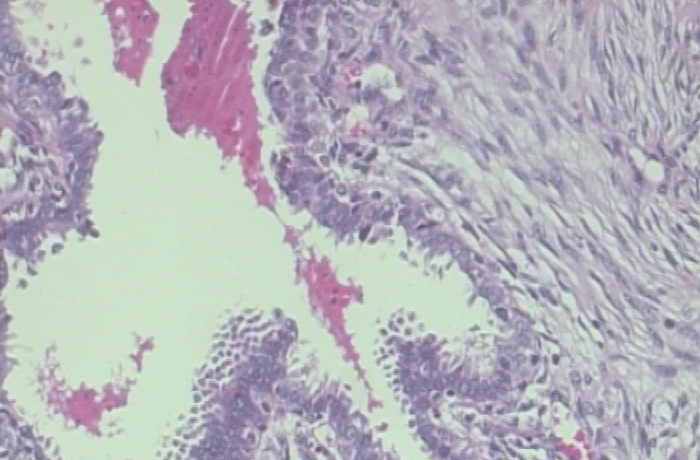

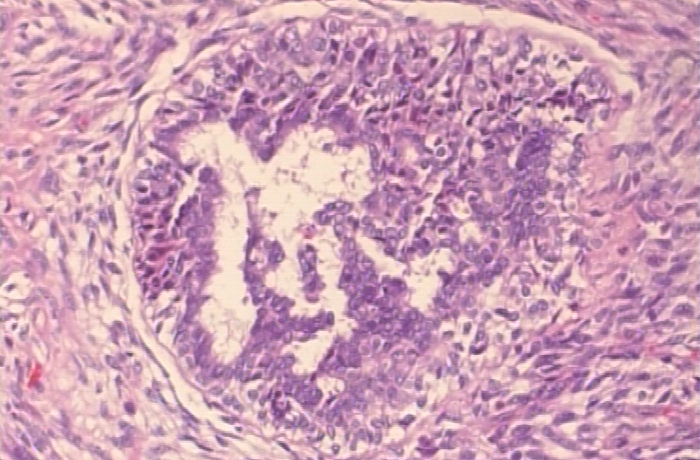

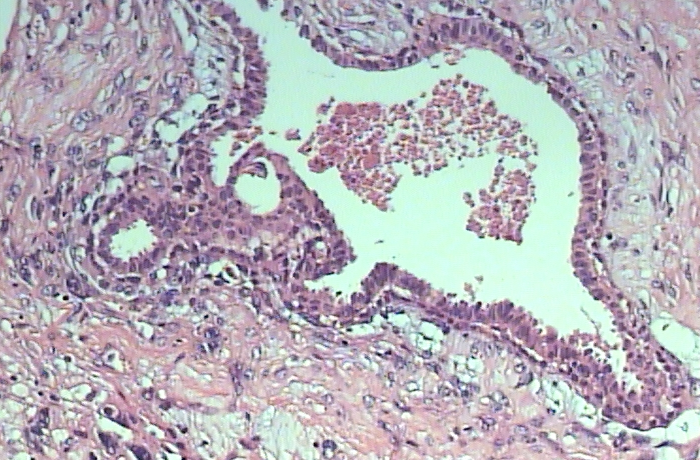

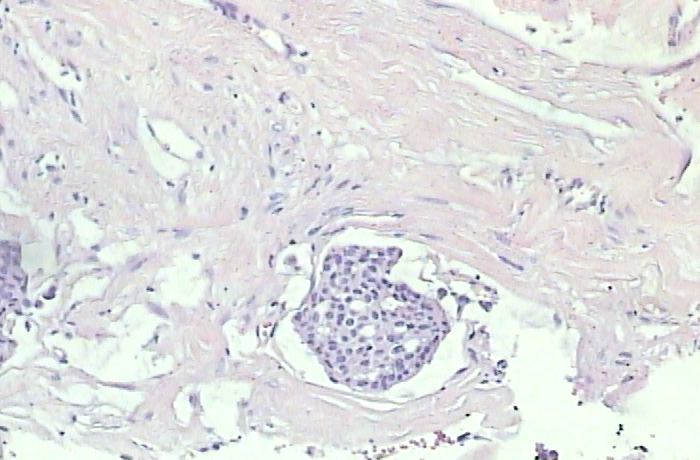

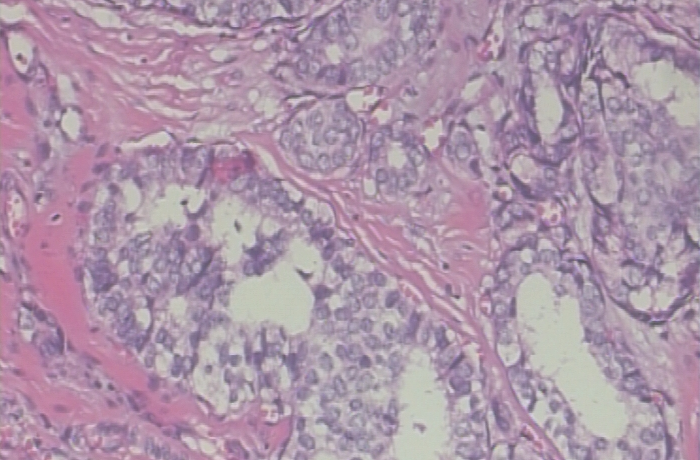

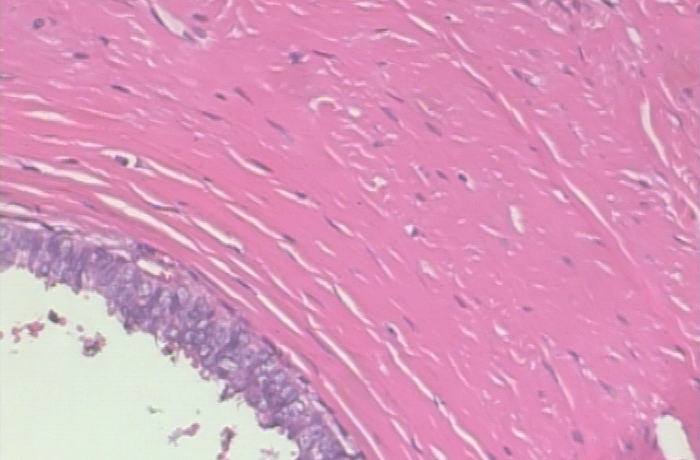

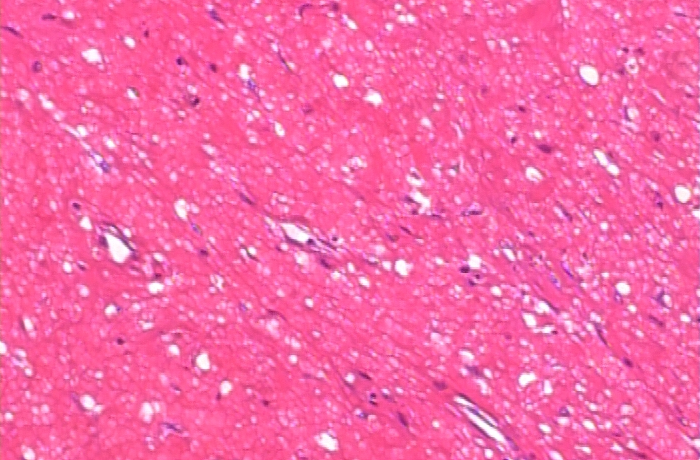

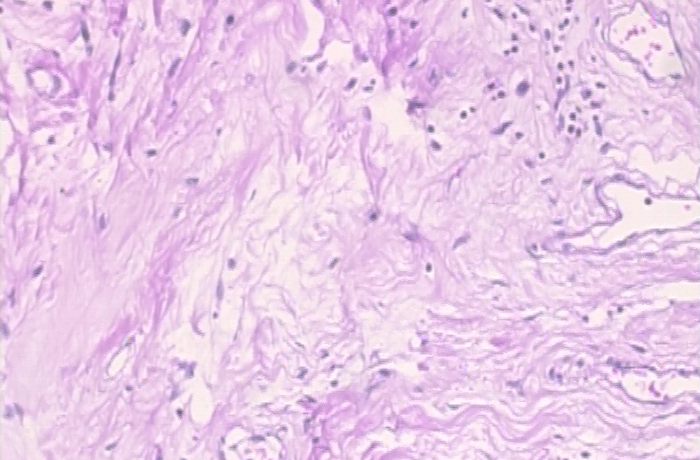

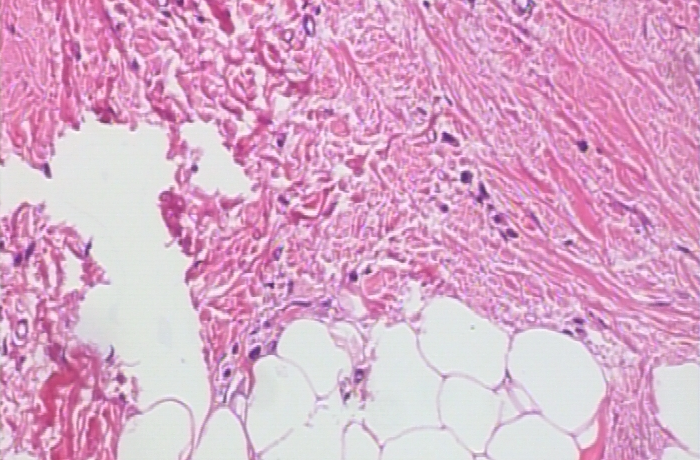

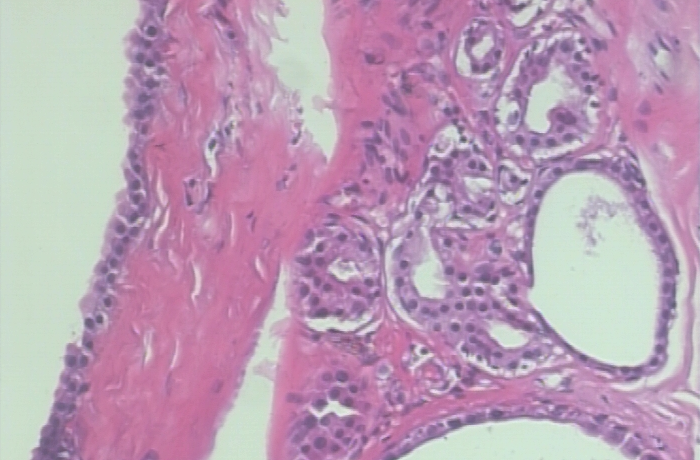

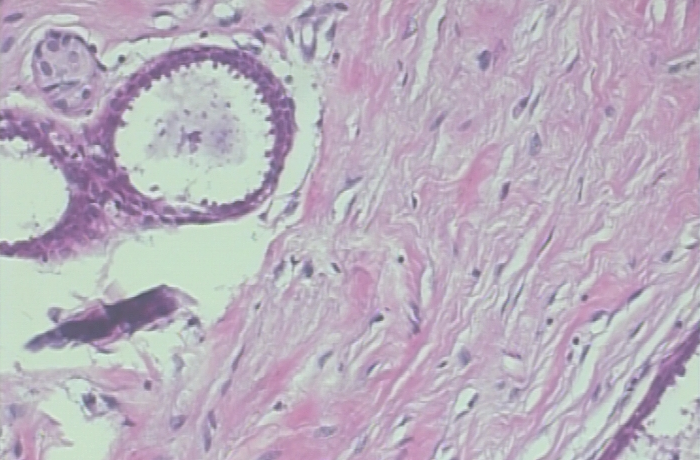

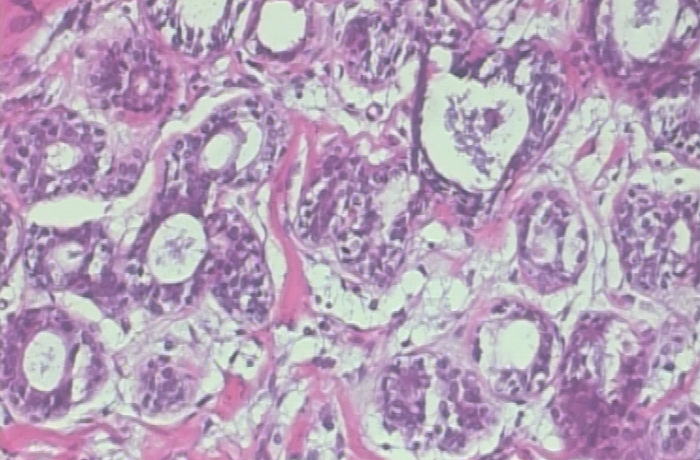

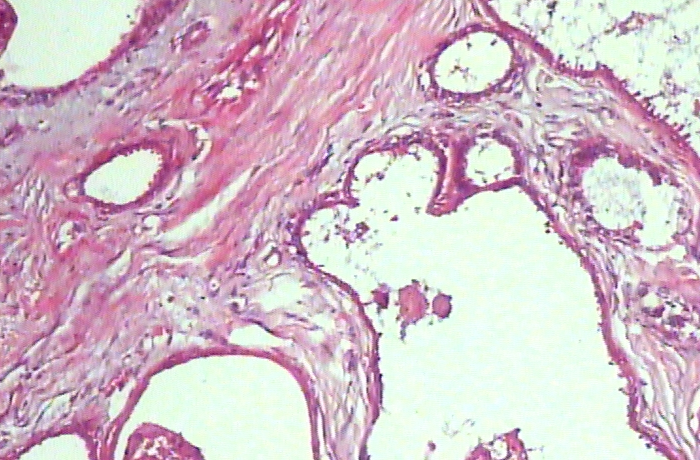

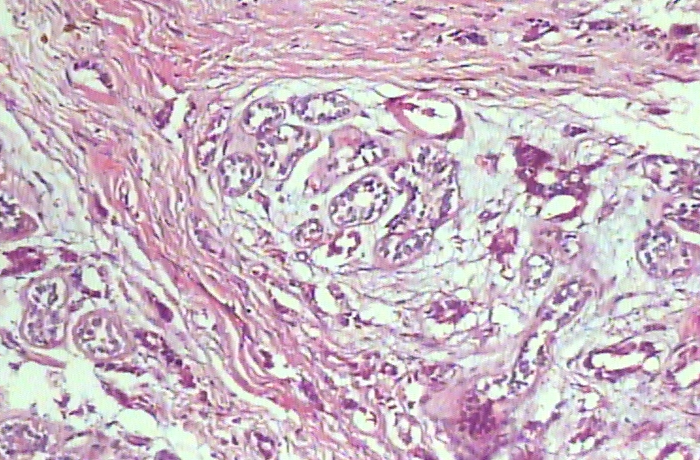

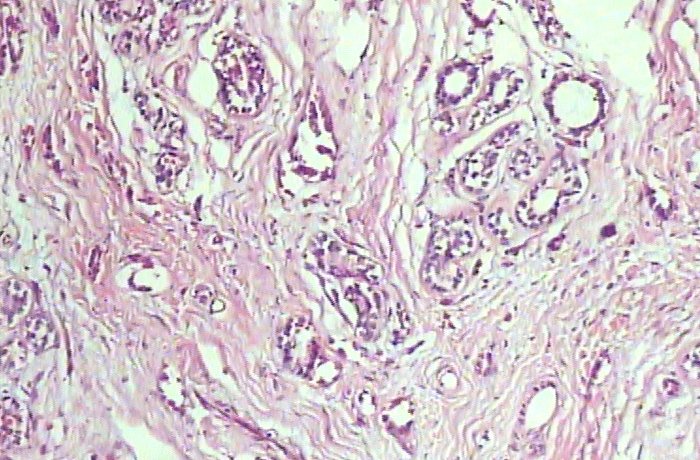

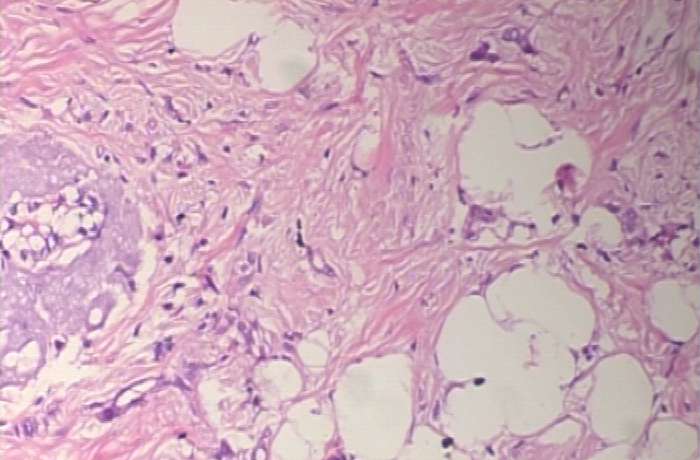

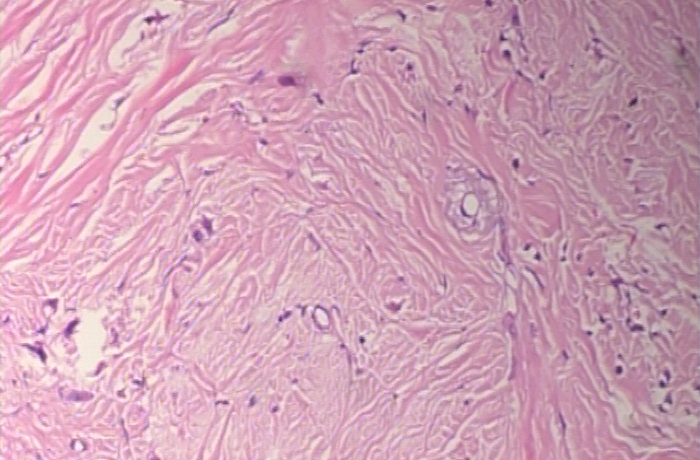

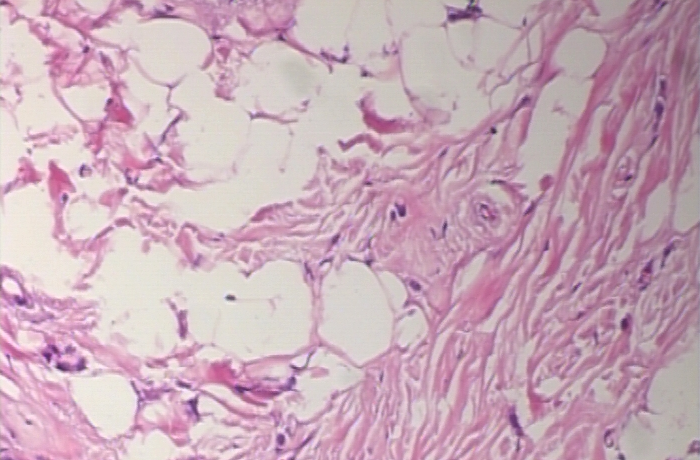

The goal of the project was to classify breast cancer histopathological images into 8 different classes, each identified by a letter code in the image filename. An overview of the classes involved is given in the table below:

All images are extracted from the BreakHis dataset, which is used as a benchmark in many medical imaging competitions, but often only for binary classification (determining whether a tumor is benign or malignant). A histologically benign lesion is one that does not match any criterion of malignancy, e.g. marked cellular atypia, mitosis, disruption of basement membranes or metastasis. Benign tumors are normally relatively “innocent”: they grow slowly and remain localized. Malignant tumor is a synonym for cancer: such a lesion can invade and destroy adjacent structures (locally invasive) and spread to distant sites (metastasize), causing death.

The samples in the dataset were collected using the SOB method, also called partial mastectomy or excisional biopsy. Compared to any needle biopsy method, this procedure removes a larger tissue sample and is performed in a hospital under general anesthesia.

The annotated dataset (Train folder) used in this challenge consists of 422 images randomly extracted from BreakHis, while the set of images to classify (Test folder) contains 207 images.
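Since the class of each Train image is encoded in its filename, the labels can be recovered with a small parser. The sketch below assumes the standard BreakHis naming scheme `SOB_<B|M>_<type>-<year>-<slide>-<magnification>-<sequence>.png`, matching Train folder names such as `SOB_M_DC-14-13412-100-026`; the helper `parse_breakhis_name` is hypothetical and not taken from the notebook. Test images (named e.g. `SOB_18`) do not follow this scheme, so it only applies to the Train folder.

```python
import re
from pathlib import Path

# Assumed BreakHis naming scheme, e.g. "SOB_M_DC-14-13412-100-026.png":
# procedure (SOB), tumor class (B = benign, M = malignant), tumor type code,
# year, slide id, magnification factor, sequence number.
BREAKHIS_PATTERN = re.compile(
    r"^(?P<procedure>[A-Z]+)_(?P<tumor_class>[BM])_(?P<tumor_type>[A-Z]+)"
    r"-(?P<year>\d+)-(?P<slide>[0-9A-Z]+)-(?P<magnification>\d+)-(?P<seq>\d+)$"
)


def parse_breakhis_name(filename: str) -> dict:
    """Hypothetical helper: split a BreakHis-style filename into its fields."""
    stem = Path(filename).stem  # drop any directory and the ".png" extension
    match = BREAKHIS_PATTERN.match(stem)
    if match is None:
        raise ValueError(f"not a BreakHis-style filename: {filename!r}")
    fields = match.groupdict()
    fields["magnification"] = int(fields["magnification"])
    return fields


info = parse_breakhis_name("Train/SOB_M_DC-14-13412-100-026.png")
print(info["tumor_type"], info["slide"], info["magnification"])  # DC 13412 100
```

The `tumor_type` field gives the 8-class label, while `tumor_class` only gives the benign/malignant split commonly used in binary benchmarks.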

The images are 700x456 or 700x460 pixels, in RGB format.

The metric used to rank submissions in this data challenge was the F1-score, which gives equal weight to precision and recall. The accuracy of the submitted classifiers was also displayed, but not used for ranking.
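For a single class, the F1-score is the harmonic mean of precision and recall, $F_1 = 2PR/(P+R)$. The README does not state how the per-class scores were combined over the 8 classes, so the sketch below assumes macro averaging (an unweighted mean over classes, as computed by scikit-learn's `f1_score(..., average="macro")`). The toy labels are illustrative only.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (macro averaging, assumed)."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == label and p == label for t, p in pairs)  # correctly predicted
        fp = sum(t != label and p == label for t, p in pairs)  # wrongly predicted as label
        fn = sum(t == label and p != label for t, p in pairs)  # label missed
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        scores.append(2 * precision * recall / denom if denom else 0.0)
    return sum(scores) / len(scores)


y_true = ["DC", "DC", "LC", "A"]
y_pred = ["DC", "LC", "LC", "A"]
print(macro_f1(y_true, y_pred))  # 7/9 ≈ 0.778, while accuracy is 3/4
```

Because every class weighs the same under macro averaging, errors on rare classes cost as much as errors on frequent ones, which is why class imbalance matters for this metric.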

Three main difficulties had to be addressed during this data challenge:

- Small size of the dataset

The state of the art in histopathological image classification currently relies on Deep Learning methods, which require a considerable number of images to train from scratch. The small number of training images made this kind of approach unreasonable, so I instead opted for more traditional image classification techniques based on feature extraction and classical machine learning (e.g. SVM, Random Forest, Boosting, Logistic Regression). The small number of images could also be leveraged by using more computationally intensive but more rigorous cross-validation methods, such as Leave-One-Out.

- Class imbalance

As can be seen in the following graph, the distribution of images across classes is heavily imbalanced.

This can bias the model towards the more represented classes, and make it harder to learn general features for the least represented classes (LC and TA in this case).
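The first two difficulties can both be handled at evaluation and training time with scikit-learn. The sketch below assumes the features have already been extracted into a matrix `X` with labels `y`; synthetic, imbalanced data stands in for them here. Leave-One-Out trains one model per sample, and `class_weight="balanced"` is shown as one common way to counter imbalance; the README does not say whether the notebook used class weighting.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import f1_score
from sklearn.model_selection import LeaveOneOut, cross_val_predict
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic, imbalanced stand-in for the extracted features (NOT the challenge data).
X, y = make_classification(
    n_samples=80, n_features=20, n_informative=8, n_classes=4,
    weights=[0.5, 0.3, 0.15, 0.05], random_state=0,
)

# class_weight="balanced" scales C per class by n_samples / (n_classes * n_class_samples),
# so misclassifying a rare class costs more than misclassifying a frequent one.
model = make_pipeline(StandardScaler(), SVC(C=6, class_weight="balanced"))

# Leave-One-Out: each sample is predicted by a model trained on all the others.
y_pred = cross_val_predict(model, X, y, cv=LeaveOneOut())
score = f1_score(y, y_pred, average="macro")
print("LOO macro F1:", score)
```

With a few hundred images, fitting one SVM per sample remains cheap, and every image is used both for training and for evaluation.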

- Multi-label images

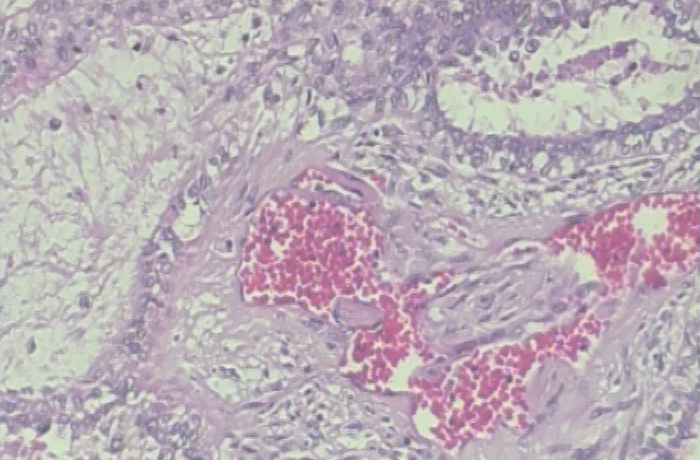

From the images alone, identifying the dataset they had been extracted from was not difficult. However, the annotations for each image were made by experts who have access to more than just the histopathological images, and who know that several images actually come from the same slice of the same patient, information that is not available for the test set. Finally, several types of tumor may be present in a single image. For all these reasons, many images can legitimately be assigned to several classes. Such cases are especially frequent for classes DC (Ductal Carcinoma) and LC (Lobular Carcinoma), as shown in the following examples, where the same image was found in the Train folder, in the Test folder, and in the BreakHis dataset under a name different from the one used in the Train folder:

| Image | Name in the Test folder | Alias name in the BreakHis dataset | Name in the Train folder | Possible classes |
|---|---|---|---|---|
| | SOB_18 | SOB_M_LC-14-13412-100-026 | SOB_M_DC-14-13412-100-026 | LC or DC |
| | SOB_28 | SOB_M_LC-14-13412-100-025 | SOB_M_DC-14-13412-100-025 | LC or DC |
| | SOB_29 | SOB_M_LC-14-13412-100-001 | SOB_M_DC-14-13412-100-001 | LC or DC |

Even with a perfect classifier, obtaining a perfect score therefore partly depends on luck!

I obtained my best score with the following classifier :

- SVM classifier with a Tanimoto kernel (implementation found here), using a regularization parameter C=6

- 7 feature extractors:

  - Parameter-Free Threshold Adjacency Statistics (PFTAS)

  - Channel color statistics (mean, standard deviation, skewness, kurtosis)

  - Hu moments

  - Haralick features

  - 11-bit HSV color histogram

  - Local Binary Patterns (LBP), with a radius of 9 pixels and 72 points

  - SIFT descriptors, encoded with a Bag of Words of 300 centroids
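The Tanimoto kernel between two feature vectors is $K(x, y) = \langle x, y\rangle / (\lVert x\rVert^2 + \lVert y\rVert^2 - \langle x, y\rangle)$. The README relies on an external implementation, so the sketch below is an independent illustration, assuming scikit-learn's `SVC`, which accepts a callable kernel returning the Gram matrix between two sets of samples. Only `C=6` comes from the text; the random non-negative data stands in for the concatenated features.

```python
import numpy as np
from sklearn.svm import SVC


def tanimoto_kernel(A: np.ndarray, B: np.ndarray) -> np.ndarray:
    """Gram matrix K[i, j] = <a_i, b_j> / (|a_i|^2 + |b_j|^2 - <a_i, b_j>)."""
    A = np.asarray(A, dtype=float)
    B = np.asarray(B, dtype=float)
    dot = A @ B.T                                # pairwise inner products
    sq_a = np.sum(A * A, axis=1)[:, None]        # squared norms of rows of A
    sq_b = np.sum(B * B, axis=1)[None, :]        # squared norms of rows of B
    denom = sq_a + sq_b - dot                    # >= 0, zero only if both vectors are 0
    # Return 0 instead of 0/0 when both vectors are all zeros.
    return np.divide(dot, denom, out=np.zeros_like(dot), where=denom > 0)


# Stand-in for concatenated, non-negative feature vectors (NOT the challenge data).
rng = np.random.default_rng(0)
X_train = rng.random((60, 30))
y_train = (X_train[:, 0] > 0.5).astype(int)

clf = SVC(kernel=tanimoto_kernel, C=6).fit(X_train, y_train)
predictions = clf.predict(X_train[:5])
```

Any non-zero vector has a similarity of exactly 1 with itself, and the kernel depends on the vectors' overlap relative to their norms, which suits histogram-like features such as those listed above.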

The theory behind each feature is explained in the PDF presentation, and the choice of parameters for each feature is detailed in the Jupyter notebook.
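As an illustration of two of the simpler extractors, the sketch below computes the per-channel color statistics and a Bag of (visual) Words histogram. It assumes SIFT descriptors have already been computed for each image (for instance with OpenCV's `cv2.SIFT_create().detectAndCompute`) and uses scikit-learn's k-means to build the vocabulary. The helper names are hypothetical, and the notebook's exact choices (normalization, k-means settings) may differ.

```python
import numpy as np
from scipy.stats import kurtosis, skew
from sklearn.cluster import KMeans


def color_statistics(image: np.ndarray) -> np.ndarray:
    """Mean, std, skewness and excess kurtosis of each channel of an HxWxC image."""
    pixels = image.reshape(-1, image.shape[-1]).astype(float)  # one row per pixel
    return np.concatenate([
        pixels.mean(axis=0),
        pixels.std(axis=0),
        skew(pixels, axis=0),
        kurtosis(pixels, axis=0),  # Fisher definition (scipy default): 0 for a Gaussian
    ])


def fit_vocabulary(descriptor_sets, n_words=300, seed=0) -> KMeans:
    """Cluster the descriptors of all training images into n_words visual words."""
    all_descriptors = np.vstack(descriptor_sets)
    return KMeans(n_clusters=n_words, n_init=10, random_state=seed).fit(all_descriptors)


def bag_of_words(descriptors: np.ndarray, vocabulary: KMeans) -> np.ndarray:
    """Normalized histogram of the nearest visual word of each descriptor."""
    n_words = vocabulary.n_clusters
    if len(descriptors) == 0:  # image without keypoints
        return np.zeros(n_words)
    words = vocabulary.predict(descriptors)
    hist = np.bincount(words, minlength=n_words).astype(float)
    return hist / hist.sum()


# Synthetic stand-ins (NOT the challenge data).
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(460, 700, 3), dtype=np.uint8)
stats = color_statistics(image)  # shape (12,): 4 statistics x 3 channels

train_desc = [rng.random((50, 128)) for _ in range(5)]  # 128-D, like SIFT descriptors
vocab = fit_vocabulary(train_desc, n_words=8)  # 300 words in the README; 8 keeps this fast
bow = bag_of_words(train_desc[0], vocab)
```

Each extractor yields a fixed-length vector per image, so the vectors can simply be concatenated before being fed to the classifier.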

The combination of these features allowed me to rank 1st out of 36 participants within the allotted time, as shown in the screenshot below:

Interestingly, with some effort, Joffrey MA managed to reach a better F1-score of 0.815458990715 after the deadline, by fine-tuning a Swin model pretrained on ImageNet (weights taken from Hugging Face). A deep learning approach was thus reasonable, but it required a pretrained model and considerably more computing resources.

- PFTAS: Nicholas A. Hamilton et al. Fast automated cell phenotype image classification, BMC Bioinformatics, March 2007

- Hu moments: Ming-Kuei Hu. Visual pattern recognition by moment invariants, IRE Transactions on Information Theory, February 1962

- Haralick: Robert M. Haralick et al. Textural Features for Image Classification, IEEE Transactions on Systems, Man, and Cybernetics, November 1973

- LBP: T. Ojala et al. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns, IEEE Transactions on Pattern Analysis and Machine Intelligence, July 2002

- SIFT: David G. Lowe. Distinctive Image Features from Scale-Invariant Keypoints, International Journal of Computer Vision, November 2004