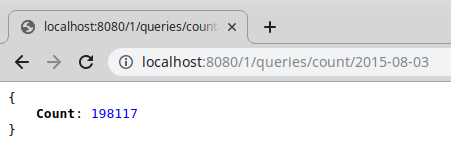

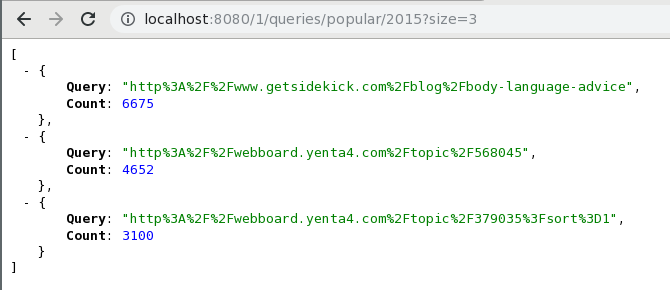

This Go program starts a server that reports on a log file on demand. It can either count the distinct requests made during a timeframe, or return an object listing the most popular searches during that timeframe.

The program is written in Go and uses no external libraries.

- The file is read, and every line is split into two strings: `timestamp` and `query`.

- The data is stored in a slice, which is sorted on the timestamp key. The timestamps are consistently formatted, so sorting them alphabetically also sorts them chronologically.

- At this point the server can start listening for requests.

- When a request arrives, the server finds the first matching index in the sorted data (using a binary search, thus `O(log(n))`), scans every line that matches the filter (a simple scan until the matching condition fails), and reduces those lines to the desired output (either the distinct count or the popularity report).
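The lookup described above can be sketched as follows. This is a minimal illustration, not the project's actual code: the `entry` and `queryCount` types, the function names, the sample timestamps, and the idea of filtering by timestamp prefix are all assumptions made for the example.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// entry is a hypothetical in-memory form of one log line.
type entry struct {
	timestamp string // assumed to sort chronologically when compared as text
	query     string
}

// queryCount is a hypothetical row of the popularity report.
type queryCount struct {
	Query string
	Count int
}

// matching returns the contiguous run of entries whose timestamp starts
// with prefix. entries must already be sorted by timestamp.
func matching(entries []entry, prefix string) []entry {
	// Binary search for the first timestamp >= prefix: O(log n).
	start := sort.Search(len(entries), func(i int) bool {
		return entries[i].timestamp >= prefix
	})
	// Linear scan until the matching condition fails.
	end := start
	for end < len(entries) && strings.HasPrefix(entries[end].timestamp, prefix) {
		end++
	}
	return entries[start:end]
}

// countDistinct reduces the matching entries to a count of distinct queries.
func countDistinct(entries []entry, prefix string) int {
	seen := make(map[string]struct{})
	for _, e := range matching(entries, prefix) {
		seen[e.query] = struct{}{}
	}
	return len(seen)
}

// popular reduces the matching entries to the size most frequent queries.
// Ties are broken alphabetically so the output is deterministic.
func popular(entries []entry, prefix string, size int) []queryCount {
	counts := make(map[string]int)
	for _, e := range matching(entries, prefix) {
		counts[e.query]++
	}
	result := make([]queryCount, 0, len(counts))
	for q, c := range counts {
		result = append(result, queryCount{Query: q, Count: c})
	}
	sort.Slice(result, func(i, j int) bool {
		if result[i].Count != result[j].Count {
			return result[i].Count > result[j].Count
		}
		return result[i].Query < result[j].Query
	})
	if len(result) > size {
		result = result[:size]
	}
	return result
}

func main() {
	entries := []entry{
		{"2015-08-02 09:00:00", "golang"},
		{"2015-08-01 00:03:43", "docker"},
		{"2015-08-01 00:04:10", "golang"},
		{"2015-08-01 12:00:00", "docker"},
	}
	// Sort once at load time; every request then reuses the sorted slice.
	sort.Slice(entries, func(i, j int) bool {
		return entries[i].timestamp < entries[j].timestamp
	})
	fmt.Println(countDistinct(entries, "2015-08-01")) // prints 2
	fmt.Println(popular(entries, "2015", 1))          // prints [{docker 2}]
}
```

Because all timestamps sharing a prefix sit next to each other in the sorted slice, the cost of a request is the binary search plus the number of matching lines, rather than a scan of the whole file.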

This program can be installed either with a Go environment or via the provided Dockerfile.

- A prebuilt image is available on Docker Hub; simply run `docker run -v <absolute-path-to-your-local-file>:/tmp/log.gz -p 8080:8080 aherve/testolia /tmp/log.gz`

Each push to GitHub triggers a new Docker build.

- Build the image with `docker build -t testolia .`

- Run it with `docker run -v <your-local-file>:/tmp/log.gz -p 8080:8080 testolia /tmp/log.gz`

Run `go build` to create the `testolia` executable, then run it with `./testolia <filename>`.

The `<filename>` argument can be either a `.tsv` file or a gzipped `.tsv.gz` file.

Test files are present and can be run with `go test`.

The tests are also run automatically on Codeship.

- I hope I didn't trample too many naming conventions or Go-specific patterns. This is the first time I have written something in Go, so I hope it is easily readable by more experienced Go developers.

- I assumed the input logs are not updated at runtime, so I read the file only once and do not check for updates. To go further, we could for instance watch the file for changes and reload it when needed.

- I assumed the server has enough RAM to keep the entire file content in memory. This allows much faster response times, at the cost of a larger memory footprint. For significantly larger datasets, a strategy where we stream the input file and filter it on the fly could be preferable.

- The error handling is really basic: just panic if anything goes wrong. I'd suggest at least setting a threshold on how many lines the loader may fail to parse before it panics.

- There is close to no server logging; this would obviously be mandatory for anything production-ready.