How to set up the workshop using OpenShift 4

Create an OpenShift 4 (OCP4) cluster by visiting try.openshift.com and downloading the OpenShift installer binary (openshift-install) for Linux or Mac.

1) ./openshift-install --dir=june22v2 create install-config

Follow the prompts for your SSH key, your preferred AWS region, your Route 53 domain, your pull secret (available at try.openshift.com), and so on. This creates a directory called "june22v2" containing a file called install-config.yaml.

2) Edit this file to override the AWS/Azure/GCP instance types, because the defaults are too small for this workshop. Here is an example snippet for AWS:

compute:
- name: worker
  platform:
    aws:
      type: m5.4xlarge
controlPlane:
  name: master
  platform:
    aws:
      type: c5.4xlarge

3) ./openshift-install --dir=june22v2 create cluster

4) You can monitor the progress by refreshing your AWS EC2 console.

5) When the process completes, make a note of the resulting URLs and the kubeadmin password. It will take several minutes, so go have lunch.

6) Also keep the kubeconfig file found in june22v2/auth.

7) Download the OpenShift 4 CLI (oc) for your environment from https://mirror.openshift.com/pub/openshift-v4/clients/ocp/latest/.
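Steps 6 and 7 can also be scripted. The following is a minimal sketch and is not part of the workshop tooling. `oc_client_tarball` and `use_cluster` are hypothetical helper names. The tarball names assume that the `latest` mirror directory publishes unversioned files such as openshift-client-linux.tar.gz. If the download fails, check the directory listing.

```shell
#!/usr/bin/env bash
# Sketch: pick the oc client tarball for this machine and point oc at the new cluster.
# ASSUMPTION: the "latest" mirror directory publishes unversioned tarballs named
# openshift-client-linux.tar.gz / openshift-client-mac.tar.gz (plus -arm64 variants).
MIRROR_URL="https://mirror.openshift.com/pub/openshift-v4/clients/ocp/latest"

# Map `uname -s` / `uname -m` style values to a tarball name.
oc_client_tarball() {
  local os="${1:-$(uname -s)}" arch="${2:-$(uname -m)}" name
  case "$os" in
    Linux)  name="openshift-client-linux" ;;
    Darwin) name="openshift-client-mac" ;;
    *) echo "unsupported OS: $os" >&2; return 1 ;;
  esac
  case "$arch" in
    x86_64|amd64) ;;
    arm64|aarch64) name="${name}-arm64" ;;
    *) echo "unsupported architecture: $arch" >&2; return 1 ;;
  esac
  printf '%s.tar.gz\n' "$name"
}

# Export KUBECONFIG from an install directory created by openshift-install
# (the installer also writes the kubeadmin password to <dir>/auth/kubeadmin-password).
use_cluster() {
  local dir="${1:?usage: use_cluster <install-dir>}"
  if [ ! -f "$dir/auth/kubeconfig" ]; then
    echo "no kubeconfig found in $dir/auth" >&2
    return 1
  fi
  export KUBECONFIG="$(cd "$dir/auth" && pwd)/kubeconfig"
}

# Usage:
#   tarball="$(oc_client_tarball)"
#   curl -sLO "$MIRROR_URL/$tarball" && tar -xzf "$tarball" oc
#   use_cluster june22v2 && ./oc whoami
```

With the installer-generated kubeconfig exported, `oc whoami` should report system:admin.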

Important

ONLY FOR RHPDS: RHPDS by default applies a multi-project cluster resource quota (clusterquota-opentlc-mgr). Remove it as follows:

# (optional) back up the cluster resource quota

oc get clusterresourcequotas.quota.openshift.io clusterquota-opentlc-mgr -o yaml > <your-backup-dir>/clusterquota-opentlc-mgr.yaml

# scale down the operator

oc -n gpte-userquota-operator scale deployments --replicas=0 userquota-operator

# delete the multi-project cluster resource quota

oc delete clusterresourcequotas.quota.openshift.io clusterquota-opentlc-mgr

# check that it has been deleted

oc get clusterresourcequotas.quota.openshift.io

Because the workshop will need a lot of Java artifacts, deploy a Nexus repository manager:

oc new-project rhd-workshop-infra

oc new-app -n rhd-workshop-infra sonatype/nexus

Next, create the workshop users.

Note

If you are using RHPDS, the users are already created, so you can skip this step.

./workshopper createUsers

All the components, such as Knative Serving, Knative Eventing, Eclipse Che and Tekton, will be installed using operators via OperatorHub. As a first step, install the catalog sources from which these components will be installed:

./workshopper installCatalogSources

Note

It will take a few minutes for the operator sources to be configured.

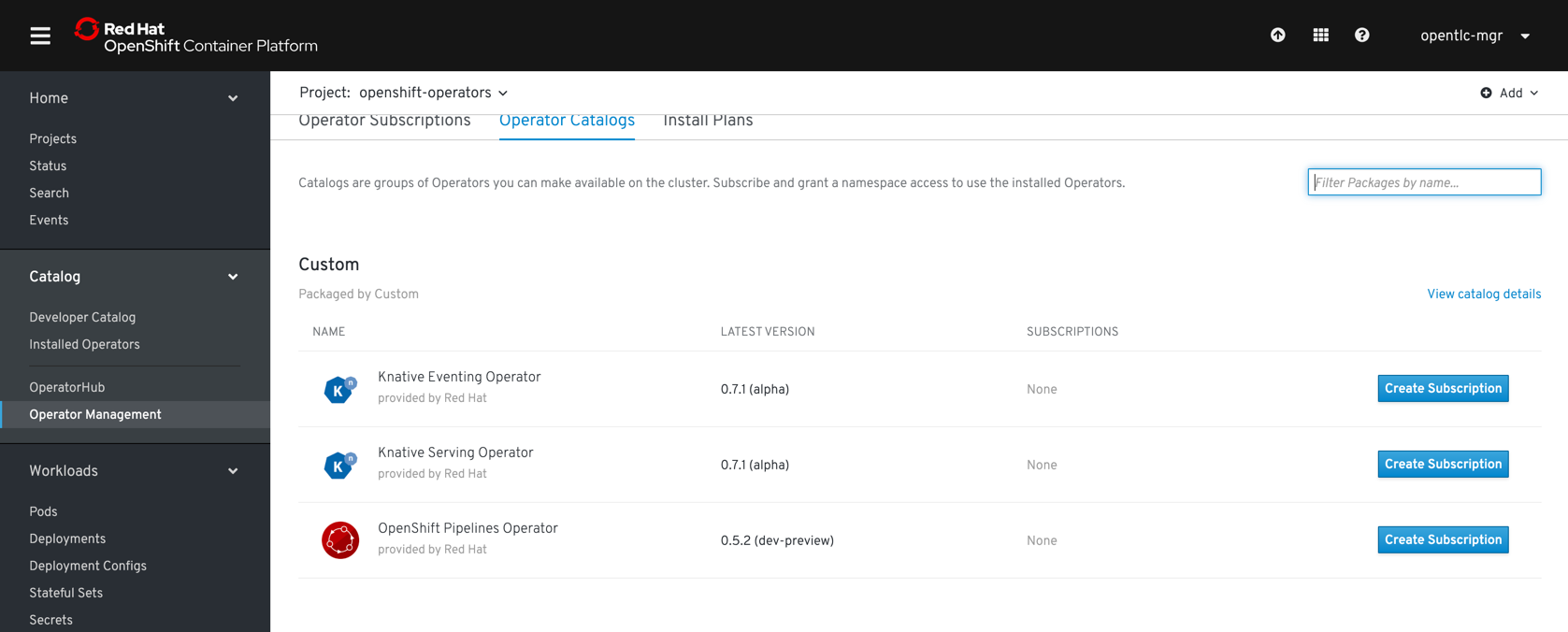

Please check if your Operator Catalog looks like below before proceeding to next steps:

Navigate to project openshift-operators and navigate to Catalog › Operator Management › Operator Catalogs

Install the chectl CLI:

bash <(curl -sL https://www.eclipse.org/che/chectl/)

./workshopper createCheCluster

Apply the environment settings from ./config/che/che.env to the Che deployment:

cat ./config/che/che.env | oc set env -e - deployment/che -n che

Export the Keycloak password from the keycloak deployment:

export KEYCLOAK_PASSWORD=$(oc get -n che deployment keycloak -o jsonpath='{.spec.template.spec.containers[*].env[?(@.name=="KEYCLOAK_PASSWORD")].value}')

Istio will be installed using the Red Hat OpenShift Service Mesh Operator. The following section explains how to install it using the operator and the oc CLI.

./workshopper installServicemesh

It will take some time for Service Mesh and its dependencies to be resolved. You can watch the status with:

watch 'oc get csv -n openshift-operators'

A successful install will show output like:

NAME                                         DISPLAY                          VERSION               REPLACES   PHASE
elasticsearch-operator.4.1.18-201909201915   Elasticsearch Operator           4.1.18-201909201915              Succeeded
jaeger-operator.v1.13.1                      Jaeger Operator                  1.13.1                           Succeeded
kiali-operator.v1.0.5                        Kiali Operator                   1.0.5                            Succeeded
servicemeshoperator.v1.0.0                   Red Hat OpenShift Service Mesh   1.0.0                            Succeeded

Important

The operator versions in your output may differ, depending on the latest available CSVs.
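You can also script the phase check instead of reading the table by eye. This is a minimal sketch that assumes `oc` is logged in with cluster-admin rights. `csv_phases` and `all_csvs_succeeded` are hypothetical helper names. The jsonpath query reads each CSV's `.status.phase`, which is the value shown in the PHASE column.

```shell
#!/usr/bin/env bash
# Print "<csv-name> <phase>" for every ClusterServiceVersion in a namespace.
csv_phases() {
  oc get csv -n "${1:-openshift-operators}" \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.phase}{"\n"}{end}'
}

# Reads "<name> <phase>" lines on stdin and reports any CSV that is not Succeeded.
# Exits 0 only if at least one CSV is listed and every one is Succeeded.
all_csvs_succeeded() {
  awk 'NF {
         total++
         if ($2 != "Succeeded") { pending++; print "not ready: " $1 " (" ($2 == "" ? "no phase" : $2) ")" }
       }
       END { exit (total == 0 || pending > 0) }'
}

# Usage: poll every 15 seconds until all operators are installed.
#   until csv_phases | all_csvs_succeeded; do sleep 15; done
```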

./workshopper createServicemesh

Note

It will take some time for Istio to be fully deployed. Wait for all the Istio pods to be available:

oc -n istio-system get pods -w
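You can also express this wait as a script instead of an interactive `-w` watch. This is a minimal sketch that assumes the default `oc get pods --no-headers` column layout (NAME READY STATUS RESTARTS AGE). `pods_not_ready` and `namespace_ready` are hypothetical names. The same helper works for any of the namespaces watched in this guide.

```shell
#!/usr/bin/env bash
# Print pods that are not ready yet. Expects `oc get pods --no-headers` output on stdin.
# Completed (job) pods count as done; every other pod must be Running with all
# containers ready (READY column "n/n").
pods_not_ready() {
  awk '{
    split($2, r, "/")
    if ($3 == "Completed") next
    if ($3 != "Running" || r[1] != r[2]) print $1 " " $2 " " $3
  }'
}

# Succeed when the namespace has at least one pod and none of them are pending.
namespace_ready() {
  local ns="${1:?usage: namespace_ready <namespace>}" pods
  pods="$(oc get pods -n "$ns" --no-headers 2>/dev/null)"
  [ -n "$pods" ] && [ -z "$(printf '%s\n' "$pods" | pods_not_ready)" ]
}

# Usage:
#   until namespace_ready istio-system; do sleep 10; done
```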

Note

If you see no pods being created for a long time, try running the command to

oc get routes -n istio-system -o custom-columns='NAME:.metadata.name,URL:.spec.host'

We will use the Knative Serving and Knative Eventing operators to install the Knative Serving and Eventing components:

./workshopper installKnativeServing

Note

It will take a few minutes for the Knative Serving pods to appear. Run the following command to watch the status:

oc -n knative-serving get pods -w
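Besides watching pods, the operator reports readiness on its custom resource. This sketch assumes the KnativeServing resource created by ./workshopper is named knative-serving in the knative-serving namespace. That name is an assumption, so list the actual resources with `oc get knativeserving --all-namespaces` if it does not match. `knative_serving_ready` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Succeed when the KnativeServing resource reports its Ready condition as "True".
# ASSUMPTION: default name and namespace are both "knative-serving".
knative_serving_ready() {
  local name="${1:-knative-serving}" ns="${2:-knative-serving}" status
  status="$(oc get knativeserving "$name" -n "$ns" \
    -o jsonpath='{.status.conditions[?(@.type=="Ready")].status}' 2>/dev/null)"
  [ "$status" = "True" ]
}

# Usage:
#   until knative_serving_ready; do sleep 10; done
```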

./workshopper installKafka

Note

It will take a few minutes for the Kafka pods to appear. Run the following command to watch the status:

oc -n kafka get pods -w

./workshopper installKnativeKafka

Note

It will take a few minutes for the Knative Eventing Kafka pods to appear. Run the following command to watch the status:

oc -n knative-eventing get pods -w
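You can also verify the API-resource listings in this guide from a script. This is a minimal sketch. `has_api_resource` is a hypothetical helper that relies on `oc api-resources -o name`, which prints one `<plural>.<group>` entry per line for named API groups.

```shell
#!/usr/bin/env bash
# Succeed if the given API group serves the resource (plural name), e.g. kafkasources.
has_api_resource() {
  local group="${1:?usage: has_api_resource <group> <resource>}" resource="${2:?resource}"
  # -F: match literally (the dots in group names are not regex wildcards); -x: whole line.
  oc api-resources --api-group="$group" -o name | grep -qxF "${resource}.${group}"
}

# Usage:
#   has_api_resource sources.eventing.knative.dev kafkasources
#   has_api_resource messaging.knative.dev kafkachannels
```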

$ oc api-resources --api-group=sources.eventing.knative.dev

NAME               SHORTNAMES   APIGROUP                       NAMESPACED   KIND
apiserversources                sources.eventing.knative.dev   true         ApiServerSource
containersources                sources.eventing.knative.dev   true         ContainerSource
cronjobsources                  sources.eventing.knative.dev   true         CronJobSource
kafkasources                    sources.eventing.knative.dev   true         KafkaSource
sinkbindings                    sources.eventing.knative.dev   true         SinkBinding

$ oc api-resources --api-group=messaging.knative.dev

NAME               SHORTNAMES   APIGROUP                NAMESPACED   KIND
channels           ch           messaging.knative.dev   true         Channel
inmemorychannels   imc          messaging.knative.dev   true         InMemoryChannel
kafkachannels      kc           messaging.knative.dev   true         KafkaChannel
parallels                       messaging.knative.dev   true         Parallel
sequences                       messaging.knative.dev   true         Sequence
subscriptions      sub          messaging.knative.dev   true         Subscription

./workshopper installPipelines

Note

It will take a few minutes for the OpenShift Pipelines pods to appear. Run the following command to watch the status:

oc -n openshift-pipelines get pods -w

./workshopper installKamel

$ kubectl api-resources --api-group=camel.apache.org

NAME                   SHORTNAMES   APIGROUP           NAMESPACED   KIND
builds                              camel.apache.org   true         Build
camelcatalogs          cc           camel.apache.org   true         CamelCatalog
integrationkits        ik           camel.apache.org   true         IntegrationKit
integrationplatforms   ip           camel.apache.org   true         IntegrationPlatform
integrations           it           camel.apache.org   true         Integration

Run `kamel install` in the namespace where you want Kamel integrations to be deployed. The operator and API were already set up globally in the previous step, so skip those parts of the install with the options `--skip-cluster-setup` and `--skip-operator-setup`, that is: `kamel install --skip-operator-setup --skip-cluster-setup`.
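When there are several workshop namespaces, you can run the namespace-scoped install in a loop. This sketch assumes the `kamel` binary is on your PATH and accepts `-n` for the target namespace. `kamel_install_namespaces` is a hypothetical helper, and the namespace names in the usage line are hypothetical too.

```shell
#!/usr/bin/env bash
# Run a namespace-scoped `kamel install` in each namespace given as an argument.
# The operator and cluster-wide resources already exist, so both setup phases are skipped.
kamel_install_namespaces() {
  local ns
  for ns in "$@"; do
    echo "Setting up Camel K in namespace $ns"
    kamel install -n "$ns" --skip-operator-setup --skip-cluster-setup || return 1
  done
}

# Usage (hypothetical per-user namespaces):
#   kamel_install_namespaces user1-project user2-project user3-project
```

The loop stops at the first failing namespace, so a broken install is not hidden by later successes.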

./workshopper installKnativeCamel

$ oc api-resources --api-group=sources.eventing.knative.dev

The output should now also list camelsources:

NAME               SHORTNAMES   APIGROUP                       NAMESPACED   KIND
apiserversources                sources.eventing.knative.dev   true         ApiServerSource
camelsources                    sources.eventing.knative.dev   true         CamelSource
containersources                sources.eventing.knative.dev   true         ContainerSource
cronjobsources                  sources.eventing.knative.dev   true         CronJobSource
kafkasources                    sources.eventing.knative.dev   true         KafkaSource
sinkbindings                    sources.eventing.knative.dev   true         SinkBinding

./workshopper usersAndGroups

You can check the group's users with the following command, which should list all workshop users:

oc get groups workshop-students

./workshopper createWorkspaces

It will take some time to create the workspaces. All the created workspaces will be logged in the $PROJECT_HOME/workspace.txt file.
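To confirm that the workshop-students group has the expected number of members, you can count its user list. This is a minimal sketch. `group_user_count` is a hypothetical helper, and it relies on the Group resource listing its members in the top-level `users` field.

```shell
#!/usr/bin/env bash
# Print the number of users in an OpenShift group.
group_user_count() {
  local group="${1:?usage: group_user_count <group>}" users
  # jsonpath prints the member names separated by spaces.
  users="$(oc get group "$group" -o jsonpath='{.users[*]}')" || return 1
  echo $(( $(printf '%s' "$users" | wc -w) ))
}

# Usage:
#   group_user_count workshop-students
```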