Overview

Overscore is an automatic score reader, that recognizes musical sheets and plays them using Overtone. Overscore consists of three main stages:

- an Optical Music Recognition (OMR) system to recognize partitions and compile them into MusicXML files

- a MusicXML parser that compiles MusicXML files into Clojure files

- multiple helper macros and functions built over Overtone to have a system more adapted to encode classical music

This project is the result of work done at the ULB (Université Libre de Bruxelles), for the course PROJ-H-402.

Dependencies

Aside from the dependencies listed in the project.clj, OpenCV is

also needed for the classification step, and the classifier (which is

written in C++) should be compiled:

cd src/overscore/recognition/classification

g++ opencv_knn.cpp -o opencv_knn `pkg-config opencv --libs --cflags`The Gamera python plugin is also needed for staffline detection.

Usage

The application can be launched using leiningen:

$ lein2 run help

Possible arguments:

convert <in> <out>

Convert symbols from Audiveris training set to png

images in the <out> directory (from the <in> directory)

preprocessing <in> <out> <out-ref>

Preprocess the image <in>, saving the output image to

<out> and the reference lengths descriptions in

<out-ref>

staffline <in>

Isolate the systems and find the staffline positions

on each system, from the image <in>. Saves the output

for each system to <in>-n.png and <in>-n.txt, where n

is an integer

segmentation <in-img> <in-refs> <out-segs>

Segment the image <in-img>, with reference lengths

described in <in-refs>, isolating each symbol. Save

the segments descriptions in <out-segs>

classification <training-set> <in-img> <in-segs> <out-classes>

Classify the segments of <in-img> described by the

file <in-segs> (generated by the segmentation step),

using the training set at location <training-set>

(created by the convert step). Save the segments along

with their classes in <out-classes>

semantics <in-classes> <in-refs> <in-stafflines> <out-xml>

Convert the recognized score to a MusicXML

document (<out-xml>), from the classes (<in-classes>) output

by the classification step, the reference lengths (<in-refs>)

output by the preprocessing step, and the staff lines

positions (<in-stafflines>, the .txt file) output by the

staffline step.

generate <in> <out> <name>

parse the <in> MusicXML file, and generate the song

<name> in the clojure file <out>

play <file> <ns>/<name>

play the song called <name> defined in the file <file>, which

defines the namespace <ns>. If no namespace is defined, only

<name> can be givenSome example data is provided in the example directory, which contains both input data and (almost) perfect output data. For example, after running the following command:

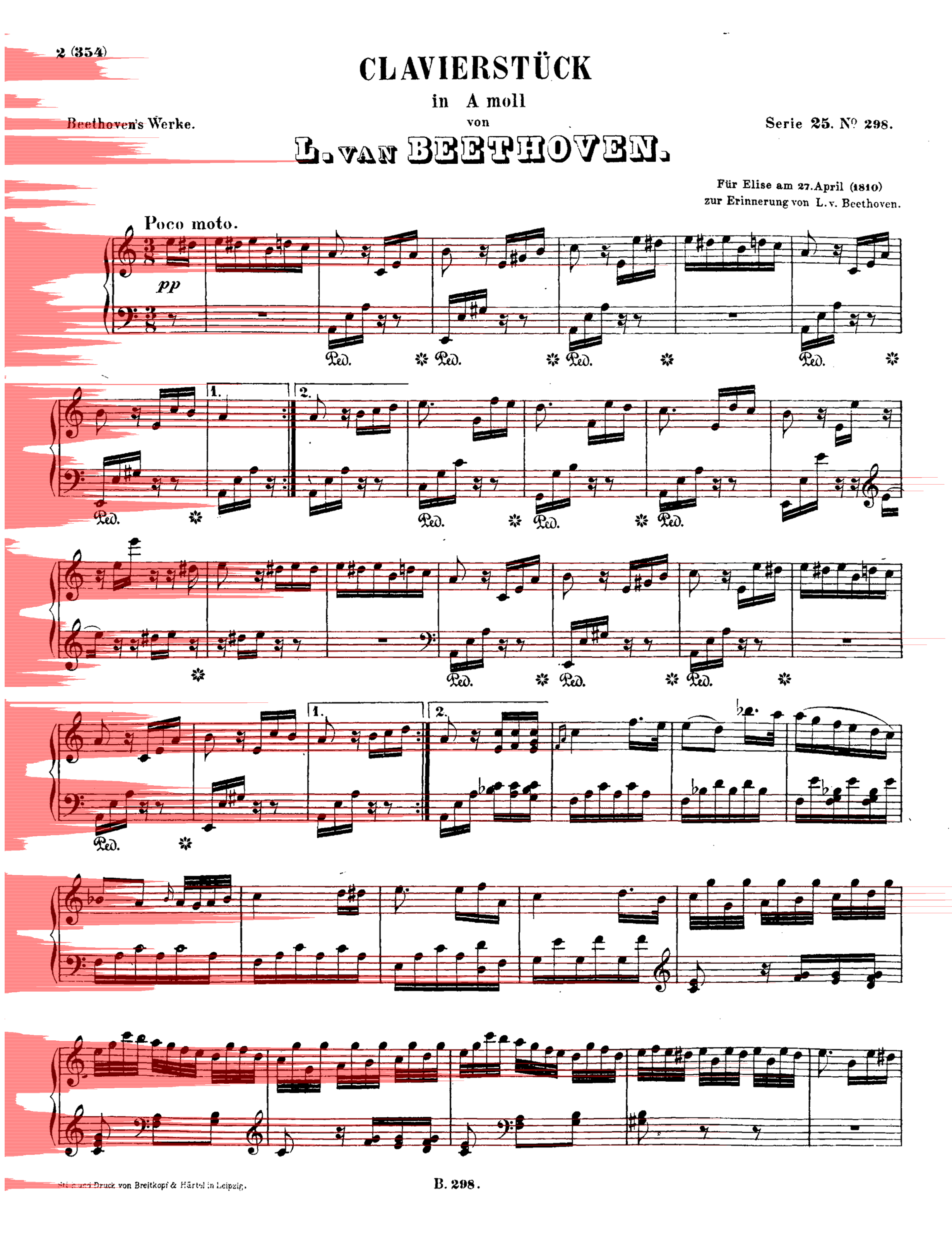

$ lein2 run preprocessing example/input/furelise.png /tmp/furelise.png /tmp/refs.txtThe image /tmp/furelise.png should (roughly) correspond to the image

example/preprocessed/furelise.png, and the file /tmp/refs.txt to

example/preprocessed/refs.txt.

The other possible commands, on the example files, are:

$ lein2 run staffline example/preprocessed/furelise.png

$ lein2 run segmentation example/staffline/furelise-0.png \

example/preprocessed/refs.txt /tmp/segs.txt

$ lein2 run classification training-set/ \

example/staffline/furelise-0.png \

example/segmentation/segs.txt \

/tmp/classes.txt

$ lein2 run semantics example/classification/classes.txt \

example/preprocessed/refs.txt \

example/staffline/furelise-0.txt \

/tmp/furelise-0.xml

$ lein2 run generate example/semantics/furelise-0.xml \

/tmp/furelise-0.clj \

furelise

$ lein2 run play example/generate/furelise-0.clj \

furelise/fureliseNote: the staffline step stores its output in the same directory as its input.

How it works

OMR

The initial plan for the OMR part is documented in doc/design/design.pdf. In this section we will briefly describe how it is currently implemented and discuss some results.

Part 1: Image Preprocessing

Binarization

Since the input image might be in grayscale or color, a binarization step is sometimes necessary. However, most musical sheets found on the internet are already binarized and this step is thus performed only if necessary.

It is done in two steps:

- If the image is in color, convert it into grayscale using the luma component, implemented in preprocessing/gray.clj.

- Convert the grayscale image into binary using Otsu’s method, implemented in preprocessing/otsu.clj.

Reference lengths

Once the image is binarized, the reference lengths (staff line and staff space height) are found by analyzing the most common vertical runs (black for staff line height, white for staff space height) as described in Rebelo, Fujinaga et. al., 2012

Improvements

Multiple steps could be added to the preprocessing part. The most useful would be a skew detection and correction step, since it is common to have skewed images among scanned documents. Another possibly useful step would be a noise reduction step, depending on the quality of the scanned documents.

Part 2: Staff line processing

System isolation

The first step done by this part is to isolate the systems to process them independently. This is done by a technique similar to the one described in Fujinaga, 1988.

First, a y-projection of the pixel is done (see an example result in

), and the systems are located by looking for five

distinct peaks of black pixels. Then, the boundaries of the system are

found by looking around the system for the lines where the number of

black pixel is minimal.

), and the systems are located by looking for five

distinct peaks of black pixels. Then, the boundaries of the system are

found by looking around the system for the lines where the number of

black pixel is minimal.

Once each system is found, they are each saved in an image.

This step is implemented in staffline/identification.clj.

Staff line identification and removal

The staff line removal is done by using Gamera’s music-staves plugin. A python script is simply called with the input image, and outputs the same image without the staffline (in an image), as well as the staff line positions (in a text file).

If no staff line were found in an image, it can be discarded since it is most likely not a relevant image (eg. some text, like the title of the partition).

This step is implemented in staffline/removal.clj and calls the script staffline/removal.py.

Improvements

If the staff line removal does not work as expected because the image is skewed, a skew correction algorithm should be implemented in the preprocessing.

Part 3: Symbol Recognition

Symbol Segmentation

The segmentation is done in a similar way as done in OpenOMR. All the sub parts of the segmentation process are assembled in recognition/segmentation/segmentation.clj, and takes as input a path to an image (output by the preprocessing step), a path to a text files containing the reference lengths (also part of the output of the preprocessing step). It outputs all the segments represented as a vector of 4-element vectors in a text file.

Level-0 Segmentation

The first segmentation is done by finding consecutive columns which contains black pixels. The results are refined by:

- Grouping close segments, which can happen for example in the case of a dotted note

- Not taking small segments, which are probably due to noise in the scanned image.

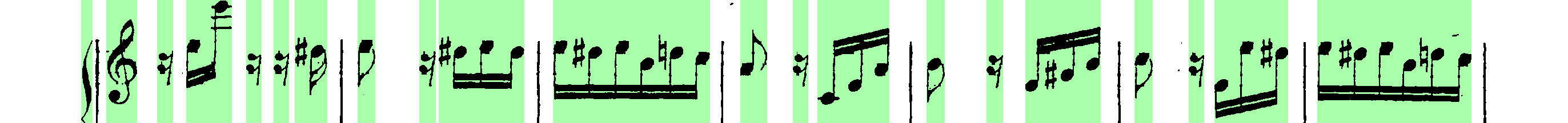

The level-0 segmentation is implemented in recognition/segmentation/level0.clj. An

example of level-0 segmentation result can be found in

.

.

Note Head Detection

For each level-0 segment, we need to know if it contains a note head or not.

To detect if a segment contains note heads, the following algorithm is used (taken from OpenOMR):

- For each column, find (if present) the biggest black run that is:

- Smaller than 3/2 of the staffspace height

- Bigger than 2 times the staffline height

Remember the columns where such runs are present.

- Find segments of columns having those black runs, such that the lengths of the segment is at least half of the staffspace height. Those segments correspond to the note heads.

Segments having note heads in it are further decomposed into multiple level-1 segments. The others can directly be used as level-1 segments without further decomposition.

The note head detection is implemented in recognition/segmentation/notehead.clj.

Level-1 Segmentation

Level-1 segmentation use the data computed by the note head detection: for each note head found, it creates a level-1 segment. The space between the note heads is also saved in a level-1 segment.

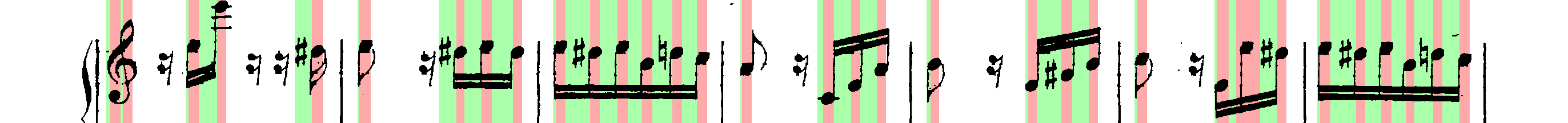

Level-1 segmentation is implemented in recognition/segmentation/level1.clj and an

example output on level-0 segments that contains notes can be found in

.

.

Level-2 Segmentation

The level-2 segmentation separates the symbol contained in each level-1 segment vertically. The resulting segments should then correspond to the musical features (eg. a note head, a sharp, …) and can then be classified.

Level-2 segmentation is implemented in recognition/segmentation/level2.clj.

Symbol recognition

Multiple symbol recognition methods are implemented. The one used by default uses the k nearest neighbors algorithm provided by OpenCV, using Audiveris’ training set.

Since Audiveris store its training set as xml files describing vertical runs for each image, we need to convert it to “normal” (2-bit PNG) images (for easier manipulation). This is done in tools/audiveris.clj.

The training set is then loaded in recognition/classification/training.clj, each image being resized to a 20x20 image and represented by a vector of 400 integer (1 meaning the pixel is on (ie. black), 0 meaning it is off).

OpenCV’s k nearest neighbor method is called directly from a C++ program, recognition/classification/opencv_knn.cpp, and the resulting program is called from clojure in recognition/classification/opencv_knn.clj. Once OpenCV is installed, the C++ program can be compiled with:

$ g++ opencv_knn.cpp -o opencv_knn `pkg-config opencv --libs --cflags`Another simple classifier using kNN (implemented by hand) is implemented in recognition/classification/knn.clj, and can use the Hausdorff distance or the Euclidian distanceto compute the distance between two images. The Hausdorff distance is implemented in recognition/classification/hausdorff.clj, and the Euclidian distance in recognition/classification/euclidian.clj. However, this implementation of the kNN algorithm is really slow, and that is the reason why OpenCV’s kNN is used by default.

A neural network classifier using Encog is also implemented, in recognition/classification/nn.clj.

All the parts of the classification step are assembled in recognition/classification/classification.clj, and takes as input the image (output by the preprocessing step) and a file describing the segments (output by the segmentation step), and outputs a file describing the class of each segment (as a vector of 5-element vectors, where the 4 first elements are the segment description and the last element is the class (as a symbol) of the vector)

Improvements

The segmentation might be improved by fine tuning the parameters. The level-0 and level-1 segmentation works quite accurately, but the level-2 segmentation performs really poorly at the moment.

The symbol recognition process is currently not accurate enough. It might be because a big part (around 25%) of the training set consists of black noteheads. This part could be reduced, and the rest of the training set could be improved.

The kNN algorithm implemented by hand also suffers from huge performance issues.

Part 4: Musical Semantics

The musical semantics are defined by a set of rule, as the following LL(1) grammar:

<P> → <clef> <P'>

<P'> → <time> <notes>

<notes>

<notes> → <note> <notes>

ε

<note> → <pre> <note_body> <post>

<rest>

<pre> → sharp

flat

natural

<post> → <flag>

dot_set

<note_body> → <beam> <notehead>

<notehead>

<time> → common_time

cut_time

time_four

time_four_four

time_six_eight

time_three

time_three_four

time_two

time_two_four

<clef> → g_clef

g_clef_8vb

f_clef

c_clef

<rest> → eighth_rest

one_16th_rest

quarter_rest

<notehead> → notehead_black

notehead_black_2

notehead_black_3

notehead_void

notehead_void_2

whole_note

whole_note_2

<beam> → beam

beam_hook

<flag> → flag_1

flag_1_up

flag_2

flag_2_up

MusicXML parser

A simple MusicXML parser is implemented in musicxml.clj, and converts MusicXML files to the notation described in the next section, according to the rules given in doc/conversion/conversion.pdf.

Musical Notation

This section describes the musical notation used to describe the scores. The notation is implemented in src/overscore/notation.clj, and some examples of scores transcribed into this notation can be found in src/overscore/examples.

Notes and rests

The most basic element of a score is a note. A note is expressed as its duration and its pitch:

(play :A4 1)This corresponds to a 440Hz A, played as a quarter note. The interpretation of the duration depends on the time signature and the tempo of the score. In this case, we assume that the time signature is 4/4, so a duration of 1 corresponds to a quarter of the entire bar (so, a quarter note).

A rest is simply a note without pitch, which is noted :rest:

(play :rest 1)Bars

A bar contains notes played at certain times. With the most basic constructs, it can be defined as a set of notes and the time they have to be played at:

(bar

(beat 0 (play :C4 1))

(beat 1 (play :A4 1))

(beat 2 (play :G4 1))

(beat 3 (play :C5 1)))A bar can also be named, to be reffered to later:

(defbar foo

(beat 0 (play :C4 1))

(beat 1 (play :A4 1))

(beat 2 (play :G4 1))

(beat 3 (play :C5 1)))Multiple combinators simplifies the notation, and are described later.

Progressions

A progression is a set of bars to be played in sequence. It can also

be defined anonymously with prog, or named with defprog:

(defprog foo-twice

foo foo)Progression definitions can also be simplified through the use of combinators described later.

The tempo and time signature can be changed during a progression:

(defprog foo-twice

{:tempo 60} foo {:tempo 40} foo)Songs

A song consists of a set of progressions, played simultaneously, associated with a set of instruments:

(defsong foo-song

{:time-signature [4 4] :tempo 60}

[foo-twice sampled-piano]

[foo-twice pad])Combinators

Notes, bars and progressions are internally represented as a function that takes a state (containing the tempo and the time signature), a time (at which to play the element), and an instrument, and returns the duration of the element. When called, those function spawn Overtone notes that will be played at the given time, and returns immediately. So, they are all considered as elements, and can be manipulated with the following predefined combinators:

play-chords: plays all the arguments at the same timeplay-seq: play all the arguments one after the othersimple-seq: a macro that ease the writing of multiple notes in sequence, without needing to callsimple-seqandplaybeat: delay the time at which the element will be played by n beatsrepeat-elements: repeat an element n times

For example:

(defbar foo

(repeat-elements 2

(play-seq

(simple-seq 1/2 :C4 :A4 :G4)

(play-chord

(play :C5 1/2)

(play :A5 1/2)

(play :G5 1/2)))))

(defprog foo-prog

(repeat-elements 2 foo))If needed, other combinators can be defined easily, since they only consists of manipulating clojure functions.

Playing a score

A score can be played by using the start function, which takes a

song as argument. A element (note, bar, progression) can be played

using the start-element function, which takes as argument at least

the element and the instrument it should be played with (and

optionally the tempo and time signature).

Improvements

The notation currently works well, but lacks lots of classical musical constructs. It should not be to hard to extend it to support more complicated musical constructs (ties, slurs, tuplets, staccato, mordents, …).

One current defect of the implementation of the notation is that it spawns lots of Overtone nodes, and this number is limited by the SuperCollider synth server (while apparently this limitation could be changed). This might results in bugs (“No more IDs!” message) when stopping a song when it has not been entirely playing, and relaunching it later. See here for more explanations.

License

Copyright (C) 2013 Quentin Stievenart

Distributed under the Eclipse Public License, the same as Clojure.

Bibliography

The papers cited in this documentation are given in this section. For

more papers about the topic of OMR, see doc/design/design.pdf.

- A. Rebelo, I. Fujinaga, F. Paszkiewicz, A. R. S. Marcal, C. Guedes, and J. S. Cardoso, Optical Music Recognition - state-of-the-art and open issues, 2012, link.

- I. Fujinaga, Optical Music Recognition using Projections, 1988, link.

- A. Desaedeleer, Reading Sheet Music, 2006, link to OpenOMR (pdf is included in the sources).