Coming soon...

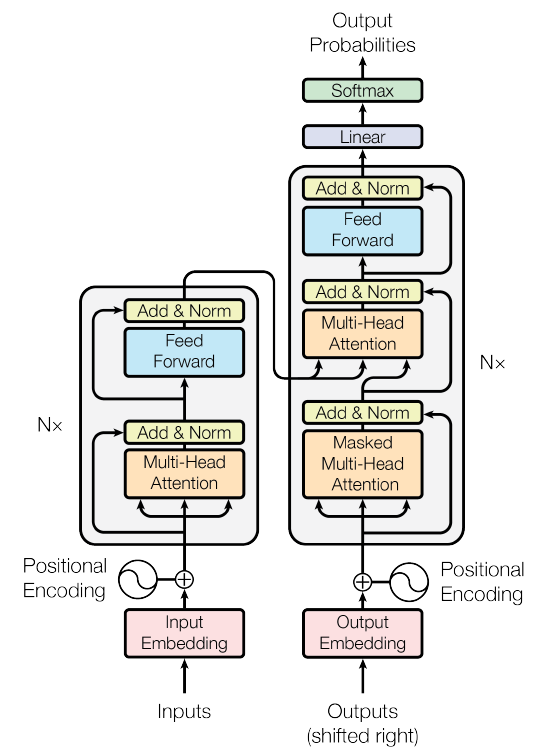

Unofficial implementation of the original paper *Attention Is All You Need* (Vaswani et al., 2017).

- Sublayer Residual Connection

- Encoder Layer

- Encoder

- Decoder Layer

- Decoder

- Multi-Head Attention

- Position-wise Fully Connected Feed-Forward Network

- Positional Encoding

- Embedding

- PyTorch Transformer

- Training Data

- Utils

- Training Scripts

- Train

- JAX implementation
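For orientation, here is a minimal NumPy sketch of three of the components listed above, following the formulas in the paper: scaled dot-product attention, $\mathrm{softmax}(QK^\top/\sqrt{d_k})\,V$; multi-head attention built on top of it; and sinusoidal positional encoding. It is not this repository's code, which targets PyTorch and JAX. The function names, the unbatched `(length, d_model)` shapes, the boolean "True = may attend" mask convention, and the use of NumPy are all illustrative assumptions.

```python
import numpy as np


def scaled_dot_product_attention(q, k, v, mask=None):
    """softmax(Q K^T / sqrt(d_k)) V.

    q: (..., len_q, d_k), k: (..., len_k, d_k), v: (..., len_k, d_v)
    mask (assumed convention): boolean, broadcastable to (..., len_q, len_k),
    True where attention is allowed.
    """
    d_k = q.shape[-1]
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(d_k)
    if mask is not None:
        # Blocked positions get a large negative score, so softmax sends them to ~0.
        scores = np.where(mask, scores, -1e9)
    # Numerically stable softmax over the key axis.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights


def split_heads(x, num_heads):
    """(length, d_model) -> (num_heads, length, d_model // num_heads)."""
    length, d_model = x.shape
    return x.reshape(length, num_heads, d_model // num_heads).transpose(1, 0, 2)


def multi_head_attention(x_q, x_kv, w_q, w_k, w_v, w_o, num_heads, mask=None):
    """Project, split into heads, attend per head, concatenate, project back."""
    q = split_heads(x_q @ w_q, num_heads)
    k = split_heads(x_kv @ w_k, num_heads)
    v = split_heads(x_kv @ w_v, num_heads)
    heads, _ = scaled_dot_product_attention(q, k, v, mask)  # (h, len_q, d_k)
    concat = heads.transpose(1, 0, 2).reshape(x_q.shape[0], -1)  # (len_q, d_model)
    return concat @ w_o


def sinusoidal_positional_encoding(max_len, d_model):
    """PE[pos, 2i] = sin(pos / 10000^(2i/d_model)); PE[pos, 2i+1] = cos(same)."""
    assert d_model % 2 == 0, "this sketch assumes an even d_model"
    pos = np.arange(max_len)[:, None]          # (max_len, 1)
    two_i = np.arange(0, d_model, 2)[None, :]  # (1, d_model / 2)
    angles = pos / np.power(10000.0, two_i / d_model)
    pe = np.zeros((max_len, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe


def causal_mask(n):
    """Lower-triangular mask: position t attends only to positions <= t."""
    return np.tril(np.ones((n, n), dtype=bool))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((4, 8)) + sinusoidal_positional_encoding(4, 8)
    out, w = scaled_dot_product_attention(x, x, x, mask=causal_mask(4))
    print(out.shape)                    # (4, 8)
    print(np.allclose(w.sum(-1), 1.0))  # True
```

The causal mask is what distinguishes the decoder's masked self-attention from the encoder's: each row of the attention weights is zero above the diagonal, so a position cannot look at later tokens.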

I was heavily inspired by